5 Free Resources for Getting Started with Deep Learning for Natural Language Processing

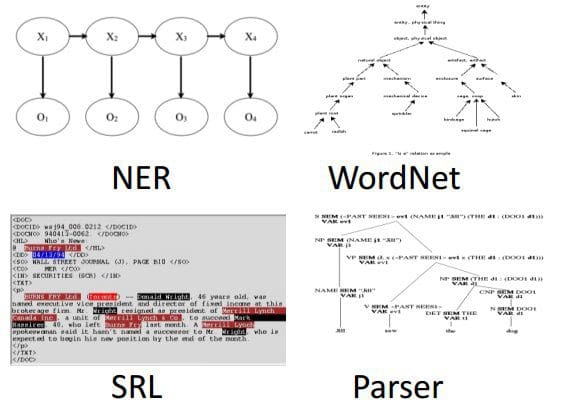

From Richard Socher's slides, below.

Interested in applying deep learning to natural language processing (NLP)? Don't know where or how to start learning?

This is a collection of 5 resources for the uninitiated, which should open your eyes to what is possible and to the current state of the art at the intersection of NLP and deep learning. It should also provide some idea of where to go next. Hopefully this collection is of some use to you.

1. Deep Learning for NLP (without Magic)

This is a set of slides by Richard Socher of Stanford and MetaMind, originally given at the Machine Learning Summer School in Lisbon. You'll find it to be a solid primer for both NLP and deep learning, as well as deep learning for NLP. Expect no magic.

Also, learning from Socher is a pretty good idea.

2. Deep Learning applied to NLP

This is a survey paper by Marc Moreno Lopez and Jugal Kalita. From the abstract:

Convolutional Neural Network (CNNs) are typically associated with Computer Vision. CNNs are responsible for major breakthroughs in Image Classification and are the core of most Computer Vision systems today. More recently CNNs have been applied to problems in Natural Language Processing and gotten some interesting results. In this paper, we will try to explain the basics of CNNs, its different variations and how they have been applied to NLP.

This survey is more concise than Goldberg's primer below (item 3), and does a good job at one-fifth the length.

3. A Primer on Neural Network Models for Natural Language Processing

A thorough overview by Yoav Goldberg. From the abstract:

This tutorial surveys neural network models from the perspective of natural language processing research, in an attempt to bring natural-language researchers up to speed with the neural techniques. The tutorial covers input encoding for natural language tasks, feed-forward networks, convolutional networks, recurrent networks and recursive networks, as well as the computation graph abstraction for automatic gradient computation.

As a bonus, you may want to read this short set of recent blog posts by Yoav as well, which discuss the same topic, albeit from a different angle:

- An Adversarial Review of “Adversarial Generation of Natural Language”

- Clarifications re “Adversarial Review of Adversarial Learning of Nat Lang” post

- A Response to Yann LeCun’s Response

Regardless of your position on the above (should you even have a "position"), this quote is absolute gold:

What I am against is a tendency of the “deep-learning community” to enter into fields (NLP included) in which they have only a very superficial understanding, and make broad and unsubstantiated claims without taking the time to learn a bit about the problem domain.

4. Natural Language Processing with Deep Learning (Stanford)

From Stanford's CS224n: Natural Language Processing with Deep Learning.

One of the most popular and well-respected NLP courses available online, taught by Chris Manning & Richard Socher. From the course website:

The course provides a thorough introduction to cutting-edge research in deep learning applied to NLP. On the model side we will cover word vector representations, window-based neural networks, recurrent neural networks, long-short-term-memory models, recursive neural networks, convolutional neural networks as well as some recent models involving a memory component.

If you are interested in skipping directly to the lecture videos, here you go.

5. Deep Learning for Natural Language Processing (Oxford)

Another great courseware resource from another top-notch university, this one taught by Phil Blunsom at Oxford. From the course website:

This is an advanced course on natural language processing. Automatically processing natural language inputs and producing language outputs is a key component of Artificial General Intelligence. The ambiguities and noise inherent in human communication render traditional symbolic AI techniques ineffective for representing and analysing language data. Recently statistical techniques based on neural networks have achieved a number of remarkable successes in natural language processing leading to a great deal of commercial and academic interest in the field.

You can follow this link directly to the course material GitHub repository.
