7 Steps to Mastering Natural Language Processing

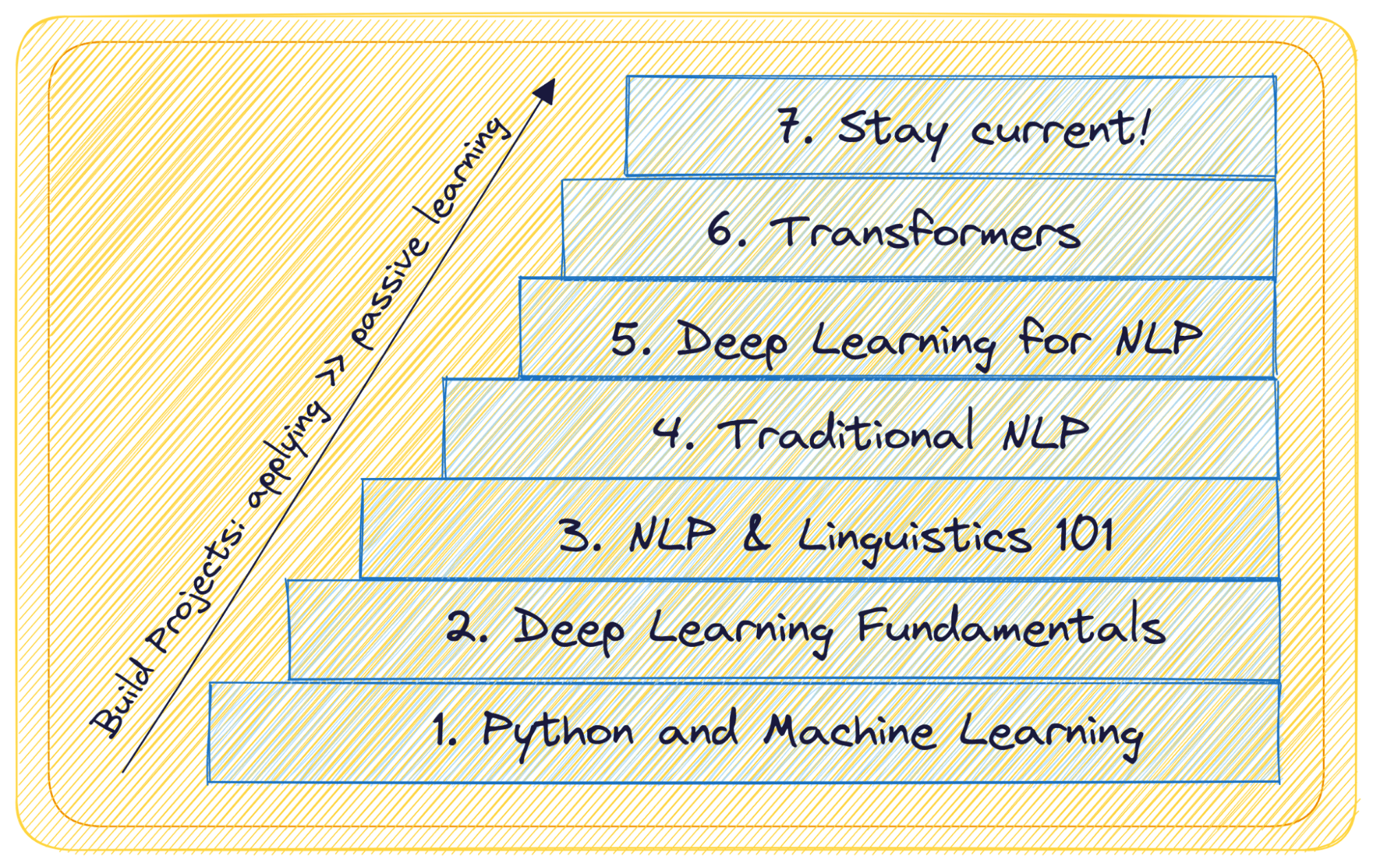

Want to learn all about Natural Language Processing (NLP)? Here is a 7 step guide to help you go from the fundamentals of machine learning and Python to Transformers, recent advances in NLP, and beyond.

Image by Author

There has never been a more exciting time to get into natural language processing (NLP). Do you have some experience building machine learning models and are interested in exploring natural language processing? Perhaps you’ve used LLM-powered applications like ChatGPT, seen how useful they are, and want to delve deeper into natural language processing?

Well, you may have other reasons, too. But now that you’re here, here’s a 7-step guide to learning all about NLP. At each step, we provide:

- An overview of the concepts you should learn and understand

- Some learning resources

- Projects you can build

Let’s get started.

Step 1: Python and Machine Learning

As a first step, you should build a strong foundation in Python programming. Proficiency in libraries like NumPy and Pandas for data manipulation is also essential. Before you dive into NLP, grasp the basics of machine learning models, including commonly used supervised and unsupervised learning algorithms.

Become familiar with libraries like scikit-learn, which make it easier to implement machine learning algorithms.

In summary, here’s what you should know:

- Python programming

- Proficiency with libraries like NumPy and Pandas

- Machine Learning basics (from data preprocessing and exploration to evaluation and selection)

- Familiarity with both supervised and unsupervised learning paradigms

- Libraries like scikit-learn for ML in Python

Check out this Scikit-Learn crash course by freeCodeCamp.

Here are some projects you can work on:

- House price prediction

- Loan default prediction

- Clustering for customer segmentation

Step 2: Deep Learning Fundamentals

After you’ve gained proficiency in machine learning and are comfortable with model building and evaluation, you can proceed to deep learning.

Start by understanding neural networks, their structure, and how they process data. Learn about activation functions, loss functions, and optimizers that are essential for training neural networks.

Understand the concept of backpropagation, which computes the gradients that make learning in neural networks possible, and gradient descent as an optimization technique. Familiarize yourself with deep learning frameworks like TensorFlow and PyTorch for practical implementation.
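
To make gradient descent concrete, here is a minimal sketch in plain Python that fits a line to a handful of points. The data, the learning rate, and the function names (`mse_gradients`, `fit`) are assumptions made up for this illustration. In a real network, backpropagation would compute the gradients for you; here they are written out by hand for a single linear unit.

```python
# Fit y = w * x + b by gradient descent on mean squared error.
# The data is invented for illustration and follows y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def mse_gradients(w, b, xs, ys):
    """Return (dL/dw, dL/db) for L = mean((w * x + b - y) ** 2)."""
    n = len(xs)
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return grad_w, grad_b

def fit(xs, ys, learning_rate=0.05, steps=2000):
    """Start from zero and repeatedly step against the gradient."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w, grad_b = mse_gradients(w, b, xs, ys)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = fit(xs, ys)
print(w, b)
```

With these settings the parameters should land very close to the slope and intercept used to generate the data. Frameworks like PyTorch and TensorFlow automate the gradient computation and the update step.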

In summary, here’s what you should know:

- Neural networks and their architecture

- Activation functions, loss functions, and optimizers

- Backpropagation and gradient descent

- Frameworks like TensorFlow and PyTorch

The following resources will be helpful in picking up the basics of PyTorch and TensorFlow:

You can apply what you’ve learned by working on the following projects:

- Handwritten digit recognition

- Image classification on CIFAR-10 or a similar dataset

Step 3: NLP 101 and Essential Linguistics Concepts

Begin by understanding what NLP is and its wide-ranging applications, from sentiment analysis to machine translation, question answering, and beyond.

Understand linguistic concepts like tokenization, which involves breaking text into smaller units (tokens). Learn about stemming and lemmatization, techniques that reduce words to their root forms.
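
Here is a rough sketch of tokenization and stemming using only the standard library. The regular expression and the suffix list are simplifying assumptions for illustration; this is not the Porter stemmer or any library's tokenizer, and production code would typically rely on NLTK or spaCy instead.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+(?:'[a-z]+)?", text.lower())

# A deliberately naive stemmer: strip the first matching suffix,
# but only if at least three characters of the word remain.
SUFFIXES = ("ing", "ed", "es", "s")

def naive_stem(token):
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

tokens = tokenize("The cats were jumping over the fences.")
stems = [naive_stem(t) for t in tokens]
print(tokens)
print(stems)
```

Notice that "fences" becomes "fenc", which is not a real word. That is typical of stemming, which chops suffixes by rule. Lemmatization instead uses vocabulary and grammar to return a dictionary form such as "fence".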

Also explore tasks like part-of-speech tagging and named entity recognition.

To sum up, you should understand:

- Introduction to NLP and its applications

- Tokenization, stemming, and lemmatization

- Part-of-speech tagging and named entity recognition

- Basic linguistics concepts like syntax, semantics, and dependency parsing

The lectures on dependency parsing from CS 224n provide a good overview of the linguistics concepts you’d need. The free book Natural Language Processing with Python (NLTK) is also a good reference resource.

Try building a Named Entity Recognition (NER) app for a use case of your choice, such as parsing resumes and other documents.

Step 4: Traditional Natural Language Processing Techniques

Before deep learning revolutionized NLP, traditional techniques laid the groundwork. You should understand the Bag of Words (BoW) and TF-IDF representations, which convert text data into numerical form for machine learning models.
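
As a small illustration of both representations, the sketch below builds Bag of Words counts and TF-IDF weights by hand. The three documents are made up, and the smoothed IDF formula is just one common variant among several; in practice you would likely use scikit-learn's vectorizers rather than writing this yourself.

```python
import math
from collections import Counter

# Tiny made-up corpus for illustration.
docs = [
    "the cat sat on the mat",
    "the dog sat on the log",
    "cats and dogs are pets",
]
tokenized = [doc.split() for doc in docs]
vocab = sorted({tok for doc in tokenized for tok in doc})

def bag_of_words(tokens):
    """Count how often each vocabulary term appears in one document."""
    counts = Counter(tokens)
    return [counts[term] for term in vocab]

def idf(term):
    """Smoothed inverse document frequency (one common variant)."""
    df = sum(1 for doc in tokenized if term in doc)
    return math.log((1 + len(tokenized)) / (1 + df)) + 1

def tf_idf(tokens):
    """Term frequency (relative count) times inverse document frequency."""
    counts = Counter(tokens)
    return [counts[term] / len(tokens) * idf(term) for term in vocab]

bow = [bag_of_words(doc) for doc in tokenized]
weights = [tf_idf(doc) for doc in tokenized]
print(vocab)
print(bow[0])
```

In the first document, "cat" and "sat" have the same count, but "cat" receives a higher TF-IDF weight because it appears in fewer documents.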

Learn about N-grams, which capture the local context of words, and their applications in text classification. Then explore sentiment analysis and text summarization techniques. Additionally, understand Hidden Markov Models (HMMs) for tasks like part-of-speech tagging, as well as matrix factorization and algorithms like Latent Dirichlet Allocation (LDA) for topic modeling.
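
To see how N-grams capture context, consider this short sketch. The helper name `ngrams` and the example sentence are chosen only for illustration.

```python
from collections import Counter

def ngrams(tokens, n):
    """Return all contiguous runs of n tokens as tuples."""
    return [tuple(tokens[i : i + n]) for i in range(len(tokens) - n + 1)]

tokens = "not good at all but not bad either".split()
bigrams = ngrams(tokens, 2)
bigram_counts = Counter(bigrams)
print(bigrams)
```

A unigram model sees "good" and "bad" in isolation, while the bigrams ("not", "good") and ("not", "bad") keep the negation attached. This is one reason N-gram features often help in sentiment-style text classification.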

So you should familiarize yourself with:

- Bag of Words (BoW) and TF-IDF representation

- N-grams and text classification

- Sentiment analysis, topic modeling, and text summarization

- Hidden Markov Models (HMMs) for POS tagging
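
For a feel of how an HMM tags parts of speech, here is a toy Viterbi decoder. Every probability below is invented for illustration; a real tagger would estimate them from an annotated corpus, use a much larger tag set, and handle unseen words. The sketch assumes a non-empty sentence whose words all appear in the emission table.

```python
# Toy HMM with two tags. All probabilities are made up for illustration.
states = ["NOUN", "VERB"]
start_p = {"NOUN": 0.7, "VERB": 0.3}
trans_p = {
    "NOUN": {"NOUN": 0.3, "VERB": 0.7},
    "VERB": {"NOUN": 0.8, "VERB": 0.2},
}
emit_p = {
    "NOUN": {"dogs": 0.5, "chase": 0.1, "cats": 0.4},
    "VERB": {"dogs": 0.1, "chase": 0.8, "cats": 0.1},
}

def viterbi(words):
    """Return the most probable tag sequence for a list of known words."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s][words[0]], None) for s in states}]
    for word in words[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][word], p)
                for p in states
            )
        best.append(column)
    # Backtrack from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return list(reversed(path))

tags = viterbi(["dogs", "chase", "cats"])
print(tags)
```

The decoder keeps, for each tag at each position, only the best path that ends there, so it avoids enumerating every possible tag sequence.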

Here’s a learning resource: Complete Natural Language Processing Tutorial with Python.

And a couple of project ideas:

- Spam classifier

- Topic modeling on a news feed or similar dataset

Step 5: Deep Learning for Natural Language Processing

At this point, you’re familiar with the basics of NLP and deep learning. Now, apply your deep learning knowledge to NLP tasks. Start with word embeddings, such as Word2Vec and GloVe, which represent words as dense vectors and capture semantic relationships.
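
The idea that dense vectors capture semantic relationships usually comes down to measuring how close two vectors are. The sketch below uses cosine similarity on hypothetical, hand-picked 4-dimensional vectors; real Word2Vec or GloVe embeddings are learned from large corpora and typically have far more dimensions.

```python
import math

# Hypothetical vectors chosen by hand for illustration, not learned embeddings.
embeddings = {
    "king": [0.8, 0.7, 0.1, 0.0],
    "queen": [0.7, 0.8, 0.1, 0.1],
    "apple": [0.0, 0.1, 0.9, 0.7],
}

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word):
    """Return the other word whose vector is closest to the given word's vector."""
    others = (w for w in embeddings if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )

print(most_similar("king"))
```

With trained embeddings, the same nearest-neighbor lookup is what surfaces related words, since words used in similar contexts end up with similar vectors.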

Then delve into sequence models such as Recurrent Neural Networks (RNNs) for handling sequential data. Understand Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU), known for their ability to capture long-term dependencies in text data. Explore sequence-to-sequence models for tasks such as machine translation.
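
To show what "handling sequential data" means mechanically, here is a minimal sketch of a vanilla RNN forward pass in plain Python. The weights are arbitrary numbers picked for illustration (a trained model would learn them), and the names `rnn_step` and `run_rnn` are hypothetical. LSTMs and GRUs add gating on top of this basic recurrence.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b):
    """One step of a vanilla RNN: h = tanh(W_xh @ x + W_hh @ h_prev + b)."""
    h = []
    for i in range(len(h_prev)):
        total = b[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

# Arbitrary small weights, for illustration only.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [-0.4, 0.3]]
b = [0.0, 0.1]

def run_rnn(inputs):
    """Feed the inputs in order, carrying the hidden state forward."""
    h = [0.0, 0.0]
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b)
    return h

sequence = [[1.0, 0.0], [0.0, 1.0]]
final_state = run_rnn(sequence)
reversed_state = run_rnn(list(reversed(sequence)))
print(final_state)
print(reversed_state)
```

Feeding the same inputs in reverse order gives a different final state, because each hidden state depends on everything that came before it.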

Summing up:

- Word embeddings (Word2Vec, GloVe)

- RNNs

- LSTM and GRUs

- Sequence-to-sequence models

CS 224n: Natural Language Processing with Deep Learning is an excellent resource.

A couple of project ideas:

- Language translation app

- Question answering on custom corpus

Step 6: Natural Language Processing with Transformers

The advent of Transformers has revolutionized NLP. Understand the attention mechanism, a key component of Transformers that enables models to focus on relevant parts of the input. Learn about the Transformer architecture and its various applications.
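
The attention mechanism can be sketched in a few lines. The example below implements scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, in plain Python. The query, key, and value vectors are toy values chosen by hand; in an actual Transformer they come from learned projections of the input, and the computation is batched with a framework like PyTorch.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Toy inputs chosen by hand for illustration.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, 0.0]]

outputs, weights = attention(queries, keys, values)
print(weights[0])
print(outputs[0])
```

The query lines up with the first and third keys, so those positions receive most of the weight and dominate the output. This is the sense in which attention lets the model focus on relevant parts of the input.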

You should understand:

- Attention mechanism and its significance

- Introduction to Transformer architecture

- Applications of Transformers

- Leveraging pre-trained language models; fine-tuning pre-trained models for specific NLP tasks

The most comprehensive resource to learn NLP with Transformers is the Transformers course by the Hugging Face team.

Interesting projects you can build include:

- Customer chatbot/virtual assistant

- Emotion detection in text

Step 7: Build Projects, Keep Learning, and Stay Current

In a rapidly advancing field like natural language processing (or in any field, really), the only way forward is to keep learning and to work your way through increasingly challenging projects.

It's essential to work on projects, as they provide practical experience and reinforce your understanding of the concepts. Additionally, staying engaged with the NLP research community through blogs, research papers, and online communities will help you keep up with the advances in NLP.

ChatGPT from OpenAI hit the market in late 2022, and GPT-4 was released in early 2023. At the same time, we’ve seen (and are still seeing) releases of scores of open-source large language models, LLM-powered coding assistants, novel and resource-efficient fine-tuning techniques, and much more.

If you’re looking to up your LLM game, here’s a two-part compilation of helpful resources:

You can also explore frameworks like LangChain and LlamaIndex to build useful and interesting LLM-powered applications.

Wrapping Up

I hope you found this guide to mastering NLP helpful. Here’s a review of the 7 steps:

- Step 1: Python and ML fundamentals

- Step 2: Deep learning fundamentals

- Step 3: NLP 101 and essential linguistics concepts

- Step 4: Traditional NLP techniques

- Step 5: Deep learning for NLP

- Step 6: NLP with transformers

- Step 7: Build projects, keep learning, and stay current!

If you’re looking for tutorials, project walkthroughs, and more, check out the collection of NLP resources on KDnuggets.

Bala Priya C is a developer and technical writer from India. She likes working at the intersection of math, programming, data science, and content creation. Her areas of interest and expertise include DevOps, data science, and natural language processing. She enjoys reading, writing, coding, and coffee! Currently, she's working on learning and sharing her knowledge with the developer community by authoring tutorials, how-to guides, opinion pieces, and more.