LangChain 101: Build Your Own GPT-Powered Applications

LangChain is a Python library that helps you build GPT-powered applications in minutes. Get started with LangChain by building a simple question-answering app.

Image by Author

The success of ChatGPT and GPT-4 has shown how large language models trained with reinforcement learning can result in scalable and powerful NLP applications.

However, the usefulness of the response depends on the prompt, which led to users exploring the prompt engineering space. In addition, most real-world NLP use cases need more sophistication than a single ChatGPT session. And here’s where a library like LangChain can help!

LangChain is a Python library that helps you leverage large language models to build custom NLP applications.

In this guide, we’ll explore what LangChain is and what you can build with it. We’ll also get our feet wet by building a simple question-answering app with LangChain.

Let's get started!

What is LangChain?

LangChain, created by Harrison Chase, is a Python library that provides out-of-the-box support to build NLP applications using LLMs. You can connect to various data and computation sources, and build applications that perform NLP tasks on domain-specific data sources, private repositories, and much more.

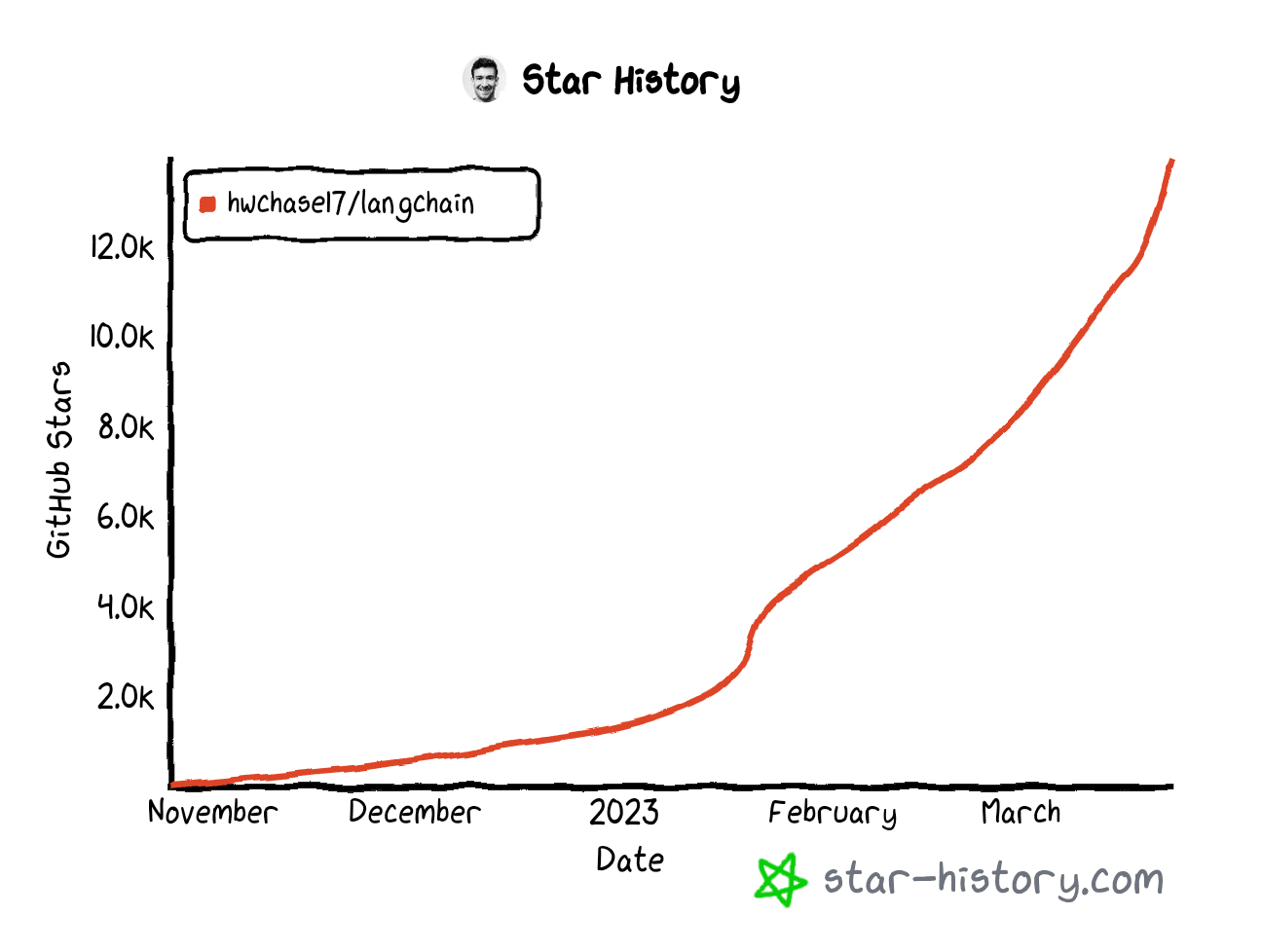

As of writing this article (in March 2023), the LangChain GitHub repository has over 14,000 stars with more than 270 contributors from across the world.

LangChain GitHub Star History | Generated on star-history.com

Interesting applications you can build using LangChain include (but are not limited to):

- Chatbots

- Summarization and question answering over specific domains

- Apps that query databases to fetch info and then process them

- Agents that solve specific tasks like math and reasoning puzzles

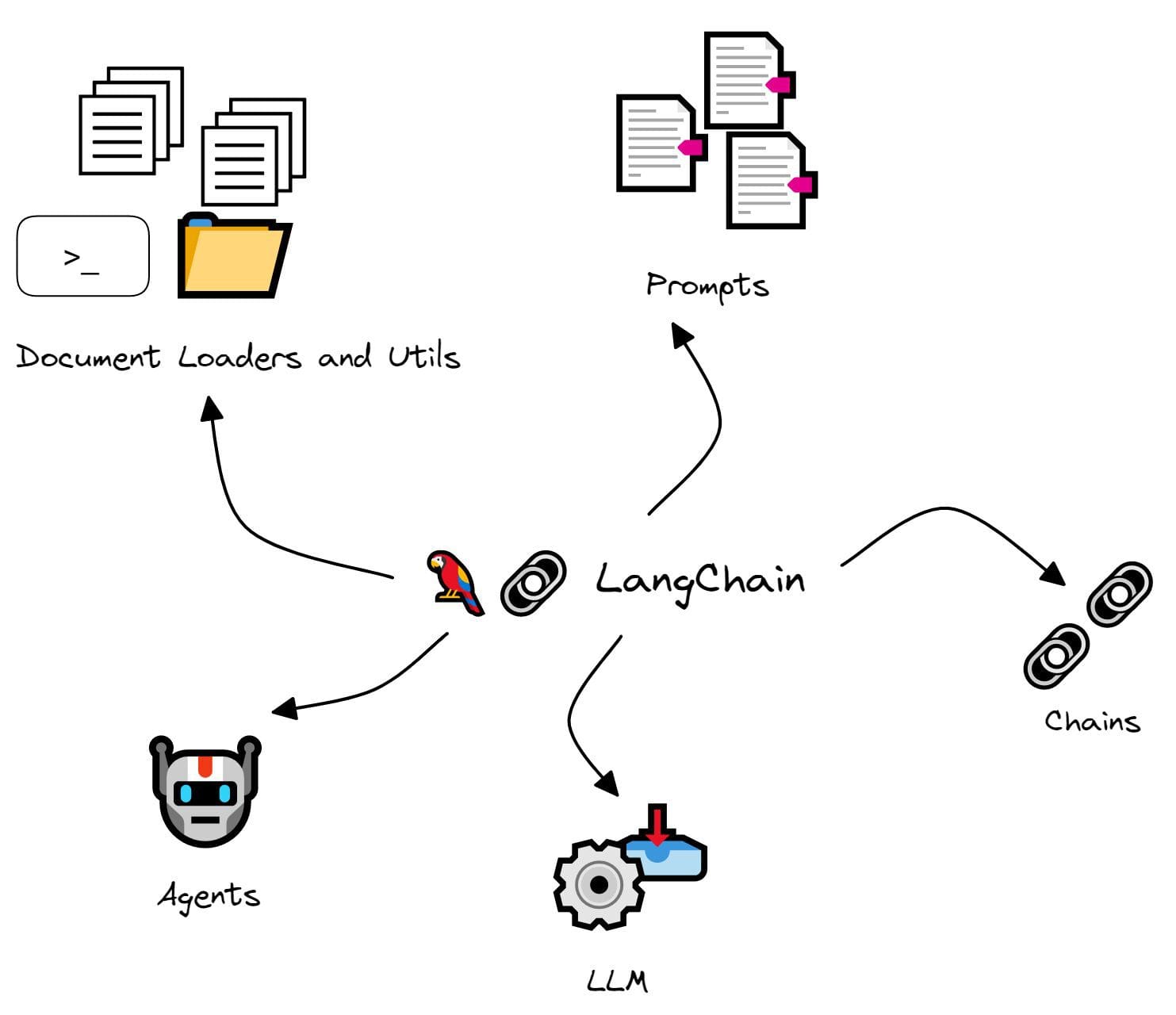

An Overview of LangChain Modules

Next, let's take a look at some of the modules in LangChain:

Image by Author

LLM

LLM is the fundamental component of LangChain. It is essentially a wrapper around a large language model that helps use the functionality and capability of a specific large language model.
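To make the idea of a wrapper concrete, here is a minimal sketch in plain Python. It does not use LangChain: `echo_model` and `LLMWrapper` are hypothetical names invented for this illustration, and the "model" simply echoes its prompt so that the example runs offline.

```python
from typing import Callable


def echo_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model API; it just echoes the prompt."""
    return f"[model output for: {prompt}]"


class LLMWrapper:
    """Illustrative wrapper: one uniform call interface over any backend."""

    def __init__(self, backend: Callable[[str], str], max_chars: int = 200):
        self.backend = backend
        self.max_chars = max_chars  # crude stand-in for an output-length setting

    def __call__(self, prompt: str) -> str:
        # Normalize the input, call the backend, and trim the output.
        response = self.backend(prompt.strip())
        return response[: self.max_chars]


llm = LLMWrapper(echo_model)
answer = llm("  What is LangChain?  ")
print(answer)
```

The point of the wrapper is that the calling code stays the same if the backend is swapped for a different model.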

Chains

As mentioned, LLM is the fundamental unit in LangChain. However, as the name LangChain suggests, you can chain together LLM calls depending on specific tasks.

For example, you may need to get data from a specific URL, summarize the returned text, and answer questions using the generated summary.

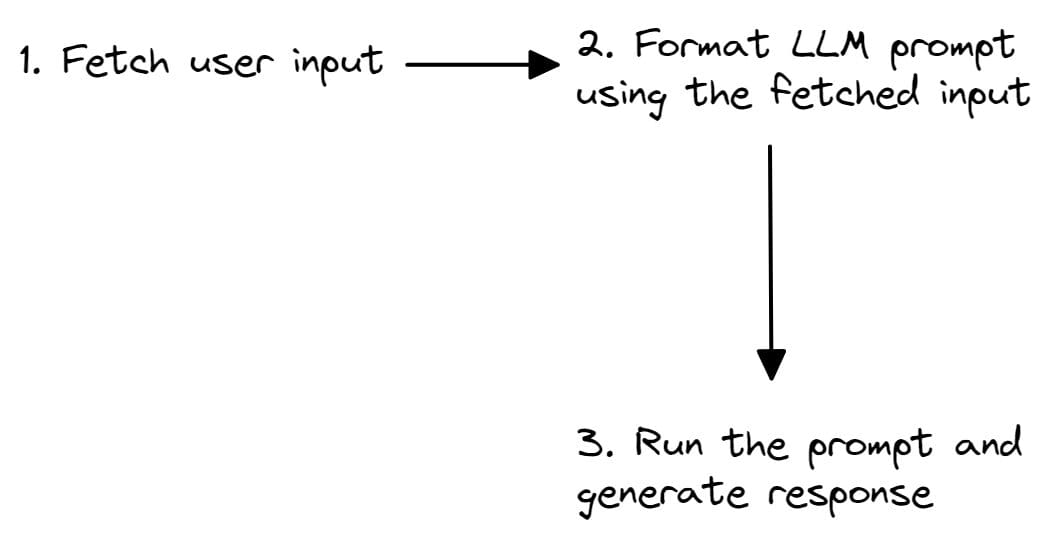

The chain can also be simple. You may need to read in user input, use it to construct the prompt, and then use that prompt to generate a response.

Image by Author
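The simple chain described above can be sketched as a composition of two functions. This is only an illustration of the idea, not LangChain code: `fake_llm`, `build_prompt`, and `simple_chain` are hypothetical names, and `fake_llm` is a stand-in that returns a canned string instead of calling a model.

```python
def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call so the sketch runs offline."""
    return f"<response to: {prompt}>"


def build_prompt(user_input: str) -> str:
    # Step 1: turn the raw user input into a prompt.
    return f"Answer concisely: {user_input.strip()}"


def simple_chain(user_input: str) -> str:
    # Step 2: feed the constructed prompt to the model and return its response.
    return fake_llm(build_prompt(user_input))


result = simple_chain("What is a chain? ")
print(result)
```

Longer chains follow the same pattern: the output of one step becomes the input of the next.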

Prompts

Prompts are at the core of any NLP application. Even in a ChatGPT session, the answer is only as helpful as the prompt. To that end, LangChain provides prompt templates that you can use to format inputs and a lot of other utilities.

Document Loaders and Utils

LangChain’s Document Loaders and Utils modules facilitate connecting to sources of data and computation, respectively.

Suppose you have a large corpus of text on economics that you'd like to build an NLP app over. Your corpus may be a mix of text files, PDF documents, HTML web pages, images, and more. Currently, document loaders leverage the Python library Unstructured to convert these raw data sources into text that can be processed.
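To get a feel for what "converting raw sources into text" involves, here is a rough sketch that uses only the Python standard library. It is not how LangChain or Unstructured work internally: `TextExtractor` and `load_as_text` are hypothetical names, and the sketch only handles `.txt` and `.html` files.

```python
import tempfile
from html.parser import HTMLParser
from pathlib import Path
from typing import List


class TextExtractor(HTMLParser):
    """Collects the text nodes of an HTML document and ignores the tags."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: List[str] = []

    def handle_data(self, data: str) -> None:
        text = data.strip()
        if text:
            self.chunks.append(text)


def load_as_text(path: Path) -> str:
    """Hypothetical loader: return plain text for .txt and .html files."""
    raw = path.read_text(encoding="utf-8")
    suffix = path.suffix.lower()
    if suffix in {".html", ".htm"}:
        extractor = TextExtractor()
        extractor.feed(raw)
        extractor.close()
        return " ".join(extractor.chunks)
    if suffix == ".txt":
        return raw.strip()
    raise ValueError(f"Unsupported file type: {suffix}")


# Demo with two throwaway files standing in for a mixed corpus.
with tempfile.TemporaryDirectory() as folder:
    html_file = Path(folder) / "notes.html"
    html_file.write_text("<h1>Supply</h1><p>and demand</p>", encoding="utf-8")
    txt_file = Path(folder) / "notes.txt"
    txt_file.write_text("Inflation basics\n", encoding="utf-8")
    corpus = [load_as_text(html_file), load_as_text(txt_file)]

print(corpus)
```

A real document loader has to cover many more formats (PDFs, images, and so on), which is why delegating to a dedicated library makes sense.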

The utils module provides Bash and Python interpreter sessions, among others. These are suitable for applications where it’ll help to interact directly with the underlying system, or where running a code snippet to compute a specific mathematical quantity or solve a problem is more reliable than having the model produce the answer directly.
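As a rough illustration of why an interpreter session is useful, the sketch below runs a code string and captures what it prints. The helper `run_python_snippet` is a hypothetical name and this is not LangChain's implementation. Note that calling `exec()` on untrusted code is unsafe, so treat this strictly as a demonstration.

```python
import contextlib
import io


def run_python_snippet(code: str) -> str:
    """Execute a code string and return whatever it printed.

    Illustration only: exec() on untrusted code is unsafe.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {"__name__": "__snippet__"})
    return buffer.getvalue().strip()


# A snippet of the kind a model might generate for a counting question:
# the number of distinct 5-card hands from a 52-card deck.
snippet = "import math\nprint(math.comb(52, 5))"
hands = run_python_snippet(snippet)
print(hands)
```

Here the exact value comes from running the code rather than from the model's text generation.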

Agents

We mentioned that “chains” can help chain together a sequence of LLM calls. In some tasks, however, the sequence of calls is not known in advance: the next step may depend on the user input and on the responses from previous steps.

For such applications, the LangChain library provides “Agents” that can take actions based on inputs along the way instead of a hardcoded deterministic sequence.
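The difference from a hardcoded chain can be sketched with a toy example. Nothing here is LangChain's agent API: `calculator`, `shout`, `choose_tool`, and `run_agent` are hypothetical names, and `choose_tool` is a simple rule standing in for the decision that a real agent would delegate to the LLM.

```python
from typing import Callable, Dict


def calculator(expression: str) -> str:
    """Toy tool: add whitespace-separated integers, e.g. '2 3 4'."""
    return str(sum(int(token) for token in expression.split()))


def shout(text: str) -> str:
    """Toy tool: upper-case the text."""
    return text.upper()


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "shout": shout}


def choose_tool(user_input: str) -> str:
    """Stand-in for the LLM's decision; a real agent would ask the model."""
    tokens = user_input.split()
    if tokens and all(token.isdecimal() for token in tokens):
        return "calculator"
    return "shout"


def run_agent(user_input: str) -> str:
    # The tool is picked at run time from the input, not fixed in advance.
    tool_name = choose_tool(user_input)
    return f"{tool_name}: {TOOLS[tool_name](user_input)}"


print(run_agent("2 3 4"))
print(run_agent("hello agent"))
```

The same `run_agent` call takes a different path depending on what the user typed, which is the behavior a fixed chain cannot express.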

In addition to the above, LangChain also offers integration with vector databases and has memory capabilities for maintaining state between LLM calls, and much more.
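To illustrate what "maintaining state between LLM calls" can mean in its simplest form, here is a sketch of a conversation buffer that prepends earlier turns to each new prompt. `ConversationBuffer` is a hypothetical class written for this example and is not one of LangChain's memory classes.

```python
from typing import List, Tuple


class ConversationBuffer:
    """Hypothetical memory: stores past turns and prepends them to new prompts."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def add_turn(self, user: str, assistant: str) -> None:
        self.turns.append((user, assistant))

    def build_prompt(self, new_input: str) -> str:
        # Replay the history so the model can "see" earlier exchanges.
        history = "".join(f"Human: {u}\nAI: {a}\n" for u, a in self.turns)
        return f"{history}Human: {new_input}\nAI:"


memory = ConversationBuffer()
memory.add_turn("My name is Ada.", "Nice to meet you, Ada!")
prompt_with_history = memory.build_prompt("What is my name?")
print(prompt_with_history)
```

Because each LLM call is stateless on its own, the history has to travel inside the prompt (or be retrieved from a store) for follow-up questions to work.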

Building a Question-Answering App with LangChain

Now that we’ve gained an understanding of LangChain, let’s build a question-answering app using LangChain in five easy steps:

Step 1 – Setting Up the Development Environment

Before we get coding, let’s set up the development environment. I assume you already have Python installed in your working environment.

You can now install the LangChain library using pip:

pip install langchain

As we’ll be using OpenAI’s language models, we need to install the OpenAI SDK as well:

pip install openai

Step 2 – Setting the OPENAI_API_KEY as an Environment Variable

Next, sign in to your OpenAI account. Navigate to account settings > View API Keys. Generate a secret key and copy it.

In your Python script, use the os module and tap into the dictionary of environment variables, os.environ. Set "OPENAI_API_KEY" to the secret API key that you just copied:

import os

os.environ["OPENAI_API_KEY"] = "your-api-key-here"
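If you would rather not hardcode the key in a script, one common alternative is to set the variable in your shell and only read it in Python. The helper below is a small sketch of that approach. `get_openai_api_key` is a hypothetical name, and its optional `env` parameter exists only so the function can be tried out without touching the real environment.

```python
import os
from typing import Mapping, Optional


def get_openai_api_key(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the API key from the environment, failing early if it is missing."""
    source = os.environ if env is None else env
    key = source.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError("Set the OPENAI_API_KEY environment variable first.")
    return key


# Demo with an explicit mapping; call get_openai_api_key() with no arguments
# to read from the real environment instead.
print(get_openai_api_key({"OPENAI_API_KEY": "your-api-key-here"}))
```

Failing early with a clear message is usually easier to debug than an authentication error deep inside a later API call.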

Step 3 – Simple LLM Call Using LangChain

Now that we’ve installed the required libraries, let's see how to make a simple LLM call using LangChain.

To do so, let’s import the OpenAI wrapper. In this example, we’ll use the text-davinci-003 model:

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003")

“text-davinci-003: Can do any language task with better quality, longer output, and consistent instruction-following than the curie, babbage, or ada models. Also supports inserting completions within text.” – OpenAI Docs

Let's define a question string and generate a response:

question = "Which is the best programming language to learn in 2023?"

print(llm(question))

Output >>

It is difficult to predict which programming language will be the most popular in 2023. However, the most popular programming languages today are JavaScript, Python, Java, C++, and C#, so these are likely to remain popular for the foreseeable future. Additionally, newer languages such as Rust, Go, and TypeScript are gaining traction and could become popular choices in the future.

Step 4 – Creating a Prompt Template

Let's ask another question on the top resources to learn a new programming language, say, Golang:

question = "What are the top 4 resources to learn Golang in 2023?"

print(llm(question))

Output >>

1. The Go Programming Language by Alan A. A. Donovan and Brian W. Kernighan

2. Go in Action by William Kennedy, Brian Ketelsen and Erik St. Martin

3. Learn Go Programming by John Hoover

4. Introducing Go: Build Reliable, Scalable Programs by Caleb Doxsey

While this works fine for starters, it quickly becomes repetitive when we’re trying to curate a list of resources to learn a list of programming languages and tech stacks.

Here’s where prompt templates come in handy. You can create a template that can be formatted using one or more input variables.

We can create a simple template to get the top k resources to learn any tech stack. Here, we use k and this as the input_variables:

from langchain import PromptTemplate

template = "What are the top {k} resources to learn {this} in 2023?"

prompt = PromptTemplate(template=template, input_variables=['k', 'this'])
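The placeholders in the template use the same curly-brace syntax as Python's `str.format`, so we can sanity-check a template with the standard library alone. The sketch below does not call LangChain: the `placeholders` helper is a hypothetical name, and the check simply confirms that the names in the template match the declared input variables before filling them in.

```python
from string import Formatter
from typing import List

template = "What are the top {k} resources to learn {this} in 2023?"
input_variables = ["k", "this"]


def placeholders(template_text: str) -> List[str]:
    """Return the named fields that appear in a format-style template."""
    return [name for _, name, _, _ in Formatter().parse(template_text) if name]


# Fail early if the template and the declared variables disagree.
if set(placeholders(template)) != set(input_variables):
    raise ValueError("Template placeholders do not match input_variables")

formatted = template.format(k=3, this="Rust")
print(formatted)
```

A mismatch between the two (for example, a typo in a placeholder name) is caught here before any prompt is sent to a model.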

Step 5 – Running Our First LLM Chain

We now have an LLM and a prompt template that we can reuse across multiple LLM calls.

llm = OpenAI(model_name="text-davinci-003")

prompt = PromptTemplate(template=template, input_variables=['k', 'this'])

Let’s go ahead and create an LLMChain:

from langchain import LLMChain

chain = LLMChain(llm=llm, prompt=prompt)

You can now pass in the inputs as a dictionary and run the LLM chain as shown:

inputs = {'k': 3, 'this': 'Rust'}

print(chain.run(inputs))

Output >>

1. Rust By Example - Rust By Example is a great resource for learning Rust as it provides a series of interactive exercises that teach you how to use the language and its features.

2. Rust Book - The official Rust Book is a comprehensive guide to the language, from the basics to the more advanced topics.

3. Rustlings - Rustlings is a great way to learn Rust quickly, as it provides a series of small exercises that help you learn the language step-by-step.
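Coming back to the original motivation, curating resources for a whole list of tech stacks, the same template can be reused by building one input dictionary per stack. The sketch below runs offline and only prints the prompts that would be sent. The `stacks` list is an example chosen for illustration, and with a live chain each dictionary could be passed to the chain in place of the `print` call.

```python
template = "What are the top {k} resources to learn {this} in 2023?"

# Example list of tech stacks; swap in whatever you want to learn.
stacks = ["Rust", "Golang", "TypeScript"]

# One input dictionary per stack, all sharing the same value of k.
inputs_list = [{"k": 3, "this": stack} for stack in stacks]

prompts = [template.format(**inputs) for inputs in inputs_list]
for prompt_text in prompts:
    # With a live chain, this is where chain.run(inputs) would be called.
    print(prompt_text)
```

Only the input dictionaries change between calls, while the template and the LLM stay the same.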

Summing Up

And that’s a wrap! You now know how to use LangChain to build a simple Q&A app. I hope you’ve gained a cursory understanding of LangChain’s capabilities. As a next step, try exploring LangChain to build more interesting applications. Happy coding!

References and Further Learning

- LangChain Documentation

- LangChain Quickstart Guide

- LangChain Demo + Q&A with Harrison Chase

- Chase, H. (2022). LangChain [Computer software]. https://github.com/hwchase17/langchain

Bala Priya C is a technical writer who enjoys creating long-form content. Her areas of interest include math, programming, and data science. She shares her learning with the developer community by authoring tutorials, how-to guides, and more.