5 Open Source Image Editing AI Models

From real-time edits to reasoning-driven image transformations, this guide breaks down five open source AI models that are quietly reshaping how images are created and edited.

Image by Author

# Introduction

AI image editing has advanced quickly. Tools like ChatGPT and Gemini have shown how powerful AI can be for creative work, leading many people to wonder how this will change the future of graphic design. At the same time, open source image editing models are rapidly improving and closing the quality gap with these proprietary tools.

These models allow you to edit images using simple text prompts. You can remove backgrounds, replace objects, enhance photos, and add artistic effects with minimal effort. What once required advanced design skills can now be done in a few steps.

In this article, we review five open source AI models that stand out for image editing. You can run them locally, use them through an API, or access them directly in the browser, depending on your workflow and needs.

# 1. FLUX.2 [klein] 9B

FLUX.2 [klein] is a high-performance open source image generation and editing model designed for speed, quality, and flexibility. Developed by Black Forest Labs, it combines image generation and image editing into a single compact architecture, enabling end-to-end inference in under a second on consumer hardware.

The FLUX.2 [klein] 9B Base model is an undistilled, full-capacity foundation model that supports text-to-image generation and multi-reference image editing, making it well suited for researchers, developers, and creatives who want fine control over outputs rather than relying on heavily distilled pipelines.

Key Features:

- Unified generation and editing: Handles text-to-image and image editing tasks within a single model architecture.

- Undistilled foundation model: Preserves the full training signal, offering greater flexibility, control, and output diversity.

- Multi-reference editing support: Allows image edits guided by multiple reference images for more precise results.

- Optimized for real-time use: Delivers state-of-the-art quality with very low latency, even on consumer GPUs.

- Open weights and fine-tuning ready: Designed for LoRA training, research, and custom pipelines, with compatibility across tools like Diffusers and ComfyUI.

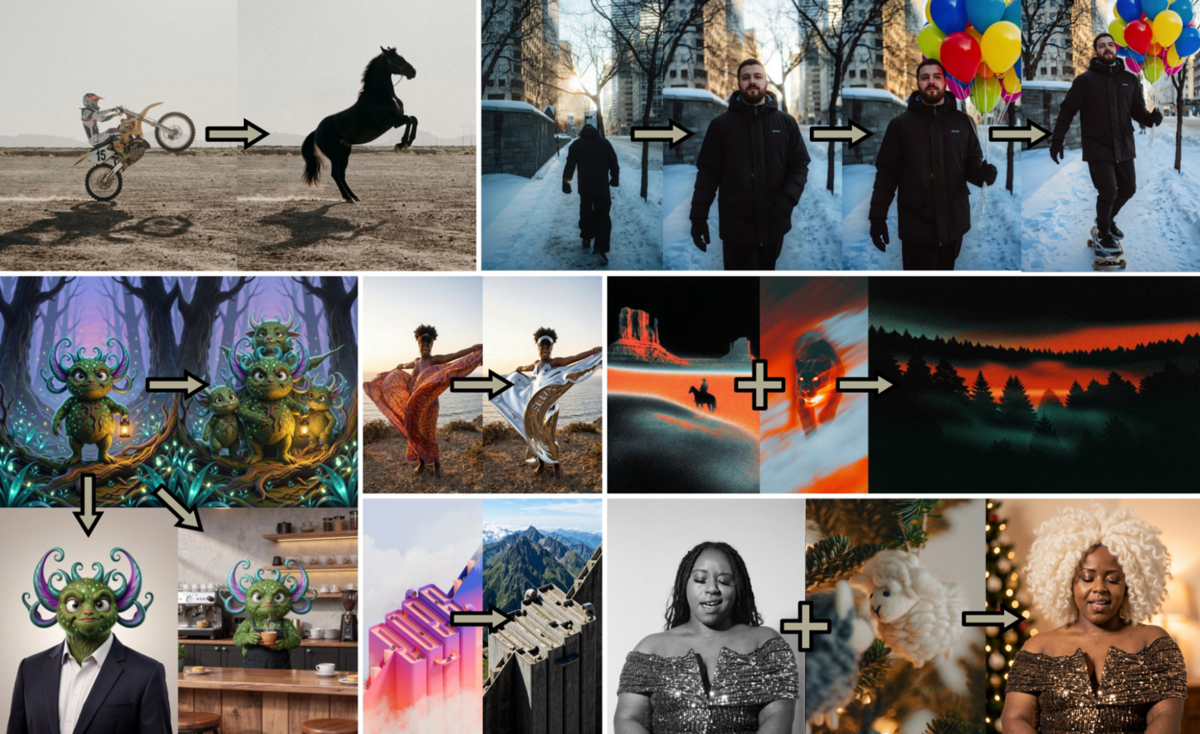

# 2. Qwen-Image-Edit-2511

Qwen-Image-Edit-2511 is an advanced open source image editing model focused on high consistency and precision. Developed by Alibaba Cloud as part of the Qwen model family, it builds on Qwen-Image-Edit-2509 with major improvements in image stability, character consistency, and structural accuracy.

The model is designed for complex image editing tasks such as multi-person edits, industrial design workflows, and geometry-aware transformations, while remaining easy to integrate through Diffusers and browser-based tools like Qwen Chat.

Key Features:

- Improved image and character consistency: Reduces image drift and preserves identity across single-person and multi-person edits.

- Multi-image and multi-person editing: Enables high-quality fusion of multiple reference images into a coherent final result.

- Built-in LoRA integration: Includes community-created LoRAs directly in the base model, unlocking advanced effects without extra setup.

- Industrial design and engineering support: Optimized for product design tasks such as material replacement, batch design, and structural edits.

- Enhanced geometric reasoning: Supports geometry-aware edits, including construction lines and design annotations for technical use cases.

# 3. FLUX.2 [dev] Turbo

FLUX.2 [dev] Turbo is a lightweight, high-speed image generation and editing adapter designed to dramatically reduce inference time without sacrificing quality.

Built as a distilled LoRA adapter for Black Forest Labs' FLUX.2 [dev] base model, it enables high-quality outputs in as few as eight inference steps. This makes it an excellent choice for real-time applications, rapid prototyping, and interactive image workflows where speed is critical.
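The "up to six times" figure in the feature list follows from step counts alone. Below is a back-of-the-envelope latency model. It assumes every denoising step costs the same in both setups, which holds if the LoRA is fused into the base weights, and it treats text encoding and VAE decoding as a fixed overhead. The per-step and overhead timings are made-up illustration values, not measurements.

```python
def step_speedup(baseline_steps: int, turbo_steps: int) -> float:
    """Ratio of denoising steps, i.e. the ideal speedup if every step costs the same."""
    if turbo_steps <= 0:
        raise ValueError("turbo_steps must be positive")
    return baseline_steps / turbo_steps


def estimated_latency(steps: int, seconds_per_step: float, fixed_overhead: float = 0.0) -> float:
    """Hypothetical latency model: per-step cost plus a fixed overhead (encoding, decoding)."""
    return steps * seconds_per_step + fixed_overhead


ideal = step_speedup(50, 8)
print(f"ideal speedup: {ideal:.2f}x")  # ideal speedup: 6.25x

# Illustration-only numbers: 0.5 s per step and 2 s of fixed overhead per image.
base = estimated_latency(50, seconds_per_step=0.5, fixed_overhead=2.0)
turbo = estimated_latency(8, seconds_per_step=0.5, fixed_overhead=2.0)
print(f"with overhead: {base / turbo:.2f}x")  # with overhead: 4.50x
```

The ideal ratio is 50 / 8 = 6.25. Once fixed costs are included, the gain in this toy example drops to 4.5x, which is why such speedups are quoted as "up to".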

Key Features:

- Ultra-fast 8-step inference: Generates images up to six times faster than the standard 50-step workflow.

- Quality preserved: Matches or exceeds the visual quality of the original FLUX.2 [dev] model despite heavy distillation.

- LoRA-based adapter: Lightweight and easy to plug into existing FLUX.2 pipelines with minimal overhead.

- Text-to-image and image editing support: Works across both generation and editing tasks in a single setup.

- Broad ecosystem support: Available via hosted APIs, Diffusers, and ComfyUI for flexible deployment options.
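Turbo ships as a LoRA adapter rather than a full checkpoint, and standard LoRA explains why adapters like this are small. In standard LoRA, a frozen weight matrix `W` of shape d × k is updated as `W + (alpha / r) * B @ A`, where `B` is d × r, `A` is r × k, and the rank r is small. The sketch below uses plain Python to merge a toy adapter into a weight matrix and compare parameter counts. The layer size and rank are hypothetical, not the Turbo adapter's actual configuration.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Naive product of an (n x m) and an (m x p) matrix."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * (B @ A), the standard LoRA weight merge."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def lora_param_count(d: int, k: int, rank: int) -> int:
    """Trainable parameters in B (d x r) plus A (r x k)."""
    return d * rank + rank * k


# Toy 2x2 example with rank 1: B is 2x1, A is 1x2.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[0.5, 0.5]]
print(merge_lora(W, B, A, alpha=2.0, rank=1))  # [[2.0, 1.0], [2.0, 3.0]]

# Hypothetical layer size and rank, chosen only for illustration.
d = k = 3072
full = d * k
lora = lora_param_count(d, k, rank=32)
print(full, lora, f"{lora / full:.1%}")
```

Once merged, the adapter adds no per-step compute. Kept separate, it can be loaded into or removed from a pipeline on demand, which is what makes LoRA adapters easy to plug into existing workflows.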

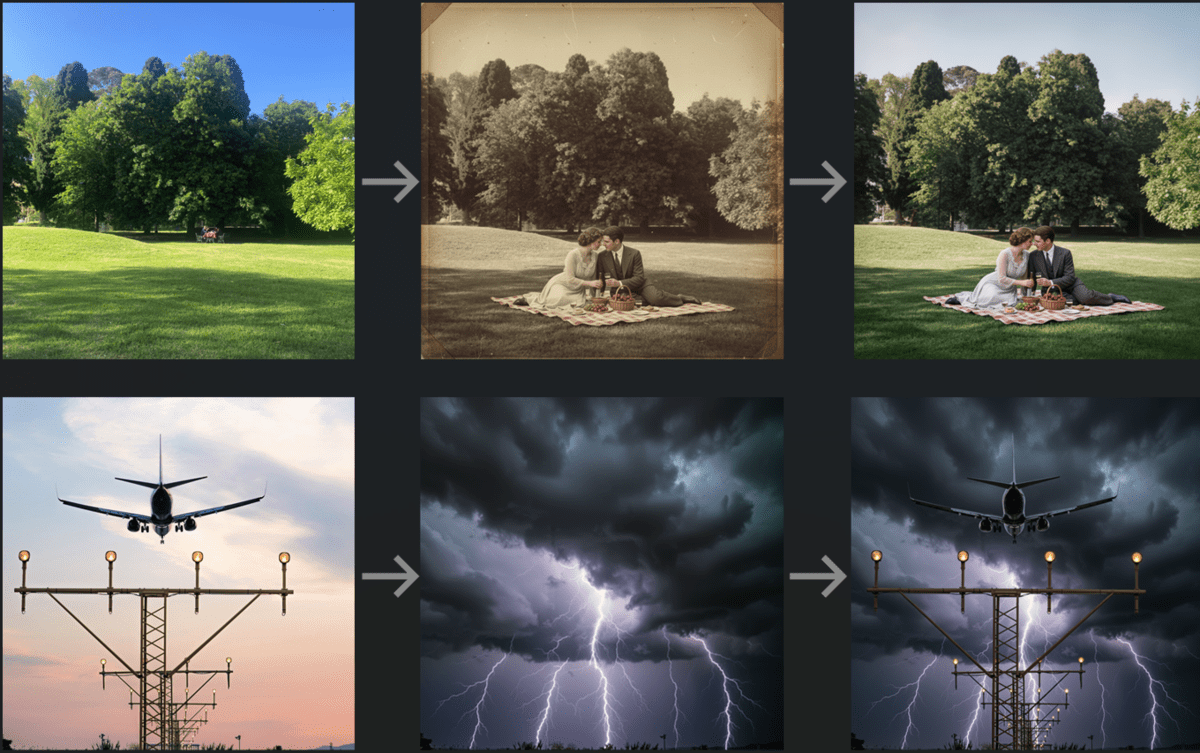

# 4. LongCat-Image-Edit

LongCat-Image-Edit is a state-of-the-art open source image editing model designed for high-precision, instruction-driven edits with strong visual consistency. Developed by Meituan as the image editing counterpart to LongCat-Image, it accepts editing instructions in both Chinese and English.

The model excels at following complex editing instructions while preserving non-edited regions, making it especially effective for multi-step and reference-guided image editing workflows.
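A simple way to check the "preserving non-edited regions" claim on your own images is to compare original and edited pixels outside the area you asked to change. The sketch below is a hypothetical evaluation helper in plain Python. It works on grayscale images stored as nested lists with a boolean edit mask. It is not part of LongCat-Image-Edit and is not the metric Meituan reports.

```python
from typing import List

Image = List[List[float]]  # grayscale pixel values, e.g. 0-255
Mask = List[List[bool]]    # True where the edit was requested


def unedited_region_mae(original: Image, edited: Image, mask: Mask) -> float:
    """Mean absolute pixel difference over pixels OUTSIDE the edit mask.

    Lower is better: 0.0 means the untouched area was reproduced exactly.
    """
    total, count = 0.0, 0
    for orig_row, edit_row, mask_row in zip(original, edited, mask):
        for o, e, m in zip(orig_row, edit_row, mask_row):
            if not m:
                total += abs(o - e)
                count += 1
    if count == 0:
        raise ValueError("mask covers the whole image; nothing to compare")
    return total / count


original = [[10, 10, 10], [10, 10, 10]]
edited = [[10, 12, 90], [10, 10, 80]]  # the right column was the requested edit
mask = [[False, False, True], [False, False, True]]
print(unedited_region_mae(original, edited, mask))  # 0.5
```

For multi-turn editing, running this check after each turn shows whether drift accumulates. Raw pixel differences are a crude proxy. Perceptual metrics such as SSIM or LPIPS are common alternatives.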

Key Features:

- Precise instruction-based editing: Supports global edits, local edits, text modification, and reference-guided editing with strong semantic understanding.

- Strong consistency preservation: Maintains layout, texture, color tone, and subject identity in non-edited regions, even across multi-turn edits.

- Bilingual editing support: Handles both Chinese and English prompts, enabling broader accessibility and use cases.

- State-of-the-art open source performance: Reports SOTA results among open source image editing models, along with improved inference efficiency.

- Text rendering optimization: Uses specialized character-level encoding for quoted text, enabling more accurate text generation within images.
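The quoted-text feature suggests a practical prompting habit: put the exact words you want rendered inside quotation marks. To illustrate what "character-level encoding for quoted text" means, the sketch below splits a prompt into ordinary word tokens and quoted spans broken into individual characters. It is a toy tokenizer written for this illustration and reflects an assumption about the general idea, not LongCat's actual encoder. The whitespace split stands in for a real subword tokenizer.

```python
import re
from typing import List, Tuple

# Matches text inside straight double quotes; curly quotes are not handled in this sketch.
QUOTED = re.compile(r'"([^"]*)"')


def split_prompt(prompt: str) -> List[Tuple[str, str]]:
    """Return (kind, token) pairs: 'word' for normal text, 'char' for quoted text."""
    tokens: List[Tuple[str, str]] = []
    pos = 0
    for match in QUOTED.finditer(prompt):
        # Text before the quote: coarse whitespace split (stand-in for a subword tokenizer).
        tokens += [("word", w) for w in prompt[pos:match.start()].split()]
        # Quoted text: one token per character so the exact spelling is kept.
        tokens += [("char", c) for c in match.group(1)]
        pos = match.end()
    tokens += [("word", w) for w in prompt[pos:].split()]
    return tokens


tokens = split_prompt('Change the sign to read "OPEN 24H" in red')
print([t for kind, t in tokens if kind == "char"])
# ['O', 'P', 'E', 'N', ' ', '2', '4', 'H']
```

Splitting rendered text into characters means a model sees every letter explicitly instead of guessing the spelling from a merged subword, which is the usual motivation for this kind of encoding.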

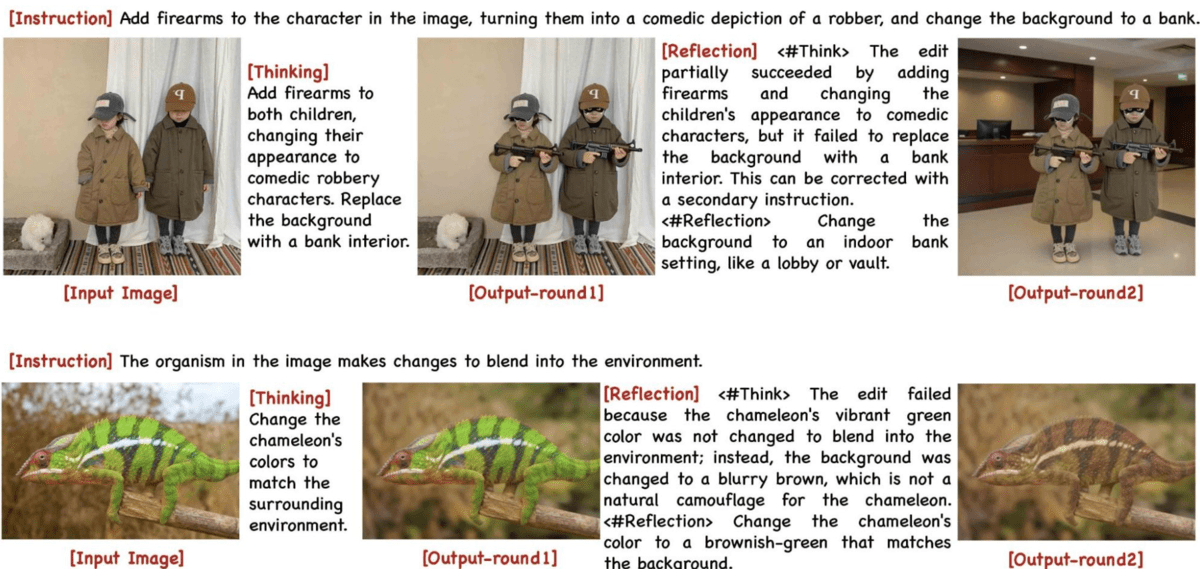

# 5. Step1X-Edit-v1p2

Step1X-Edit-v1p2 is a reasoning-enhanced open source image editing model designed to improve instruction understanding and editing accuracy. Developed by StepFun AI, it introduces native reasoning capabilities through structured thinking and reflection mechanisms. This allows the model to interpret complex or abstract edit instructions, apply changes carefully, and then review and correct the results before finalizing the output.

As a result, Step1X-Edit-v1p2 achieves strong performance on benchmarks such as KRIS-Bench and GEdit-Bench, especially in scenarios that require precise, multi-step edits.

Key Features:

- Reasoning-driven image editing: Uses explicit thinking and reflection stages to better understand instructions and reduce unintended changes.

- Strong benchmark performance: Delivers competitive results on KRIS-Bench and GEdit-Bench among open source image editing models.

- Improved instruction comprehension: Excels at handling abstract, detailed, or multi-part editing prompts.

- Reflection-based correction: Reviews edited outputs to fix errors and decide when editing is complete.

- Research-focused and extensible: Designed for experimentation, with multiple modes that trade off speed, accuracy, and reasoning depth.
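The think, edit, and reflect cycle described above can be pictured as a simple control loop. The sketch below is a conceptual outline only. `plan_edit`, `apply_edit`, and `reflect` are hypothetical stand-ins for model calls, not Step1X-Edit functions. `max_rounds` is an illustrative knob in the spirit of the speed versus reasoning-depth modes mentioned above.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Review:
    done: bool          # reflection judged the edit complete
    feedback: str = ""  # what to fix next round, if anything


@dataclass
class EditTrace:
    plans: List[str] = field(default_factory=list)
    rounds: int = 0


def reasoning_edit(
    image: str,
    instruction: str,
    plan_edit: Callable[[str, str, str], str],
    apply_edit: Callable[[str, str], str],
    reflect: Callable[[str, str], Review],
    max_rounds: int = 3,
) -> Tuple[str, EditTrace]:
    """Think -> edit -> reflect, repeating until reflection accepts the result."""
    trace = EditTrace()
    feedback = ""
    for _ in range(max_rounds):
        plan = plan_edit(image, instruction, feedback)  # "think": turn the request into a concrete step
        trace.plans.append(plan)
        image = apply_edit(image, plan)                 # perform the edit
        trace.rounds += 1
        review = reflect(image, instruction)            # "reflect": compare the result with the request
        if review.done:
            break
        feedback = review.feedback                      # carry corrections into the next round
    return image, trace


# Toy stand-ins: an "image" is a string, and edits append labels to it.
def toy_plan(image: str, instruction: str, feedback: str) -> str:
    return feedback or instruction.split(",")[0]


def toy_apply(image: str, plan: str) -> str:
    return f"{image}+{plan}"


def toy_reflect(image: str, instruction: str) -> Review:
    missing = [item for item in instruction.split(",") if item not in image]
    return Review(done=not missing, feedback=missing[0] if missing else "")


result, trace = reasoning_edit("portrait", "hat,sunglasses", toy_plan, toy_apply, toy_reflect)
print(result, trace.rounds)  # portrait+hat+sunglasses 2
```

Raising `max_rounds` trades latency for more chances to catch and fix mistakes. Lowering it to 1 removes the correction pass entirely.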

# Final Thoughts

Open source image editing models are maturing fast, offering creators and developers serious alternatives to closed tools. They now combine speed, consistency, and fine-grained control, making advanced image editing easier to experiment with and deploy.

The models at a glance:

- FLUX.2 [klein] 9B focuses on high-quality generation and flexible editing in a single, undistilled foundation model.

- Qwen-Image-Edit-2511 stands out for consistent, structure-aware edits, especially in multi-person and design-heavy scenarios.

- FLUX.2 [dev] Turbo LoRA prioritizes speed, delivering strong results in as few as eight inference steps.

- LongCat-Image-Edit excels at precise, instruction-driven edits while preserving visual consistency across multiple turns.

- Step1X-Edit-v1p2 pushes image editing further by adding reasoning, allowing the model to think through complex edits before finalizing them.

Abid Ali Awan (@1abidaliawan) is a certified data science professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master's degree in technology management and a bachelor's degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.