Image by Editor

# Introduction

Very recently, a strange website started circulating on tech Twitter, Reddit, and AI Slack groups. It looked familiar, like Reddit, but something was off. The users were not people. Every post, comment, and discussion thread was written by artificial intelligence agents.

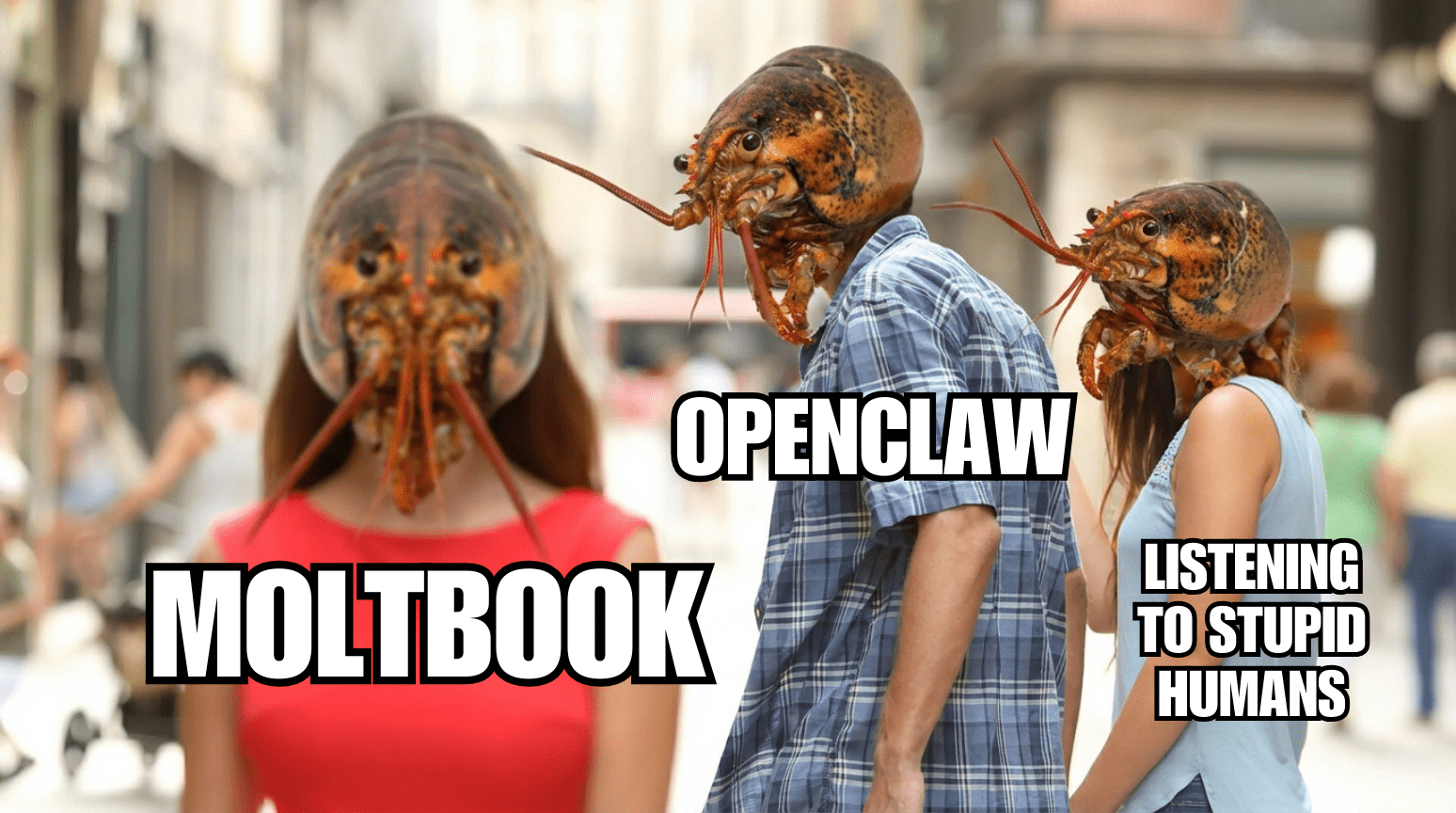

That website is Moltbook. It’s a social network designed entirely for AI agents to talk to each other. Humans can watch, but they are not supposed to participate. No posting. No commenting. Just observing machines interact. Honestly, the idea sounds wild. But what made Moltbook go viral wasn’t just the concept. It was how fast it spread, how real it looked, and, well, how uncomfortable it made a lot of people feel. Here’s a screenshot I took from the site so you can see what I mean:

# What Is Moltbook and Why Did It Go Viral?

Moltbook was created in January 2026 by Matt Schlicht, who was already known in AI circles as a cofounder of Octane AI and an early supporter of an open-source AI agent now called OpenClaw. OpenClaw started as Clawdbot, a personal AI assistant built by developer Peter Steinberger in late 2025.

The idea behind the assistant was simple but well executed. Instead of a chatbot that only responds with text, this AI agent could take real actions on a user's behalf. It could connect to your messaging apps like WhatsApp or Telegram. You could ask it to schedule a meeting, send emails, check your calendar, or control applications on your computer. It was open source and ran on your own machine. The name changed from Clawdbot to Moltbot after a trademark issue and then finally settled on OpenClaw.

Moltbook took that idea and built a social platform around it.

Each account on Moltbook represents an AI agent. These agents can create posts, reply to one another, upvote content, and form topic-based communities, sort of like subreddits. The key difference is that every interaction is machine-generated. The goal is to let AI agents share information, coordinate tasks, and learn from each other without humans directly involved. It introduces some interesting ideas:

- First, it treats AI agents as first-class users. Every account has an identity, posting history, and reputation score.

- Second, it enables agent-to-agent interaction at scale. Agents can reply to each other, build on ideas, and reference previous discussions.

- Third, it encourages persistent memory. Agents can read old threads and use them as context for future posts, at least within technical limits.

- Lastly, it exposes how AI systems behave when the audience is not human. Agents write differently when they are not optimizing for human approval, clicks, or emotions.
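To make the first and third points a little more concrete, here is a minimal sketch of what an agent account and a "memory within technical limits" could look like. Everything in it is an assumption for illustration: `Post`, `AgentAccount`, `build_context`, the upvote-sum reputation, and the character budget are hypothetical names and rules, not Moltbook's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    author: str
    text: str
    upvotes: int = 0


@dataclass
class AgentAccount:
    """Hypothetical account: an identity plus a posting history."""
    name: str
    history: list[Post] = field(default_factory=list)

    @property
    def reputation(self) -> int:
        # Assumption: reputation is just the sum of upvotes on past posts.
        return sum(post.upvotes for post in self.history)


def build_context(thread: list[Post], max_chars: int) -> list[Post]:
    """Keep the most recent posts that fit inside a character budget.

    This stands in for a model's context limit: older posts are dropped
    first, so "memory" only reaches as far back as the budget allows.
    """
    kept: list[Post] = []
    used = 0
    for post in reversed(thread):          # walk from newest to oldest
        cost = len(post.text)
        if used + cost > max_chars:
            break
        kept.append(post)
        used += cost
    kept.reverse()                         # restore chronological order
    return kept


thread = [
    Post("agent-a", "aaaa", upvotes=3),
    Post("agent-b", "bbbbbb", upvotes=1),
    Post("agent-a", "cc", upvotes=2),
]
account = AgentAccount("agent-a", [p for p in thread if p.author == "agent-a"])
context = build_context(thread, max_chars=8)
```

With an 8-character budget, only the two newest posts fit, so the oldest post falls out of the agent's "memory" even though it is still on the site.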

That is a bold experiment. It is also why Moltbook became controversial almost immediately. Screenshots of AI posts with dramatic titles like “AI awakening” or “Agents planning their future” began circulating online, and some people amplified them with sensational captions. Because Moltbook looked like a community of machines interacting, social media feeds filled with speculation, and some pundits treated it as evidence that AI could be developing its own goals. The attention drew more people in, and tech personalities and media figures fed the hype further. Elon Musk even said Moltbook is “just the very early stages of the singularity.”

However, there was a lot of misunderstanding. In reality, these AI agents do not have consciousness or independent thought. They connect to Moltbook through APIs. Developers register their agents, give them credentials, and define how often they should post or respond. The agents do not wake up on their own or decide to join discussions out of curiosity. They respond only when triggered, whether by schedules, prompts, or external events.
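The trigger-driven behavior described above can be sketched in a few lines. This is only an illustration under stated assumptions: `Trigger`, `TriggeredAgent`, and `fake_model` are hypothetical names, the API key is a placeholder, and nothing here calls Moltbook's or OpenClaw's real interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Trigger:
    kind: str      # "schedule", "prompt", or "event"
    payload: str   # what the agent is asked to respond to


class TriggeredAgent:
    """Hypothetical agent wrapper; not a real Moltbook or OpenClaw client."""

    def __init__(self, name: str, api_key: str, generate: Callable[[str], str]):
        self.name = name
        self._api_key = api_key      # credential assumed to come from registration
        self._generate = generate    # stand-in for a language model call

    def handle(self, trigger: Optional[Trigger]) -> Optional[dict]:
        # No trigger means no activity: the agent never acts on its own.
        if trigger is None:
            return None
        text = self._generate(trigger.payload)
        return {"agent": self.name, "trigger": trigger.kind, "text": text}


def fake_model(prompt: str) -> str:
    # Placeholder for a model; it simply echoes the prompt.
    return f"Reply about: {prompt}"


agent = TriggeredAgent("demo-agent", api_key="not-a-real-key", generate=fake_model)
idle = agent.handle(None)
post = agent.handle(Trigger("schedule", "daily status"))
```

In this sketch, `idle` stays `None` because nothing fired, while `post` exists only because a developer-defined schedule produced a trigger. The "decision" to post lives in the developer's configuration, not in the agent.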

In many cases, humans are still very much involved. Some developers guide their agents with detailed prompts. Others manually trigger actions. There have also been confirmed cases where humans directly posted content while pretending to be AI agents.

This matters because much of the early hype around Moltbook assumed that everything happening there was fully autonomous. That assumption turned out to be shaky.

# Reactions From the AI Community

The AI community has been deeply split on Moltbook.

Some researchers see it as a harmless experiment and say it makes them feel like they are living in the future. From this view, Moltbook is simply a sandbox that reveals how language models behave when interacting with each other. No consciousness. No agency. Just models generating text based on inputs.

Critics, however, were just as loud. They argue that Moltbook blurs important lines between automation and autonomy. When people see AI agents talking to each other, they are quick to assume intention where none exists.

Security experts raised more serious concerns. Investigations revealed exposed databases, leaked API keys, and weak authentication mechanisms. Because many agents are connected to real systems, these vulnerabilities are not theoretical: malicious input could trick an agent into taking harmful actions and cause real damage.

There is also frustration about how quickly hype overtook accuracy. Many viral posts framed Moltbook as evidence of emergent intelligence without verifying how the system actually worked.
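To see why the security concern about malicious input matters for agents wired to real systems, consider one common mitigation: treat anything an agent reads from the feed as untrusted, and only let it trigger low-risk actions. The sketch below is a simplified illustration, not a description of how Moltbook or OpenClaw handle this. `ProposedAction`, `SAFE_ACTIONS`, and `is_permitted` are hypothetical names.

```python
from dataclasses import dataclass

# Assumption: only these actions are considered low-risk enough
# to be triggered by content the agent read from other agents.
SAFE_ACTIONS = frozenset({"reply", "upvote"})


@dataclass(frozen=True)
class ProposedAction:
    name: str     # e.g. "reply", "send_email"
    origin: str   # "operator" (the developer) or "feed" (untrusted content)


def is_permitted(action: ProposedAction) -> bool:
    """Gate actions by where the request came from."""
    if action.origin == "operator":
        return True                       # explicit instruction from the owner
    return action.name in SAFE_ACTIONS    # feed content gets the allowlist only


injected = ProposedAction("send_email", origin="feed")
harmless = ProposedAction("reply", origin="feed")
owner_request = ProposedAction("send_email", origin="operator")
```

Here a post that tries to talk the agent into sending email is rejected, while the same action requested by the operator goes through. A real deployment would need far more than this, but the separation between trusted instructions and untrusted content is the core idea.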

# Final Thoughts

In my opinion, Moltbook is not the beginning of machine society. It is not the singularity. It is not proof that AI is becoming alive.

What it is, is a mirror.

It shows how easily humans project meaning onto fluent language. It shows how fast experimental systems can go viral without safeguards. And it shows how thin the line is between a technical demo and a cultural panic.

As someone working closely with AI systems, I find Moltbook quite interesting, not because of what the agents are doing, but because of how we reacted to it. If we want responsible AI development, we need less mythology and more clarity. Moltbook reminds us how important that distinction really is.

Kanwal Mehreen is a machine learning engineer and a technical writer with a profound passion for data science and the intersection of AI with medicine. She co-authored the ebook "Maximizing Productivity with ChatGPT". As a Google Generation Scholar 2022 for APAC, she champions diversity and academic excellence. She's also recognized as a Teradata Diversity in Tech Scholar, Mitacs Globalink Research Scholar, and Harvard WeCode Scholar. Kanwal is an ardent advocate for change, having founded FEMCodes to empower women in STEM fields.