Text Preprocessing in Python: Steps, Tools, and Examples

We outline the basic steps of text preprocessing, which are needed to transform text from human language into a machine-readable format for further processing. We also discuss text preprocessing tools.

By Olga Davydova, Data Monsters.

After a text is obtained, we start with text normalization. Text normalization includes:

- converting all letters to lower or upper case

- converting numbers into words or removing numbers

- removing punctuation, accent marks, and other diacritics

- removing whitespace

- expanding abbreviations

- removing stop words, sparse terms, and particular words

- text canonicalization
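Several of the normalization steps listed above are usually chained into a single function. The following is a minimal sketch, not a complete normalizer: the `ABBREVIATIONS` table and the `normalize` helper are hypothetical names introduced for illustration, and only the standard library is used.

```python
import re
import string

# Hypothetical abbreviation table; a real project would use a curated list.
ABBREVIATIONS = {"e.g.": "for example", "approx.": "approximately"}

def normalize(text: str) -> str:
    """Chain a few of the normalization steps listed above."""
    text = text.lower()                                    # case conversion
    for abbr, expansion in ABBREVIATIONS.items():          # abbreviation expansion
        text = text.replace(abbr, expansion)
    text = re.sub(r"\d+", "", text)                        # number removal
    text = text.translate(str.maketrans("", "", string.punctuation))  # punctuation removal
    return " ".join(text.split())                          # whitespace cleanup

print(normalize("  E.g. Box A holds approx. 3 red balls!  "))
# for example box a holds approximately red balls
```

The order matters: abbreviations are expanded before punctuation is stripped, otherwise the periods that identify them would already be gone. Plain `str.replace` can also match inside longer words, so a production version would match on token boundaries.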

We will describe text normalization steps in detail below.

Convert text to lowercase

Example 1. Convert text to lowercase

Python code:

input_str = "The 5 biggest countries by population in 2017 are China, India, United States, Indonesia, and Brazil."
input_str = input_str.lower()
print(input_str)

Output:

the 5 biggest countries by population in 2017 are china, india, united states, indonesia, and brazil.
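When the goal is caseless comparison rather than display, Python's built-in `str.casefold()` is a stricter alternative to `lower()`: it also folds characters such as the German "ß" to "ss". A small sketch:

```python
word_a = "Straße"
word_b = "STRASSE"

# lower() keeps "ß", so the two spellings still differ.
print(word_a.lower() == word_b.lower())        # False
# casefold() maps "ß" to "ss", so both become "strasse".
print(word_a.casefold() == word_b.casefold())  # True
```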

Remove numbers

Remove numbers if they are not relevant to your analyses. Usually, regular expressions are used to remove numbers.

Example 2. Number removal

Python code:

import re

input_str = 'Box A contains 3 red and 5 white balls, while Box B contains 4 red and 2 blue balls.'
result = re.sub(r'\d+', '', input_str)
print(result)

Output:

Box A contains red and white balls, while Box B contains red and blue balls.
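The normalization list also mentions converting numbers into words instead of removing them. Below is a hand-rolled sketch that only spells out integers below 100; `number_to_words` and `spell_small_numbers` are hypothetical helpers, and larger numbers (such as years) are deliberately left unchanged. For full coverage, third-party packages such as num2words exist.

```python
import re

# Word forms for 0-19 and for the tens; enough to spell any number below 100.
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]

def number_to_words(n: int) -> str:
    """Spell out 0 <= n < 100 in English words."""
    if n < 20:
        return ONES[n]
    tens, ones = divmod(n, 10)
    return TENS[tens] + ("-" + ONES[ones] if ones else "")

def spell_small_numbers(text: str) -> str:
    """Replace every integer below 100 with its word form; keep larger ones."""
    def replace(match):
        n = int(match.group())
        return number_to_words(n) if n < 100 else match.group()
    return re.sub(r"\d+", replace, text)

print(spell_small_numbers("Box A contains 3 red and 5 white balls, while Box B contains 4 red and 2 blue balls."))
# Box A contains three red and five white balls, while Box B contains four red and two blue balls.
```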

Remove punctuation

The following code removes this set of symbols (Python's string.punctuation): [!"#$%&'()*+,-./:;<=>?@[\]^_`{|}~]

Example 3. Punctuation removal

Python code:

import string

input_str = "This &is [an] example? {of} string. with.? punctuation!!!!"  # Sample string

# Python 3: the third argument of str.maketrans lists characters to delete
result = input_str.translate(str.maketrans("", "", string.punctuation))

print(result)

Output:

This is an example of string with punctuation
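Accent marks and other diacritics, also listed among the normalization steps, are not part of string.punctuation. A common standard-library approach, sketched below, is to decompose each character with Unicode normalization and drop the combining marks; `strip_accents` is a hypothetical helper name.

```python
import unicodedata

def strip_accents(text: str) -> str:
    """Remove diacritics by decomposing characters and dropping combining marks."""
    decomposed = unicodedata.normalize("NFKD", text)  # "é" -> "e" + combining acute accent
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

print(strip_accents("Café naïve résumé"))  # Cafe naive resume
```

NFKD also rewrites compatibility characters (for example, the ligature "ﬁ" becomes "fi"), while letters with no decomposition, such as "ø", pass through unchanged.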

Remove whitespace

To remove leading and trailing whitespace (spaces, tabs, newlines), you can use the strip() method:

Example 4. Whitespace removal

Python code:

input_str = " \t a string example\t "
input_str = input_str.strip()
print(repr(input_str))  # repr() shows the quotes, making the trimmed ends visible

Output:

'a string example'
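strip() leaves whitespace inside the string untouched. If runs of spaces, tabs, or newlines in the middle should also be collapsed into single spaces, one idiomatic sketch is to split on any whitespace and rejoin:

```python
input_str = " \t a  string \n example\t "

# split() with no argument splits on any run of whitespace and drops empty
# pieces, so joining with a single space both trims and collapses.
collapsed = " ".join(input_str.split())
print(repr(collapsed))  # 'a string example'
```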

Tokenization

Tokenization is the process of splitting a given text into smaller pieces called tokens. Words, numbers, punctuation marks, and other units can all be treated as tokens. Several tools for implementing tokenization are described in this table (“Tokenization” sheet).

Table 1: Tokenization tools
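To make the idea concrete without any third-party library, here is a minimal regular-expression tokenizer. It is a sketch under simple assumptions (a token is either a run of word characters or a single punctuation character) and is not the algorithm used by NLTK or any other tool in the table; `tokenize` is a hypothetical helper.

```python
import re
from typing import List

# \w+ matches runs of letters, digits, and underscores; [^\w\s] matches any single
# character that is neither a word character nor whitespace (i.e. punctuation).
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")

def tokenize(text: str) -> List[str]:
    return TOKEN_PATTERN.findall(text)

print(tokenize("NLTK is a leading platform, isn't it?"))
# ['NLTK', 'is', 'a', 'leading', 'platform', ',', 'isn', "'", 't', 'it', '?']
```

The contraction shows the limit of this approach: "isn't" is split at the apostrophe, whereas dedicated tokenizers such as NLTK's word_tokenize apply extra rules for contractions.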

Remove stop words

“Stop words” are the most common words in a language, such as “the”, “a”, “on”, “is”, and “all”. These words carry little meaning on their own and are usually removed from texts. Stop words can be removed using the Natural Language Toolkit (NLTK), a suite of libraries and programs for symbolic and statistical natural language processing.

Example 7. Stop words removal

Code:

from nltk.corpus import stopwords
from nltk.tokenize import word_tokenize

# Requires the NLTK 'stopwords' corpus and tokenizer models (see nltk.download()).
input_str = "NLTK is a leading platform for building Python programs to work with human language data."
stop_words = set(stopwords.words('english'))
tokens = word_tokenize(input_str)
result = [i for i in tokens if i not in stop_words]
print(result)

Output:

['NLTK', 'leading', 'platform', 'building', 'Python', 'programs', 'work', 'human', 'language', 'data', '.']

scikit-learn also provides a list of English stop words:

from sklearn.feature_extraction.text import ENGLISH_STOP_WORDS

It’s also possible to use spaCy, a free open-source library:

from spacy.lang.en.stop_words import STOP_WORDS

Remove sparse terms and particular words

In some cases, it’s necessary to remove sparse terms or particular words from texts. This can be done with the same stop-word removal technique, since any group of words can be treated as stop words.
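One way to pick the sparse terms is by document frequency: count in how many documents each term appears and treat the rare ones as stop words. The corpus and the `MIN_DOC_FREQ` threshold below are hypothetical values chosen for illustration.

```python
from collections import Counter

# Hypothetical mini-corpus: each document is already tokenized and lowercased.
docs = [
    ["text", "preprocessing", "in", "python"],
    ["python", "text", "tools"],
    ["stemming", "and", "lemmatization", "in", "python"],
]

MIN_DOC_FREQ = 2  # assumed threshold: keep terms that occur in at least 2 documents

# Document frequency: set(doc) ensures each term is counted once per document.
doc_freq = Counter(term for doc in docs for term in set(doc))
sparse_terms = {term for term, df in doc_freq.items() if df < MIN_DOC_FREQ}

# The sparse terms are then filtered exactly like a stop-word list.
filtered = [[t for t in doc if t not in sparse_terms] for doc in docs]
print(filtered)
# [['text', 'in', 'python'], ['python', 'text'], ['in', 'python']]
```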

Stemming

Stemming is the process of reducing words to their word stem, base, or root form (for example, books → book, looked → look). The two main algorithms are the Porter stemming algorithm (removes common morphological and inflectional endings from words [14]) and the Lancaster stemming algorithm (a more aggressive stemming algorithm). Several stemmers are described in the “Stemming” sheet of the table.
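The Porter algorithm works by applying ordered groups of suffix-rewriting rules. As an illustration, the sketch below implements only its first group (step 1a, which handles plural endings); the full algorithm has several more steps with extra conditions on the remaining stem, which NLTK's PorterStemmer implements. `porter_step_1a` is a hypothetical helper name.

```python
def porter_step_1a(word: str) -> str:
    """Step 1a of the Porter stemmer: plural suffixes (lowercase input assumed)."""
    if word.endswith("sses"):   # caresses -> caress
        return word[:-2]
    if word.endswith("ies"):    # ponies -> poni
        return word[:-2]
    if word.endswith("ss"):     # caress -> caress (unchanged)
        return word
    if word.endswith("s"):      # cats -> cat
        return word[:-1]
    return word

for w in ["caresses", "ponies", "caress", "cats", "books"]:
    print(w, "->", porter_step_1a(w))
```

"ponies → poni" shows that a stem does not have to be a dictionary word; it only has to be the same for related forms.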

| Name | Features | Programming languages |
|---|---|---|
| Natural Language Toolkit (NLTK) | Mac/Unix/Windows support | Python |
| | Contains many corpora, toy grammars, trained models, etc. [1] | |
| | Splitting text into words and sentences | Python |
| | Runs on Unix/Linux, MacOS/OS X, and Windows | Python |
| | Neural network models | |
| | Can process large, web-scale corpora | Python |
| | Runs on Linux, Windows, and OS X | |
| | Contains a large number of pre-built models for a variety of languages | Java |
| | A generic deep learning framework mainly specialized in sequence-to-sequence models | Python |
| | Can be used via command-line applications, client-server, or libraries [6] | Lua |
| | Currently has 3 main implementations (OpenNMT-lua, OpenNMT-py, OpenNMT-tf) | |
| General Architecture for Text Engineering (GATE) | Includes an information extraction system | Java |
| | Multiple languages support | |
| | | Java, C++ |
| | Cross-platform | |
| Memory-Based Shallow Parser (MBSP) | Client-server architecture | Python |
| | Includes binaries (TiMBL, MBT, and MBLEM) precompiled for Mac OS X | |
| RapidMiner | Unified platform; provides a GUI to design and execute analytical workflows | |
| | Visual workflow design | |
| | Breadth of functionality | |
| | Includes sophisticated tools for document classification and sequence tagging | Java |
| | Web mining module | Python |
| | Runs on Windows, Mac, and Linux | |
| Stanford Tokenizer | Not distributed separately, but included in several software downloads | Java |
| | Rate of about 1,000,000 tokens per second | |
| | A number of options affect how tokenization is performed [13] | |
| FreeLing | Provides language analysis functionalities | C++ |
| | Supports a variety of languages | |
| | Provides a command-line front-end | |

Stemming tools

Example 8. Stemming using NLTK:

Code:

from nltk.stem import PorterStemmer
from nltk.tokenize import word_tokenize  # requires NLTK tokenizer models (see nltk.download())

stemmer = PorterStemmer()
input_str = "There are several types of stemming algorithms."
input_str = word_tokenize(input_str)
for word in input_str:
    print(stemmer.stem(word))

Output:

There are sever type of stem algorithm.

Lemmatization

The aim of lemmatization, like stemming, is to reduce inflectional forms to a common base form. Unlike stemming, lemmatization does not simply chop off inflections. Instead, it uses lexical knowledge bases to get the correct base forms of words.

Lemmatization tools are included in the libraries described above: NLTK (WordNet Lemmatizer), spaCy, TextBlob, Pattern, gensim, Stanford CoreNLP, Memory-Based Shallow Parser (MBSP), Apache OpenNLP, Apache Lucene, General Architecture for Text Engineering (GATE), Illinois Lemmatizer, and DKPro Core.
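The difference between chopping suffixes and consulting a lexical knowledge base can be sketched with a tiny hand-made lexicon. `LEXICON`, `naive_stem`, and `lemmatize` are hypothetical names for illustration; real lemmatizers such as NLTK's WordNetLemmatizer combine a full lexical database with morphological rules.

```python
# Hypothetical mini lexicon mapping inflected forms to their lemmas.
LEXICON = {
    "been": "be", "had": "have", "done": "do",
    "languages": "language", "cities": "city", "mice": "mouse",
}

def naive_stem(word: str) -> str:
    """Crude suffix chopping, for contrast: strips a trailing 's'."""
    return word[:-1] if word.endswith("s") else word

def lemmatize(word: str) -> str:
    """Look the word up in the lexicon; unknown words are returned unchanged."""
    return LEXICON.get(word, word)

for w in ["been", "had", "done", "languages", "cities", "mice"]:
    print(f"{w:10} stem={naive_stem(w):10} lemma={lemmatize(w)}")
```

Suffix chopping turns "cities" into "citie" and cannot relate "mice" to "mouse", while the lookup handles irregular forms as long as they are in the lexicon.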

Example 9. Lemmatization using NLTK:

Code:

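from nltk.stem import WordNetLemmatizer
from nltk.tokenize import word_tokenize  # requires the 'wordnet' corpus and tokenizer models

lemmatizer = WordNetLemmatizer()
input_str = "been had done languages cities mice"
input_str = word_tokenize(input_str)
# lemmatize() treats every word as a noun (pos="n") unless told otherwise,
# so the verb forms need pos="v" to map to "be", "have", and "do".
pos_tags = ["v", "v", "v", "n", "n", "n"]
for word, pos in zip(input_str, pos_tags):
    print(lemmatizer.lemmatize(word, pos=pos))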

Output:

be have do language city mouse

Part of speech tagging (POS)

Part-of-speech tagging aims to assign parts of speech to each word of a given text (such as nouns, verbs, adjectives, and others) based on its definition and its context. There are many tools containing POS taggers including NLTK, spaCy, TextBlob, Pattern, Stanford CoreNLP, Memory-Based Shallow Parser (MBSP), Apache OpenNLP, Apache Lucene, General Architecture for Text Engineering (GATE), FreeLing, Illinois Part of Speech Tagger, and DKPro Core.
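A common baseline, the unigram tagger (NLTK ships one as UnigramTagger), assigns each word the tag it received most often in training data and ignores context entirely. The sketch below uses hypothetical training sentences; it shows why context matters, since "runs" is tagged as a verb even where a noun reading would be correct.

```python
from collections import Counter, defaultdict

# Hypothetical hand-tagged training data (Penn Treebank tags).
training = [
    [("the", "DT"), ("dog", "NN"), ("barks", "VBZ")],
    [("a", "DT"), ("dog", "NN"), ("runs", "VBZ")],
    [("the", "DT"), ("runs", "NNS"), ("were", "VBD"), ("long", "JJ")],
    [("she", "PRP"), ("runs", "VBZ"), ("daily", "RB")],
]

# Count how often each word receives each tag.
tag_counts = defaultdict(Counter)
for sentence in training:
    for word, tag_ in sentence:
        tag_counts[word][tag_] += 1

def tag(tokens, default="NN"):
    """Give each token its most frequent training tag; unknown words get `default`."""
    return [
        (tok, tag_counts[tok].most_common(1)[0][0] if tok in tag_counts else default)
        for tok in tokens
    ]

print(tag(["the", "dog", "runs", "fast"]))
# [('the', 'DT'), ('dog', 'NN'), ('runs', 'VBZ'), ('fast', 'NN')]
```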

Example 10. Part-of-speech tagging using TextBlob:

Code:

from textblob import TextBlob  # requires TextBlob corpora (python -m textblob.download_corpora)

input_str = "Parts of speech examples: an article, to write, interesting, easily, and, of"
result = TextBlob(input_str)
print(result.tags)

Output:

[('Parts', 'NNS'), ('of', 'IN'), ('speech', 'NN'), ('examples', 'NNS'), ('an', 'DT'), ('article', 'NN'), ('to', 'TO'), ('write', 'VB'), ('interesting', 'VBG'), ('easily', 'RB'), ('and', 'CC'), ('of', 'IN')]

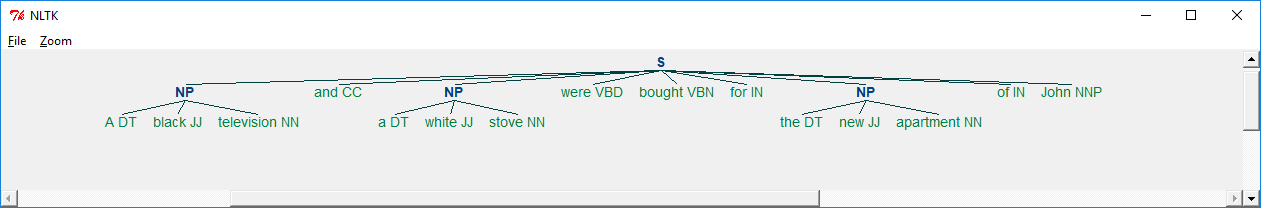

Chunking (shallow parsing)

Chunking is a natural language process that identifies constituent parts of sentences (nouns, verbs, adjectives, etc.) and links them to higher order units that have discrete grammatical meanings (noun groups or phrases, verb groups, etc.) [23]. Chunking tools: NLTK, TreeTagger chunker, Apache OpenNLP, General Architecture for Text Engineering (GATE), FreeLing.

Example 11. Chunking using NLTK:

The first step is to determine the part of speech for each word:

Code:

from textblob import TextBlob

input_str = "A black television and a white stove were bought for the new apartment of John."
result = TextBlob(input_str)
print(result.tags)

Output:

[('A', 'DT'), ('black', 'JJ'), ('television', 'NN'), ('and', 'CC'), ('a', 'DT'), ('white', 'JJ'), ('stove', 'NN'), ('were', 'VBD'), ('bought', 'VBN'), ('for', 'IN'), ('the', 'DT'), ('new', 'JJ'), ('apartment', 'NN'), ('of', 'IN'), ('John', 'NNP')]

The second step is chunking:

Code:

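import nltk

# Noun phrase: an optional determiner, any number of adjectives, then a noun
reg_exp = "NP: {<DT>?<JJ>*<NN>}"
rp = nltk.RegexpParser(reg_exp)
result = rp.parse(result.tags)  # result.tags comes from the TextBlob step above
print(result)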

Output:

(S (NP A/DT black/JJ television/NN) and/CC (NP a/DT white/JJ stove/NN) were/VBD bought/VBN for/IN (NP the/DT new/JJ apartment/NN) of/IN John/NNP)

It’s also possible to draw the sentence tree structure by calling result.draw().
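To show what the chunk rule <DT>?<JJ>*<NN> does, here is a hand-written, standard-library equivalent for this one pattern, applied to the tags from the first step. It is a sketch, not how NLTK implements RegexpParser (which handles arbitrary tag patterns); `np_chunks` is a hypothetical helper.

```python
# Tagged tokens from the first step of Example 11 (Penn Treebank tags).
tagged = [("A", "DT"), ("black", "JJ"), ("television", "NN"), ("and", "CC"),
          ("a", "DT"), ("white", "JJ"), ("stove", "NN"), ("were", "VBD"),
          ("bought", "VBN"), ("for", "IN"), ("the", "DT"), ("new", "JJ"),
          ("apartment", "NN"), ("of", "IN"), ("John", "NNP")]

def np_chunks(tagged_tokens):
    """Greedy left-to-right match of the pattern <DT>? <JJ>* <NN>."""
    chunks, i, n = [], 0, len(tagged_tokens)
    while i < n:
        j = i
        if j < n and tagged_tokens[j][1] == "DT":     # optional determiner
            j += 1
        while j < n and tagged_tokens[j][1] == "JJ":  # any number of adjectives
            j += 1
        if j < n and tagged_tokens[j][1] == "NN":     # exactly one singular noun
            chunks.append([w for w, _ in tagged_tokens[i:j + 1]])
            i = j + 1
        else:
            i += 1  # no chunk starts here; try the next token
    return chunks

print(np_chunks(tagged))
# [['A', 'black', 'television'], ['a', 'white', 'stove'], ['the', 'new', 'apartment']]
```

As in the NLTK output, "John/NNP" is not chunked because the rule only accepts the tag NN.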

Named entity recognition

Named-entity recognition (NER) aims to find named entities in text and classify them into pre-defined categories (names of persons, locations, organizations, times, etc.).

Named-entity recognition tools, including NLTK, spaCy, General Architecture for Text Engineering (GATE) ANNIE, Apache OpenNLP, Stanford CoreNLP, DKPro Core, MITIE, Watson Natural Language Understanding, TextRazor, and FreeLing, are described in the “NER” sheet of the table.
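The simplest form of NER is gazetteer lookup: match token spans against a list of known names with their categories. Many of the tools above use trained statistical or neural models instead, so treat this only as a baseline sketch. The `GAZETTEER` entries and the `find_entities` helper are hypothetical.

```python
# Hypothetical gazetteer: known (possibly multi-word) names and their entity types.
GAZETTEER = {
    ("united", "states"): "LOCATION",
    ("china",): "LOCATION",
    ("data", "monsters"): "ORGANIZATION",
    ("olga", "davydova"): "PERSON",
}
MAX_LEN = max(len(key) for key in GAZETTEER)

def find_entities(tokens):
    """Longest-match lookup of token spans in the gazetteer (case-insensitive)."""
    entities, i = [], 0
    lowered = [t.lower() for t in tokens]
    while i < len(tokens):
        # Try the longest possible span first, then shorter ones.
        for length in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            label = GAZETTEER.get(tuple(lowered[i:i + length]))
            if label:
                entities.append((" ".join(tokens[i:i + length]), label))
                i += length
                break
        else:
            i += 1  # no gazetteer entry starts at this token
    return entities

tokens = "Olga Davydova of Data Monsters compared China and the United States".split()
print(find_entities(tokens))
# [('Olga Davydova', 'PERSON'), ('Data Monsters', 'ORGANIZATION'), ('China', 'LOCATION'), ('United States', 'LOCATION')]
```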

| Name | Features | Programming languages |
|---|---|---|
| Baleen | Works with unstructured and semi-structured data sources | Java |
| | Includes a built-in server | |
| | Tags plain text with named entities | Java |
| | 4-label type set (people / organizations / locations / miscellaneous) | |
| | Returns the list of terms recognized in the text, including their exact location (annotations) | GNU awk |
| | Uses deep learning technology to determine representations of character groupings | |
| | Discovers the most relevant entities in textual content | |
| | Accurate, real-time, customizable | |
| | Finds the names of people, organizations, or locations | Java |
| | Source code and unit tests | |
| | A neural architecture | Python |
| MinorThird | Combines tools for annotating and visualizing text with state-of-the-art learning methods | Java |
| | Supports active learning and online learning | |
| | Person, location, and organization annotators | Python SDK |
| | Supports English, Chinese, French, German, Japanese, and Spanish | Node SDK |
| | | Swift SDK |
| | | Java SDK |
| | | Unity SDK |
| | | .NET Standard library |
| | Modular and flexible; data is transformed into RDF graphs and can be queried with SPARQL | |
| | Uses standards-based technologies as defined by W3C | |
| | Enriches information with valuable metadata | |
| | 20 supported languages | Bindings: cURL, Python, PHP, Java, R, Ruby, C#, Node.js |
| | 18 entity types detected | |
| | Filter for key entities | |

NER Tools