2018’s Top 7 R Packages for Data Science and AI

This is a list of the best packages that changed our lives this year, compiled from my weekly digests.

4. DataExplorer — Automate data exploration and treatment

https://github.com/boxuancui/DataExplorer

Exploratory Data Analysis (EDA) is an initial and important phase of data analysis/predictive modeling. During this process, analysts/modelers take a first look at the data, generate relevant hypotheses, and decide on next steps. However, the EDA process can be a hassle at times. This R package aims to automate most of the data handling and visualization, so that users can focus on studying the data and extracting insights.

Installation

The package can be installed directly from CRAN.

```r
install.packages("DataExplorer")
```

Usage

With the package you can create reports, plots, and tables like this:

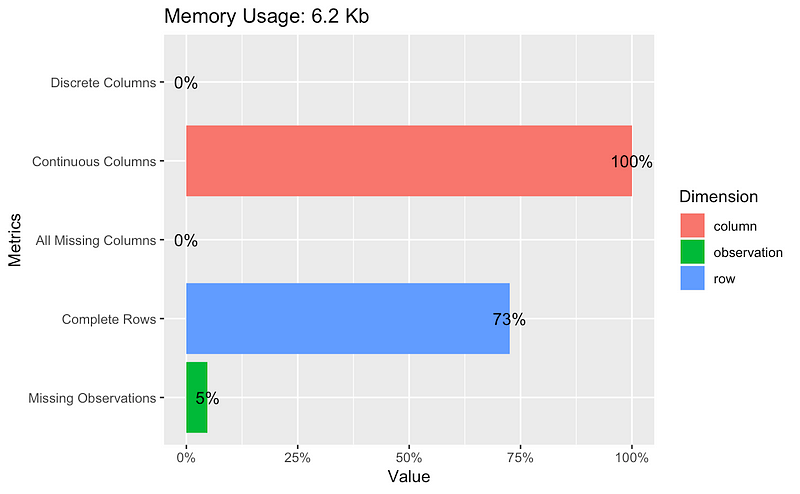

```r
library(DataExplorer)

## Plot basic description for airquality data
plot_intro(airquality)
```

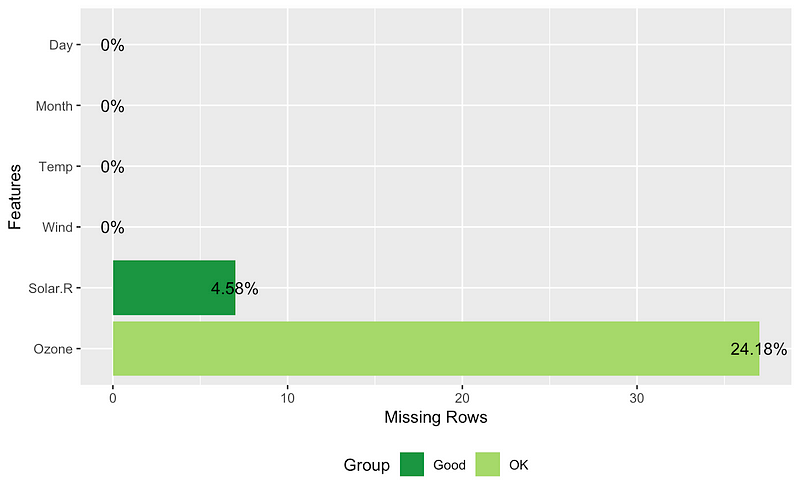

```r
## View missing value distribution for airquality data
plot_missing(airquality)
```

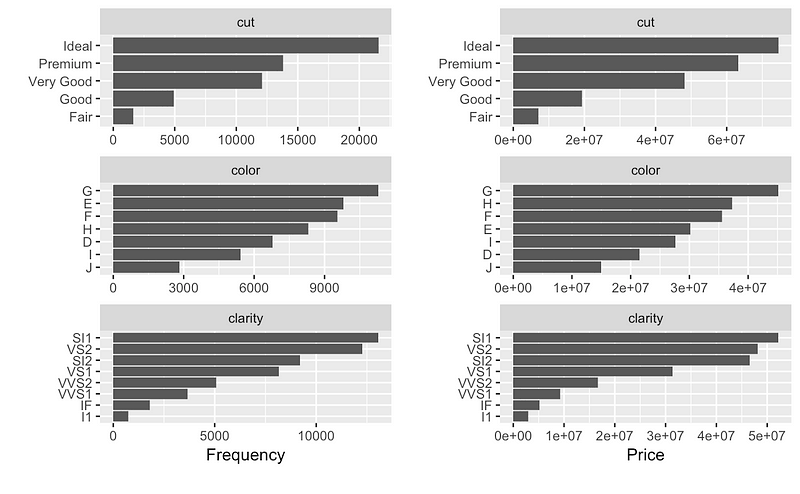

```r
library(ggplot2)  # provides the diamonds dataset

## Left: frequency distribution of all discrete variables
plot_bar(diamonds)
## Right: `price` distribution of all discrete variables
plot_bar(diamonds, with = "price")
```

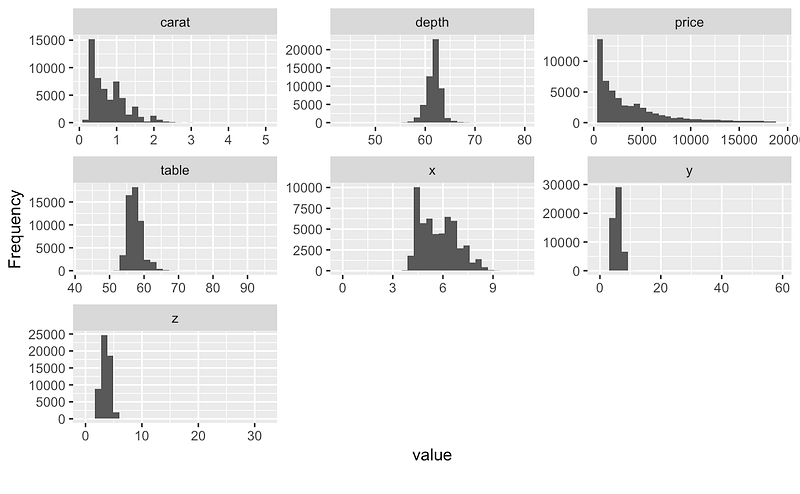

```r
## View histogram of all continuous variables
plot_histogram(diamonds)
```
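If you want to see roughly what `plot_intro()` and `plot_missing()` summarize, the underlying counts can be approximated in base R. This is a sketch under stated assumptions, not DataExplorer's code: `profile_data()` and `missing_share()` are hypothetical helper names, and the package's own figures (some of which it reports as percentages) may differ in detail.

```r
# Hypothetical helpers approximating the numbers behind DataExplorer's
# plot_intro() and plot_missing(); not the package's own implementation.

# Overview counts, similar in spirit to plot_intro()
profile_data <- function(df) {
  is_continuous <- vapply(df, is.numeric, logical(1))
  c(
    rows                 = nrow(df),
    columns              = ncol(df),
    discrete_columns     = sum(!is_continuous),
    continuous_columns   = sum(is_continuous),
    all_missing_columns  = sum(vapply(df, function(x) all(is.na(x)), logical(1))),
    complete_rows        = sum(complete.cases(df)),
    missing_observations = sum(is.na(df))
  )
}

# Share of missing values per column, similar in spirit to plot_missing()
missing_share <- function(df) sort(colMeans(is.na(df)), decreasing = TRUE)

profile_data(airquality)
round(missing_share(airquality), 3)
```

For airquality this reports 153 rows, 111 complete rows, and 44 missing values, all of them in `Ozone` and `Solar.R`.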

You can find many more examples like these on the package’s official webpage and in its vignette.

3. Sparklyr — R interface for Apache Spark

https://github.com/rstudio/sparklyr

Sparklyr will allow you to:

- Connect to Spark from R. The sparklyr package provides a complete dplyr backend.
- Filter and aggregate Spark datasets, and then bring them into R for analysis and visualization.
- Use Spark’s distributed machine learning library from R.
- Create extensions that call the full Spark API and provide interfaces to Spark packages.

Installation

You can install the Sparklyr package from CRAN as follows:

```r
install.packages("sparklyr")
```

You should also install a local version of Spark for development purposes:

```r
library(sparklyr)
spark_install(version = "2.3.1")
```

Usage

The first part of using Spark is always creating a context and connecting to a local or remote cluster.

Here we’ll connect to a local instance of Spark via the spark_connect function:

```r
library(sparklyr)
sc <- spark_connect(master = "local")
```

Using sparklyr with dplyr and ggplot2

We’ll start by copying some datasets from R into the Spark cluster (note that you may need to install the nycflights13 and Lahman packages in order to execute this code):

```r
install.packages(c("nycflights13", "Lahman"))

library(dplyr)
iris_tbl <- copy_to(sc, iris)
flights_tbl <- copy_to(sc, nycflights13::flights, "flights")
batting_tbl <- copy_to(sc, Lahman::Batting, "batting")
src_tbls(sc)
## [1] "batting" "flights" "iris"
```

To start with, here’s a simple filtering example:

```r
# filter by departure delay and print the first few records
flights_tbl %>% filter(dep_delay == 2)
## # Source: lazy query [?? x 19]
## # Database: spark_connection
##     year month   day dep_time sched_dep_time dep_delay arr_time
##    <int> <int> <int>    <int>          <int>     <dbl>    <int>
##  1  2013     1     1      517            515         2      830
##  2  2013     1     1      542            540         2      923
##  3  2013     1     1      702            700         2     1058
##  4  2013     1     1      715            713         2      911
##  5  2013     1     1      752            750         2     1025
##  6  2013     1     1      917            915         2     1206
##  7  2013     1     1      932            930         2     1219
##  8  2013     1     1     1028           1026         2     1350
##  9  2013     1     1     1042           1040         2     1325
## 10  2013     1     1     1231           1229         2     1523
## # ... with more rows, and 12 more variables: sched_arr_time <int>,
## #   arr_delay <dbl>, carrier <chr>, flight <int>, tailnum <chr>,
## #   origin <chr>, dest <chr>, air_time <dbl>, distance <dbl>, hour <dbl>,
## #   minute <dbl>, time_hour <dttm>
```

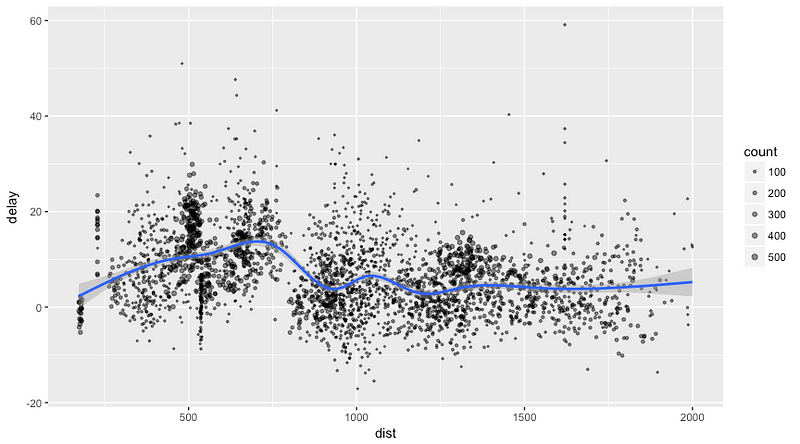

Let’s plot the data on flight delays:

```r
delay <- flights_tbl %>%
  group_by(tailnum) %>%
  summarise(count = n(), dist = mean(distance), delay = mean(arr_delay)) %>%
  filter(count > 20, dist < 2000, !is.na(delay)) %>%
  collect()

# plot delays
library(ggplot2)
ggplot(delay, aes(dist, delay)) +
  geom_point(aes(size = count), alpha = 1/2) +
  geom_smooth() +
  scale_size_area(max_size = 2)
## `geom_smooth()` using method = 'gam'
```
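To make the logic of that pipeline concrete without a Spark connection, here is a base R sketch of the same per-plane aggregation on a tiny made-up data frame. The `toy_flights` values and the `summarise_delays()` helper are hypothetical, and the thresholds are parameters so the toy data can pass them. One detail worth mirroring: Spark SQL's `AVG()` skips nulls, so the sketch uses `na.rm = TRUE`.

```r
# Hypothetical toy data (made-up values, not from nycflights13)
toy_flights <- data.frame(
  tailnum   = c("N1", "N1", "N1", "N2", "N2", "N3"),
  distance  = c(200, 220, 210, 3000, 3100, 500),
  arr_delay = c(5, NA, 15, -3, 7, 40)
)

# Per-plane summary: number of flights, mean distance, mean arrival delay.
# na.rm = TRUE mirrors Spark SQL's AVG(), which ignores NULLs.
summarise_delays <- function(df, min_count, max_dist) {
  groups <- split(df, df$tailnum)
  out <- data.frame(
    tailnum = names(groups),
    count   = vapply(groups, nrow, integer(1)),
    dist    = vapply(groups, function(g) mean(g$distance), numeric(1)),
    delay   = vapply(groups, function(g) mean(g$arr_delay, na.rm = TRUE), numeric(1)),
    row.names = NULL
  )
  # Keep planes with enough flights, a short average distance and a defined delay
  out[out$count > min_count & out$dist < max_dist & !is.na(out$delay), ]
}

delay_toy <- summarise_delays(toy_flights, min_count = 1, max_dist = 2000)
delay_toy
```

Only `N1` survives here: `N2` averages more than 2,000 miles and `N3` has a single flight.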

Machine Learning with Sparklyr

You can orchestrate machine learning algorithms in a Spark cluster via the machine learning functions within Sparklyr. These functions connect to a set of high-level APIs built on top of DataFrames that help you create and tune machine learning workflows.

Here’s an example where we use ml_linear_regression to fit a linear regression model. We’ll use the built-in mtcars dataset to see if we can predict a car’s fuel consumption (mpg) based on its weight (wt), and the number of cylinders the engine contains (cyl). We’ll assume in each case that the relationship between mpg and each of our features is linear.

```r
# copy mtcars into spark
mtcars_tbl <- copy_to(sc, mtcars)

# transform our data set, and then partition into 'training', 'test'
partitions <- mtcars_tbl %>%
  filter(hp >= 100) %>%
  mutate(cyl8 = cyl == 8) %>%
  sdf_partition(training = 0.5, test = 0.5, seed = 1099)

# fit a linear model to the training dataset
fit <- partitions$training %>%
  ml_linear_regression(response = "mpg", features = c("wt", "cyl"))
fit
## Call: ml_linear_regression.tbl_spark(., response = "mpg", features = c("wt", "cyl"))
##
## Formula: mpg ~ wt + cyl
##
## Coefficients:
## (Intercept)          wt         cyl
##   33.499452   -2.818463   -0.923187
```

For linear regression models produced by Spark, we can use summary() to learn a bit more about the quality of our fit and the statistical significance of each of our predictors.

```r
summary(fit)
## Call: ml_linear_regression.tbl_spark(., response = "mpg", features = c("wt", "cyl"))
##
## Deviance Residuals:
##    Min     1Q Median     3Q    Max
## -1.752 -1.134 -0.499  1.296  2.282
##
## Coefficients:
## (Intercept)          wt         cyl
##   33.499452   -2.818463   -0.923187
##
## R-Squared: 0.8274
## Root Mean Squared Error: 1.422
```
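If you'd like a local point of comparison, the same steps can be sketched in base R with `lm()`. This is an assumption-based analogue, not sparklyr: the 50/50 split below uses `sample()`, so it will not reproduce the rows `sdf_partition()` picked, and the coefficients will differ from the Spark output above. The names `mt_hp`, `training`, `testing`, `fit_local`, and `rmse_test` are illustrative.

```r
# Base R analogue of the sparklyr pipeline (no Spark required)
mt_hp <- subset(mtcars, hp >= 100)   # filter(hp >= 100)
mt_hp$cyl8 <- mt_hp$cyl == 8          # mutate(cyl8 = cyl == 8)

# Stand-in for sdf_partition(training = 0.5, test = 0.5, seed = 1099):
# an exact half/half split of row indices using base R's RNG
set.seed(1099)
train_idx <- sample(nrow(mt_hp), size = floor(nrow(mt_hp) / 2))
training  <- mt_hp[train_idx, ]
testing   <- mt_hp[-train_idx, ]

# Same model specification as above: mpg ~ wt + cyl
fit_local <- lm(mpg ~ wt + cyl, data = training)
coef(fit_local)
summary(fit_local)$r.squared

# Root mean squared error on the held-out half
rmse_test <- sqrt(mean((testing$mpg - predict(fit_local, newdata = testing))^2))
rmse_test
```

Spark's random split only approximates the requested weights, whereas this sketch fixes the training half at exactly 11 of the 23 cars; either way, the held-out rows give an error estimate the in-sample R-squared cannot.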

Spark machine learning supports a wide array of algorithms and feature transformations, and as illustrated above, it’s easy to chain these functions together with dplyr pipelines.

Check out more about machine learning with sparklyr, along with general information about the package and more examples, at sparklyr: An R interface to Spark (spark.rstudio.com).

2. Drake — An R-focused pipeline toolkit for reproducibility and high-performance computing

Drake programming

Nope, just kidding. But the name of the package is drake!

https://github.com/ropensci/drake

This is such an amazing package. I’ll create a separate post with more details about it, so wait for that!

Drake is a package created as a general-purpose workflow manager for data-driven tasks. It rebuilds intermediate data objects when their dependencies change, and it skips work when the results are already up to date.

Also, not every run-through starts from scratch, and completed workflows have tangible evidence of reproducibility.

Reproducibility, good management, and tracking experiments are all necessary for easily testing others’ work and analysis. It’s a huge deal in Data Science, and you can read more about it in an article by Zach Scott and in one by me :)

With drake, you can automatically:

- Launch the parts that changed since last time.

- Skip the rest.
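The core idea (rebuild a result only when something it depends on has changed, otherwise reuse the stored value) can be sketched in a few lines of base R. This is a toy illustration, not drake's API or implementation: `run_target()`, `cache`, and `build_count` are hypothetical names, and where drake analyzes your code and hashes dependencies, this sketch simply compares the stored inputs and function with `identical()`.

```r
# Toy "make": rebuild a target only when its inputs or its function change.
# Hypothetical sketch; drake itself relies on code analysis and hashing.
cache <- new.env()
build_count <- 0

run_target <- function(name, command, inputs) {
  cached <- cache[[name]]
  if (!is.null(cached) &&
      identical(cached$command, command) &&
      identical(cached$inputs, inputs)) {
    return(cached$value)                 # up to date: skip the work
  }
  value <- do.call(command, inputs)      # new or outdated: (re)build
  build_count <<- build_count + 1
  cache[[name]] <- list(command = command, inputs = inputs, value = value)
  value
}

fit_model <- function(data) lm(mpg ~ wt, data = data)

m1 <- run_target("model", fit_model, list(data = mtcars))   # built
m2 <- run_target("model", fit_model, list(data = mtcars))   # skipped, reused
heavier <- transform(mtcars, wt = wt * 1.1)
m3 <- run_target("model", fit_model, list(data = heavier))  # rebuilt
build_count
```

`build_count` ends at 2: the second call finds identical inputs and returns the cached model, while the third sees changed data and refits.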

Installation

```r
# Install the latest stable release from CRAN.
install.packages("drake")

# Alternatively, install the development version from GitHub.
install.packages("devtools")
library(devtools)
install_github("ropensci/drake")
```

There are some known errors when installing from CRAN. For more on these errors, see The drake R Package User Manual.

I ran into one of these errors myself, so for now I recommend installing the package from GitHub.

Ok, so let’s reproduce a simple example with a twist:

To drake’s main example, I added a simple plot of the linear model. With this code, you’re creating a plan for executing your whole project.

First, we read the data. Then we prepare it for analysis, create a simple histogram, calculate the correlation, fit the model, plot the linear model, and finally create an R Markdown report.

The code I used for the final report is here:

If we change some of our functions or analysis and then execute the plan again, drake detects what has changed and reruns only the affected steps (and anything that depends on them), skipping everything that is still up to date. It also creates a graph so you can see what’s happening:

Graph for analysis

In RStudio, this graph is interactive, and you can save it to HTML for later analysis.
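That graph is what lets drake decide which steps are outdated: a change to one target invalidates it and everything downstream of it. Here is a small base R sketch of that propagation, using a hypothetical dependency list for the steps described above. The target names and the `outdated_targets()` helper are made up for illustration; they are not drake's API or the names in the author's plan.

```r
# Hypothetical dependency graph: each target lists the targets it uses
deps <- list(
  raw_data    = character(0),
  data        = "raw_data",
  hist        = "data",
  correlation = "data",
  fit         = "data",
  plot        = c("data", "fit"),
  report      = c("hist", "correlation", "fit", "plot")
)

# Everything downstream of the changed targets must be rebuilt;
# all other targets can be skipped.
outdated_targets <- function(deps, changed) {
  outdated <- changed
  repeat {
    # targets that depend on something already marked as outdated
    hit <- vapply(deps, function(d) any(d %in% outdated), logical(1))
    newly <- setdiff(names(deps)[hit], outdated)
    if (length(newly) == 0) break
    outdated <- c(outdated, newly)
  }
  names(deps)[names(deps) %in% outdated]  # report in plan order
}

outdated_targets(deps, "fit")
outdated_targets(deps, "raw_data")
```

Changing `fit` marks only `fit`, `plot`, and `report` as outdated, while changing `raw_data` invalidates every target in the plan.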

There are more awesome things that you can do with drake that I’ll show in a future post :)

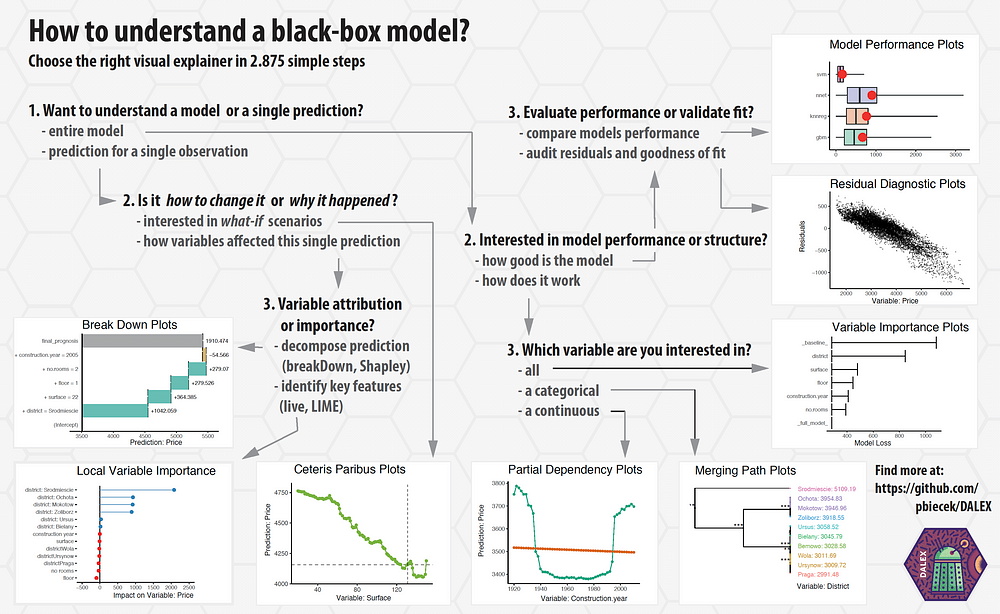

1. DALEX — Descriptive mAchine Learning EXplanations

https://github.com/pbiecek/DALEX

Explaining machine learning models isn’t always easy, yet it’s very important for a range of business applications. Luckily, there are some great libraries that help us with this task.

(By the way, sometimes a simple visualization with ggplot can help you explain a model. For more on this, check out the awesome article by Matthew Mayo.)

In many applications, we need to know, understand, or prove how input variables are used in the model, and how they impact final model predictions. DALEX is a set of tools that helps explain how complex models work.
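One of the explanations DALEX offers is permutation-based variable importance: shuffle one variable to break its link with the outcome, then measure how much the model's loss increases. Below is a minimal base R sketch of that idea, not DALEX's implementation. `permutation_importance()` is a hypothetical helper, RMSE is the loss chosen for this sketch, and the `lm()` on mtcars is just a convenient example model.

```r
# Permutation variable importance in base R (a sketch, not DALEX's code)
rmse <- function(observed, predicted) sqrt(mean((observed - predicted)^2))

permutation_importance <- function(model, data, response, n_perm = 20) {
  baseline <- rmse(data[[response]], predict(model, newdata = data))
  features <- setdiff(all.vars(formula(model)), response)
  vapply(features, function(v) {
    losses <- replicate(n_perm, {
      shuffled <- data
      shuffled[[v]] <- sample(shuffled[[v]])  # break the link between v and the outcome
      rmse(data[[response]], predict(model, newdata = shuffled))
    })
    mean(losses) - baseline                   # average increase in RMSE
  }, numeric(1))
}

set.seed(42)
model <- lm(mpg ~ wt + cyl + hp, data = mtcars)
importance <- permutation_importance(model, mtcars, "mpg")
sort(importance, decreasing = TRUE)
```

Larger values mean the model leans more heavily on that variable. Because the method only needs `predict()`, it works the same way for any model type, which is what makes this kind of explanation model-agnostic.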

To install from CRAN, just run:

```r
install.packages("DALEX")
```

They have amazing documentation on how to use DALEX with different ML packages:

- How to use DALEX with caret

- How to use DALEX with mlr

- How to use DALEX with H2O

- How to use DALEX with xgboost package

- How to use DALEX for teaching. Part 1

- How to use DALEX for teaching. Part 2

- breakDown vs lime vs shapleyR

Great cheat sheets:

https://github.com/pbiecek/DALEX


Here’s an interactive notebook on Binder (mybinder.org) where you can learn more about the package.

And finally, there’s some book-style documentation on DALEX, machine learning, and explainability.

Check it out in the original repository, pbiecek/DALEX (https://github.com/pbiecek/DALEX), and remember to star it :)

Thanks to the amazing team at Ciencia y Datos for helping with these digests.

Thanks also for reading this. I hope you found something interesting here :) If these articles are helping you, please share them with your friends!

If you have questions, just follow me on Twitter and LinkedIn.

See you there :)

Bio: Favio Vazquez is a physicist and computer engineer working on Data Science and Computational Cosmology. He has a passion for science, philosophy, programming, and music. He is the creator of Ciencia y Datos, a Data Science publication in Spanish. He loves new challenges, working with a good team, and having interesting problems to solve. He is part of the Apache Spark collaboration, helping in MLlib, Core, and the Documentation. He loves applying his knowledge and expertise in science, data analysis, visualization, and machine learning to help the world become a better place.

Original. Reposted with permission.
