When Too Likely Human Means Not Human: Detecting Automatically Generated Text

Passably-human automated text generation is a reality. How do we best go about detecting it? As it turns out, being too predictably human may actually be a reasonably good indicator of not being human at all.

Passably-human automated text generation is a reality. Today's advanced language models are capable of generating short blocks of text that are indistinguishable from the human-crafted sort.

That's not to say there aren't issues with such technologies. Cherry-picked results can give the impression that these models fire on all cylinders and fool readers 100% of the time. This is not the case. However, most concerns surrounding text generation, particularly its more nefarious potential uses, are not particularly hindered by the need for cherry-picking, so robust methods for detecting automatically generated text are undoubtedly required.

A new project called GLTR (Giant Language Model Test Room, pronounced "glitter") takes a step in this direction. A recent blog post, Catching a Unicorn with GLTR, provides an introduction and some rationale:

[W]e need to develop forensic techniques to detect automatically generated text. We make the assumption that computer generated text fools humans by sticking to the most likely words at each position, a trick that fools humans. In contrast, natural writing actually more frequently selects unpredictable words that make sense to the domain. That means that we can detect whether a text actually looks too likely to be from a human writer!

Using OpenAI's recent GPT-2 language model, GLTR takes a piece of text as input and looks at which words GPT-2 would have predicted at every position. Each prediction can then be compared with the word actually observed at that position, and the analysis shows how predictable the observed words are. The idea is that the more uniformly predictable the observed words are, the less likely it is that they were strung together by a human, since humans tend to inject unpredictable words more frequently.
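To make the ranking idea concrete, here is a minimal sketch in Python. It does not use GPT-2 or GLTR's own code: a toy bigram counter stands in for the language model, and the names `train_bigram` and `observed_ranks` are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count next-word frequencies per word (a toy stand-in for GPT-2)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def observed_ranks(model, tokens):
    """Return the 1-based rank of each observed word among the model's
    predictions given the previous word, or None if it was never predicted."""
    ranks = []
    for prev, actual in zip(tokens, tokens[1:]):
        # Predictions ordered from most to least likely.
        ordered = [word for word, _ in model[prev].most_common()]
        ranks.append(ordered.index(actual) + 1 if actual in ordered else None)
    return ranks


# Assumed toy training text; a real detector would query a large language model.
model = train_bigram("the cat sat on the mat the cat ran".split())
print(observed_ranks(model, "the cat sat".split()))
print(observed_ranks(model, "the mat flew".split()))
```

A text whose words all sit at rank 1 or 2 is exactly the "too likely" case described above, while a word the model never predicted is the kind of surprise a human writer tends to supply.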

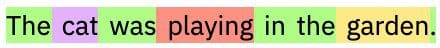

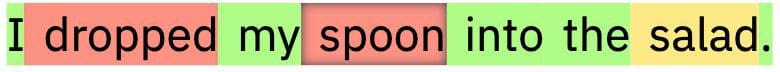

GLTR color-codes each word by likelihood:

A word that ranks within the most likely words is highlighted in green (top 10), yellow (top 100), red (top 1,000), and the rest of the words in purple.

Therefore, more purple words means less predictable. As in less predictably human. Which in turn means probably more human.
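The color scheme boils down to bucketing each word's rank. The sketch below assumes the ranks have already been computed by some language model; `color_for_rank` and `color_counts` are hypothetical helper names, not part of GLTR.

```python
from collections import Counter

# Thresholds taken from the description above: top 10, top 100, top 1,000.
BUCKETS = [(10, "green"), (100, "yellow"), (1000, "red")]


def color_for_rank(rank):
    """Map a 1-based rank to its highlight color."""
    for limit, color in BUCKETS:
        if rank <= limit:
            return color
    return "purple"  # everything outside the top 1,000


def color_counts(ranks):
    """Tally how many words fall into each color bucket."""
    return Counter(color_for_rank(rank) for rank in ranks)


# Made-up ranks for a short sentence, for illustration only.
print(color_counts([1, 7, 42, 5000]))
```

The more weight the tally puts on red and purple, the less predictable the text is to the model.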

For example, compare

with

A cat is always unpredictable.

Aside from the color coding, GLTR also provides the top 5 word predictions and their associated probabilities, and the position of the observed word.

Add in 3 different histograms (see below) which aggregate this information over the entire text and GLTR ends up painting a picture of how predictable the given piece of text is.

The first one demonstrates how many words of each category appear in the text. The second one illustrates the ratio between the probabilities of the top predicted word and the following word. The last histogram shows the distribution over the entropies of the predictions.
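The quantities behind the second and third histograms can be sketched for a single position as follows. This is an illustration under assumptions, not GLTR's implementation: the distribution is a made-up dictionary of word probabilities, "the following word" is read here as the word that actually follows in the text, and `position_stats` is a hypothetical name.

```python
import math


def position_stats(dist, observed):
    """For one position, return (ratio, entropy).

    dist     -- dict mapping each candidate word to its predicted probability
    observed -- the word that actually appears in the text
    ratio    -- probability of the observed word divided by that of the top word
    entropy  -- Shannon entropy of the prediction, in bits
    """
    p_top = max(dist.values())
    p_observed = dist.get(observed, 0.0)
    entropy = -sum(p * math.log2(p) for p in dist.values() if p > 0)
    return p_observed / p_top, entropy


# Made-up prediction for a single position.
prediction = {"mat": 0.5, "sofa": 0.25, "roof": 0.25}
ratio, entropy = position_stats(prediction, "sofa")
print(ratio, entropy)
```

A ratio close to 1 means the writer picked (nearly) the model's favorite word. Low entropy means the model was confident about what should come next. Collecting both values over every position gives the data for the two histograms.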

GLTR offers an online demo, a description of which is below.

Each text is analyzed by how likely each word would be the predicted word given the context to the left. If the actual used word would be in the Top 10 predicted words the background is colored green, for Top 100 in yellow, Top 1000 red, otherwise violet. Try some sample texts from below and see for yourself if you can spot the difference between machine generated text and human generated text or try your own. (Tip: hover over the words for more detail)

The battle against nefarious automatically generated text is just getting underway, but GLTR jump-starts the conversation about how best to identify such text. Check out the demo and give it a try for yourself.

And remember, stay unpredictable.
