I Asked ChatGPT, Claude and DeepSeek to Build Tetris

Which of these state-of-the-art models writes the best code?

Image by Author

# Introduction

It seems like almost every week, a new model claims to be state-of-the-art, beating existing AI models on all benchmarks.

I get free access to the latest AI models at my full-time job within weeks of release. I typically don’t pay much attention to the hype and just use whichever model is auto-selected by the system.

However, I know developers and friends who want to build software with AI that can be shipped to production. Since these initiatives are self-funded, their challenge lies in finding the best model to do the job. They want to balance cost with reliability.

So, after the release of GPT-5.2, I decided to run a practical test to see whether this model lived up to the hype and whether it was really better than the competition.

Specifically, I chose to test flagship models from each provider: Claude Opus 4.5 (Anthropic’s most capable model), GPT-5.2 Pro (OpenAI’s latest extended reasoning model), and DeepSeek V3.2 (one of the latest open-source alternatives).

To put these models to the test, I asked each one to build a playable Tetris game from a single prompt.

These were the metrics I used to evaluate the success of each model:

| Criteria | Description |
|---|---|
| First Attempt Success | With just one prompt, did the model deliver working code? Multiple debugging iterations lead to higher costs over time, which is why I chose this metric. |
| Feature Completeness | Did the model build every feature mentioned in the prompt, or did it leave anything out? |
| Playability | Beyond the technical implementation, was the game actually smooth to play, or were there issues that created friction in the user experience? |
| Cost-effectiveness | How much did it cost to get production-ready code? |

# The Prompt

Here is the prompt I entered into each AI model:

Build a fully functional Tetris game as a single HTML file that I can open directly in my browser.

Requirements:

GAME MECHANICS:

- All 7 Tetris piece types

- Smooth piece rotation with wall kick collision detection

- Pieces should fall automatically, increase the speed gradually as the user's score increases

- Line clearing with visual animation

- "Next piece" preview box

- Game over detection when pieces reach the top

CONTROLS:

- Arrow keys: Left/Right to move, Down to drop faster, Up to rotate

- Touch controls for mobile: Swipe left/right to move, swipe down to drop, tap to rotate

- Spacebar to pause/unpause

- Enter key to restart after game over

VISUAL DESIGN:

- Gradient colors for each piece type

- Smooth animations when pieces move and lines clear

- Clean UI with rounded corners

- Update scores in real time

- Level indicator

- Game over screen with final score and restart button

GAMEPLAY EXPERIENCE AND POLISH:

- Smooth 60fps gameplay

- Particle effects when lines are cleared (optional but impressive)

- Increase the score based on number of lines cleared simultaneously

- Grid background

- Responsive design

Make it visually polished and feel satisfying to play. The code should be clean and well-organized.
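Of these requirements, "wall kick collision detection" is the least self-explanatory. The sketch below is not code from any of the models; it is a minimal illustration in Python (rather than the in-browser code the prompt asks for) of the general idea: rotate a piece 90 degrees clockwise, and if the result would overlap a wall or a locked block, try a few sideways nudges before rejecting the rotation. The names `rotate_cw`, `collides` and `try_rotate`, and the `KICKS` offsets, are hypothetical and far simpler than the official Super Rotation System kick tables.

```python
from typing import List, Optional, Tuple

Cell = Tuple[int, int]   # (row, col) inside a piece's square bounding box
Board = List[List[int]]  # 0 = empty, non-zero = locked block

# Hypothetical, simplified column shifts tried in order when a rotation collides.
# Guideline Tetris games use the larger SRS kick tables instead.
KICKS = [0, -1, 1, -2, 2]


def rotate_cw(cells: List[Cell], size: int) -> List[Cell]:
    """Rotate cells 90 degrees clockwise inside a size x size bounding box."""
    return [(c, size - 1 - r) for r, c in cells]


def collides(board: Board, cells: List[Cell], top: int, left: int) -> bool:
    """True if any cell falls outside the board or overlaps a locked block."""
    rows, cols = len(board), len(board[0])
    for r, c in cells:
        br, bc = top + r, left + c
        if bc < 0 or bc >= cols or br >= rows:
            return True
        # Cells above the visible board (br < 0) are allowed while spawning.
        if br >= 0 and board[br][bc]:
            return True
    return False


def try_rotate(board: Board, cells: List[Cell], size: int,
               top: int, left: int) -> Optional[Tuple[List[Cell], int]]:
    """Rotate clockwise, trying each kick; return (new_cells, new_left) or None."""
    rotated = rotate_cw(cells, size)
    for dx in KICKS:
        if not collides(board, rotated, top, left + dx):
            return rotated, left + dx
    return None  # no kick fits, so the rotation is refused


# Usage: a vertical I-piece hugging the left wall of an empty 10x20 board.
board = [[0] * 10 for _ in range(20)]
vertical_i = [(0, 2), (1, 2), (2, 2), (3, 2)]  # column 2 of a 4x4 box
result = try_rotate(board, vertical_i, 4, 5, -2)  # box offset puts it in board column 0
print(result)  # ([(2, 3), (2, 2), (2, 1), (2, 0)], 0)
```

Here the unshifted rotation would poke two cells past the left wall, so the first four kicks fail and the piece is nudged two columns right; without kicks, the same rotation would simply be refused.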

# The Results

// 1. Claude Opus 4.5

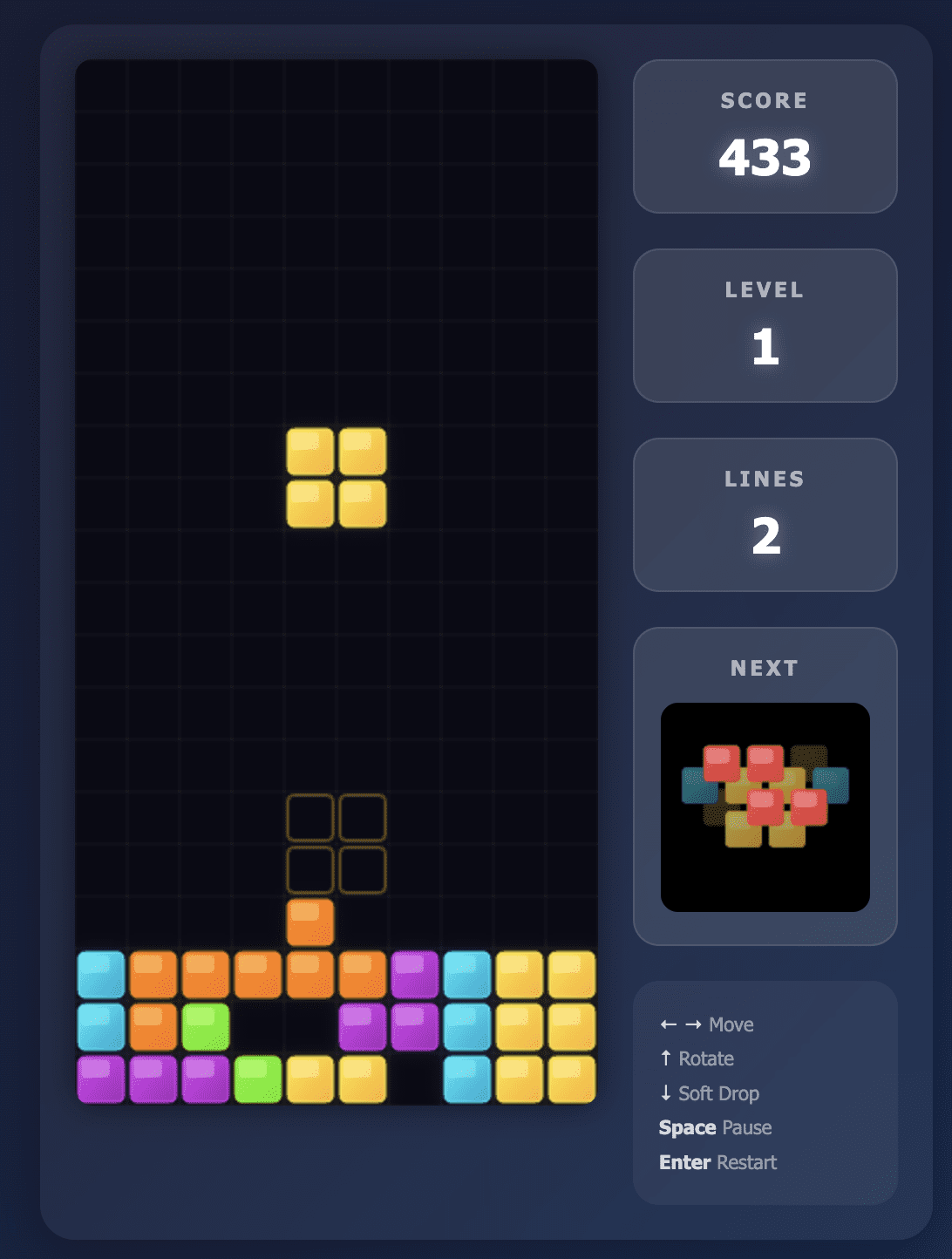

The Opus 4.5 model built exactly what I asked for.

The UI was clean and instructions were displayed clearly on the screen. All the controls were responsive and the game was fun to play.

The gameplay was so smooth that I actually ended up playing for quite some time and got sidetracked from testing the other models.

Opus 4.5 also produced this working game in under 2 minutes on the first try, which left me impressed.

Tetris game built by Opus 4.5

// 2. GPT-5.2 Pro

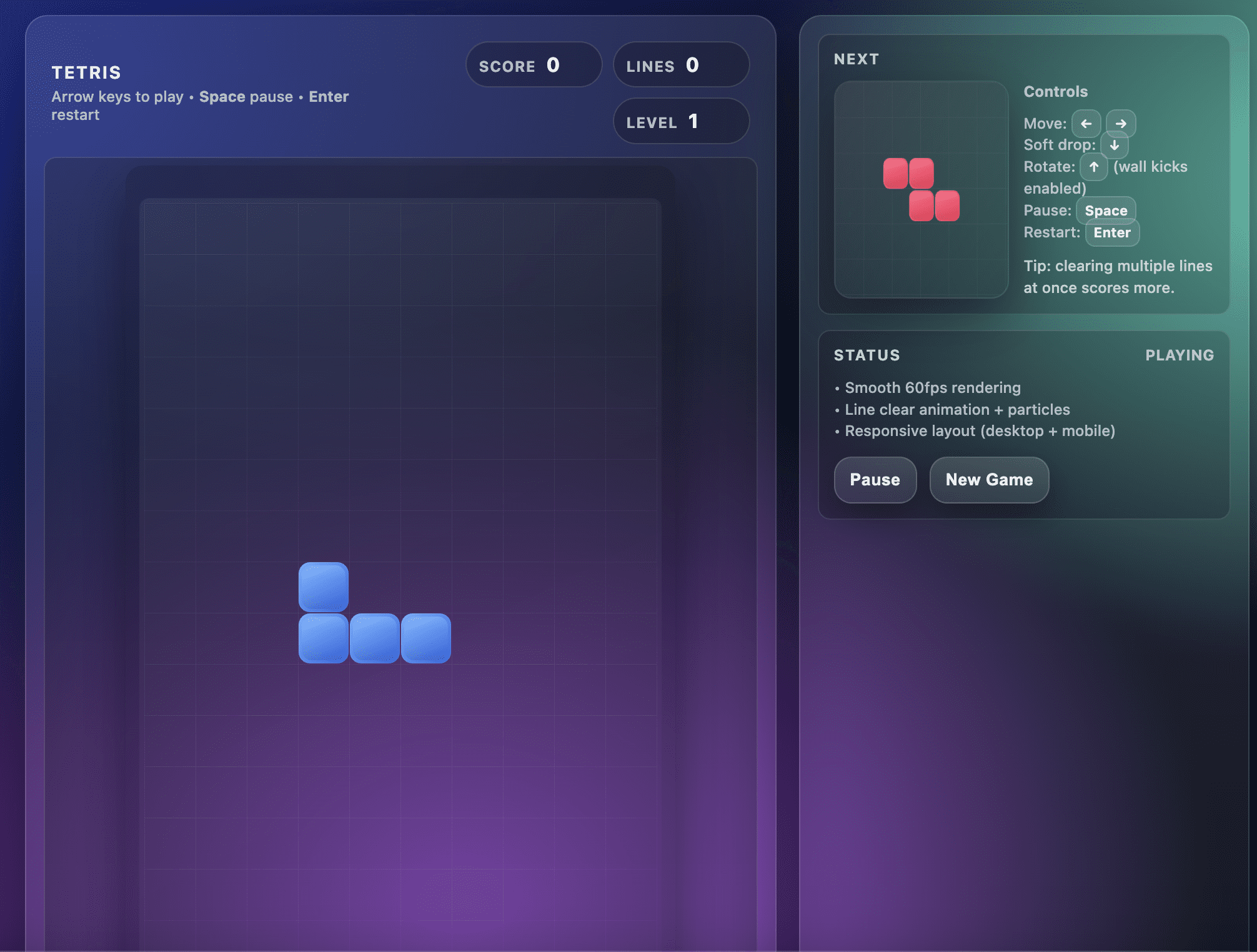

GPT-5.2 Pro is OpenAI’s latest model with extended reasoning. For context, GPT-5.2 has three tiers: Instant, Thinking, and Pro. At the time of writing, GPT-5.2 Pro is their most intelligent model, providing extended thinking and reasoning capabilities.

It is also about 4x as expensive as Opus 4.5 per input token and more than 3x as expensive per output token.

There was a lot of hype around this model, so I went in with high expectations.

Unfortunately, I was underwhelmed by the game this model produced.

On the first try, GPT-5.2 Pro produced a Tetris game with a layout bug. The bottom rows of the game were outside the viewport, and I couldn’t see where the pieces were landing.

This made the game unplayable, as shown in the screenshot below:

Tetris game built by GPT-5.2

I was especially surprised by this bug, since the model spent around 6 minutes producing this code.

I decided to try again with this follow-up prompt to fix the viewport problem:

The game works, but there's a bug. The bottom rows of the Tetris board are cut off at the bottom of the screen. I can't see the pieces when they land and the canvas extends beyond the visible viewport.

Please fix this by:

1. Making sure the entire game board fits in the viewport

2. Adding proper centering so the full board is visible

The game should fit on the screen with all rows visible.

After the follow-up prompt, the GPT-5.2 Pro model produced a functional game, as shown in the screenshot below:

Tetris second try by GPT-5.2

However, the gameplay wasn’t as smooth as in the game produced by Opus 4.5.

When I pressed the “down” arrow to make a piece drop faster, the next piece would sometimes plummet instantly, leaving me no time to think about how to position it.

The game ended up being playable only if I let each piece fall on its own, which wasn’t the best experience.

(Note: I tried the GPT-5.2 Standard model too, which produced similar buggy code on the first try.)

// 3. DeepSeek V3.2

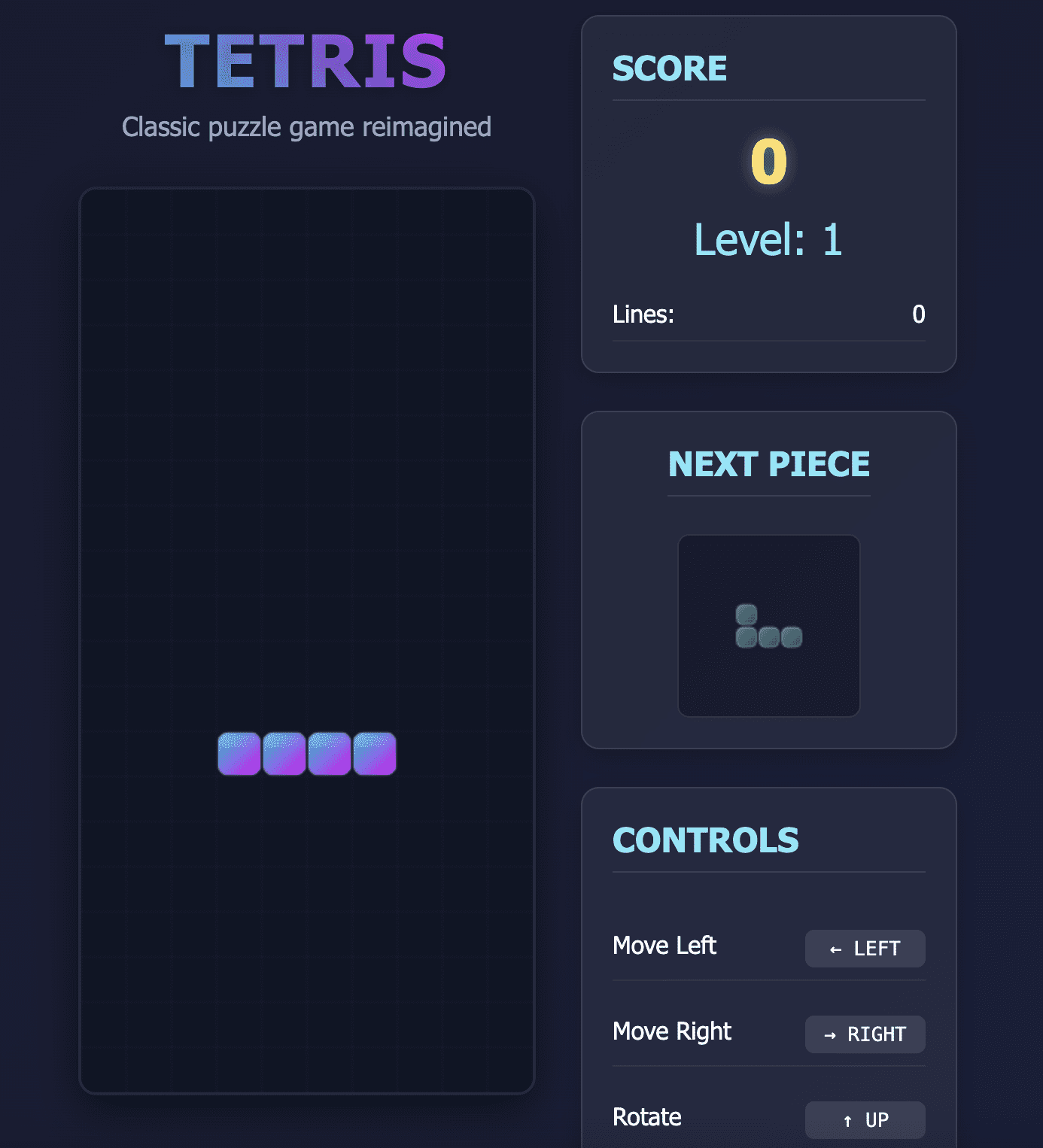

DeepSeek’s first attempt at building this game had two issues:

- Pieces started disappearing when they hit the bottom of the screen.

- The “down” arrow that’s used to drop the pieces faster ended up scrolling the entire webpage rather than just moving the game pieces.

Tetris game built by DeepSeek V3.2

I re-prompted the model to fix these issues, and the controls then worked correctly.

However, some pieces still disappeared before they landed. This made the game completely unplayable even after the second iteration.

I’m sure that this issue can be fixed with 2–3 more prompts, and given DeepSeek’s low pricing, you could afford 10+ debugging rounds and still spend less than one successful Opus 4.5 attempt.

# Summary: GPT-5.2 vs Opus 4.5 vs DeepSeek V3.2

// Cost Breakdown

Here is a cost comparison between the three models:

| Model | Input (per 1M tokens) | Output (per 1M tokens) |
|---|---|---|
| DeepSeek V3.2 | $0.27 | $1.10 |
| GPT-5.2 | $1.75 | $14.00 |
| Claude Opus 4.5 | $5.00 | $25.00 |
| GPT-5.2 Pro | $21.00 | $84.00 |

DeepSeek V3.2 is the cheapest alternative, and you can also download the model’s weights for free and run it on your own infrastructure.

GPT-5.2 costs roughly 6.5x as much as DeepSeek V3.2 per input token and nearly 13x as much per output token, and Opus 4.5 and GPT-5.2 Pro are more expensive still.

For this specific task (building a Tetris game), we consumed approximately 1,000 input tokens and 3,500 output tokens.

For each follow-up round, we estimated roughly 1,500 additional tokens. Here is the estimated total cost per model:

| Model | Total Cost | Result |
|---|---|---|
| DeepSeek V3.2 | ~$0.005 | Game isn't playable |
| GPT-5.2 | ~$0.07 | Playable, but poor user experience |
| Claude Opus 4.5 | ~$0.09 | Playable and good user experience |
| GPT-5.2 Pro | ~$0.41 | Playable, but poor user experience |
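These totals come from multiplying token counts by the per-million-token prices in the first table. The article only gives a 1,500-token estimate per follow-up round, so the sketch below assumes that splits into about 500 input and 1,000 output tokens; that split is my assumption, chosen because it reproduces the table's rounded figures. The round counts (one attempt for Opus 4.5, two for the others) come from the results above, and `estimate_cost` is a hypothetical helper name.

```python
# USD per 1M tokens (input, output), taken from the cost table above.
PRICES = {
    "DeepSeek V3.2": (0.27, 1.10),
    "GPT-5.2": (1.75, 14.00),
    "Claude Opus 4.5": (5.00, 25.00),
    "GPT-5.2 Pro": (21.00, 84.00),
}

FIRST_IN, FIRST_OUT = 1_000, 3_500  # tokens for the initial prompt and answer
EXTRA_IN, EXTRA_OUT = 500, 1_000    # ASSUMED split of the ~1,500 tokens per follow-up

# Attempts per model, from the results section.
ROUNDS = {"DeepSeek V3.2": 2, "GPT-5.2": 2, "Claude Opus 4.5": 1, "GPT-5.2 Pro": 2}


def estimate_cost(model: str, rounds: int) -> float:
    """Estimated USD cost of `rounds` attempts (first prompt plus follow-ups)."""
    price_in, price_out = PRICES[model]
    tokens_in = FIRST_IN + (rounds - 1) * EXTRA_IN
    tokens_out = FIRST_OUT + (rounds - 1) * EXTRA_OUT
    return (tokens_in * price_in + tokens_out * price_out) / 1_000_000


for model, rounds in ROUNDS.items():
    print(f"{model}: ${estimate_cost(model, rounds):.4f}")
```

Printed to four decimals, these come out to $0.0054, $0.0656, $0.0925 and $0.4095, which round to the ~$0.005, ~$0.07, ~$0.09 and ~$0.41 shown in the table.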

# Takeaways

Based on my experience building this game, I would stick to the Opus 4.5 model for day-to-day coding tasks.

Although GPT-5.2 is cheaper than Opus 4.5 per token, I personally would not use it for coding, since the extra iterations needed to reach the same result would likely cost about as much.

DeepSeek V3.2, however, is far more affordable than the other models on this list.

If you’re a developer on a budget and have time to spare on debugging, you will still end up saving money even if it takes you over 10 tries to get working code.
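To put rough numbers on these two claims, the sketch below counts how many follow-up rounds GPT-5.2 and DeepSeek V3.2 could run before their total exceeds a single Opus 4.5 attempt. It uses the prices from the cost table and the 1,000/3,500-token first attempt, plus an assumed 500 input and 1,000 output tokens per follow-up (my assumption, not a measured figure). Chat APIs typically resend the whole conversation as input on every turn, so real follow-ups get more expensive as a session grows; treat the counts as optimistic. `cost` and `follow_ups_within_budget` are hypothetical helper names.

```python
from typing import Tuple

# USD per 1M tokens (input, output), from the cost table above.
PRICES = {
    "DeepSeek V3.2": (0.27, 1.10),
    "GPT-5.2": (1.75, 14.00),
    "Claude Opus 4.5": (5.00, 25.00),
}

FIRST: Tuple[int, int] = (1_000, 3_500)  # input/output tokens, first attempt
EXTRA: Tuple[int, int] = (500, 1_000)    # ASSUMED input/output tokens per follow-up


def cost(model: str, tokens: Tuple[int, int]) -> float:
    """USD cost of the given (input, output) token counts for a model."""
    price_in, price_out = PRICES[model]
    return (tokens[0] * price_in + tokens[1] * price_out) / 1_000_000


def follow_ups_within_budget(model: str, budget: float) -> int:
    """Largest number of follow-up rounds whose total cost stays within budget."""
    remaining = budget - cost(model, FIRST)
    return max(0, int(remaining // cost(model, EXTRA)))


opus_budget = cost("Claude Opus 4.5", FIRST)  # one successful Opus 4.5 attempt
for model in ("GPT-5.2", "DeepSeek V3.2"):
    print(model, follow_ups_within_budget(model, opus_budget))
```

Under these assumptions it prints 2 for GPT-5.2 and 71 for DeepSeek V3.2: GPT-5.2 overtakes a single Opus 4.5 attempt on its third follow-up, while DeepSeek V3.2 stays cheaper for roughly 70 follow-ups, well past the 10 tries mentioned above.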

I was surprised by GPT-5.2 Pro’s inability to produce a working game on the first try, as it took around 6 minutes to think before coming up with flawed code. After all, this is OpenAI’s flagship model, and Tetris should be a relatively simple task.

However, GPT-5.2 Pro’s strengths lie in math and scientific research, and it is especially designed for problems that don’t rely on pattern recognition from training data. Perhaps this model is over-engineered for simple day-to-day coding tasks, and should instead be used when building something that is complex and requires novel architecture.

The practical takeaway from this experiment:

- Opus 4.5 performs best at day-to-day coding tasks.

- DeepSeek V3.2 is a budget alternative that delivers reasonable output, although it requires some debugging effort to reach your desired outcome.

- GPT-5.2 (Standard) didn’t perform as well as Opus 4.5, whereas GPT-5.2 (Pro) is probably better suited for complex reasoning than quick coding tasks like this one.

Feel free to replicate this test with the prompt I’ve shared above, and happy coding!

Natassha Selvaraj is a self-taught data scientist with a passion for writing. Natassha writes on everything data science-related, a true master of all data topics. You can connect with her on LinkedIn or check out her YouTube channel.