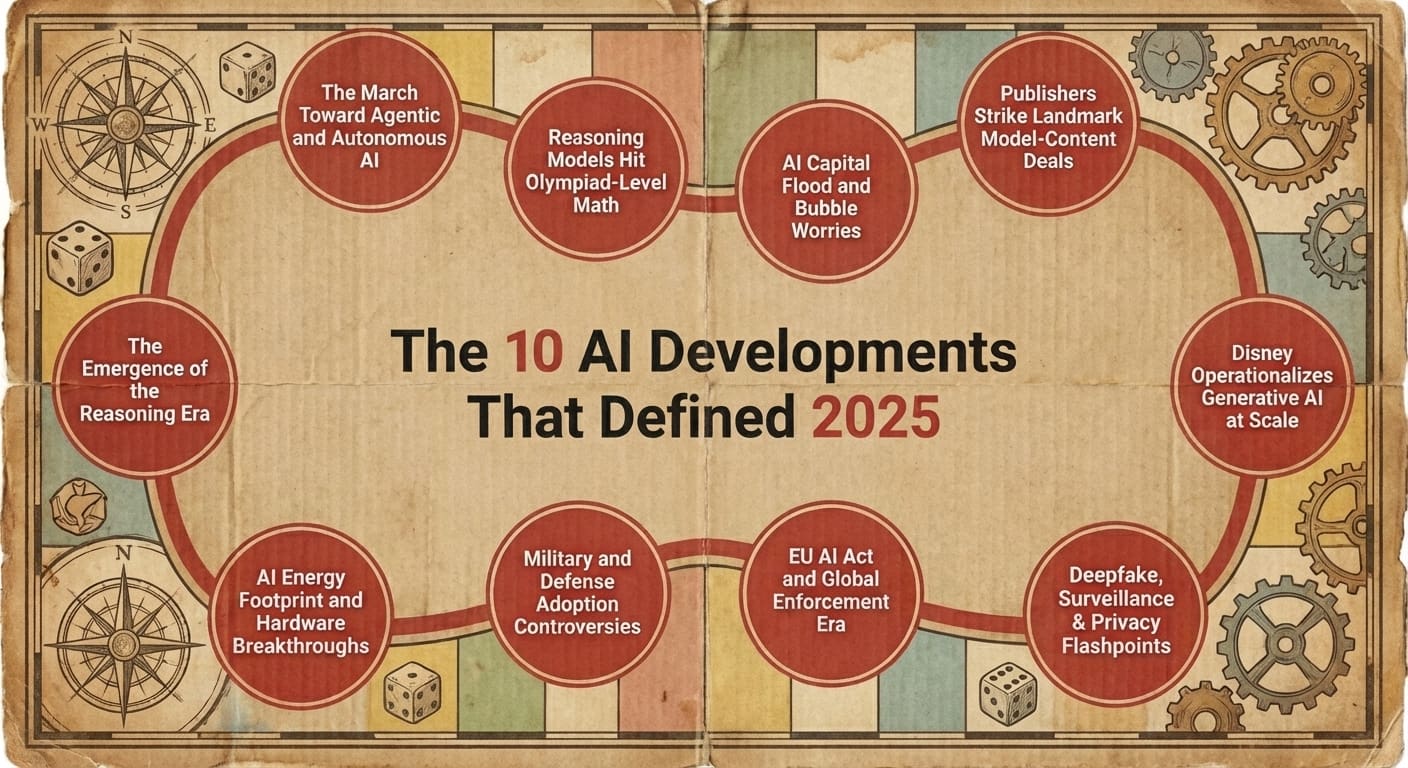

The 10 AI Developments That Defined 2025

In this article, I look back at what I would consider the ten most consequential, broadly impactful AI storylines of 2025, and draw some insight into where the field is going in 2026.

Image by Editor

# Introduction

As we look forward to what may transpire in AI this year, it's good to take stock of what happened in 2025. And a lot happened. It would be too much to go through everything, obviously, but focusing on ten of the top developments is certainly possible. The list is subjective, as quantifying and directly comparing such developments in any meaningful way would be impossible, but I believe it is representative of both the breadth and the impact of the AI stories of 2025.

While I would find it difficult to rank all ten, it's easier to pick out developments that are more clearly impactful than others. The first two are, for my money, the biggest stories of the year (and likely in the sequence presented for reasons that may be more clear after reading), while the remaining eight are presented in no particular order.

Without further ado, here are what I would consider the ten most consequential, broadly impactful AI storylines of 2025.

# 1. The Emergence of the "Reasoning Era"

Likely the biggest development in the LLM space this past year was the marked shift to what we could refer to as the reasoning era. Though reasoning models existed prior to 2025, the first major shock of the year came early, when DeepSeek released its R1 model in January, a reasoning model with performance comparable to the o1 model from OpenAI. This performance also came at a dramatically decreased computational cost, thanks in large part to the Group Relative Policy Optimization (GRPO) technique used for model training. This was also the event that arguably shot China into the lead with open source AI models.

The shift to the reasoning era was basically complete by August 7, 2025, when OpenAI released GPT‑5, widely framed as the year’s defining model launch and an event that emphasized the shift from "chat" LLMs to systems that arguably hit human-expert, or "PhD level," performance consistently on demanding benchmarks. GPT‑5 collapsed the earlier split between general‑purpose and "o‑series" reasoning models into a single system that can dynamically switch between fast responses and deep, tool‑using analysis. The launch re‑ignited geopolitical scrutiny of frontier models, export controls, and AI safety regimes, as governments began treating foundation models as strategic infrastructure rather than just cloud services.

# 2. The March Toward Agentic and Autonomous AI

If we skip ahead and look at the end of 2025 for contrast, AI had by this time moved from sidekick to core collaborator in many workplaces, powered in no small part by the increasingly capable reasoning models unleashed on us all year long. As the months passed, autonomous agents and orchestration frameworks quietly reshaped white-collar workflows across law, finance, software, and media.

Surveys and case studies pointed to substantial productivity gains as firms embedded AI into document review, coding, customer support, and sales operations, even as workers wrestled with job redesign, deskilling fears, and new expectations to manage "AI workforces." Commentators argued that historians will see 2025 as the year the foundations were laid for most people to eventually command networks of AI agents, rather than simply using isolated chatbots.

# 3. Reasoning Models Hit Olympiad‑Level Math

Given that 2025 was the year reasoning‑centric architectures moved from demo to dominance, it makes sense that models from OpenAI and Google DeepMind achieved gold medal‑equivalent scores on International Mathematical Olympiad‑style problems, while also producing publishable new math results. These systems, including variants of Gemini Pro and other "Deep Think"‑style reasoning models, showcased persistent, multi‑step "problem solving" that had eluded prior LLMs, and were quickly embedded into scientific and engineering workflows. The same capability sparked new safety concerns about self‑improving systems, as DeepMind used a reasoning model to optimize training of Gemini itself, raising questions about recursive improvement and oversight.

# 4. AI Capital Flood and Bubble Worries

AI startups and scale‑ups raised record amounts in 2025, with estimates running to roughly $150 billion in equity and debt financing, fuelling fears of a speculative bubble reminiscent of late‑stage dot‑com insanity. Mega‑rounds clustered around foundation‑model labs, agentic platform plays, and AI‑native semiconductor and datacenter companies, as investors chased "picks and shovels" exposure to the model arms race. Analysts and some regulators warned that capital concentration around a small set of players could amplify systemic risk, from power‑grid strain to talent hoarding, even as enterprise surveys showed AI finally driving material productivity gains in many sectors. The coming year will undoubtedly bring us closer to understanding whether this bubble pops sooner rather than later, and to what degree the effects ripple through both the industry and the greater economy.

# 5. Publishers Strike Landmark Model‑Content Deals

Throughout 2025, newsrooms and media groups cut high‑profile licensing and product‑integration deals with OpenAI, Google, Microsoft, and Meta, marking a pivot from 2023–24’s copyright wars toward cash‑and‑data détente. Early in the year, organizations like Axios and the Associated Press expanded agreements allowing their archives to train or ground AI models, while later deals gave tech platforms rights to use full‑text news content in AI‑powered news products and assistants. These partnerships, which often bundled co‑developed AI tools for reporters, fueled debate inside journalism about dependence on AI platforms even as they offered new revenue at a time of severe ad‑market pressure. The mother of all of these agreements may well have been the Disney/OpenAI deal, which coincides with the following development.

# 6. Disney Operationalizes Generative AI at Scale

In late December, The Walt Disney Company confirmed it was embedding generative AI across its core operations, moving beyond pilot projects into end‑to‑end support for content development, post‑production and theme‑park guest personalization. The initiative, built around centralized internal AI platforms trained on Disney’s vast IP catalog, was pitched as a way to streamline creative workflows while tightly controlling brand integrity and copyright risk.

This came at the same time as Disney’s partnership with OpenAI, which sets the stage for limited use of Disney intellectual property in OpenAI services, specifically video generation. In return, Disney becomes a corporate partner, leveraging OpenAI’s tech to build internal tools and consumer experiences. Disney producing AI-generated entertainment is no longer far off in the future. This is confirmation that generative models have matured enough to become part of the industrial backbone of a major entertainment conglomerate, not just an experimental storytelling toy. The future of entertainment is upon us.

# 7. Deepfake, Surveillance & Privacy Flashpoints

2025 saw generative AI collide with civil liberties flashpoints, from a wave of political deepfakes in global election cycles to renewed controversy over biometric surveillance. Amazon’s December rollout of Familiar Faces, an AI‑powered facial recognition feature for Ring doorbells in the US, drew criticism from lawmakers and privacy advocates alarmed at normalizing neighborhood‑scale facial tracking.

In parallel, new open‑source and commercial image/video generators capable of realistic, explicit content turbocharged abuse potential, forcing platforms and regulators to grapple with non‑consensual deepfakes and content‑filtering mandates. AI-generated videos are being used at an unprecedented rate in political and other public misinformation campaigns. Users' language model prompts have been leaked. AI is modifying codebases in unauthorized ways and outright deleting data. Major LLMs are intentionally behaving badly. Chatbots have been linked to teen suicides.

There are an awful lot of individual stories in this broader development category, and Crescendo has a great roundup of many of them. I encourage you to check it out if this space interests you.

# 8. EU AI Act and Global Enforcement Era

By late 2025, the European Union’s AI Act had moved from negotiation to implementation, cementing its tiered risk framework, which ranges from "unacceptable risk" bans (such as certain biometric surveillance) to strict obligations for high‑risk systems. This set a de facto global standard, as multinational firms adapted products and documentation to the EU rulebook and aligned with NIST- and ISO‑style governance requirements across jurisdictions.

At the same time, US state initiatives (such as California’s frontier‑model safety rules), China’s sector‑specific generative content controls, and emerging regimes in India and Canada signaled a broader shift from voluntary guidelines to enforceable AI compliance. You can check the Global AI Law and Policy Tracker for more information.

# 9. Military and Defense Adoption Controversies

In December 2025, the US military’s decision to integrate xAI’s Grok chatbot into a Pentagon AI platform ignited a storm over reliability, bias, and the appropriateness of consumer‑grade conversational systems in national security contexts. Critics warned that Grok’s notoriously irreverent tone and more generalized hallucination risks were at odds with the demands of command‑and‑control environments, even as defense officials framed the move as part of a broader AI modernization push. The episode capped a year of escalating concern about military AI, from autonomous targeting debates to alliances investing heavily in battlefield automation and decision‑support agents.

# 10. AI Energy Footprint and Hardware Breakthroughs

As AI workloads exploded, attention predictably swung to their environmental and grid impacts, with new estimates highlighting the enormous energy demands of frontier‑model training and inference.

In September 2025, University of Florida researchers announced a photonic‑computing chip that performs key AI computations using light instead of electricity, promising drastically lower energy consumption with near‑perfect accuracy on benchmark tasks. The work joined a wave of specialized AI hardware — optical, neuromorphic, and domain‑specific accelerators — positioned as essential to sustaining AI growth without unsustainable power and cooling requirements. More positive developments like this one will be welcomed in 2026, should they come to fruition.

# Looking Ahead

What insights for the future of AI can we glean from the top developments of last year?

First, the symbiosis between the increased "reasoning" capabilities of models and the abilities of agentic and autonomous AI cannot be ignored. As models improve, so too will the possibilities of what can be tackled competently with agents and automation. This is of particular note not only because of the convenience these technologies will bring, but also because of the threat they pose to human workers.

Second, regulation is not going anywhere. This means that explainability, and an understanding of what's going on inside AI systems, will grow in importance from societal, regulatory, and accuracy points of view.

Third, reality versus slop is a concern that will not be disappearing. This theme was present in numerous developments of the past year, often as an implicit undercurrent. Studies suggest that more than 50% of articles and more than 20% of videos currently being created are AI-generated. In 2026, the current generative AI wave must prove beyond a reasonable doubt that it is capable of more than just creating slop.

# Final Thoughts

Taken together, the defining AI developments of 2025 describe a field that has crossed an important threshold: AI is no longer primarily experimental, peripheral, or novelty-driven, but increasingly infrastructural, consequential, and entangled with economic, political, and cultural systems. The year showed both extraordinary technical progress and mounting pressure to confront second-order effects, from labor disruption and capital concentration to trust, energy use, and governance.

As we move into 2026, the central question is no longer whether AI will advance, but whether its deployment can mature at the same pace as its capabilities. How well institutions, companies, and societies adapt to that challenge will determine whether the next phase of AI feels like a durable transformation or an unstable acceleration still searching for its equilibrium.

If 2026 closes with a general societal view that AI equals slop, it may be a losing battle to stop the bubble from bursting.

Matthew Mayo (@mattmayo13) holds a master's degree in computer science and a graduate diploma in data mining. As managing editor of KDnuggets & Statology, and contributing editor at Machine Learning Mastery, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, language models, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.