Goodhart’s Law for Data Science and what happens when a measure becomes a target?


When developing analytics and algorithms around a business target, unintended biases can creep in that produce the desired numbers without the desired outcome. Keeping multiple metrics in mind as you work can help you avoid these consequences of Goodhart's Law.

By Jamil Mirabito, U. of Chicago & NYC Flatiron School.

In 2002, President Bush signed into law No Child Left Behind (NCLB), an education policy requiring all schools that receive public funding to administer an annual standardized assessment to their students. One stipulation of the law required schools to make adequate yearly progress (AYP) on these assessments (i.e., third grade students taking an assessment in the current year had to perform better than the third grade students in the previous year’s cohort). Schools that repeatedly failed to meet AYP requirements faced drastic consequences, including restructuring and closure. As a result, many district administrators developed internal policies requiring teachers to raise their students’ test scores, and used those scores as a metric for teacher quality. Eventually, with their jobs on the line, teachers began to “teach to the test.” A policy of this sort also inadvertently incentivized cheating, so that teachers and whole school systems could maintain necessary funding. One of the most prominent cases of alleged cheating was the Atlanta Public Schools cheating scandal.

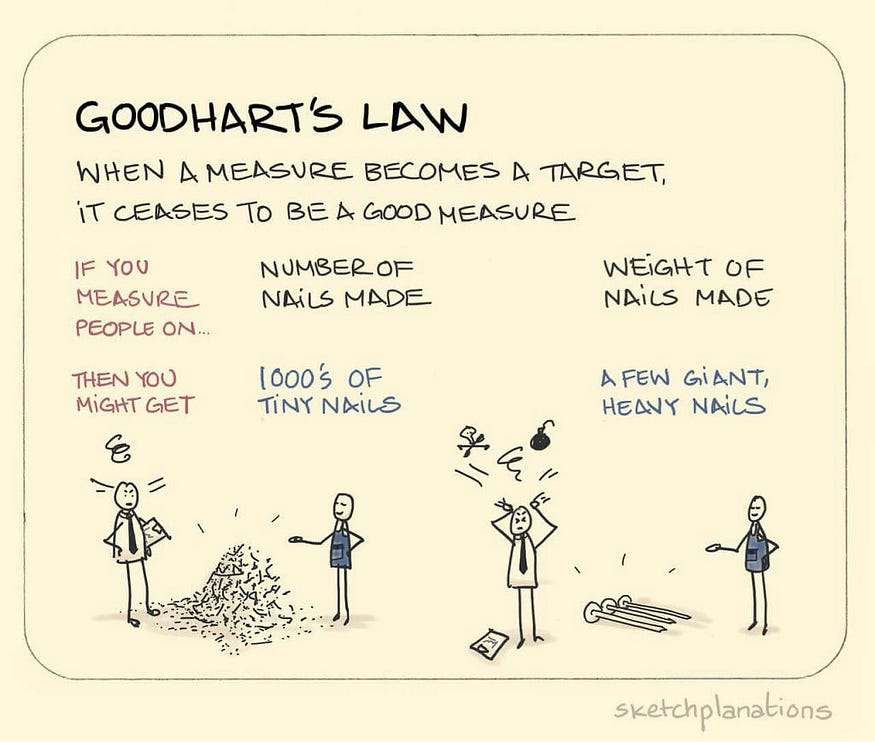

Unintended consequences of this sort are very common. The underlying idea is credited to Charles Goodhart, a British economist, and is most often quoted as: “When a measure becomes a target, it ceases to be a good measure.” This principle, known as Goodhart’s Law, applies to many real-world scenarios beyond social policy and economics.

Source: Jono Hey, Sketchplanations (CC BY-NC 3.0).

Another commonly cited example is a call center manager setting a target to increase the number of calls taken at the center each day. Eventually, call center employees increase their numbers at the cost of actual customer satisfaction. In observing employees’ conversations, the manager notices that some employees are rushing to end the call without ensuring that the customer is fully satisfied. This example, as well as the accountability measures of No Child Left Behind, stresses one of the most important elements of Goodhart’s Law — targets can and will be gamed.

Source: Szabo Viktor, Unsplash.

The threat of gaming is greater still for AI and machine learning models, which can be susceptible to gaming and intrusion. A 2019 analysis of 84,695 YouTube videos found that a video by Russia Today, a state-owned media outlet, had been recommended by over 200 channels, far more recommendations than the average video on YouTube receives. The findings suggested that Russia had, in some way, gamed YouTube’s algorithm to propagate false information on the internet. The problem is exacerbated by the platform’s reliance on viewership as a metric for user satisfaction. This created the unintended consequence of incentivizing conspiracy theories about the unreliability and dishonesty of major media institutions, so that users would continue to source their information from YouTube.

“The question before us is the ethics of leading people down hateful rabbit holes full of misinformation and lies at scale just because it works to increase the time people spend on the site — and it does work.” — Zeynep Tufekci

So what can be done?

To minimize unintended consequences, it’s important to think critically about how desired outcomes are measured and achieved. A large part of this is not relying too heavily on a single metric. Understanding how a combination of variables influences a target variable or outcome helps to put the data in context. Chris Wiggins, Chief Data Scientist at the New York Times, provides four useful steps for creating ethical computer algorithms to avoid harmful outcomes:

- Start by defining your principles. I’d suggest [five in particular], which are informed by the collective research of the authors of the Belmont and Menlo reports on ethics in research, augmented by a concern for the safety of the users of a product. The choice is important, as is the choice to define, in advance, the principles which guide your company, from the high-level corporate goals to the individual product key performance indicators (KPIs) [or metrics].

- Next: before optimizing a KPI, consider how this KPI would or would not align with your principles. Now document that and communicate, at least internally if not externally, to users or simply online.

- Next: monitor user experience, both quantitatively and qualitatively. Consider what unexpected user experiences you observe and how, irrespective of whether your KPIs are improving, your principles are challenged.

- Repeat: these conflicts are opportunities to learn and grow as a company: how do we re-think our KPIs to align with our objectives and key results (OKRs), which should derive from our principles? If you find yourself saying that one of your metrics is the “de facto” goal, then you’re doing it wrong.
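As a rough illustration of the monitoring step, the sketch below pairs a target KPI with a few "guardrail" metrics and flags any guardrail that gets worse while the target improves. It is only one possible approach, not something taken from Wiggins's steps: the `Metric` class, the `guardrail_warnings` function, the 2% tolerance, and the call center numbers are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Metric:
    """A metric observed before and after a change (hypothetical structure)."""
    name: str
    before: float
    after: float
    higher_is_better: bool = True

    def gain(self) -> float:
        """Relative change, signed so that a positive value means 'got better'.

        Assumes `before` is non-zero.
        """
        change = (self.after - self.before) / abs(self.before)
        return change if self.higher_is_better else -change


def guardrail_warnings(target: Metric, guardrails: List[Metric],
                       tolerance: float = 0.02) -> List[str]:
    """Names of guardrails that worsened by more than `tolerance`
    while the target metric improved."""
    if target.gain() <= 0:
        return []  # the target did not improve, so nothing was "bought" at a cost
    return [g.name for g in guardrails if g.gain() < -tolerance]


# Made-up numbers echoing the call center example above.
calls = Metric("calls_per_day", before=40, after=52)
guardrails = [
    Metric("satisfaction_score", before=4.4, after=3.9),
    Metric("repeat_call_rate", before=0.10, after=0.16, higher_is_better=False),
    Metric("avg_hold_minutes", before=3.0, after=3.0, higher_is_better=False),
]

flagged = guardrail_warnings(calls, guardrails)
print(flagged)
```

With these numbers, the satisfaction score and the repeat call rate are flagged, while the unchanged hold time is not. A check like this does not replace qualitative observation of the user experience; it only makes the trade-off between the target and the other metrics visible.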

Original. Reposted with permission.

Bio: Jamil Mirabito is a Project Manager at the Poverty Lab at the University of Chicago and a Data Science Student at Flatiron School in NYC.
