XGBoost: What it is, and when to use it

XGBoost is a tree based ensemble machine learning algorithm which is a scalable machine learning system for tree boosting. Read more for an overview of the parameters that make it work, and when you would use the algorithm.

By Harish Krishna, Praxis Business School

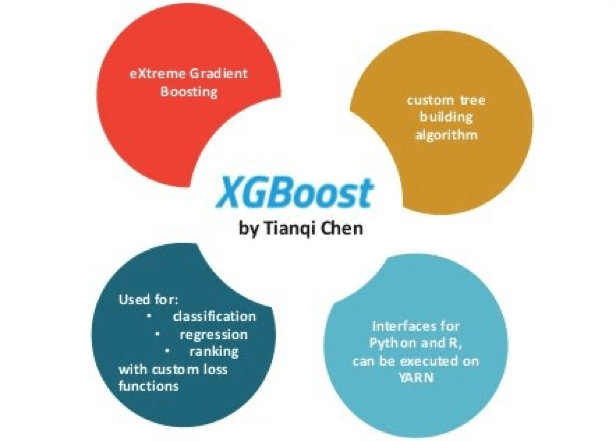

Let us set the basis for understanding the basic components of boosting in the first part of this discussion on XGBoost. Next let us see how Gradient Boosting is improvised to make it Extreme. XGBoost is a tree based ensemble machine learning algorithm which is a scalable machine learning system for tree boosting. XGBoost stands for Extreme Gradient Boosting. It uses more accurate approximations to find the best tree model.

Boosting: N new training data sets are formed by random sampling with replacement from the original dataset, during which some observations may be repeated in each new training data set. The observations are weighted and therefore some of them may get selected in the new datasets more often.

Bagging: N new training data sets are formed by random sampling with replacement from the original dataset, where each observation has the same probability to appear in a new data set.

Gradient Boosting: It is an additive and sequential model where trees are grown in sequential manner which converts weak learners into strong learners by adding weights to the weak learners and reduce weights of the strong learners. So each tree learns and boosts from the previous tree grown.

The following are the improvisation done on existing Gradient Boosting framework.

Regularized Learning of Objective function: It uses second order partial derivatives as approximation of loss function to provide more information about the direction of the gradient and the way to get to the minimum of the loss function. This smoothens the final learnt weights and avoids Over-fitting.

Shrinkage and Column Sub-sampling: Shrinkage scales newly added weights by a factor ƞ after each step of tree boosting which reduces the influence of each individual tree and leaves space for future tree to improve the model. This avoids Over-fitting.

Column Sub-sampling is column-wise sampling (sampling the predictor for build the tree). It is the subsample ratio of columns (colsample_bytree, colsample_bylevel, colsample_bynode). It can Increase the computational speed of parallel algorithm and Avoids Over-fitting.

Approximate Greedy Algorithm: The decision to stop growing using threshold that gives the largest gain is made without knowing about how the leaves will be split later. This is known as Greedy Algorithm. It has to check every possible threshold which is time consuming too. Approximate Greedy Algorithm overcomes this tiring process by, dividing the data into Quantiles or uses quantiles as candidate thresholds to split. By default, it uses about 33 quantiles.

Weighted Quantile Sketch & Parallel Learning: Since XGBoost works well on large data sets, it is difficult to store, sort and measure the quantities of this dataset in a single device. The SKETCH algorithm is used here to provide faster approximations. A big data sketch is a small data structure which allows some characteristics of the original data to be calculated or approximated. A large dataset is broken into small parts and placed on a network on various computers.In order to construct a rough histogram, Quantile Sketch combines the data on each device. For calculating approximate quantiles, this rough histogram will be used. The weighted quantile sketch incorporates the data into an estimated histogram, then the histogram is split into weighted quantities that allow smaller quantiles with low confidence predictions.

Sparsity Aware Split Finding: XGBoost can handle missing values in independent variables. Even with missing values its residuals can be calculated. First XGBoost calculates the residuals of all observations then split the data into two tables one with all observations without missing values and the other has observations with missing values. Then it sorts low to high based on the attribute in the table where there are no missing values. It calculates the average of the attributes values in a quartile if the data is huge and uses it as candidate threshold. Then it splits based on the threshold and put all its corresponding residual values in the leaves respectively. It puts the missing value’s residuals into leaf having residuals of observations below threshold to calculate Gain left. Then it puts the missing value’s residuals into leaf having residuals of observations greater than threshold to calculate Gain Right. This repeated on all quantiles and finds which has largest gain overall and decides the default path based on that respective threshold. So whenever an observation with missing values comes in, it takes the default path and split is done. This how it handles missing values on its own.

Cache – Aware Access (Prefetching): Each computer has CPU and which has a small amount of Cache memory. The CPU can use this memory faster than any other memory in computer. It allocates an internal buffer in each thread, to fetch gradient statistics into it, and it performs accumulation in mini-bath manner. This can reduce the runtime when dataset is big.

Blocks for Out-of-core Computation: Two techniques are used to improve the out-of-core computation. The aim is to fully utilize the resources of a machine to achieve scalable learning.

Block Compression: Data is divided into multiple blocks and stored into disks. These blocks are compressed by columns and decompressed by independent threads when loading into main memory. This reduces the disk reading cost.

Block Sharing: When multiple disks are available and data is shared onto multiple disks. A pre-fetcher thread is assigned to each disk and fetches the data into in-memory buffer. These threads then alternatively read the data from each buffer. It can reduce the throughput of disk reading.

Important Parameters of XGBoost

Booster: (default=gbtree)

It is based one the type of problem (Regression or Classification)

gbtree/dart – Classification , gblinear – Regression.

nthreads: (default – it is set maximum number of threads available)

Number of parallel threads needed to run XGBoost.

early_stopping_rounds :

XGBoost supports early stopping after a fixed number of iterations.

In addition to specifying a metric and test dataset for evaluation each epoch, you must specify a window of the number of epochs over which no improvement is observed. This is specified in the early_stopping_rounds parameter.

eta ƞ (default = 0.3, alias : learning_rate, range : [0,1])

Step size shrinkage used in update to prevents overfitting. After each boosting step, we can directly get the weights of new features, and eta shrinks the feature weights to make the boosting process more conservative.

gamma (default=0, alias: min_split_loss, range: [0,∞])

Minimum loss reduction required to make a further partition on a leaf node of the tree. The larger gamma is, the more conservative the algorithm will be.

Column subsampling:

- colsample_bytree: is the subsample ratio of columns when constructing each tree. Subsampling occurs once for every tree constructed.

- colsample_bylevel:is the subsample ratio of columns for each level. Subsampling occurs once for every new depth level reached in a tree. Columns are subsampled from the set of columns chosen for the current tree.

- colsample_bynode: is the subsample ratio of columns for each node (split). Subsampling occurs once every time a new split is evaluated. Columns are subsampled from the set of columns chosen for the current level.

max_depth (default=6, range: [0,∞])

Maximum depth of a tree. Increasing this value will make the model more complex and more likely to over-fit. 0 is only accepted in lossguided growing policy when tree_method is set as hist and it indicates no limit on depth.

min_child_weight (default=1, range: [0,∞])

Minimum sum of instance weight (hessian) needed in a child. If the tree partition step results in a leaf node with the sum of instance weight less than min_child_weight, then the building process will give up further partitioning. In linear regression task, this simply corresponds to minimum number of instances needed to be in each node. The larger min_child_weight is, the more conservative the algorithm will be.

lambda (default=0, alias: reg_lambda)

L2 regularization term on weights. Increasing this value will make model more conservative. Normalised to number of training examples.

alpha (default=0, alias: reg_alpha)

L1 regularization term on weights. Increasing this value will make model more conservative. Normalised to number of training examples.

early_stopping_rounds

XGBoost supports early stopping after a fixed number of iterations. You must define a window for the number of epochs over which no change is observed, in addition to specifying a metric and test dataset for evaluation for each epoch.

Learning Task Parameters:

The metric to be used for validation data. The default values are rmse for regression and error for classification.

Typical values are:

- rmse – root mean square error.

- mae – mean absolute error.

- logloss – negative log-likelihood.

- error – Binary classification error rate (0.5 threshold).

- merror – Multiclass classification error rate.

- mlogloss – Multiclass logloss.

- auc – Area under the curve.

When to Use XGBoost?

Consider using XGBoost for any supervised machine learning task when satisfies the following criteria:

- When you have large number of observations in training data.

- Number features < number of observations in training data.

- It performs well when data has mixture numerical and categorical features or just numeric features.

- When the model performance metrics are to be considered.

Summary

XGBoost is a tree based ensemble machine learning algorithm which has higher predicting power and performance and it is achieved by improvisation on Gradient Boosting framework by introducing some accurate approximation algorithms. XGB commonly used and frequently makes its way to the top of the leaderboard of competitions in data science. XGBoost – Greatly Boosted.

Bio: is pursuing Data Science at Praxis Business School.

Related:

- Comparing Decision Tree Algorithms: Random Forest® vs. XGBoost

- Time Series Classification Synthetic vs Real Financial Time Series

- Many Heads Are Better Than One: The Case For Ensemble Learning