DataOps: 5 things that you need to know

DataOps (Data Operations) has taken on a critical role in the age of big data in driving business outcomes. This process-oriented, agile methodology combines DevOps practices with the skills of data engineers and data scientists to support data-focused workloads in enterprises. Here is a detailed look at DataOps.

By Sigmoid

1. What is DataOps?

In simple terms, DataOps can be defined as a methodology that brings speed and agility to data pipelines, thereby improving data quality and delivery practices. DataOps enables greater collaboration within organizations and drives data initiatives at scale. With the help of automation, DataOps improves the availability, accessibility, and integration of data. It brings people, processes, and technology together to deliver reliable, high-quality data to all stakeholders. Rooted in the agile methodology, DataOps aims to offer an optimal consumer experience through continuous delivery of analytic insights.

2. How is DataOps different from DevOps?

DataOps is often considered DevOps applied to data analytics. However, DataOps is more than just that. It also combines key capabilities of data engineers and data scientists to offer a robust, process-driven structure to data-focused enterprises. DevOps combines software development and IT operations to ensure continuous delivery in the systems development lifecycle, whereas DataOps also brings in the niche capabilities of key contributors in the data chain (data developers, data analysts, data scientists, and data engineers) to ensure greater collaboration in the development of data flows. When comparing the two, it is also worth noting that DevOps focuses on transforming the delivery capability of software development teams, whereas DataOps emphasizes the transformation of analytics models and intelligence systems with the help of data engineers.

3. Why is DataOps integral to data engineering?

Data engineers play an important role in ensuring that data is properly managed across the entire analytics trail. They are also entrusted with the optimal use and safety of data. DataOps helps data engineers in these key functional areas by giving them end-to-end orchestration of tools, data, code, and the organizational data environment. It can boost collaboration and communication within teams so they can adapt to evolving customer needs. In simple terms, DataOps strengthens the hands of data engineers by offering greater collaboration between various data stakeholders and helping them achieve reliability, scalability, and agility.

4. What role do DataOps engineers play in enabling advanced enterprise analytics?

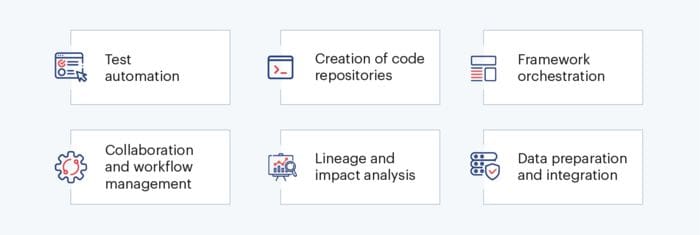

Now, the role of a DataOps engineer is slightly different from that of a data engineer. The DataOps engineer meticulously defines and manages the environment in which the data is developed. The role also includes offering guidance and design support to data engineers around workflows. As far as advanced enterprise analytics is concerned, DataOps engineers play a significant role in automating data development and integration. With their in-depth knowledge of software development and agile methodologies, DataOps engineers contribute to enterprise analytics by tracking document sources through metadata cataloging as well as building metric platforms to standardize calculations. Some of the key roles of a DataOps engineer are:

- Test automation

- Creation of code repositories

- Framework orchestration

- Collaboration and workflow management

- Lineage and impact analysis

- Data preparation and integration
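To make the first item, test automation, a little more concrete, here is a minimal sketch of an automated data quality check that could run on each batch before it moves further down a pipeline. Everything in it is an assumption for illustration: the `Check` class, the `run_checks` function, and the `order_id` and `amount` fields are hypothetical names, not part of any specific DataOps tool.

```python
from dataclasses import dataclass
from typing import Callable

# A row is modelled as a plain dictionary (illustrative assumption).
Row = dict[str, object]


@dataclass
class Check:
    """A named rule that every row in a batch is expected to satisfy."""
    name: str
    predicate: Callable[[Row], bool]


def run_checks(rows: list[Row], checks: list[Check]) -> dict[str, int]:
    """Return the number of failing rows for each check."""
    failures = {check.name: 0 for check in checks}
    for row in rows:
        for check in checks:
            if not check.predicate(row):
                failures[check.name] += 1
    return failures


# Hypothetical checks for an illustrative "orders" batch.
CHECKS = [
    Check("order_id_present", lambda r: r.get("order_id") is not None),
    Check(
        "amount_non_negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]

if __name__ == "__main__":
    batch = [
        {"order_id": 1, "amount": 10.0},
        {"order_id": None, "amount": 5},
        {"order_id": 3, "amount": -2},
    ]
    # A scheduler or CI job could fail the pipeline run if any count is non-zero.
    print(run_checks(batch, CHECKS))
```

In practice a team would more likely rely on a dedicated testing framework than on hand-rolled predicates, but the idea is the same: the rules live in version control and run automatically on every batch.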

5. What are the popular technology platforms commonly used by DataOps teams?

It is fair to say that DataOps is still an evolving discipline and data-focused organizations are learning more about it every day. However, with technological innovations, a number of platforms have already made a mark and are growing their impact across the industry. Here are some of the popular platforms used by DataOps teams.

- Kubernetes

Kubernetes is an open-source container orchestration platform that allows companies to group multiple Docker containers into a single deployable unit. This makes the development process much faster and simpler. Kubernetes can help teams schedule workloads across nodes within a cluster, simplify workload management, and organize containers into logical units for easy discovery and management.

- ELK (Elasticsearch, Logstash, Kibana)

Elastic's ELK Stack is a widely preferred log management platform comprising three distinct open-source software packages: Elasticsearch, Logstash and Kibana. Elasticsearch is a NoSQL database built on the Lucene search engine, while Logstash is a log pipeline solution that accepts data inputs from multiple sources and performs data transformations. Kibana, on the other hand, is essentially a visualization layer that operates on top of Elasticsearch.

- Docker

The Docker platform is often regarded as the simplest and most straightforward tool for helping companies scale high-end applications using containers and run them securely in the cloud. Security is one of the key aspects that differentiate the platform, as it provides a secure environment for testing and execution.

- Git

Git is a leading version-control system that allows companies to effectively manage and store version updates to data files. It can track the artefacts that define a particular data analytics pipeline, such as source code, algorithms, HTML, parameter files, configuration files, containers and logs. Since these data artefacts are simply source code, Git makes them easily discoverable and manageable.

- Jenkins

Jenkins is an open-source server-based application which helps companies seamlessly orchestrate a range of activities to achieve continuous automated integration. The platform supports the end-to-end development lifecycle of an application from development to deployment while speeding up the process through automated testing.

- Datadog

Datadog is a commercial cloud monitoring platform, delivered as a hosted service, that facilitates full visibility across an application stack by allowing companies to monitor metrics, traces and logs through a unified dashboard. The platform comes with around 400 built-in integrations and predefined dashboards that simplify setup.

Rounding Up

Over time, the complexity and scale of enterprise AI and ML applications will only increase, creating a need for convergence in data management practices. To meet customer demands and deploy applications faster, organizations need to reconcile data cataloging and accessibility functions while ensuring data integrity. This is where DataOps practices can help companies make a difference.

Bio: Jagan is a DevOps evangelist who leads the DevOps practice at Sigmoid. He has been instrumental in maintaining and supporting highly critical data systems for clients across CPG, Retail, AdTech, BFSI, QSR and Hi-Tech verticals.

Original. Reposted with permission.
