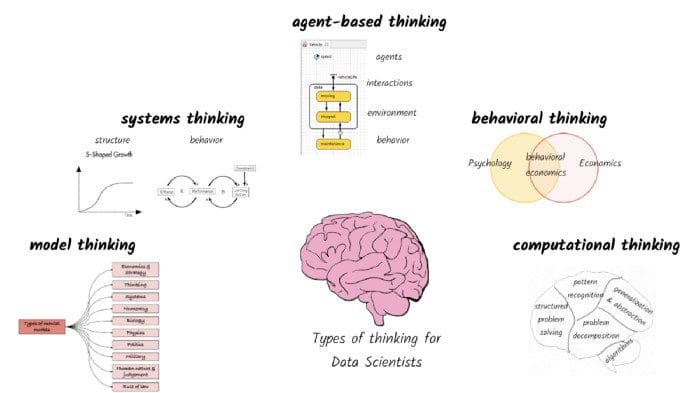

Five types of thinking for a high performing data scientist

The way you think about a problem and the conceptual process you go through to find a solution may be guided by your personal skills or the type of problem at hand. Many mental models exist representing a variety of thinking patterns -- and as a Data Scientist, appreciating different approaches can help you more effectively model data in the business world and communicate your results to the decision-makers.

By Anand Rao, Interested in cutting edge science, engineering, politics, and philosophy.

Source: Produced by the author.

Complexity pervades today’s society. Whether we look at the economy, the businesses that operate within it, the individuals who play different roles in society, or how our physical, social, political, and industrial systems interact with each other, we cannot ignore it. There is no single or simple explanation that captures it all. As data scientists, we have to understand this complexity and hone our thinking to isolate what matters, ignore what doesn’t, and push forward with answering the key questions posed to us.

In this blog, I expand on some of the key ‘thinking’ paradigms that have helped me in conceptualizing the abstract problems posed to me and how I have been able to address these problems to generate insights. While meta-cognition or ‘thinking about thinking’ is a rich topic of discussion — one I think is critical to the endeavor of AI — I will restrict my attention here to thinking paradigms useful for data scientists.

Model Thinking

As data scientists, the first and foremost skill we need is to think in terms of models. In its most abstract form, a model is any physical, mathematical, or logical representation of an object, property, or process. Let’s say we want to build an aircraft engine that will lift heavy loads. Before we build the complete aircraft engine, we might build a miniature model to test the engine for a variety of properties (e.g., fuel consumption, power) under different conditions (e.g., headwind, impact with objects). Even before we build a miniature model, we might build a 3-D digital model that can predict what will happen to the miniature model built out of different materials.

In each of these cases, there were two distinct entities — a model and the object that was being modeled. In the first case, the miniature model engine was a model of the complete aircraft engine. In the second case, the digital model of the engine was a model of the miniature model engine. The digital model itself would have had to model different aspects of the physics of flight (e.g., thrust). So the model need not always be of an object. It can also be of a property. For example, a model of the gravitational force takes into account the relative masses of the two objects and the distance between the center of their masses. In this particular case, the model is an equation or a mathematical representation. In the case of the miniature model engine, the model was a physical model.
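As a concrete instance of such a mathematical model, Newton’s law of universal gravitation can be written as below. The symbols are notation introduced here for illustration and do not appear in the text above: $F$ is the magnitude of the force, $G$ the gravitational constant, $m_1$ and $m_2$ the two masses, and $r$ the distance between their centers of mass.

$$
F = G \, \frac{m_1 m_2}{r^2}
$$

The equation is the model; the attraction between two real bodies is the thing being modeled.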

Models can also be about a process. For example, a fairly common model of consumer purchase process starts with awareness, consideration, purchase, and repeat purchase. Here the model is a logical representation of the step-by-step process. The same logical representation can be expressed with equations or can be programmed as code.
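To make the last point concrete, here is a minimal sketch of the purchase process expressed both as code and as an equation. The stage names come from the text; the transition probabilities and all function names are hypothetical values chosen purely for illustration.

```python
import random

# Stages of the purchase process named in the text, in order.
STAGES = ["awareness", "consideration", "purchase", "repeat purchase"]

# Hypothetical probabilities of advancing from each stage to the next.
ADVANCE_PROB = {
    "awareness": 0.4,
    "consideration": 0.25,
    "purchase": 0.3,
}


def simulate_consumer(rng):
    """Walk one consumer through the funnel; return the last stage reached."""
    stage = STAGES[0]
    while stage in ADVANCE_PROB and rng.random() < ADVANCE_PROB[stage]:
        stage = STAGES[STAGES.index(stage) + 1]
    return stage


def simulate_funnel(n_consumers, seed=0):
    """Code view: count how many simulated consumers end at each stage."""
    rng = random.Random(seed)
    counts = {stage: 0 for stage in STAGES}
    for _ in range(n_consumers):
        counts[simulate_consumer(rng)] += 1
    return counts


def expected_reach():
    """Equation view: probability of reaching each stage at all."""
    reach, p = {}, 1.0
    for stage in STAGES:
        reach[stage] = p
        p *= ADVANCE_PROB.get(stage, 0.0)
    return reach
```

Calling `simulate_funnel(10_000)` gives simulated counts per final stage, while `expected_reach()` gives the corresponding closed-form probabilities. Both are representations of the same logical model.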

Hence, models are abstractions of reality or something worthy of study. They are also ways by which we explain and make sense of our world. Charlie Munger, vice chairman of Berkshire Hathaway, is one of the biggest proponents of this way of thinking [1]:

“I think it is undeniably true that the human brain must work in models. The trick is to have your brain work better than the other person’s brain because it understands the most fundamental models: ones that will do most work per unit.” “If you get into the mental habit of relating what you’re reading to the basic structure of the underlying ideas being demonstrated, you gradually accumulate some wisdom.”

Charlie Munger has more than adequately demonstrated the value of this type of model thinking in the business world. This skill is not only critical for investing but is also a better way to make sense of the world. This type of model thinking is also essential for data scientists and AI researchers. We are often required to model some aspect of human decision-making (e.g., prediction, optimization, classification — see [2] for more details) or make sense of a process or phenomenon (e.g., anomalous behavior). Often we are called upon by domain experts in a number of different fields — business, science (physics, chemistry, biology), engineering, economics, or social science — to build models to make sense of the world, draw insights, make decisions, or act.

If data scientists can understand the mental models in these fields, it becomes easier for us to express them as mathematical formulas, logical representations, or just code. In fact, there are a number of books [3–5] on mental models, and literally hundreds of mental models have been categorized. The site on Mental Models [6] lists 339 models under ten different categories, including economics and strategy, human nature and judgment, systems, the biological or physical worlds, etc. Scott Page in his article [7] makes a case for why ‘many-model thinkers’ make better decisions. Not only are many-model thinkers better decision-makers, but data scientists who can think in and build an ensemble of models based on the hundreds of mental models are also better data scientists. Basically, having an ensemble of models is good for humans and machines!

Figure 1: What is model thinking? (Source: Produced by the author.)

Systems Thinking

In my thirty-year career in business and technology, the single most useful, practical, and profound mental model that I have used extensively is systems thinking. It has helped me see the bigger picture and the inter-relationships between seemingly unrelated areas. I feel it is critical for data scientists to have a good appreciation of systems thinking and also to practice it. Peter Senge lucidly sums up what systems thinking is:

“Systems thinking is a discipline for seeing the ‘structures’ that underlie complex situations and for discerning high from low leverage change. That is, by seeing wholes, we learn how to foster health. To do so, systems thinking offers a language that begins by restructuring how we think.”

There are a number of books [10–12] and even multiple series of blogs on Medium [8,9] that describe systems thinking. In my own work, I have been most influenced by the works of Barry Richmond [12–13] and John Sterman [11]. I will draw upon their work to illustrate what it is and why it is relevant for data scientists.

Our traditional educational curriculum emphasizes analytical thinking: the ability to take a problem, break it down into its constituent parts, develop solutions for these parts, and assemble them together. Consultants are constantly urged to be MECE (Mutually Exclusive and Collectively Exhaustive) in their thinking, to lay out their hypotheses clearly, and to develop a MECE set of options to address any problem. While analytical and MECE thinking do have a specific role in understanding problems, we are often blinded by them and rarely question them. Systems thinking, on the other hand, is a perfect antidote to MECE thinking. Let’s review a few of the key thinking patterns that the systems approach entails.

Dynamic thinking — a pattern of thinking that reinforces the framing of a problem in terms of how it evolves over time. While static thinking focuses on specific events, dynamic thinking focuses on how behaviors of physical or human systems change over time. The ability to think through how the input could change over time and the impact it will have on the output behavior is critical.

Data scientists often approach problems with cross-sectional data at a point in time to make predictions or inferences. Unfortunately, given the constantly changing context around most problems, very few things can be analyzed statically. Static thinking reinforces the ‘one-and-done’ approach to model building that is misleading at best and disastrous at its worst. Even simple recommendation engines and chatbots trained on historical data need to be updated on a regular basis. Understanding the dynamic nature of change is essential for building robust data science models.

System-as-cause thinking — a pattern of thinking that determines what to include within the boundaries of our system (i.e., extensive boundary) and the level of granularity of what is to be included (i.e., intensive boundary). The extensive and intensive boundaries depend on the context in which we are analyzing the system and what is under the control of the decision-maker vs. what is outside their control.

Data scientists typically work with whatever data has been provided to them. While it is a good starting point, we also need to understand the broader context around how a model will be used and what it is that the decision-maker can control or influence. For example, when building a robo-advice tool, we could include a number of different aspects ranging from macro-economic indicators, asset class performance, company investment strategies, individual risk appetite, life-stage of the individual, health condition of the investor etc. The breadth and depth of factors to be included depend on whether we are building a tool for an individual consumer, an advisor, a wealth management client, or even a policymaker in the government. Having a bigger picture of the different factors and how they influence each other together with the user and the context of the user will help us build targeted models and scope them appropriately.

Forest thinking — a pattern of thinking that allows us to see the ‘bigger picture’ and aggregate where necessary while not losing the essential details. Often data scientists are forced into tree-by-tree thinking as they look at individual elements of data (e.g., individual customer data) and fail to see the bigger picture on what data is required in order to solve the problem being posed. I have often seen this translate into ‘build the best model we can build using available data’ rather than probing ‘what data may be needed to be collected to solve the problem we have’.

Operational thinking — a pattern of thinking that focuses on the operational process or ‘causality’ of how behaviors are manifested in systems. The opposite of operational thinking is factors thinking or list-based thinking or MECE thinking that I mentioned earlier. Reliance on machine learning as the primary or only method for data science could easily lead us all into factors thinking where the focus is on predicting the output variable with no consideration to the process or causality. While this may be suitable in a number of applications, they are not universally applicable. Recent research into explainable AI is an attempt to recreate some of the process and rationale for how the answers were arrived at.

Closed-loop thinking — a pattern of thinking that seeks to identify feedback loops in systems whereby certain effects become causes. The predominant ‘time as an arrow’ that moves in a single forward direction is a powerful mindset that has constrained our thinking in business as well as scientific endeavors. Data scientists have not been immune to this trend. Causal loop diagrams and stock-flow diagrams used extensively by the system dynamics community and causal inference are some of the tools available to data scientists to break away from straight-line thinking.
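A feedback loop of this kind can be sketched as a tiny stock-and-flow simulation. The example below is an illustration and is not taken from the cited system dynamics texts: it assumes a single stock (adopters of a product) and a hypothetical `contact_rate`, and shows how the effect (more adopters) feeds back to become a cause (more word of mouth).

```python
def simulate_adoption(total_market=1000.0, initial_adopters=10.0,
                      contact_rate=0.5, steps=40, dt=1.0):
    """Euler-integrate one stock (adopters) driven by two feedback loops."""
    adopters = initial_adopters
    history = [adopters]
    for _ in range(steps):
        potential = total_market - adopters
        # Reinforcing loop: more adopters -> more word of mouth -> more adoption.
        # Balancing loop: fewer potential adopters remain -> adoption slows.
        adoption_flow = contact_rate * adopters * potential / total_market
        adopters += adoption_flow * dt
        history.append(adopters)
    return history
```

With these assumed values the stock follows an S-shaped curve: growth accelerates while the reinforcing loop dominates and then levels off as the balancing loop takes over. A straight-line extrapolation from the early data points would miss that behavior entirely.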

These are just a few of the key patterns of systems thinking. Barry Richmond [12,13] also talks about quantitative thinking, scientific thinking, non-linear thinking, and 10,000-meter thinking. In addition, he shares principles of communication and learning that are worth studying.

I find the systems thinking approach particularly appealing for a few key reasons. First, it provides an alternative mindset and, more importantly, a mindset that draws connections between disparate parts and more often than not brings in a unique perspective to problem-solving. Second, it is more open-ended, offering multiple viewpoints and trade-offs to be analyzed as opposed to claiming a unique and correct answer. This often leads to more informed human decisions as opposed to brittle and unexplained machine decisions. Third, it offers a better way to explain and communicate decisions. In subsequent articles, I will take specific examples that illustrate these benefits.

Figure 2: What is systems thinking? (Source: Produced by the author.)

Agent-based thinking

If you search for agent-based thinking on the Internet, you may not find much. Instead, you will see a number of references to agent-based modeling (ABM). While ABM is the concrete realization of this type of thinking that I will explore in forthcoming articles, I want to focus on the thinking that precedes the building of such agent-based models.

Agent-based thinking — a pattern of thinking where we focus on simpler (or atomic) entities or concepts and how relatively simple interactions between these entities can result in emergent system behaviors. Similar to systems thinking, we are interested in system-level behaviors, but rather than observing the relationships from a top-down perspective, we analyze system behaviors from a bottom-up perspective. The thinking is individual-centric — what is the state (physical or mental) of the individual, how does the individual interact with its environment and other individuals, and how does that change its state. Such individual-centric or agent-based thinking can be applied to physical assets (e.g., a thermostat can be considered an agent), individual consumers (e.g., modeling individual consumers making purchase decisions in a marketing context), corporate entities (e.g., companies acting in their self-interest making markets efficient), or even national governments (e.g., countries trading with each other based on their comparative advantage).

Systems thinking and agent-based thinking can be applied to the same set of problems and produce similar results, but they approach those problems from different mindsets or mental models. For example, the well-known epidemiological model of disease progression, SEIR (Susceptible, Exposed, Infectious, Recovered), can be analyzed from a systems perspective or an individual perspective. When we look at the entire population of susceptible, exposed, infectious, and recovered people, we are working at the systems level. When we look at the state of each individual, such as whether they are currently infectious or have already recovered, we are operating at the agent-based level.
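The systems-level view of SEIR can be sketched as four aggregate stocks updated with simple difference equations. This is a minimal illustration, not a calibrated epidemiological model: the parameter names `beta`, `sigma`, and `gamma` follow common textbook notation, and their values here are hypothetical.

```python
def simulate_seir(population=10_000, initial_infectious=10,
                  beta=0.3, sigma=0.2, gamma=0.1, days=200):
    """Track aggregate S, E, I, R counts with one Euler step per day."""
    s = float(population - initial_infectious)
    e, i, r = 0.0, float(initial_infectious), 0.0
    history = [(s, e, i, r)]
    for _ in range(days):
        new_exposed = beta * s * i / population  # S -> E (transmission)
        new_infectious = sigma * e               # E -> I (end of incubation)
        new_recovered = gamma * i                # I -> R (recovery)
        s -= new_exposed
        e += new_exposed - new_infectious
        i += new_infectious - new_recovered
        r += new_recovered
        history.append((s, e, i, r))
    return history
```

Nothing in this sketch refers to any particular person; the model only knows how many people sit in each compartment on each day.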

When we want to move from aggregate behaviors of disease progression to individual behaviors, agent-based thinking becomes a more natural way of thinking. For example, if we are interested not just in the overall level of infections but also in which individuals are susceptible to the disease, or in how the behaviors of specific individuals (e.g., social distancing or mask-wearing) contribute to disease progression, agent-based thinking is the better fit.
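The same SEIR progression can be sketched from the bottom up, with one state per individual. This is a minimal illustration under stated assumptions: agents mix at random, and `contacts_per_day`, `p_transmit`, `p_onset`, and `p_recover` are hypothetical parameters rather than values from any study.

```python
import random

S, E, I, R = "S", "E", "I", "R"


def step(states, rng, contacts_per_day=3, p_transmit=0.1,
         p_onset=0.2, p_recover=0.1):
    """Advance every agent by one day and return the new list of states."""
    new_states = list(states)
    n = len(states)
    for idx, state in enumerate(states):
        if state == I:
            # Each infectious agent meets a few randomly chosen agents.
            for _ in range(contacts_per_day):
                other = rng.randrange(n)
                if states[other] == S and rng.random() < p_transmit:
                    new_states[other] = E
            if rng.random() < p_recover:
                new_states[idx] = R
        elif state == E and rng.random() < p_onset:
            new_states[idx] = I
    return new_states


def simulate_agents(n_agents=500, initial_infectious=5, days=100, seed=1):
    """Return the per-agent states for every simulated day."""
    rng = random.Random(seed)
    states = [I] * initial_infectious + [S] * (n_agents - initial_infectious)
    history = [states]
    for _ in range(days):
        states = step(states, rng)
        history.append(states)
    return history
```

Because every agent is explicit, individual behaviors are easy to add. For example, a per-agent transmission probability could stand in for mask-wearing, which has no natural place in the aggregate formulation.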

Agent-based thinking is the foundation for agent-based modeling (also referred to as agent-based simulation or microsimulation systems), multi-agent systems, and reinforcement learning. As a result, a data scientist needs to be comfortable with analyzing problems from an individual agent perspective — where the individual agents may be IoT devices or physical assets (more popularly referred to as ‘digital twins’) or individual decision-making entities such as consumers, corporations etc.

I find agent-based thinking particularly appealing in certain types of situations. First, when the entities or agents are identifiable and heterogeneous, it provides an intuitive way of studying their behaviors. Second, when interactions between entities are more localized, it is easier to study them with agent-based thinking. Third, when the individual behaviors (or behaviors of groups of individuals) matter more than the system behavior, agent-based thinking offers a better approach. Fourth, when the individual entities adapt and change differently, we are better off modeling at an individual level as opposed to a system level.

Figure 3: What is agent-based thinking? (Source: Produced by the author)

Behavioral (Economics) Thinking

The collection of mental models around human nature and judgment [6], often called behavioral economics, has influenced me significantly in both my consulting and AI journey. Interestingly, both AI and behavioral economics have a common ancestry. Herbert Simon’s notion of bounded rationality questioned the prevailing view of ‘humans as ideal rational decision makers’ and instead argued that we make decisions bounded by our thinking capacity, available information, and time [14]. This was the foundation for the field of behavioral economics [15–17] that has been in the ascendancy since the late 1900s. Simon is also considered one of the founding fathers of AI, and his work on the development of heuristic programs and human problem solving laid the foundation for future symbolic AI systems. In his words [14],

The principle of bounded rationality [is] the capacity of the human mind for formulating and solving complex problems is very small compared with the size of the problems whose solution is required for objectively rational behavior in the real world — or even for a reasonable approximation to such objective rationality.

Over the past couple of decades, behavioral economics has had a profound influence in the academic and business world on how humans make decisions [15], the underlying processes for making the decisions [16], how they may deviate from an ideal utility-maximizing economic perspective, and how to nudge them towards certain decisions for their own benefit [17].

Behavioral (economics) thinking (or behavioral thinking for short) is a pattern of thinking that focuses on how humans really make decisions as opposed to how they ought to make decisions. When we use agent-based thinking, we are often required to understand how humans make decisions (e.g., decisions regarding what goods to purchase, how much to invest, etc.). Behavioral economic principles such as anchoring, defaults, bandwagon effect, loss aversion, hyperbolic discounting, and a huge list of other heuristics seek to explain how we make decisions under different scenarios.
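Hyperbolic discounting, one of the principles listed above, lends itself to a short worked example. The sketch below compares the standard exponential discount function with the commonly used hyperbolic form `amount / (1 + k * delay)`. The amounts, the rate, and `k` are hypothetical values picked to make the effect visible.

```python
def exponential_value(amount, delay, rate=0.05):
    """Present value under exponential ('rational') discounting."""
    return amount / (1.0 + rate) ** delay


def hyperbolic_value(amount, delay, k=0.2):
    """Present value under hyperbolic discounting."""
    return amount / (1.0 + k * delay)


def prefers_larger_later(value_fn, front_delay):
    """Choose between 100 at front_delay and 110 one period after that."""
    return value_fn(110, front_delay + 1) > value_fn(100, front_delay)
```

Under these assumed parameters, the exponential discounter picks the larger, later reward whether the choice starts now or ten periods from now. The hyperbolic discounter picks the larger reward when both options are far away but switches to the smaller, sooner reward when it is available immediately. This is the kind of preference reversal that behavioral thinking tries to capture.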

As a data scientist, I find behavioral thinking useful in two specific ways. First, it helps us understand how humans make decisions so that we can understand how the models built by data scientists will be used by them and the explanations required for better adoption. Second, it helps us model human decision-making within agents to simulate or observe overall behavior. This second aspect can be viewed as a further specialization of agent-based thinking.

Figure 4: What is behavioral thinking? (Source: Produced by the author)

Computational Thinking

The term computational thinking was first introduced by Seymour Papert [18] in 1980. However, the recognition of computational thinking as a critical component of computer science education came much later, with a paper by Jeannette Wing [19]. The foundations of computational thinking matured over the second half of the previous century through algorithmic thinking and computational approaches to all aspects of science, engineering, and business.

Computational thinking is a pattern of thinking that emphasizes structured problem solving, problem decomposition, pattern recognition, generalization, and abstraction that can be coded and executed by computers. Computational thinking has had a profound influence in the successive revolutions that have followed the industrial revolution — the computing revolution, the internet and smartphone revolution, and now the big data, analytics, and AI revolutions.

While all of the previous thinking patterns I have discussed can be applied to understanding both humans and machines, computational thinking is central to creating intelligent systems. Alternatively, we can also view computational thinking as the mechanism for encoding and realizing the other types of thinking. Hence, as data scientists, we often have to think computationally and be precise in the instructions we provide.

Figure 5: What is computational thinking? (Source: Produced by the author)

References

[1] Tren Griffin. A Dozen Things I’ve Learned from Charlie Munger about Mental Models and Worldly Wisdom. August 22, 2015.

[2] Anand Rao. Ten human abilities and four types of intelligence to exploit human-centered AI. Medium — Start it up, Oct 10, 2020.

[3] Shane Parrish and Rhiannon Beaubien. The Great Mental Models Volume 1: General Thinking Concepts. Latticework Publishing Inc. 2019.

[4] Shane Parrish and Rhiannon Beaubien. The Great Mental Models Volume 2: Physics, Chemistry, and Biology. Latticework Publishing Inc. 2020.

[5] Gabriel Weinberg and Lauren McCann. Super Thinking: The Big Book of Mental Models. Portfolio, 2019.

[6] Understanding the world with mental models: 339 models explained to carry around in your head.

[7] Scott E Page. Why “Many-Model Thinkers” Make Better Decisions, Harvard Business Review, November 19, 2018.

[8] Leyla Acaroglu. Tools for systems thinkers: The six fundamental concepts of systems thinking. Medium — Disruptive Design. September 7, 2017.

[9] Andrew Henning. Systems thinking Part 1 — Element, interconnections, and goals. Medium — Better Systems. August 1, 2018.

[10] Donella Meadows. Thinking in Systems: A Primer. Chelsea Green Publishing. 2008.

[11] John Sterman. Business Dynamics: Systems Thinking and Modeling for a complex world. McGraw-Hill Education. 2000.

[12] Barry Richmond. An Introduction to Systems Thinking with Stella. ISEE Systems, 2004.

[13] Barry Richmond. The “thinking” in systems thinking: How can we make it easier to master? Systems Thinker.

[14] Herbert Simon. Models of bounded rationality Volume 1: Economic Analysis and Public Policy. The MIT Press. 1984.

[15] Dan Ariely. Predictably irrational, Revised and Expanded Edition: The hidden forces that shape our decisions. Harper Collins, 2009.

[16] Daniel Kahneman. Thinking, fast and slow. Farrar, Straus and Giroux, 2013.

[17] Richard Thaler and Cass Sunstein. Nudge: Improving decisions about health, wealth, and happiness. Penguin Books, 2009.

[18] Seymour Papert. Mindstorms: Children, computers, and powerful ideas. Basic Books, Inc., 1980.

[19] Jeannette Wing. Computational thinking. Communications of the ACM 49 (3):33–35. 2006.

Original. Reposted with permission.
