Machine Learning Is Not Like Your Brain Part Two: Perceptrons vs Neurons

An ML system requiring thousands of tagged samples is fundamentally different from the mind of a child, which can learn from just a few experiences of untagged data.

While today’s artificial intelligence (AI) is able to do some extraordinary things, its functionality has very little to do with the way in which a human brain works to achieve the same tasks. AI – and specifically machine learning (ML) – works by analyzing massive data sets, looking for patterns and correlations without understanding the data it is processing. As a result, an ML system requiring thousands of tagged samples is fundamentally different from the mind of a child, which can learn from just a few experiences of untagged data.

For today’s AI to overcome such inherent limitations and evolve into its next phase (artificial general intelligence, or AGI), we should examine the differences between the brain, which already implements general intelligence, and its artificial counterparts.

With that in mind, this nine-part series examines, in progressively greater detail, the capabilities and limitations of biological neurons and how these relate to ML. In Part One, we examined how the slowness of neurons makes it implausible that the brain learns the way ML does, from thousands of training samples. In Part Two, we’ll look at the fundamental algorithm of the perceptron and how it differs from any spike-based model of a biological neuron.

The perceptron underlying most ML algorithms is fundamentally different from any model of a biological neuron. A perceptron’s value is calculated as a function of the sum of its incoming synaptic signals, where each signal is the product of the synapse’s weight and the value of the perceptron it comes from. In contrast, a biological neuron accumulates charge over time until it reaches a threshold, which gives it a modicum of memory.
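The two models can be written side by side. The notation here is ours, not the article’s: $x_i$ is the value of upstream perceptron $i$, $w_i$ its synapse weight, $f$ an activation function, $s_i(t)$ is 1 if input $i$ spikes at time step $t$ and 0 otherwise, and $V(t)$ is the neuron’s accumulated charge. The neuron line is a simple discrete-time integrate-and-fire sketch that assumes no charge leakage and a reset to zero after firing.

```latex
\begin{aligned}
\text{Perceptron:}\quad & y = f\!\left(\sum_i w_i\, x_i\right) \\[4pt]
\text{Spiking neuron:}\quad & V(t) = V(t-1) + \sum_i w_i\, s_i(t),
\qquad \text{fire and set } V(t) \leftarrow 0 \text{ when } V(t) \ge 1
\end{aligned}
```

The perceptron’s output depends only on its current inputs, while the neuron’s charge $V(t)$ depends on $V(t-1)$; that carried-over term is the memory described above.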

Similarly, while the perceptron has an analog value, the neuron simply emits spikes. The perceptron has no intrinsic memory, while the neuron does. And while many say the perceptron’s value is analogous to the neuron’s spiking rate, the analogy breaks down because the perceptron considers only the frequency of incoming signals and ignores the relative timing, or phase, of the spikes. As a result, a biological neuron can respond differently depending on the order in which spikes arrive, while a perceptron cannot.

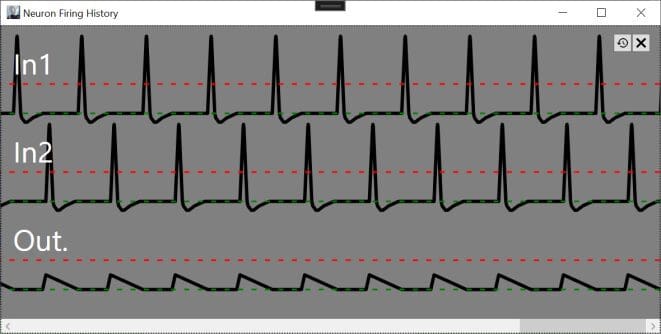

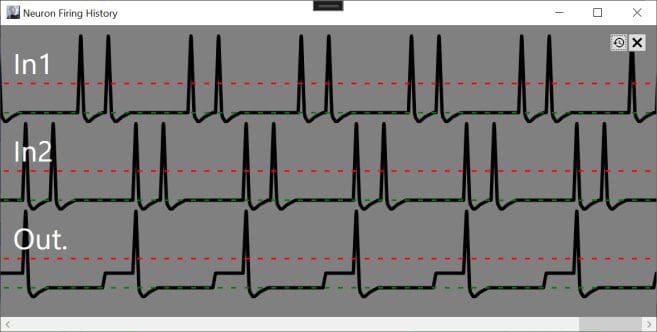

To illustrate, synapses with positive weights add to a neuron’s internal charge and stimulate it to fire when the accumulated charge reaches a threshold level (defined as 1), while negatively weighted synapses inhibit firing. Consider a neuron stimulated by two inputs weighted .5 and -.5. If spikes arrive alternately, so the weighted inputs are .5, -.5, .5, -.5, the neuron never fires, because each positive input is followed by a negative one that returns the accumulated charge to zero. If the same spikes arrive in the order .5, .5, -.5, -.5, the neuron fires after the second positively weighted spike, when its charge reaches 1.
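A minimal discrete-time integrate-and-fire sketch makes this order dependence concrete. It assumes, beyond what the text states, that charge does not leak between spikes and resets to zero as soon as the neuron fires; the function name `integrate_and_fire` is hypothetical. Each list element is the weighted value of one incoming spike, in arrival order.

```python
from typing import List

THRESHOLD = 1.0  # firing threshold, defined as 1 in the text


def integrate_and_fire(weighted_spikes: List[float], threshold: float = THRESHOLD) -> List[int]:
    """Return the positions of the incoming spikes after which the neuron fires.

    Assumptions (not from the article): no leakage between spikes, and the
    charge resets to zero immediately after the neuron fires.
    """
    charge = 0.0
    fired_at: List[int] = []
    for i, w in enumerate(weighted_spikes):
        charge += w  # each spike adds its synapse weight to the stored charge
        if charge >= threshold:  # reaching the threshold triggers a spike
            fired_at.append(i)
            charge = 0.0  # assumed reset after firing
    return fired_at


if __name__ == "__main__":
    # Alternating order: each +.5 is cancelled by the following -.5.
    print(integrate_and_fire([0.5, -0.5, 0.5, -0.5]))  # []
    # Same spikes, grouped order: the second +.5 brings the charge to 1.
    print(integrate_and_fire([0.5, 0.5, -0.5, -0.5]))  # [1]
    # A single input weighted .25 fires on every fourth spike.
    print(integrate_and_fire([0.25] * 8))  # [3, 7]
```

Because the charge carries over from one spike to the next, the result depends on arrival order, which is exactly the memory a perceptron lacks.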

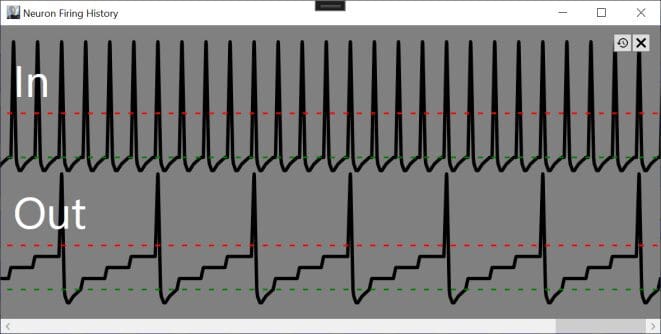

How a neuron (Out) stimulated with a weight of .25 accumulates charge and fires on every fourth spike of the input neuron (In).

With In1 and In2 stimulating Out with weights of .5 and -.5, respectively, Out never fires.

But changing only the timing of the same incoming spikes lets Out fire. The perceptron model cannot represent this behavior because it ignores the timing, or phase, of incoming signals.

In both cases, each input fires at an average rate of .5 (half the maximum firing rate). The perceptron model gives the same output, 0, in either case, because the weighted sum of its inputs, .5 × .5 + .5 × (-.5), equals 0 regardless of input timing. This means that the perceptron model cannot be reliably implemented in biological neurons, and vice versa.
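For comparison, a rate-based reading of the perceptron can be sketched by reducing each input’s spike train to its average firing rate and then taking the weighted sum. This is an illustrative assumption: the spike trains below are hypothetical 4-step encodings of the two orderings (1 = spike, 0 = silence), the activation function is taken as the identity, and the helper names are our own.

```python
from typing import List, Sequence


def firing_rate(spike_train: Sequence[int]) -> float:
    """Fraction of time steps containing a spike (1.0 = maximum firing rate)."""
    return sum(spike_train) / len(spike_train)


def rate_perceptron(spike_trains: List[Sequence[int]], weights: List[float]) -> float:
    """Weighted sum of input firing rates; identity activation assumed."""
    return sum(w * firing_rate(train) for w, train in zip(weights, spike_trains))


weights = [0.5, -0.5]  # In1 excitatory, In2 inhibitory
alternating = [[1, 0, 1, 0], [0, 1, 0, 1]]  # arrival order: In1, In2, In1, In2
grouped = [[1, 1, 0, 0], [0, 0, 1, 1]]      # arrival order: In1, In1, In2, In2

if __name__ == "__main__":
    print(rate_perceptron(alternating, weights))  # 0.0
    print(rate_perceptron(grouped, weights))      # 0.0
```

Both orderings yield identical rates, so any function of those rates, not only the identity, returns the same value for both; the timing information that decides whether the spiking neuron fires has already been discarded.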

This is such an essential difference between the neural network and the biological neuron that I will devote a separate article to exploring it, along with the other biological factors which make today’s AI different from the brain’s function at a very fundamental level.

Biological neuron models allow a single spike to carry meaning. The overwhelming majority of the brain’s neurons spike only rarely, so it is likely that many neurons do have specific meanings. For example, since you understand what a ball is, your brain likely contains a “ball neuron” (or perhaps many) that fires when you see a ball or hear the word. In a biological model, that neuron might fire only once when a ball is recognized. The perceptron’s firing-rate model cannot represent this, however, because a firing rate is not defined for a single spike.

In the next article, I’ll discuss how the connections in the brain are not organized in the orderly layers of ML, and how this requires some modification to the basic perceptron algorithm that may prevent backpropagation from working at all.

Next up: Fundamental Architecture

Charles Simon is a nationally recognized entrepreneur and software developer, and the CEO of FutureAI. Simon is the author of Will the Computers Revolt?: Preparing for the Future of Artificial Intelligence, and the developer of Brain Simulator II, an AGI research software platform.