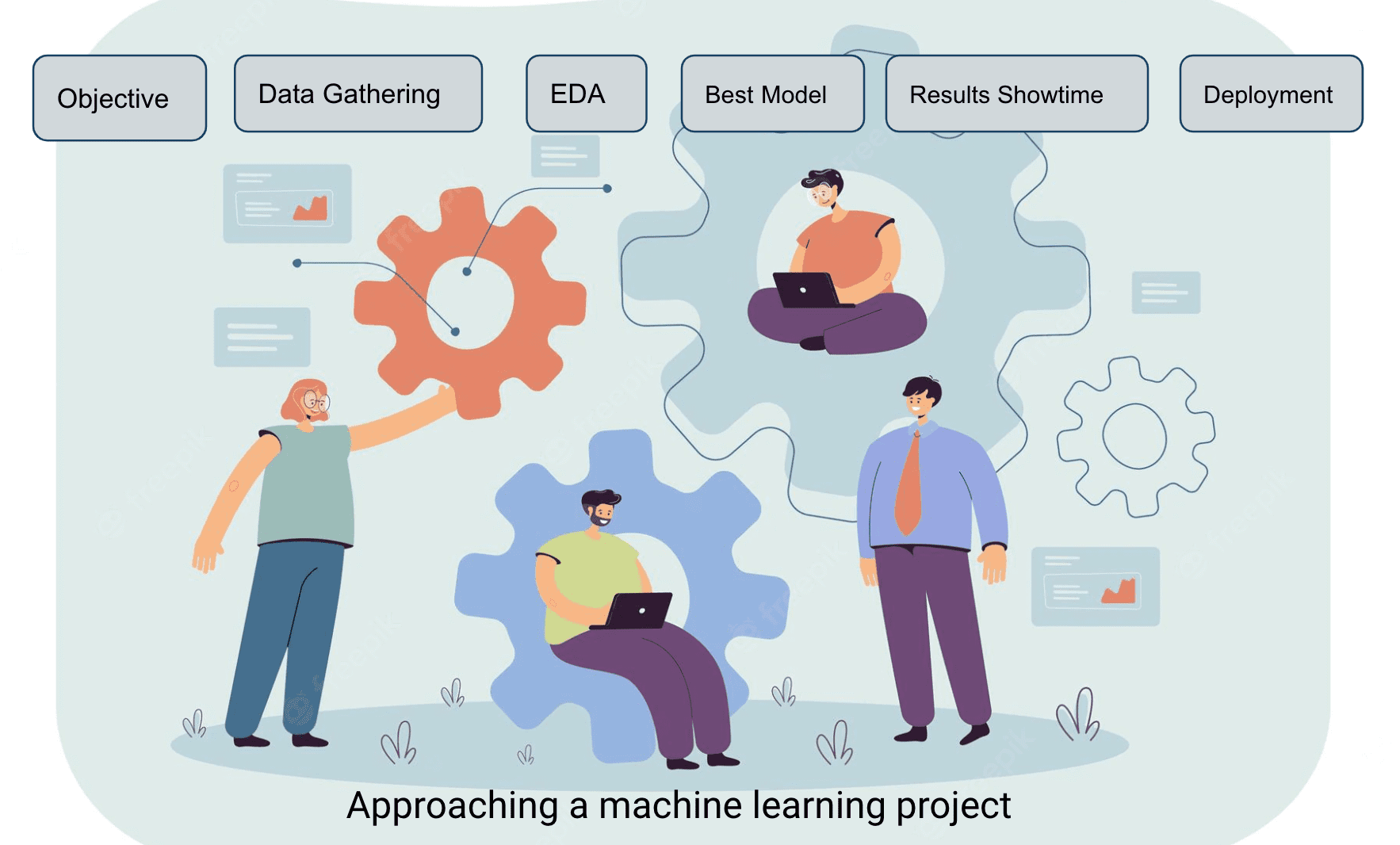

A Structured Approach To Building a Machine Learning Model

This article gives you a glimpse of how to approach a machine learning project with a clear outline of an easy-to-implement 5-step process.

Introduction

Building a machine learning model involves many steps. These steps go beyond objective guidelines and call for a more elaborate, in-depth approach, depending on the complexity of the business problem.

Office infographic vector created by pch.vector

1. What is the End Goal?

A business problem can often be solved in multiple ways, so first decide whether a machine learning solution is really needed or whether a simple heuristic would do. Is there already a solution serving the business problem? If so, analyze it thoroughly, understand its limitations, and look for the machine learning solution that can best overcome them. Next, compare the two solutions: does the proposed machine learning solution come with limitations of its own? For example, ML solutions are probabilistic and will not be correct all the time. The business needs to align on such limitations and make a trade-off between the current and the proposed solution.

Another crucial factor is the degree of human expertise and intervention an ML solution would still require. Do we still need humans in the loop to approve or validate the model’s output?

Once the ML solution appears to be the only viable choice for the business problem, with its pros and cons understood, data scientists frame the business problem as a statistical problem and decide which framework it falls into. ML problems can be broadly categorized as supervised or unsupervised.

The evaluation metric is also important for deciding on the best-performing algorithm. Take care to translate evaluation metrics such as precision and recall (in the case of classification) into business metrics. Data scientists often work rigorously to get the best precision but fail to explain what it implies for business outcomes. For example, why precision matters in a particular use case becomes much clearer once we know the dollar value of a 1% drop in precision.
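To make the dollar-value idea concrete, here is a minimal sketch that converts a drop in precision into a monthly cost. It assumes that the business acts on every case the model flags and that each false positive has a fixed cost. The volume (10,000 flags per month) and the $25 cost per false alarm are hypothetical placeholders, not figures from any real project. The drop is treated as one percentage point.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of flagged cases that are truly positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def false_positive_cost(flagged: int, prec: float, cost_per_fp: float) -> float:
    """Cost of the false positives among `flagged` cases at a given precision."""
    false_positives = flagged * (1.0 - prec)
    return false_positives * cost_per_fp


def cost_of_precision_drop(
    flagged: int, prec: float, drop: float, cost_per_fp: float
) -> float:
    """Extra cost if precision falls by `drop` (0.01 = one percentage point)."""
    return false_positive_cost(flagged, prec - drop, cost_per_fp) - false_positive_cost(
        flagged, prec, cost_per_fp
    )


if __name__ == "__main__":
    # Hypothetical figures: 10,000 flagged cases per month, and each false
    # alarm costs $25 of analyst review time.
    p = precision(tp=9_000, fp=1_000)
    extra = cost_of_precision_drop(flagged=10_000, prec=p, drop=0.01, cost_per_fp=25.0)
    print(f"precision = {p:.2f}; a one-point drop costs about ${extra:,.0f} per month")
```

Under these assumptions the answer is simply 10,000 × 0.01 × $25 = $2,500 per month. Stating the figure that way is often more persuasive to stakeholders than a raw precision score.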

2. Getting Off the Ground

You have evaluated the need for an ML model, decided which framework and type of algorithm to use (supervised vs. unsupervised, classification vs. regression, etc.), and signed off on the metric as an SLA for delivering the solution.

Congratulations, you are in a much better place now. Why? Because the interesting part of the model-building journey begins here: you now have the green light to talk about the data.

Note that real-world problems do not give you the luxury of a well-framed problem statement like the ones Kaggle provides. Every data scientist would like to avoid this hassle, but it is the true test of a seasoned data scientist. Such a person finds their way through an unclear situation without waiting for instructions. They make the best of the data at hand and document the assumptions and limitations that back up their findings. Do not wait for all the data to become available: quite often you won’t have all the attributes that a human expert gets to act on in real time.

Once you clear the hurdle of insufficient data, the issue of data quality awaits. It is a topic in its own right and can be read about further here.

3. Building a Connection with the Data

Data exploration is the gateway to understanding your data better and building a connection with it. The more time you invest in the data at this stage, the higher the returns in the later stages of the model lifecycle.

A thorough investigation of the data includes, but is not limited to, finding correlations, detecting outliers, distinguishing legitimate records from anomalous ones, identifying missing values, and working out the right way to impute them. Next, you might need to perform data transformations such as log transformation, scaling and normalization, feature selection, and engineering new features.
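Several of these checks fit in a few lines of standard-library Python. The sketch below is one possible approach, not a prescribed pipeline. It uses median imputation for missing values, Tukey's IQR fences for outlier detection, `log1p` for a log transform, z-score standardization for scaling, and Pearson correlation. The `income` and `spend` columns are hypothetical. Note that the code only *flags* outliers: deciding whether a flagged record is legitimate or anomalous is still a domain call.

```python
import math
import statistics
from typing import List, Optional, Sequence


def median_impute(values: Sequence[Optional[float]]) -> List[float]:
    """Replace missing entries (None) with the median of the observed ones."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values: Sequence[float], k: float = 1.5) -> List[int]:
    """Indices of points outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(sxx * syy)


def standardize(values: Sequence[float]) -> List[float]:
    """Scale to zero mean and unit (population) standard deviation."""
    mu, sigma = statistics.fmean(values), statistics.pstdev(values)
    return [(v - mu) / sigma for v in values]


if __name__ == "__main__":
    # Hypothetical columns: income has two gaps and one extreme record.
    income = [42_000, 38_500, None, 51_000, 47_200, None, 39_900, 250_000, 44_100]
    spend = [2_100, 1_900, 2_300, 2_600, 2_400, 2_200, 2_000, 9_800, 2_250]

    filled = median_impute(income)
    print("missing values:", income.count(None))
    print("rows outside the IQR fences:", iqr_outliers(filled))
    print("corr(income, spend):", round(pearson(filled, spend), 3))

    # Log transform compresses the long right tail before scaling.
    scaled = standardize([math.log1p(v) for v in filled])
    print("standardized log-income:", [round(z, 2) for z in scaled])
```

Median imputation is used here because the median is robust to the very outlier the next step flags. Mean imputation would let the 250,000 record drag the fill value upward.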

Every dataset tells a story, and the best way to tell it is with visualizations such as box plots, density plots, and scatter plots.

4. Which Model Says “I am the Best?”

How do you select the model for a given dataset? Does a linear model generalize well to the incoming data, or do tree-based ensembles? Do you have enough data to train a neural network?
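One common way to answer these questions is to score every candidate with k-fold cross-validation on the same folds and compare held-out error. The toy sketch below uses pure Python and assumes a single numeric feature. A least-squares line stands in for the linear model. A depth-1 regression tree (a "stump") stands in, very loosely, for tree-based methods. Real ensembles such as random forests or gradient boosting combine many deeper trees, and in practice you would likely use a library such as scikit-learn. The data is hypothetical.

```python
import statistics
from typing import Callable, List, Sequence

Model = Callable[[float], float]
Fitter = Callable[[Sequence[float], Sequence[float]], Model]


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Model:
    """Ordinary least squares for y = a + b * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def fit_stump(xs: Sequence[float], ys: Sequence[float]) -> Model:
    """Depth-1 regression tree: one split on x, predict the mean on each side."""
    pairs = sorted(zip(xs, ys))
    overall = statistics.fmean(ys)
    best_sse, threshold = float("inf"), pairs[0][0]
    left_mean, right_mean = overall, overall
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between identical x values
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        ml, mr = statistics.fmean(left), statistics.fmean(right)
        sse = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if sse < best_sse:
            best_sse = sse
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            left_mean, right_mean = ml, mr
    return lambda x: left_mean if x <= threshold else right_mean


def kfold_mse(fit: Fitter, xs: Sequence[float], ys: Sequence[float], k: int = 5) -> float:
    """Mean held-out squared error over k folds (row i goes to fold i % k)."""
    fold_scores: List[float] = []
    for f in range(k):
        train = [i for i in range(len(xs)) if i % k != f]
        test = [i for i in range(len(xs)) if i % k == f]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        fold_scores.append(statistics.fmean([(model(xs[i]) - ys[i]) ** 2 for i in test]))
    return statistics.fmean(fold_scores)


if __name__ == "__main__":
    # Hypothetical data with a threshold effect: y jumps from 0 to 1 after x = 5.
    xs = [i / 4 for i in range(40)]
    ys = [1.0 if x > 5 else 0.0 for x in xs]
    candidates = {"linear": fit_linear, "stump": fit_stump}
    scores = {name: kfold_mse(fit, xs, ys) for name, fit in candidates.items()}
    print(scores, "best:", min(scores, key=scores.get))
```

On this step-shaped data the stump should come out ahead. On a straight-line target the linear model would win instead. That is the point of the exercise: held-out error, not preference, picks the model. Assigning row `i` to fold `i % k` is a deterministic stand-in for shuffling the data before splitting.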

The best-performing model from the set of candidates is then chosen for hyperparameter tuning. If you choose a neural network, how do you plan to tune hyperparameters such as the number of layers and neurons, the learning rate, the optimizer, and the loss function?
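The simplest systematic answer is a grid search: train once per combination of hyperparameter values, then keep the combination with the lowest error on a held-out validation set. In the sketch below, `train_gd` is a hypothetical stand-in for a neural network. It is a one-neuron linear model trained by gradient descent, so `learning_rate` and `epochs` play the role of the tuned hyperparameters. For a real network you would add axes such as the number of layers or neurons. The data is a hypothetical noiseless line.

```python
import itertools
from typing import Dict, List, Optional, Sequence, Tuple

Data = Tuple[Sequence[float], Sequence[float]]


def train_gd(xs: Sequence[float], ys: Sequence[float],
             learning_rate: float, epochs: int) -> Tuple[float, float]:
    """Fit y ~ w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mse(params: Tuple[float, float], xs: Sequence[float], ys: Sequence[float]) -> float:
    """Mean squared error of the line (w, b) on the given points."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(train: Data, valid: Data,
                grid: Dict[str, List]) -> Tuple[Optional[dict], float]:
    """Try every combination in `grid`; keep the one with the lowest validation MSE."""
    keys = list(grid)
    best_cfg, best_score = None, float("inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        cfg = dict(zip(keys, values))
        score = mse(train_gd(*train, **cfg), *valid)
        if score < best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


if __name__ == "__main__":
    # Hypothetical noiseless data from y = 3x - 2; every 4th point is held out.
    xs = [i / 19 for i in range(20)]
    ys = [3 * x - 2 for x in xs]
    train = ([x for i, x in enumerate(xs) if i % 4], [y for i, y in enumerate(ys) if i % 4])
    valid = ([x for i, x in enumerate(xs) if i % 4 == 0], [y for i, y in enumerate(ys) if i % 4 == 0])
    grid = {"learning_rate": [0.01, 0.1, 0.5], "epochs": [10, 100, 1000]}
    print(grid_search(train, valid, grid))
```

The number of runs grows multiplicatively with each axis you add. That is why random search or Bayesian optimization is often preferred for networks with many hyperparameters. Libraries such as scikit-learn also ship ready-made tools (for example, `GridSearchCV`). Whatever the method, keep a separate test set untouched by tuning so that the final score is not optimistically biased.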

5. Communication is the Key

Surrounded by technical work on all sides, it is easy to underrate the key skill that makes a data science project a success: communication. How you tailor the results for different audiences shows your skill in adapting the solution with varying degrees of technical jargon. Visualizations are worth a million bucks and are super helpful in steering those business discussions to success.

One important point is to be honest about all your findings and then let everyone make the call together. Don’t burden yourself with hitting the bullseye and presenting a golden solution. Call out where your model works and where it fails. When can the business trust the model’s predictions, and with what degree of confidence?
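Both questions can be answered with simple breakdowns of held-out predictions. First, compute accuracy per business segment to show where the model works and where it fails. Second, compute accuracy and coverage when only confident predictions are acted on. This is a minimal sketch for a binary classifier. The segment names, probabilities, and the 0.8 confidence cut-off are hypothetical, and "confidence" is taken to be max(p, 1 − p).

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (segment name, predicted probability of the positive class, true label 0/1)
Record = Tuple[str, float, int]


def accuracy_by_segment(records: List[Record], threshold: float = 0.5) -> Dict[str, float]:
    """Share of correct predictions within each segment."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for segment, prob, label in records:
        predicted = int(prob >= threshold)
        hits[segment] += int(predicted == label)
        totals[segment] += 1
    return {segment: hits[segment] / totals[segment] for segment in totals}


def accuracy_at_confidence(records: List[Record], min_confidence: float) -> Tuple[float, float]:
    """Accuracy and coverage when only confident predictions are acted on.

    Confidence is taken as max(p, 1 - p) for a binary classifier.
    """
    confident = [(p, y) for _, p, y in records if max(p, 1 - p) >= min_confidence]
    if not confident:
        return float("nan"), 0.0
    correct = sum(int(p >= 0.5) == y for p, y in confident)
    return correct / len(confident), len(confident) / len(records)


if __name__ == "__main__":
    # Hypothetical held-out predictions for two customer segments.
    records = [
        ("new_customer", 0.55, 0), ("new_customer", 0.48, 1),
        ("new_customer", 0.62, 1), ("new_customer", 0.40, 1),
        ("returning", 0.92, 1), ("returning", 0.08, 0),
        ("returning", 0.85, 1), ("returning", 0.15, 0),
    ]
    print(accuracy_by_segment(records))
    acc, coverage = accuracy_at_confidence(records, min_confidence=0.8)
    print(f"accuracy {acc:.0%} on the {coverage:.0%} of cases with confidence >= 0.8")
```

In this toy data the model is reliable for returning customers but close to guessing for new ones. A statement like "trust it above 0.8 confidence, which covers half the cases" is something the business can act on directly.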

Essentially, your ability to explain model predictions, together with attention to interpretable AI, will make you a great debugger of your model and will assure the business that the model is in the right hands.
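One model-agnostic way to start explaining a model is permutation importance. Shuffle one feature column at a time and measure how much the score drops. A large drop means the model leans on that feature, and no drop means it ignores it. The sketch below is a from-scratch illustration with a hypothetical rule-based classifier and toy data. scikit-learn provides its own implementation in `sklearn.inspection.permutation_importance`, and other techniques such as SHAP values exist as well.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Classifier = Callable[[Row], int]


def accuracy(model: Classifier, X: Sequence[Row], y: Sequence[int]) -> float:
    """Share of rows the model labels correctly."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Classifier, X: Sequence[Row], y: Sequence[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with only feature j replaced by the shuffled value.
            shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, shuffled, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical model that only looks at feature 0; feature 1 is ignored.
    def model(row: Row) -> int:
        return int(row[0] > 0.5)

    X = [[i / 10, (i * 3 % 10) / 10] for i in range(10)]
    y = [model(row) for row in X]
    print(permutation_importance(model, X, y))
```

Because the toy model never reads feature 1, its importance comes out as exactly zero. This kind of sanity check also helps catch a model that relies on a feature it should not, such as a leaked target.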

Did You Plan for Deployment?

So far we have talked about the model-building process, which turned out to be lengthy in itself. But what do you do with the model once it is waiting to be deployed? That is another chapter in itself. It broadly involves different aspects of MLOps, such as writing unit tests, budgeting for latency, validation datasets, model monitoring and maintenance, and error analysis.

What happens to incoming data also needs a well-laid-out formalism: whether to keep accumulating data, or to archive older data and retrain the model on fresh data.
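A retraining policy needs a signal for when incoming data has drifted away from the training data. One commonly used signal (though not the only one) is the Population Stability Index (PSI). PSI bins a feature, compares the share of reference and recent values in each bin, and sums (a − e) · ln(a / e) across the bins. The sketch below assumes fixed, hand-picked bin edges and hypothetical data. The thresholds often quoted in practice are below 0.1 for little shift and 0.1 to 0.25 for moderate shift, with anything above 0.25 treated as significant. These are rules of thumb, so the 0.25 retraining trigger here is only an illustrative policy.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values falling in each bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    total = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # guard against log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


if __name__ == "__main__":
    edges = [0.2, 0.4, 0.6, 0.8]
    reference = [i / 100 for i in range(100)]                # hypothetical training-time values
    recent = [min(0.99, i / 100 + 0.3) for i in range(100)]  # same feature, shifted upward
    score = psi(reference, recent, edges)
    # Hypothetical policy: retrain on fresh data when PSI exceeds 0.25.
    print(f"PSI = {score:.2f}:", "consider retraining" if score > 0.25 else "stable")
```

Running a check like this per feature on a schedule turns "keep accumulating or retrain?" into a documented, repeatable decision rather than an ad hoc one.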

Vidhi Chugh is an award-winning AI/ML innovation leader and an AI Ethicist. She works at the intersection of data science, product, and research to deliver business value and insights. She is an advocate for data-centric science and a leading expert in data governance with a vision to build trustworthy AI solutions.