What Does ETL Have to Do with Machine Learning?

ETL sits at the base, the foundation, of the process of producing effective machine learning algorithms. Let’s go through the steps that show why ETL matters to machine learning.

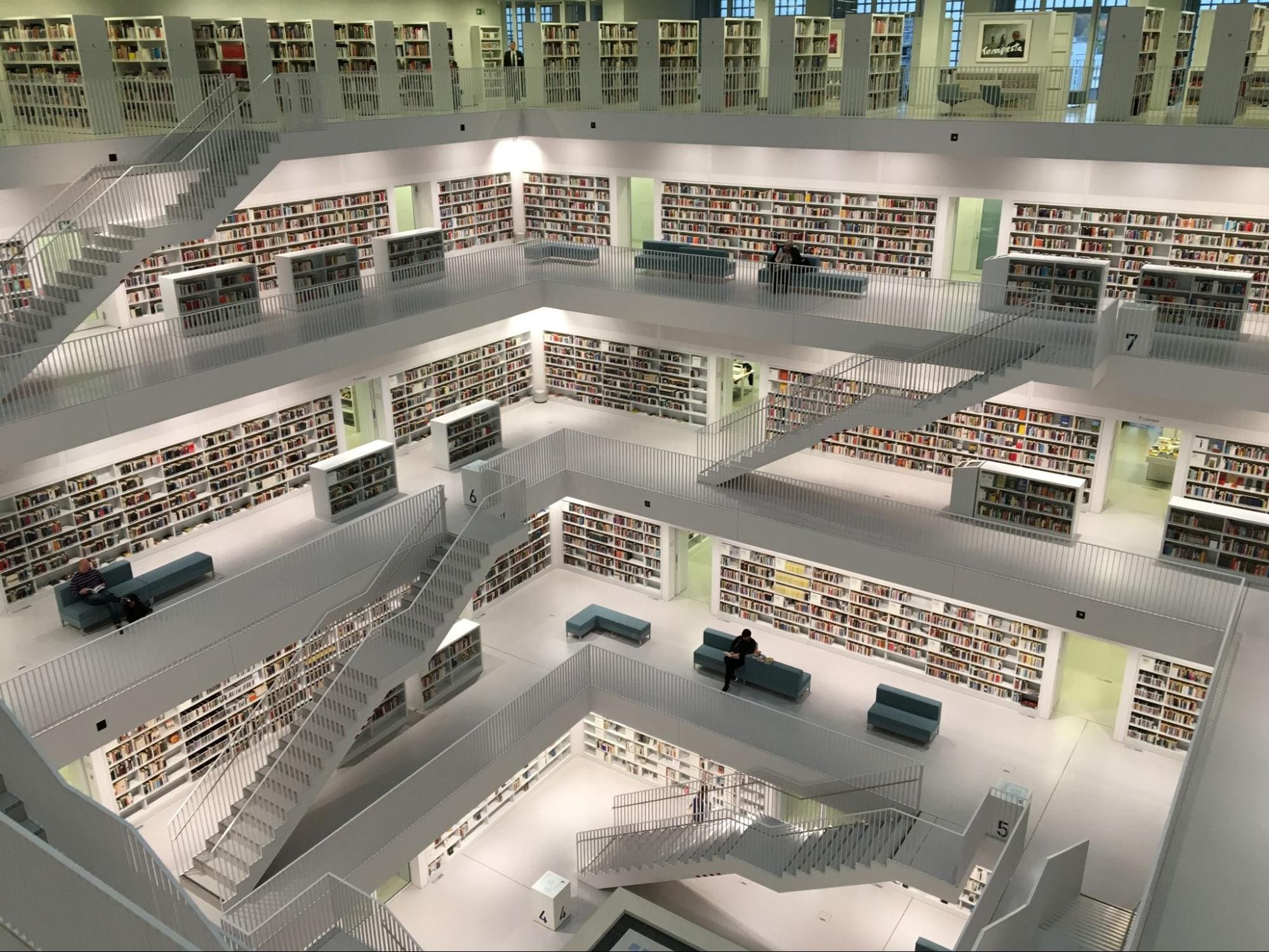

Tobias Fischer via Unsplash

You may have heard ETL mentioned here and there in blogs or YouTube videos. So what does ETL have to do with machine learning?

For those who don’t already know, machine learning is a type of artificial intelligence that learns patterns from data in order to predict outcomes. Machine learning algorithms produce these predictions by learning from historical data and its features.

ETL stands for Extract-Transform-Load. It is the process of moving data from multiple sources into a single centralized database.

Extract:

Your first step is to EXTRACT the data from its original source, which may be another database or an application.

Transform:

As is usually the case when working with data and machine learning algorithms, there is a cleaning phase. During the TRANSFORM stage, you clean up the data, find and resolve any duplicates, and prepare it to be loaded into another database.

Load:

Once your data is in the correct format, it can be loaded into the target database.
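To make the three stages concrete, here is a minimal sketch using only Python’s standard library. The CSV content, the column names, and the `customers` table are made up for illustration, and an in-memory SQLite database stands in for the target database. A real pipeline would read from actual source systems.

```python
import csv
import io
import sqlite3

# Hypothetical source export; in practice this would be a file, an API, or another database.
RAW_CSV = """customer_id,email,signup_date
1, Alice@Example.com ,2023-01-05
2,bob@example.com,2023-02-10
1,alice@example.com,2023-01-05
3,,2023-03-01
"""


def extract(source_text):
    """Extract: read rows from the original source."""
    return list(csv.DictReader(io.StringIO(source_text)))


def transform(rows):
    """Transform: normalise values, drop incomplete rows, remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # skip rows with a missing email
        key = (row["customer_id"], email)
        if key in seen:
            continue  # skip duplicates of a row we already kept
        seen.add(key)
        cleaned.append((int(row["customer_id"]), email, row["signup_date"].strip()))
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(customer_id INTEGER, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for the target database
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT customer_id, email FROM customers ORDER BY customer_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easy to swap the source or the target without touching the cleaning logic.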

Why is ETL important?

Every stage of ETL is important for delivering the end product accurately. Its benefit to machine learning is that it extracts data, cleans it up, and delivers it from Point A to Point B.

However, it does more than that.

Most companies have a lot of data, but it tends to be siloed: it sits in different formats, is inconsistent, and is not shared well with other parts of the business. In that state, it’s basically useless.

We all know what data can do in this day and age - the things it’s been able to create, the problems it has solved, and how it can benefit our future. So why just leave it there to do nothing?

When you combine different datasets in a centralized repository, it provides:

- Context - organizations have more historical data to provide them with context

- Interpretability - with more data, we have a consolidated view and can make better interpretations through analysis and reports.

- Productivity - it eliminates heavy coding processes, which saves time and money and improves productivity

- Accuracy - all the points above improve the overall accuracy of the data and its outputs, which can be essential for complying with regulations and standards.

These stages make the workflow of machine learning algorithms smooth and produce accurate outputs that we can trust.
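As a rough illustration of bringing siloed data together, the sketch below normalises records from two made-up systems into one consolidated view. The field names (`Customer ID`, `cust_id`, `total_spend`) and the date format are assumptions chosen for the example, not taken from any particular product.

```python
from datetime import datetime

# Hypothetical records from two siloed systems with inconsistent formats.
crm_records = [
    {"Customer ID": "001", "Signup": "05/01/2023"},  # DD/MM/YYYY, zero-padded id
    {"Customer ID": "002", "Signup": "10/02/2023"},
]
billing_records = [
    {"cust_id": 1, "total_spend": "120.50"},
    {"cust_id": 2, "total_spend": "80.00"},
    {"cust_id": 3, "total_spend": "15.25"},
]


def normalise_crm(record):
    """Map a CRM record onto the shared schema (integer id, ISO date)."""
    return {
        "customer_id": int(record["Customer ID"]),
        "signup_date": datetime.strptime(record["Signup"], "%d/%m/%Y").date().isoformat(),
    }


def normalise_billing(record):
    """Map a billing record onto the shared schema (integer id, float spend)."""
    return {
        "customer_id": int(record["cust_id"]),
        "total_spend": float(record["total_spend"]),
    }


def consolidate(crm, billing):
    """Merge both sources into one record per customer, keyed by customer_id."""
    combined = {}
    for rec in map(normalise_crm, crm):
        combined.setdefault(rec["customer_id"], {}).update(rec)
    for rec in map(normalise_billing, billing):
        combined.setdefault(rec["customer_id"], {}).update(rec)
    return [combined[key] for key in sorted(combined)]


unified = consolidate(crm_records, billing_records)
for row in unified:
    print(row)
```

Customer 3 appears only in the billing data, so its consolidated record has no `signup_date`. Gaps like this only become visible once the data sits in one place.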

But Why Not Use Cloud Computing?

Yes, we generate and collect a lot of data, and it is growing at such an exponential rate that we cannot physically store all of it in a traditional data warehouse infrastructure. That’s where Cloud Computing has benefited us all.

Cloud Computing has not only allowed us to store large volumes of data but also helped us perform high-speed analytics. Businesses have been able to scale and continue to be innovative since Cloud Computing entered the market.

But your data still needs to be stored in a central repository, regardless of whether that is a traditional data warehouse or the cloud. The aim of ETL is to prepare your data so that it is in the best-suited format for machine learning. If you don’t prepare your data through ETL, there’s no difference between it staying in raw format in a data warehouse and just sitting in the cloud.

ETL and Machine Learning

In order for a machine learning algorithm to be trusted and perform well it needs large amounts of training data. This training data needs to be of good quality and hold features and characteristics that can help to solve the task at hand.

ETL sits at the base of the process of producing effective machine learning algorithms; it is the foundation. The steps below show where it fits.

Collecting the data

Once you have collected the data, whether from an external source, user-generated content, sensors, or elsewhere, the next step is to move and store that data. This is where ETL comes into the picture, alongside other concerns such as infrastructure, pipelines, and structured and unstructured data storage.

Preparing the data

Once the data has been moved and stored in the correct place, the next step is to explore the data and transform it where required. Transforming data is also known as preparing data, and it includes cleaning and error detection.
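Error detection can be as simple as a set of checks run against every row. The sketch below assumes hypothetical `age` and `visit_date` fields and made-up validity rules. The right checks depend entirely on your own data.

```python
from datetime import datetime


def find_errors(row):
    """Return a list of problems found in one row (an empty list means clean)."""
    errors = []

    age = row.get("age")
    if age is None:
        errors.append("age is missing")
    elif not 0 <= age <= 120:  # assumed plausible range for this example
        errors.append("age out of range")

    try:
        datetime.strptime(str(row.get("visit_date") or ""), "%Y-%m-%d")
    except ValueError:
        errors.append("visit_date is not YYYY-MM-DD")

    return errors


# Hypothetical rows that have already been moved into storage.
rows = [
    {"age": 34, "visit_date": "2023-04-01"},
    {"age": -5, "visit_date": "2023-04-02"},
    {"age": None, "visit_date": "04/03/2023"},
]

clean = [row for row in rows if not find_errors(row)]
rejected = [(row, find_errors(row)) for row in rows if find_errors(row)]

print(len(clean), "clean row(s)")
for row, problems in rejected:
    print(row, "->", problems)
```

Keeping the rejected rows together with their reasons, rather than silently dropping them, makes it easier to trace problems back to the source.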

Labeling the data

Once the data has been prepared and is in a good format - we can then move on to labeling the data for input in machine learning algorithms. This will be used as training data, where we will learn more about the features of the data points and perform analytics to grasp a better understanding.
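In its simplest form, labeling means attaching a known answer to each prepared row. The sketch below assumes a hypothetical lookup of human-provided labels keyed by row id. Rows without a label are set aside rather than guessed.

```python
# Hypothetical prepared rows and human-provided annotations keyed by id.
prepared = [
    {"id": 1, "text": "great product"},
    {"id": 2, "text": "arrived broken"},
    {"id": 3, "text": "okay"},
]
annotations = {1: "positive", 2: "negative"}


def attach_labels(rows, labels):
    """Split rows into labeled training examples and rows still awaiting a label."""
    labeled, unlabeled = [], []
    for row in rows:
        if row["id"] in labels:
            labeled.append({**row, "label": labels[row["id"]]})
        else:
            unlabeled.append(row)
    return labeled, unlabeled


training_data, needs_labeling = attach_labels(prepared, annotations)
print(training_data)
print(needs_labeling)
```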

Learning the data

This is where machine learning comes into play. We feed the labeled data into machine learning algorithms so that they can learn the features of each data point and the relationships between them. During this phase, there will be a lot of experimentation and A/B testing to understand the limitations of the data and the model’s performance.

As you can see, ETL is one of the first steps in the machine learning process, which is why I referred to it as the foundation. If you skip ETL, you will find yourself going back and forth to rectify errors and problems in your data, which would otherwise produce inaccurate outputs once fed into the machine learning algorithm.
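To show what learning from labeled data looks like at the smallest possible scale, here is a toy nearest-centroid classifier in plain Python. It is a deliberately simple stand-in rather than a recommended algorithm, and the feature values and the `low`/`high` labels are invented for the example.

```python
from statistics import mean

# Hypothetical labeled training data: (feature vector, label).
labeled = [
    ((1.0, 1.2), "low"),
    ((0.8, 1.0), "low"),
    ((1.1, 0.9), "low"),
    ((4.0, 4.2), "high"),
    ((3.8, 4.1), "high"),
    ((4.2, 3.9), "high"),
]
# Examples held back from training, used only to check performance.
holdout = [((0.9, 1.1), "low"), ((4.1, 4.0), "high")]


def fit_centroids(examples):
    """Learn one centroid (the mean feature vector) per label."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }


def predict(centroids, features):
    """Predict the label whose centroid is closest to the given features."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


centroids = fit_centroids(labeled)
correct = sum(predict(centroids, x) == y for x, y in holdout)
accuracy = correct / len(holdout)
print(accuracy)
```

Checking accuracy on held-out examples is the simplest form of the experimentation described above. If the labeled data were dirty, this is where it would show up.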

ETL vs ELT

You may have also heard of ELT, which has the same stages in a different order: Extract-Load-Transform. Although the two use the same words, they differ.

ETL transforms data on a separate processing server, so raw data is never transferred into the data warehouse. Transforming data on a separate processing server makes data ingestion slower.

ELT, by contrast, transfers raw data to the data warehouse, and the data is transformed there. Because ELT does not use a separate processing server, data ingestion is faster.
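The difference is easier to see side by side. In the sketch below, an in-memory SQLite database plays the role of the data warehouse and plain Python plays the role of the separate processing server. Both are stand-ins for illustration, and the table names and rows are hypothetical.

```python
import sqlite3

# Hypothetical raw source rows: untrimmed names, scores stored as text, one duplicate.
raw_rows = [(" Alice ", "42"), ("bob", "17"), (" Alice ", "42")]


def run_etl(conn, rows):
    """ETL: transform outside the warehouse, then load only the cleaned result."""
    cleaned = sorted({(name.strip().lower(), int(score)) for name, score in rows})
    conn.execute("CREATE TABLE scores_etl (name TEXT, score INTEGER)")
    conn.executemany("INSERT INTO scores_etl VALUES (?, ?)", cleaned)


def run_elt(conn, rows):
    """ELT: load the raw rows as-is, then transform inside the warehouse with SQL."""
    conn.execute("CREATE TABLE raw_scores (name TEXT, score TEXT)")
    conn.executemany("INSERT INTO raw_scores VALUES (?, ?)", rows)
    conn.execute(
        "CREATE TABLE scores_elt AS "
        "SELECT DISTINCT lower(trim(name)) AS name, CAST(score AS INTEGER) AS score "
        "FROM raw_scores"
    )


conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
run_etl(conn, raw_rows)
run_elt(conn, raw_rows)

etl_result = conn.execute("SELECT name, score FROM scores_etl ORDER BY name").fetchall()
elt_result = conn.execute("SELECT name, score FROM scores_elt ORDER BY name").fetchall()
raw_count = conn.execute("SELECT COUNT(*) FROM raw_scores").fetchone()[0]
print(etl_result, elt_result, raw_count)
```

Both routes end with the same cleaned table. The difference is that the ELT route also leaves the raw rows in the warehouse, while the ETL route never loads them.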

If you would like to know more about the difference between ETL and ELT, click on this link.

Conclusion

ETL is used effectively in a variety of data management tasks, big data, Hadoop, and more. When considering ETL, you need to ask:

- What data sources do you need to extract?

- What transformations do you need to carry out on this data?

- Where do you plan to load the data?

This was an overview of what ETL has to do with machine learning, and I hope I answered your question.

Nisha Arya is a Data Scientist and Freelance Technical Writer. She is particularly interested in providing Data Science career advice or tutorials and theory based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is/can benefit the longevity of human life. A keen learner, seeking to broaden her tech knowledge and writing skills, whilst helping guide others.