Implementing AdaBoost in Scikit-Learn


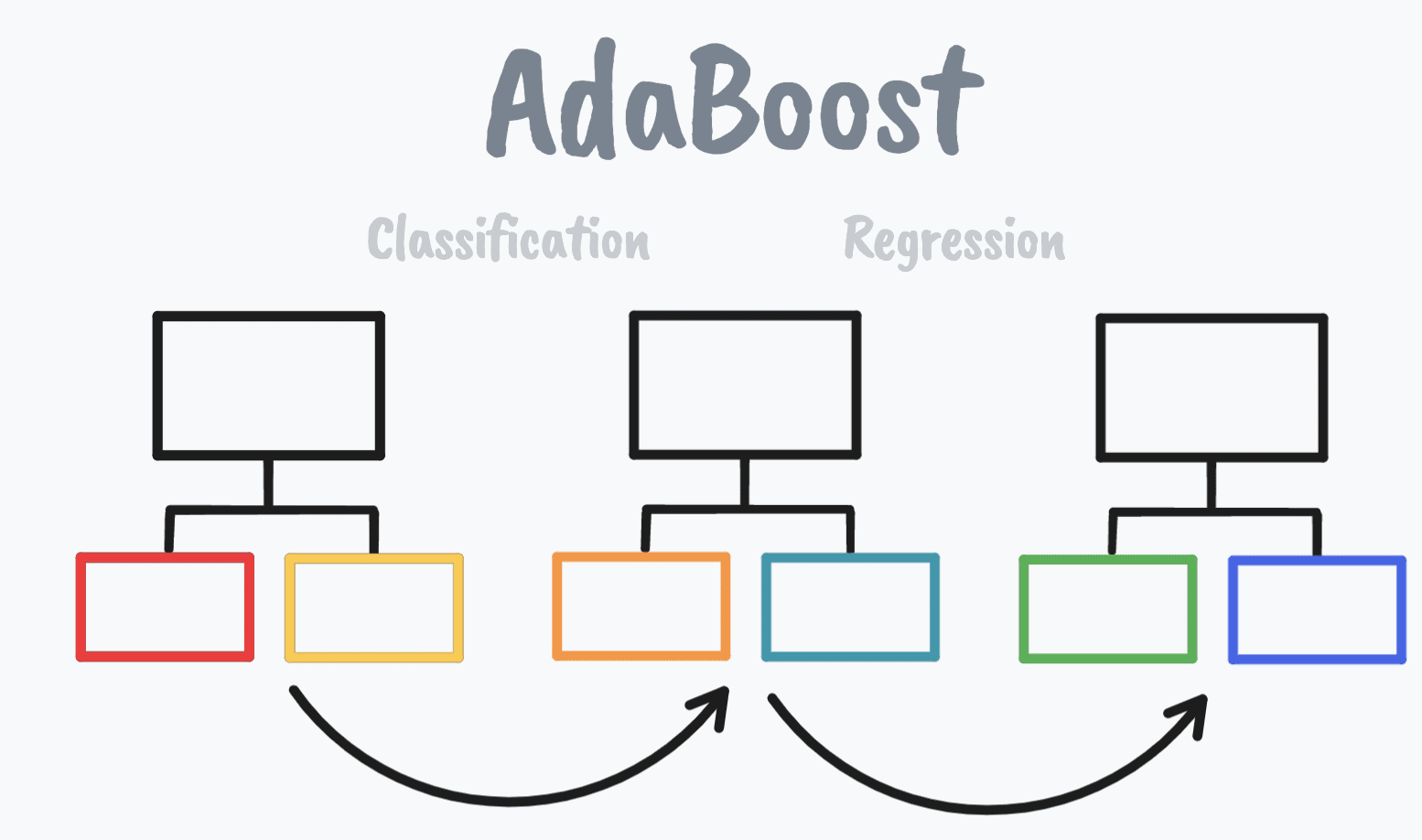

Source: Author's image

What is AdaBoost?

The AdaBoost algorithm, short for Adaptive Boosting, is a boosting technique used as an ensemble method in machine learning. It is called Adaptive Boosting because the weights are reassigned to each instance after every round, with higher weights given to instances that were classified incorrectly. In this way the algorithm 'adapts' to its own mistakes.

If you want to learn more about Ensemble Methods and when to use them, read this article: When Would Ensemble Techniques be a Good Choice?

Boosting is an ensemble machine learning method used to reduce errors in predictive data analysis. It does this by training weak learners one after another and combining their predictions. Boosting started out as a theoretical question (could a set of weak learners be combined into a single strong learner?) before it became a practical technique.

If you would like to know more about Boosting, read this article: Boosting Machine Learning Algorithms: An Overview

Terminology

What is a weak learner? A weak learner is a simple model with only a little skill: it performs slightly better than random chance.

What is a Stump? In tree-based algorithms, a stump is a decision tree with a single split: one root node and two leaves. The weak learners used in AdaBoost are almost always stumps.
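In scikit-learn, a stump can be built as a DecisionTreeClassifier limited to max_depth=1, which is the same weak learner the classification example later in this article passes to AdaBoostClassifier. The sketch below fits one on a synthetic dataset (an assumption for illustration) and checks that it really has a single split and two leaves.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic binary classification data, assumed here only for illustration
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

# max_depth=1 limits the tree to a single split, i.e. a stump
stump = DecisionTreeClassifier(max_depth=1, random_state=0)
stump.fit(X, y)

print("depth:", stump.get_depth())       # one split -> depth 1
print("leaves:", stump.get_n_leaves())   # two leaves
print("training accuracy: %.3f" % stump.score(X, y))
```

On its own a stump can only draw one threshold on one feature, which is why it is a natural weak learner.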

How does AdaBoost work?

During training, AdaBoost builds a sequence of short decision trees (the weak learners). After each tree is trained, the instances it classified incorrectly are given higher weights, so the next tree prioritizes getting them right. This repeats for a set number of rounds, with each new model trying to correct the mistakes made by the models before it. The final prediction is a weighted vote of all the trees.
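The loop described above can be sketched from scratch. This is a minimal teaching version of classic binary (discrete) AdaBoost, assuming labels in {-1, +1} and stumps as weak learners; the function names fit_adaboost and predict_adaboost are hypothetical, and this is not scikit-learn's internal implementation.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_adaboost(X, y, n_rounds=50):
    """Hypothetical helper: discrete AdaBoost for labels y in {-1, +1}."""
    n_samples = X.shape[0]
    weights = np.full(n_samples, 1.0 / n_samples)  # every instance starts equal
    stumps, alphas = [], []
    for _ in range(n_rounds):
        # Fit a stump to the currently weighted data
        stump = DecisionTreeClassifier(max_depth=1)
        stump.fit(X, y, sample_weight=weights)
        pred = stump.predict(X)

        # Weighted error: total weight of the misclassified instances
        err = np.sum(weights[pred != y])
        err = np.clip(err, 1e-10, 1 - 1e-10)  # guard against log(0)

        # "Amount of say": stumps with lower error get a larger vote
        alpha = 0.5 * np.log((1 - err) / err)

        # Misclassified instances (y * pred == -1) are up-weighted,
        # correct ones are down-weighted; then renormalize to sum to 1
        weights = weights * np.exp(-alpha * y * pred)
        weights = weights / weights.sum()

        stumps.append(stump)
        alphas.append(alpha)
    return stumps, np.array(alphas)


def predict_adaboost(stumps, alphas, X):
    """Hypothetical helper: weighted vote of all stumps; the sign is the class."""
    scores = sum(alpha * stump.predict(X) for stump, alpha in zip(stumps, alphas))
    return np.where(scores >= 0, 1, -1)


# Usage on synthetic data (an assumption for illustration)
X, y01 = make_classification(n_samples=500, n_features=10, random_state=0)
y = np.where(y01 == 1, 1, -1)  # relabel {0, 1} as {-1, +1}
stumps, alphas = fit_adaboost(X, y, n_rounds=50)
train_acc = np.mean(predict_adaboost(stumps, alphas, X) == y)
print("training accuracy: %.3f" % train_acc)
```

Passing sample_weight to each stump is what makes later stumps focus on the instances earlier ones got wrong.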

The ideas behind AdaBoost:

- Weak learners are combined to make classifications

- Some stumps have more say in the classification than others

- Each stump takes the previous stumps' mistakes into consideration
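These three ideas can be written down for the classic binary formulation, assuming labels $y_i \in \{-1, +1\}$, stumps $h_m$, and instance weights initialised to $w_i = 1/n$. The weighted error $\varepsilon_m$ sets each stump's amount of say $\alpha_m$, which in turn sets how strongly the weights of its mistakes are increased ($Z_m$ renormalises the weights to sum to 1). scikit-learn's own implementation uses a multi-class variant (SAMME) whose formulas differ in detail.

```latex
\begin{aligned}
\varepsilon_m &= \sum_{i=1}^{n} w_i \,\mathbf{1}\!\left[h_m(x_i) \neq y_i\right] \\
\alpha_m &= \tfrac{1}{2}\ln\!\left(\frac{1 - \varepsilon_m}{\varepsilon_m}\right) \\
w_i &\leftarrow \frac{w_i \exp\!\left(-\alpha_m\, y_i\, h_m(x_i)\right)}{Z_m} \\
H(x) &= \operatorname{sign}\!\left(\sum_{m=1}^{M} \alpha_m\, h_m(x)\right)
\end{aligned}
```

For example, a stump with weighted error $\varepsilon_m = 0.25$ gets $\alpha_m = \tfrac{1}{2}\ln 3 \approx 0.549$, while a stump no better than chance ($\varepsilon_m = 0.5$) gets $\alpha_m = 0$ and has no say at all.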

AdaBoost and Scikit-Learn

Scikit-Learn, the Python machine learning library, implements AdaBoost in its sklearn.ensemble module. AdaBoost can be used for both classification and regression problems, so let’s look at how Scikit-Learn handles each.

Classification

sklearn.ensemble.AdaBoostClassifier

The AdaBoost classifier starts by fitting a classifier on the original dataset, then fits additional copies of the classifier on the same dataset, with the weights of incorrectly classified instances adjusted so that later classifiers focus more on the difficult cases.

These are the parameters:

```python
sklearn.ensemble.AdaBoostClassifier(base_estimator=None, *, n_estimators=50,
                                    learning_rate=1.0, algorithm='SAMME.R',
                                    random_state=None)
```

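You can learn more about these parameters and the fitted model's attributes in the scikit-learn documentation for AdaBoostClassifier. Note that in scikit-learn 1.2 the base_estimator parameter was renamed estimator (the old name has since been removed), and the 'SAMME.R' algorithm has been deprecated, so recent releases use 'SAMME'. Passing the base estimator positionally, as in the example below, works with either parameter name.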
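The scikit-learn documentation describes a trade-off between learning_rate (which scales each classifier's contribution) and n_estimators. A minimal sketch of exploring it, assuming a synthetic dataset and three arbitrarily chosen learning rates, might look like this:

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic data, assumed here only for illustration
X, y = make_classification(n_samples=500, n_features=10, random_state=0)

results = {}
for learning_rate in (0.1, 0.5, 1.0):
    clf = AdaBoostClassifier(
        DecisionTreeClassifier(max_depth=1),  # weak learner, passed positionally
        n_estimators=100,
        learning_rate=learning_rate,  # smaller values shrink each stump's vote
        random_state=0,
    )
    # Mean accuracy over 5 cross-validation folds
    results[learning_rate] = cross_val_score(clf, X, y, cv=5).mean()
    print("learning_rate=%.1f  accuracy=%.3f" % (learning_rate, results[learning_rate]))
```

A smaller learning rate usually needs more estimators to reach the same accuracy, so the two parameters are best tuned together.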

Let’s see it in an example:

Imports:

```python
from sklearn.ensemble import AdaBoostClassifier
from sklearn.tree import DecisionTreeClassifier
from sklearn.datasets import load_breast_cancer
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.metrics import confusion_matrix
from sklearn.preprocessing import LabelEncoder
```

Load dataset:

```python
breast_cancer = load_breast_cancer()
X = pd.DataFrame(breast_cancer.data, columns=breast_cancer.feature_names)
y = pd.Categorical.from_codes(breast_cancer.target, breast_cancer.target_names)
```

Encode malignant as 1 and benign as 0 (LabelEncoder sorts the class names alphabetically, so "benign" becomes 0):

```python
encoder = LabelEncoder()
binary_encoded_y = pd.Series(encoder.fit_transform(y))
```

Training/Test set:

```python
train_X, test_X, train_y, test_y = train_test_split(X, binary_encoded_y, random_state=1)
```

Fit our model:

```python
classifier = AdaBoostClassifier(
    DecisionTreeClassifier(max_depth=1),  # stumps as the weak learners
    n_estimators=200
)
classifier.fit(train_X, train_y)
```

Make prediction:

```python
predictions = classifier.predict(test_X)
```

Evaluate the model:

```python
confusion_matrix(test_y, predictions)
```

Output:

```
array([[86,  2],
       [ 3, 52]])
```

Code source: Cory Maklin
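To see how accuracy develops as stumps are added, AdaBoostClassifier provides staged_score, which yields the score after each boosting iteration. The sketch below is an assumption-laden variation on the example above: it loads the data with return_X_y=True and keeps scikit-learn's original labels (0 = malignant) instead of re-encoding them, which does not change accuracy.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
train_X, test_X, train_y, test_y = train_test_split(X, y, random_state=1)

classifier = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1), n_estimators=200)
classifier.fit(train_X, train_y)

# staged_score yields test accuracy after 1, 2, ..., n fitted stumps
staged = list(classifier.staged_score(test_X, test_y))
checkpoints = [n for n in (1, 10, 50, 100, 200) if n <= len(staged)]
for n in checkpoints:
    print("%3d stumps: accuracy %.3f" % (n, staged[n - 1]))
```

The checkpoints are filtered against len(staged) because boosting can stop early, in which case fewer than n_estimators stages exist.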

Regression

sklearn.ensemble.AdaBoostRegressor

The AdaBoost regressor starts by fitting a regressor on the original dataset, then fits additional copies of the regressor on the same dataset, with the instance weights adjusted according to the error of the current prediction.

These are the parameters:

```python
sklearn.ensemble.AdaBoostRegressor(base_estimator=None, *, n_estimators=50,
                                   learning_rate=1.0, loss='linear',
                                   random_state=None)
```

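If you would like to know more about these parameters and their attributes, see the scikit-learn documentation for AdaBoostRegressor. As with the classifier, base_estimator is called estimator in scikit-learn 1.2 and later.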
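scikit-learn's AdaBoostRegressor implements the AdaBoost.R2 algorithm, and the loss parameter chooses how each sample's error is turned into a loss between 0 and 1. The sketch below is a simplified illustration of that idea, assuming learning_rate=1 and omitting details such as the resampling step; r2_sample_losses and r2_update are hypothetical helper names, not scikit-learn functions, and the four predictions are made-up numbers.

```python
import numpy as np


def r2_sample_losses(y_true, y_pred, loss="linear"):
    """Hypothetical helper: per-sample losses in [0, 1]."""
    error = np.abs(y_pred - y_true)
    max_error = error.max()
    if max_error > 0:
        error = error / max_error  # normalize by the largest error
    if loss == "square":
        error = error ** 2
    elif loss == "exponential":
        error = 1.0 - np.exp(-error)
    return error


def r2_update(weights, losses):
    """Hypothetical helper: one AdaBoost.R2 reweighting step (learning_rate = 1)."""
    avg_loss = np.sum(weights * losses)             # weighted average loss
    beta = avg_loss / (1.0 - avg_loss)              # < 1 while avg_loss < 0.5
    estimator_weight = np.log(1.0 / beta)           # this regressor's say
    new_weights = weights * beta ** (1.0 - losses)  # low-loss samples shrink most
    return new_weights / new_weights.sum(), estimator_weight


y_true = np.array([3.0, 5.0, 2.0, 7.0])
y_pred = np.array([2.5, 5.0, 4.0, 6.0])  # absolute errors: 0.5, 0, 2, 1
for loss in ("linear", "square", "exponential"):
    print(loss, np.round(r2_sample_losses(y_true, y_pred, loss), 3))

weights, say = r2_update(np.full(4, 0.25), r2_sample_losses(y_true, y_pred))
print("new weights:", np.round(weights, 3), "estimator weight: %.3f" % say)
```

After the update, the sample with the largest error carries the most weight, so the next regressor concentrates on it. In AdaBoost.R2, boosting stops if the average loss reaches 0.5.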

Let’s see it in an example:

```python
# evaluate adaboost ensemble for regression
from numpy import mean
from numpy import std
from sklearn.datasets import make_regression
from sklearn.model_selection import cross_val_score
from sklearn.model_selection import RepeatedKFold
from sklearn.ensemble import AdaBoostRegressor

# define dataset
X, y = make_regression(n_samples=1000, n_features=20, n_informative=15,
                       noise=0.1, random_state=6)
# define the model
model = AdaBoostRegressor()
# evaluate the model with 10-fold cross-validation repeated 3 times
cv = RepeatedKFold(n_splits=10, n_repeats=3, random_state=1)
n_scores = cross_val_score(model, X, y, scoring='neg_mean_absolute_error',
                           cv=cv, n_jobs=-1, error_score='raise')
# report performance (scores are negated MAE, so the printed mean is negative)
print('MAE: %.3f (%.3f)' % (mean(n_scores), std(n_scores)))
```

Code source: MachineLearningMastery
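The example above uses the default loss='linear'. A minimal sketch for comparing the three loss options on a held-out split, assuming the same synthetic dataset and an arbitrary train/test split, could look like this:

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import AdaBoostRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

# Same synthetic dataset as the example above
X, y = make_regression(n_samples=1000, n_features=20, n_informative=15,
                       noise=0.1, random_state=6)
train_X, test_X, train_y, test_y = train_test_split(X, y, random_state=1)

maes = {}
for loss in ("linear", "square", "exponential"):
    model = AdaBoostRegressor(loss=loss, random_state=1)
    model.fit(train_X, train_y)
    # Positive MAE on the held-out test set
    maes[loss] = mean_absolute_error(test_y, model.predict(test_X))
    print("%-12s test MAE: %.3f" % (loss, maes[loss]))
```

Which loss works best depends on the data; a single split like this is only a rough guide, and cross-validation as in the example above gives a steadier estimate.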

Wrapping it up

If you want to learn more about ensemble methods and how to make better predictions with bagging, boosting, and stacking, check out the MachineLearningMastery book: Ensemble Learning Algorithms With Python

Josh Starmer, the statistics and machine learning guru, helped me better understand AdaBoost through this video: AdaBoost, Clearly Explained

Nisha Arya is a Data Scientist and Freelance Technical Writer. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is benefiting, or could benefit, the longevity of human life. A keen learner, she seeks to broaden her tech knowledge and writing skills while helping guide others.