Free From Google: Generative AI Learning Path

Want to stay up to date on Generative AI? Check out these free courses and resources from Google Cloud.

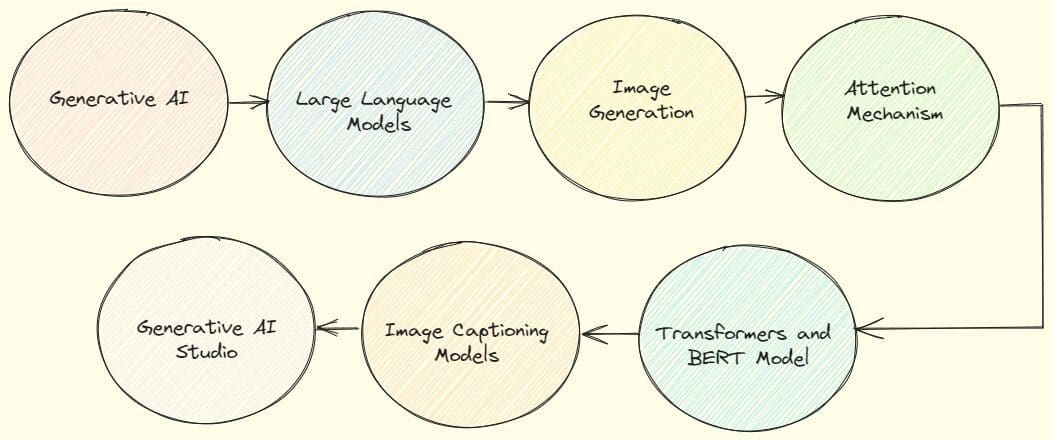

Illustration by Author

Are you interested in discovering the potential of Generative AI models and their applications? Luckily, Google Cloud has released the Generative AI Learning Path, a collection of free courses that range from the basic concepts of Generative AI to more sophisticated tools, such as Generative AI Studio, which lets you build your own customized generative AI models.

This article explores seven of the available courses, which will help you understand the concepts behind the Large Language Models that surround us every day and create new AI solutions. Let’s get started!

1. Introduction to Generative AI

Course link: Introduction to Generative AI

This first course is an introduction to Generative AI by Dr. Gwendolyn Stripling, an AI Technical Curriculum Developer at Google Cloud. It teaches you what Generative AI is and how it’s applied. It begins with the basic concepts of data science (AI, Machine Learning, Deep Learning) and how Generative AI differs from these disciplines. Moreover, it explains the key concepts that surround Generative AI, such as transformers, hallucinations and Large Language Models, with very intuitive illustrations.

Video duration: 22 minutes

Lecturer: Gwendolyn Stripling

Suggested readings:

- Ask a Techspert: What is generative AI?

- Build new generative AI powered search & conversational experiences with Gen App Builder

- The implications of Generative AI for businesses

2. Introduction to Large Language Models

Course link: Introduction to Large Language Models

This second course introduces Large Language Models at a high level. In particular, it gives examples of LLM applications, such as text classification, question answering and document summarization. Finally, it shows how Google’s Generative AI development tools let you build your own applications with no code.

Video duration: 15 minutes

Lecturer: John Ewald

Suggested readings:

- NLP's ImageNet moment has arrived

- Google Cloud supercharges NLP with large language models

- LaMDA: our breakthrough conversation technology

3. Introduction to Image Generation

Course link: Introduction to Image Generation

This third course focuses on diffusion models, a family of models that generate images. It places them alongside other promising image generation approaches, such as Variational Autoencoders, Generative Adversarial Networks and Autoregressive Models.

It also presents the use cases, which fall into two categories: unconditioned generation and conditioned generation. The first includes human face synthesis and super-resolution, while examples of conditioned generation are generating images from a text prompt, image inpainting and text-guided image-to-image.
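To give a feel for what a diffusion model works with, here is a minimal sketch of the forward (noising) step in plain Python. It is not taken from the course: the four-value "image", the noise level `beta` and the number of steps are hypothetical choices for illustration, and real models operate on full image tensors and also learn the reverse, denoising direction.

```python
import math
import random

def forward_diffusion_step(pixels, beta, rng):
    """One noising step: x_t = sqrt(1 - beta) * x_(t-1) + sqrt(beta) * noise."""
    keep = math.sqrt(1.0 - beta)   # how much of the previous image survives
    spread = math.sqrt(beta)       # how much Gaussian noise is mixed in
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]

rng = random.Random(0)             # fixed seed so runs are repeatable
image = [1.0, -1.0, 0.5, -0.5]     # hypothetical 4-pixel "image"

history = [image]
noisy = image
for _ in range(200):               # hypothetical number of steps
    noisy = forward_diffusion_step(noisy, beta=0.05, rng=rng)
    history.append(noisy)

print("start:", history[0])
print("after 200 steps:", [round(v, 3) for v in history[-1]])
```

After enough steps the values look like pure noise. Generation runs the process the other way, starting from noise and removing a little of it at each step with a trained network.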

Video duration: 9 minutes

Lecturer: Kyle Steckler

4. Attention Mechanism

Course link: Attention Mechanism

In this short course, you will learn more about the attention mechanism, a very important concept behind transformers and Large Language Models. It has improved performance on tasks such as machine translation, text summarization and question answering. In particular, the course shows how the attention mechanism works in a machine translation task.
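The core idea of attention can be sketched in a few lines of plain Python. This is a simplified dot-product variant, not the course's own code: the two-dimensional hidden states below are hypothetical, and real translation models use learned, much larger vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(decoder_state, encoder_states):
    """Dot-product attention: score each source state, normalize, take a weighted sum."""
    scores = [sum(d * e for d, e in zip(decoder_state, state))
              for state in encoder_states]
    weights = softmax(scores)
    dim = len(decoder_state)
    context = [sum(w * state[i] for w, state in zip(weights, encoder_states))
               for i in range(dim)]
    return weights, context

# Hypothetical hidden states for a three-word source sentence.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Hypothetical decoder state while producing one target word.
decoder_state = [2.0, 0.0]

weights, context = attend(decoder_state, encoder_states)
print("attention weights:", [round(w, 3) for w in weights])
print("context vector:", [round(c, 3) for c in context])
```

The weights show which source words the decoder focuses on for the current output word. Here the first and third source states align best with the decoder state, so they receive most of the weight.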

Video duration: 5 minutes

Lecturer: Sanjana Reddy

5. Transformer Models and BERT Model

Course link: Transformer Models and BERT Model

This course covers the transformer architecture, which underlies the BERT model. After explaining the transformer, it gives an overview of BERT and how it’s applied to solve different tasks, such as single-sentence classification and question answering.

Unlike the previous courses, the theory is accompanied by a lab, which requires prior knowledge of Python and TensorFlow.
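As a rough illustration of how the two tasks mentioned above differ at the input level, the sketch below packs text the way BERT-style models expect: a `[CLS]` token at the start, `[SEP]` tokens as separators, and segment IDs that mark which part each token belongs to. The helper names `pack_single` and `pack_pair` are hypothetical, and splitting on whitespace is a simplifying assumption; BERT itself uses a WordPiece tokenizer.

```python
def pack_single(sentence):
    """Single-sentence classification input: [CLS] tokens [SEP], all in segment 0."""
    tokens = ["[CLS]"] + sentence.lower().split() + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    return tokens, segment_ids

def pack_pair(question, passage):
    """Question answering input: [CLS] question [SEP] passage [SEP]."""
    first = ["[CLS]"] + question.lower().split() + ["[SEP]"]
    second = passage.lower().split() + ["[SEP]"]
    # Segment 0 marks the question, segment 1 marks the passage.
    return first + second, [0] * len(first) + [1] * len(second)

single_tokens, single_segments = pack_single("Great course")
pair_tokens, pair_segments = pack_pair("Who teaches it", "Sanjana Reddy teaches it")

print(single_tokens, single_segments)
print(pair_tokens, pair_segments)
```

For classification, the model's output at the `[CLS]` position is typically fed to a classifier. For question answering, the model predicts where the answer starts and ends within the passage segment.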

Video duration: 22 minutes

Lecturer: Sanjana Reddy

Suggested readings:

- Attention Is All You Need

- Transformer: A Novel Neural Network Architecture for Language Understanding

6. Create Image Captioning Models

Course link: Create Image Captioning Models

This course explains image captioning models, which are generative models that take images as input and produce text captions. They use an encoder-decoder structure, an attention mechanism and a transformer to predict a caption for a given image. Like the previous course, it includes a lab to put the theory into practice. It is again aimed at data professionals with prior knowledge of Python and TensorFlow.

Video duration: 29 minutes

Lecturer: Takumi Ohyama

7. Introduction to Generative AI Studio

Course link: Introduction to Generative AI Studio

This last course introduces and explores Generative AI Studio. It starts by explaining again what Generative AI is and its use cases, such as code generation, information extraction and virtual assistance. After giving an overview of these core concepts, Google Cloud shows the tools that help you solve Generative AI tasks even without an AI background. One of these tools is Vertex AI, a platform that lets you manage the machine learning lifecycle, from building a model to deploying it. This end-to-end platform includes two products, Generative AI Studio and Model Garden. The course focuses on Generative AI Studio, which lets you easily build generative models with little or no code.

Video duration: 15 minutes

Final Thoughts

I hope you have found this quick overview of the Generative AI courses provided by Google Cloud useful. If you don’t know where to start in understanding the core concepts of Generative AI, this path takes you from the fundamentals to hands-on tools. If you already have a machine learning background, there are surely models and use cases you can discover from one of these courses. Do you know other free courses about Generative AI? Drop them in the comments if you have insightful suggestions.

Eugenia Anello is currently a research fellow at the Department of Information Engineering of the University of Padova, Italy. Her research project is focused on Continual Learning combined with Anomaly Detection.