Exploring Neural Networks

Unlocking the power of AI: a guide to neural networks and their applications.

Imagine a machine thinking, learning, and adapting like the human brain and discovering hidden patterns within data.

This technology is the Neural Network (NN): an algorithm that mimics aspects of human cognition. We'll explore what NNs are and how they function below.

In this article, I'll explain the fundamental aspects of Neural Networks: their structure, types, real-life applications, and the key terms that define how they operate.

What is a Neural Network?

Source: vitalflux.com

Neural Networks (NNs) are algorithms that try to find relationships within data, imitating the way the human brain operates in order to "learn" from that data.

Neural networks are often confused with deep learning and machine learning, so it is worth explaining these terms first. Let’s start.

Neural Network vs. Deep Learning vs. Machine Learning

Neural Networks form the foundation of Deep Learning, which is a subset of Machine Learning. Machine Learning models learn from data and make predictions. Deep Learning goes further by stacking many neural network layers, which lets it process huge amounts of data and recognize complex patterns.

If you want to learn more about Machine Learning algorithms, read this one.

Moreover, these neural networks have become integral parts of many fields, serving as the backbone of numerous modern technologies, which we will see in later sections. These applications range from face recognition to natural language processing.

Let's explore some common areas where Neural Networks play a vital role in improving daily life.

Types of Neural Networks

Real-world applications make Neural Networks easier to understand. Across industries, they are replacing traditional methods with more accurate and efficient solutions.

Let's look at the main types of Neural Networks, along with examples of how each one drives innovation and transforms everyday experiences.

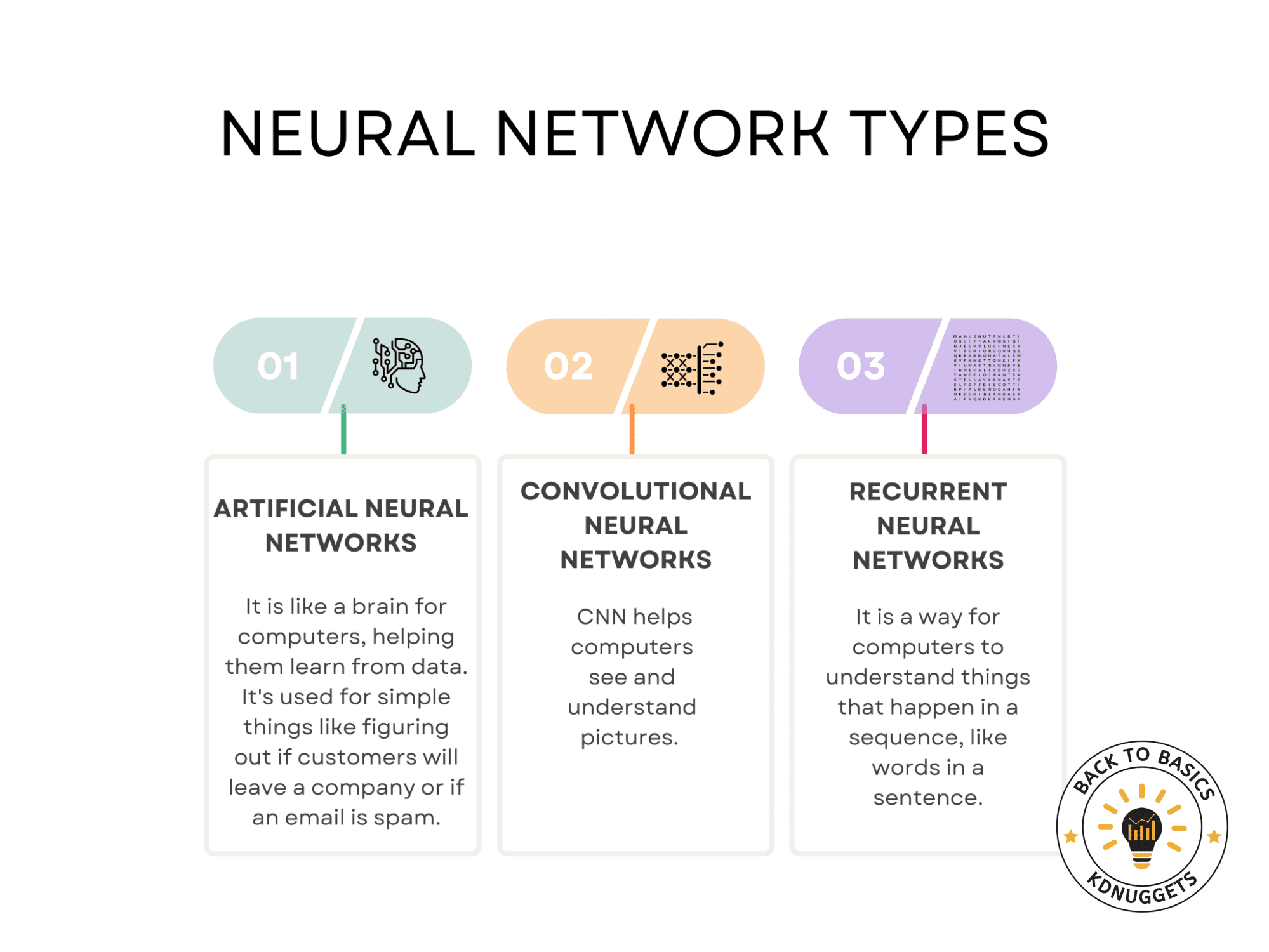

Image by Author

ANN (Artificial Neural Networks):

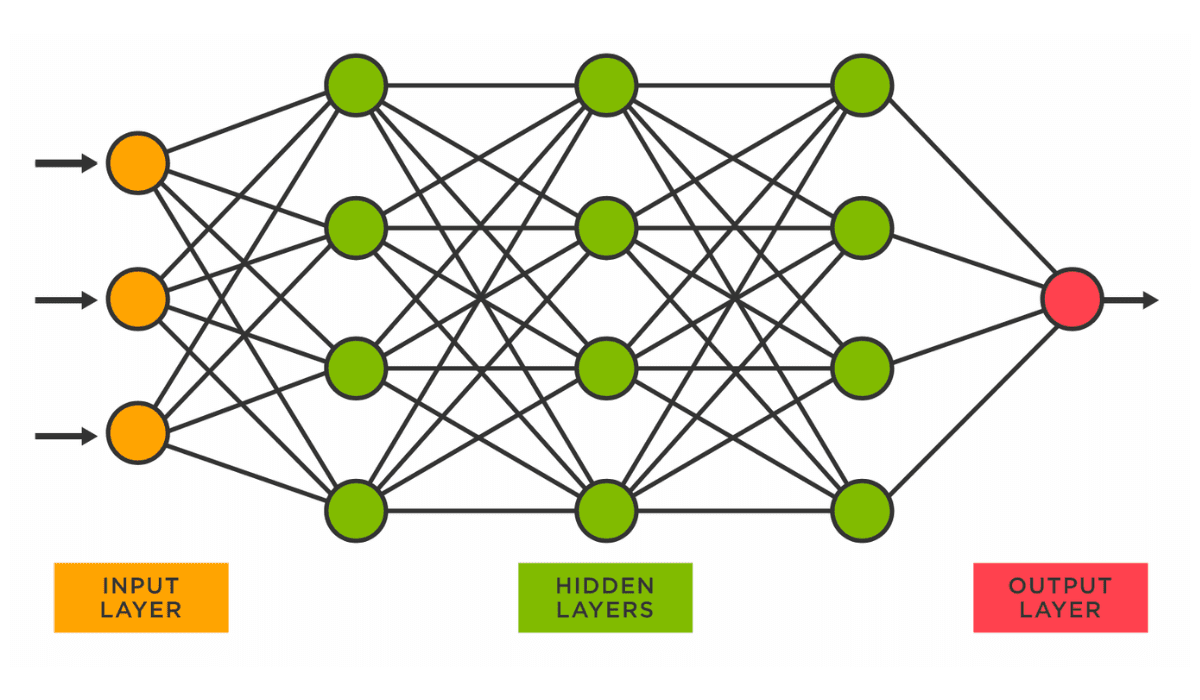

The architecture of an Artificial Neural Network (ANN) is inspired by the biological neural network of the human brain. The network consists of interconnected layers: input, hidden, and output. Each layer contains multiple neurons that are connected to every neuron in the adjacent layer.

As data moves through the network, each connection applies a weight, and each neuron applies an activation function like ReLU, Sigmoid, or Tanh. These functions introduce non-linearity, which is what lets the network model complex relationships instead of only straight-line ones.
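To make the forward pass concrete, here is a minimal sketch in plain Python. The weights, biases, and layer sizes are hypothetical values picked by hand for illustration, not trained parameters.

```python
import math

def relu(z):
    """ReLU: pass positive values through, clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: every input feeds every neuron."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs, plus the neuron's bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden -> 1 output.
hidden_weights = [[0.5, -1.0], [1.5, 2.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

x = [1.0, 2.0]
hidden = dense(x, hidden_weights, hidden_biases, relu)
prediction = dense(hidden, output_weights, output_biases, sigmoid)
print(hidden, prediction)
```

Swapping `relu` for `math.tanh` in the hidden layer is enough to try the third activation mentioned above.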

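During training, a technique called backpropagation is used to adjust these weights. Backpropagation computes how much each weight contributed to the error, measured by a predefined loss function, and gradient descent then nudges each weight in the direction that reduces that loss, aiming to make the network's predictions as accurate as possible.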
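The training loop can be sketched on the smallest possible network: a single sigmoid neuron. This is an illustrative example under my own assumptions (a squared-error loss, a toy logical-OR dataset, and a learning rate of 0.5), not a recipe for real projects.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, learning_rate=0.5, epochs=2000):
    """Train one sigmoid neuron with per-example gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    epoch_losses = []
    for _ in range(epochs):
        total_loss = 0.0
        for inputs, target in data:
            # Forward pass: weighted sum, then activation.
            z = sum(w * x for w, x in zip(weights, inputs)) + bias
            prediction = sigmoid(z)
            total_loss += 0.5 * (prediction - target) ** 2
            # Backward pass (chain rule): dL/dz = dL/dy * dy/dz.
            grad_z = (prediction - target) * prediction * (1.0 - prediction)
            # Gradient descent: move each parameter against its gradient.
            for i, x in enumerate(inputs):
                weights[i] -= learning_rate * grad_z * x
            bias -= learning_rate * grad_z
        epoch_losses.append(total_loss)
    return weights, bias, epoch_losses

# Toy dataset (logical OR), chosen only because it is tiny and learnable.
or_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
weights, bias, epoch_losses = train_neuron(or_data)

def predict(inputs):
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

print(epoch_losses[0], epoch_losses[-1])
```

In a multi-layer network the same chain rule is applied layer by layer, from the output back to the input, which is where the name backpropagation comes from.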

ANN Use Cases

Customer Churn Prediction

ANNs analyze multiple features like user behavior, purchase history, and interaction with customer service to predict the likelihood of a customer leaving the service.

ANNs can model complex relationships between these features, providing a nuanced view that's crucial for predicting customer churn accurately.

Sales Forecasting

ANNs take historical sales data and other variables like marketing spend, seasonality, and economic indicators to predict future sales.

Their ability to learn from errors and adjust for complex, non-linear relationships between variables makes them well-suited for this task.

Spam Filtering

ANNs analyze the content, context, and other features of emails to classify them as spam or not.

They can learn to recognize new spam patterns, adapting over time, which makes them effective at filtering out unwanted messages.

CNN (Convolutional Neural Networks):

Convolutional Neural Networks (CNNs) are designed specifically for tasks that involve spatial hierarchies, like image recognition. The network uses specialized layers called convolutional layers to apply a series of filters to an input image, producing a set of feature maps.

These feature maps are then passed through pooling layers that reduce their dimensionality, making the network computationally more efficient. Finally, one or more fully connected layers perform classification.
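Here is a small sketch of those two steps, a convolutional filter followed by max pooling, in plain Python. The 5x5 image and the 2x2 vertical-edge filter are made-up examples. In a real CNN the filter values are learned during training.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1).

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it is a cross-correlation.
    """
    k_h, k_w = len(kernel), len(kernel[0])
    out_h = len(image) - k_h + 1
    out_w = len(image[0]) - k_w + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_h) for dj in range(k_w))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    rows = range(0, len(feature_map) - size + 1, size)
    cols = range(0, len(feature_map[0]) - size + 1, size)
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in cols
        ]
        for i in rows
    ]

# Made-up image: dark on the left (0), bright on the right (1).
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Made-up filter that responds to a dark-to-bright vertical edge.
vertical_edge = [[-1, 1],
                 [-1, 1]]

feature_map = conv2d(image, vertical_edge)  # 4x4
pooled = max_pool(feature_map)              # 2x2
print(feature_map)
print(pooled)
```

The feature map lights up only in the column where the edge sits, and pooling shrinks it from 4x4 to 2x2 while keeping that signal.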

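Training uses backpropagation, much like in ANNs. The difference is in what is learned: the weights are the filter values, which are shared across every position of the input, so the spatial structure of the features is preserved.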

CNN Use Cases

Image Classification

CNNs apply a series of filters and pooling layers to automatically recognize hierarchical patterns in images.

Their ability to reduce dimensionality and focus on essential features makes them efficient and accurate for categorizing images.

Object Detection

CNNs not only classify but also localize objects within an image by drawing bounding boxes.

The architecture is designed to recognize spatial hierarchies, making it capable of identifying multiple objects within a single image.

Image Segmentation

CNNs can assign a label to each pixel in the image, classifying it as belonging to a particular object or background.

The network's granular, pixel-level understanding makes it ideal for tasks like medical imaging where precise segmentation is crucial.
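The per-pixel labeling step can be sketched as follows. I assume the network has already produced a list of class scores for every pixel. The scores and the class names below are invented for illustration.

```python
def label_pixels(scores, class_names):
    """Pick the highest-scoring class for every pixel.

    scores[i][j] is a list holding one score per class for pixel (i, j).
    """
    return [
        [class_names[max(range(len(pixel)), key=pixel.__getitem__)]
         for pixel in row]
        for row in scores
    ]

# Invented scores for a 2x2 image and two hypothetical classes.
class_names = ["background", "object"]
scores = [
    [[0.9, 0.1], [0.2, 0.8]],
    [[0.6, 0.4], [0.3, 0.7]],
]

mask = label_pixels(scores, class_names)
print(mask)
```

The result has the same height and width as the image, with one label per pixel.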

RNN (Recurrent Neural Networks):

Recurrent Neural Networks (RNNs) differ in that they have an internal loop, or recurrent architecture, that allows them to store information. This makes them ideal for handling sequential data, as each neuron can use its internal state to remember information from previous time steps in the sequence.

While processing the data, the network takes into account both the current and previous inputs, allowing it to develop a kind of short-term memory. However, RNNs can suffer from issues like vanishing and exploding gradients, which make learning long-range dependencies in data difficult.
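Both ideas, the short-term memory and the vanishing gradient, can be seen in a toy RNN with a single hidden value. This is a sketch under simplifying assumptions: scalar inputs and states, a tanh activation, and hand-picked weights (`w_x`, `w_h`) that are not trained.

```python
import math

def rnn_forward(inputs, w_x, w_h, bias, h0=0.0):
    """Run a one-unit RNN and return the hidden state after each step."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + bias)
        states.append(h)
    return states

def gradient_last_wrt_first(states, w_h):
    """d(h_T)/d(h_1): one factor w_h * (1 - h_t^2) per later time step."""
    grad = 1.0
    for h in states[1:]:
        grad *= w_h * (1.0 - h * h)
    return grad

# A single "event" at the first step, followed by 19 steps of silence.
sequence = [1.0] + [0.0] * 19
states = rnn_forward(sequence, w_x=1.0, w_h=0.5, bias=0.0)
grad = gradient_last_wrt_first(states, w_h=0.5)

print(states[:3])  # the first input still echoes in later states
print(grad)        # but its influence on the final state is tiny
```

With `w_h=0.5` every step multiplies the gradient by at most 0.5, so it shrinks toward zero over 20 steps. With a recurrent weight above 1 and states near zero, the same product grows instead, which is the exploding case.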

To address these issues, more advanced versions like Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) networks were developed.
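To give a feel for how gating helps, here is a sketch of one GRU step with a single hidden value. The parameter names and values are hypothetical, and I use the convention where the update gate weights the new candidate (some libraries define it the other way around). An LSTM follows the same idea with more gates and a separate cell state.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x, h, p):
    """One GRU step for a scalar input x and scalar hidden state h."""
    update = sigmoid(p["w_z"] * x + p["u_z"] * h + p["b_z"])  # how much to overwrite
    reset = sigmoid(p["w_r"] * x + p["u_r"] * h + p["b_r"])   # how much history to use
    candidate = math.tanh(p["w_h"] * x + p["u_h"] * (reset * h) + p["b_h"])
    # Blend the old state and the candidate according to the update gate.
    return (1.0 - update) * h + update * candidate

def run(params, h0, steps):
    """Feed `steps` zero inputs and return the final hidden state."""
    h = h0
    for _ in range(steps):
        h = gru_step(0.0, h, params)
    return h

# Hypothetical parameters; only the update-gate bias differs between runs.
base = {"w_z": 0.0, "u_z": 0.0, "w_r": 0.0, "u_r": 0.0, "b_r": 0.0,
        "w_h": 1.0, "u_h": 1.0, "b_h": 0.0}
closed_gate = dict(base, b_z=-10.0)  # update gate near 0: keep the memory
open_gate = dict(base, b_z=10.0)     # update gate near 1: overwrite it

kept = run(closed_gate, h0=0.8, steps=50)
overwritten = run(open_gate, h0=0.8, steps=50)
print(kept, overwritten)
```

With the gate closed, the starting value of 0.8 survives 50 steps almost unchanged. With the gate open, it fades away. A trained network learns when to do which.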

RNN Use Cases

Speech-to-text

RNNs take audio sequences as input and produce a text sequence as output, taking into account the temporal dependencies in spoken language.

The recurrent nature of RNNs allows them to consider the sequence of audio inputs, making them adept at understanding the context and nuances in human speech.

Machine Translation

RNNs convert a sequence from one language to another, considering the entire input sequence to produce an accurate output sequence.

The sequence-to-sequence learning capability maintains context between languages, making translations more accurate and contextually relevant.

Sentiment Analysis

RNNs analyze sequences of text to identify and extract opinions and feelings.

The memory feature in RNNs helps capture the emotional build-up in textual sequences, making them suitable for sentiment analysis tasks.

Final Thoughts

Looking ahead, we can expect Neural Networks to keep advancing and to find more specialized use cases. As algorithms evolve to handle more complex data, they will unlock new possibilities in healthcare, transportation, finance, and beyond.

From recognizing faces to predicting diseases, Neural Networks are reshaping the way we live and work. If you want to learn them, working on a real-life project is very effective.

In this article, we reviewed the fundamentals of Neural Networks, real-life examples like face detection and recognition, and more.

Thanks for reading!

Nate Rosidi is a data scientist and works in product strategy. He's also an adjunct professor teaching analytics, and is the founder of StrataScratch, a platform helping data scientists prepare for their interviews with real interview questions from top companies. Connect with him on Twitter: StrataScratch or LinkedIn.