Introduction to Natural Language Processing

An overview of Natural Language Processing (NLP) and its applications.

Image by Author

We’re learning a lot about ChatGPT and large language models (LLMs). Natural Language Processing has long been an interesting topic, and it is currently taking the AI and tech world by storm. Yes, LLMs like ChatGPT have helped its growth, but wouldn’t it be good to understand where it all comes from? So let’s go back to the basics - NLP.

NLP is a subfield of artificial intelligence concerned with the ability of a computer to detect and understand human language, through speech and text, just the way we humans can. NLP helps models process, understand and output human language.

The goal of NLP is to bridge the communication gap between humans and computers. NLP models are typically trained on tasks such as next word prediction, which allow them to learn contextual dependencies and then generate relevant outputs.

Fundamentals of Natural Language

The fundamentals of NLP revolve around being able to understand the different elements, characteristics and structure of human language. Think about the times you tried to learn a new language: you had to understand its different elements. Or, if you haven’t tried learning a new language, think of going to the gym and learning how to squat - you have to learn the elements of good form.

Natural language is the way we as humans communicate with one another. There are more than 7,100 languages in the world today. Wow!

There are some key fundamentals of natural language:

- Syntax - This refers to the rules and structures of the arrangement of words to create a sentence.

- Semantics - This refers to the meaning behind words, phrases and sentences in language.

- Morphology - This refers to the study of the actual structure of words and how they are formed from smaller units called morphemes.

- Phonology - This refers to the study of sounds in language, and how distinct sound units are combined to form words.

- Pragmatics - This is the study of how context, for example tone, shapes the interpretation of language.

- Discourse - This is how sentences connect to one another in context to form coherent texts and conversations.

- Language Acquisition - This is how humans learn and develop language skills, for example, grammar and vocabulary.

- Language Variation - This focuses on the 7,100+ languages that are spoken across different regions, social groups, and contexts.

- Ambiguity - This refers to words or sentences with multiple interpretations.

- Polysemy - This refers to words with multiple related meanings.

As you can see, natural language has a variety of key fundamental elements, all of which are used to steer language processing.

Key Elements of NLP

So now we know the fundamentals of natural language. How is it used in NLP? There is a wide range of techniques used to help computers understand, interpret, and generate human language. These are:

- Tokenization - This refers to the process of splitting raw text, such as paragraphs and sentences, into smaller units called tokens so that it can be easily handled by NLP models.

- Part-of-Speech Tagging - This is a technique that involves assigning grammatical categories, for example, nouns, verbs, and adjectives, to each token in a sentence.

- Named Entity Recognition (NER) - This is another technique that identifies and classifies named entities, for example, people's names, organizations, places, and dates in text.

- Sentiment Analysis - This is a technique that analyzes the tone expressed in a piece of text, for example, whether it's positive, negative, or neutral.

- Text Classification - This is a technique that categorizes text from different types of documents into predefined classes or categories based on its content.

- Semantic Analysis - This is a technique that analyzes words and sentences to get a better understanding of what is being said, using context and the relationships between words.

- Word Embeddings - This is when words are represented as vectors to help computers understand and capture the semantic relationship between words.

- Text Generation - This is when a computer creates human-like text based on patterns learned from existing text data.

- Machine Translation - This is the process of translating text from one language to another.

- Language Modeling - This is the building of probabilistic models that can predict the next word in a sequence. It draws on many of the tools and techniques above.
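To make two of the items above a little more concrete, here is a minimal sketch of tokenization and next word prediction in plain Python. It is a toy illustration, not how production models are built: the regex tokenizer, the function names and the three-sentence corpus are all assumptions made for this example, and real language models rely on far larger data and neural networks rather than simple bigram counts.

```python
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Split raw text into lowercase word tokens (a deliberately simple rule)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def train_bigram_model(sentences):
    """Count how often each word follows another across the training sentences."""
    following = defaultdict(Counter)
    for sentence in sentences:
        tokens = tokenize(sentence)
        for current_word, next_word in zip(tokens, tokens[1:]):
            following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this illustration.
corpus = [
    "I like natural language processing",
    "I like natural language models",
    "I like tea",
]
model = train_bigram_model(corpus)

print(tokenize("NLP helps computers understand text!"))
# ['nlp', 'helps', 'computers', 'understand', 'text']
print(predict_next(model, "natural"))  # language
```

The "model" here is just a table of counts, but the idea is the same one behind next word prediction: look at what tends to follow a word in the training text and pick the most likely continuation.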

If you’ve worked with data before, you know that once you collect your data, you will need to standardize it. Standardizing data is when you convert data into a format that computers can easily understand and use.

The same applies to NLP. Text normalization is the process of cleaning and standardizing text data into a consistent format. You will want a format with little, if any, variation and noise. This makes it easier for NLP models to analyze and process the language more effectively and accurately.
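As a rough sketch of what text normalization can look like, the snippet below lowercases the text, replaces punctuation with spaces and collapses extra whitespace. The function name `normalize_text` and the exact set of steps are assumptions for this example; real pipelines choose their steps depending on the task (for instance, this simple rule also splits contractions such as "don't").

```python
import re
import unicodedata


def normalize_text(text):
    """Apply a few common cleaning steps to get text into a consistent format."""
    text = unicodedata.normalize("NFKC", text)  # unify equivalent Unicode forms
    text = text.lower()                          # remove case variation
    text = re.sub(r"[^\w\s]", " ", text)         # replace punctuation with spaces
    text = re.sub(r"\s+", " ", text).strip()     # collapse repeated whitespace
    return text


print(normalize_text("  Hello,   WORLD!!  NLP is   fun. "))
# hello world nlp is fun
```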

How does NLP work?

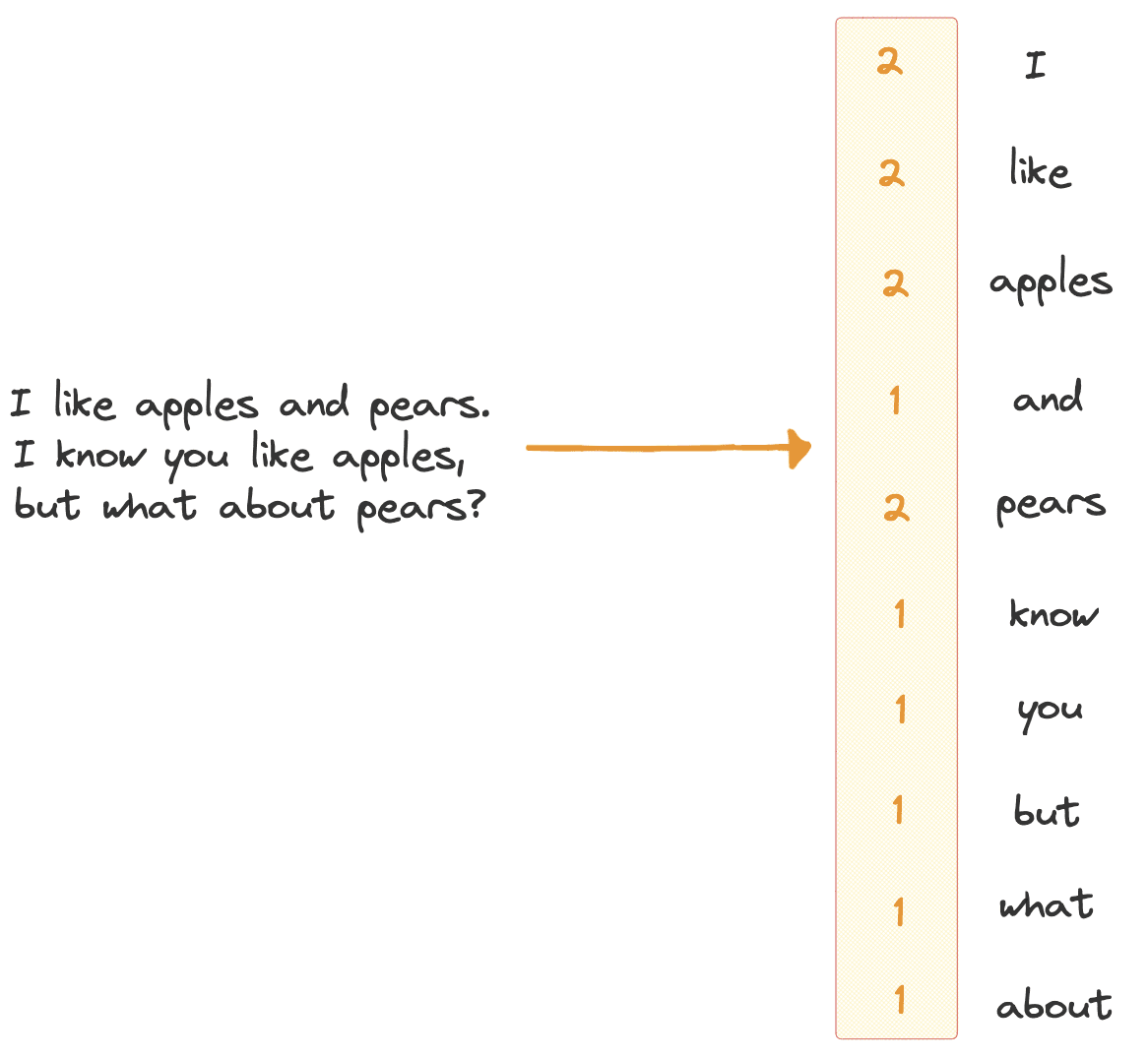

Before you can ingest anything into your NLP model, you need to understand computers and understand that they only understand numbers. Therefore, when you have text data, you will need to use text vectorization to transform the text into a format that the machine learning model can understand.

Have a look at the image below:

Image by Author
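One of the simplest ways to vectorize text is a bag-of-words count, sketched below in plain Python. This is only one possible approach, chosen here for illustration; the function names and the two example sentences are made up for this sketch, and word embeddings or other techniques are often used instead.

```python
def build_vocabulary(documents):
    """Map every distinct word in the documents to a fixed position."""
    vocab = sorted({word for doc in documents for word in doc.lower().split()})
    return {word: index for index, word in enumerate(vocab)}


def vectorize(document, vocabulary):
    """Turn a document into a list of word counts, one slot per vocabulary word."""
    vector = [0] * len(vocabulary)
    for word in document.lower().split():
        index = vocabulary.get(word)
        if index is not None:  # words outside the vocabulary are ignored
            vector[index] += 1
    return vector


# Hypothetical example sentences.
documents = ["the cat sat", "the dog sat on the mat"]
vocabulary = build_vocabulary(documents)

print(vocabulary)
# {'cat': 0, 'dog': 1, 'mat': 2, 'on': 3, 'sat': 4, 'the': 5}
for doc in documents:
    print(vectorize(doc, vocabulary))
# [1, 0, 0, 0, 1, 1]
# [0, 1, 1, 1, 1, 2]
```

Each sentence is now a list of numbers of the same length, which is the kind of input a machine learning model can work with.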

Once the text data is vectorized into a format the machine can understand, the NLP machine learning algorithm is fed training data. This training data helps the NLP model understand the data, learn patterns, and identify relationships in the input data.

Statistical analysis and other methods are also used to build the model's knowledge base, which contains characteristics of the text, different features, and more. It’s basically the part of the model's "brain" that has learnt and stored new information.

Generally, the more data fed into these NLP models during the training phase, the more accurate the model tends to be. Once the model has gone through the training phase, it is put to the test in the testing phase. During the testing phase, you will see how accurately the model can predict outcomes using unseen data. Unseen data is new to the model, so it has to rely on its knowledge base to make predictions.

As this is a back-to-basics overview of NLP, I will keep to exactly that and not lose you with heavy terminology and complex topics. If you would like to know more, have a read of:
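The training and testing phases can be sketched with a toy sentiment classifier. Everything below is invented for illustration: the word-counting "model", the function names and the handful of labelled sentences are assumptions, and the score on two test sentences says nothing about real-world accuracy. The point is only to show the split between data the model learns from and unseen data it is evaluated on.

```python
from collections import Counter


def train(examples):
    """Training phase: count how often each word appears under each label."""
    word_counts = {}
    for text, label in examples:
        word_counts.setdefault(label, Counter()).update(text.lower().split())
    return word_counts


def predict(word_counts, text):
    """Pick the label whose training words overlap most with the text."""
    scores = {
        label: sum(counts[word] for word in text.lower().split())
        for label, counts in word_counts.items()
    }
    return max(scores, key=scores.get)


def accuracy(word_counts, examples):
    """Testing phase: fraction of examples whose label is predicted correctly."""
    correct = sum(predict(word_counts, text) == label for text, label in examples)
    return correct / len(examples)


# Hypothetical labelled data; the test sentences are never seen during training.
training_data = [
    ("great movie loved it", "positive"),
    ("wonderful acting great story", "positive"),
    ("terrible movie hated it", "negative"),
    ("boring story awful acting", "negative"),
]
test_data = [
    ("loved the wonderful story", "positive"),
    ("awful and boring", "negative"),
]

model = train(training_data)
print(accuracy(model, test_data))  # 1.0
```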

- The Ultimate Guide To Different Word Embedding Techniques In NLP

- The ABCs of NLP, From A to Z

- The Best Way to Learn Practical NLP?

NLP Applications

Now you have a better understanding of the fundamentals of natural language, the key elements of NLP and roughly how it works. Below is a list of NLP applications in today's society.

- Sentiment Analysis

- Text Classification

- Language Translation

- Chatbots and Virtual Assistants

- Speech Recognition

- Information Retrieval

- Named Entity Recognition (NER)

- Topic Modeling

- Text Summarization

- Language Generation

- Spam Detection

- Question Answering

- Language Modeling

- Fake News Detection

- Healthcare and Medical NLP

- Financial Analysis

- Legal Document Analysis

- Emotion Analysis

Wrapping it up

There have been a lot of recent developments in NLP, as you may already know, with chatbots such as ChatGPT and large language models coming out left, right and centre. Learning about NLP will be very beneficial for anybody, especially for those entering the world of data science and machine learning.

If you would like to learn more about NLP, have a look at: Must Read NLP Papers from the Last 12 Months

Nisha Arya is a Data Scientist, Freelance Technical Writer and Community Manager at KDnuggets. She is particularly interested in providing Data Science career advice or tutorials and theory-based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence can benefit the longevity of human life. A keen learner, she seeks to broaden her tech knowledge and writing skills, whilst helping guide others.