The Best Way to Learn Practical NLP?

Hugging Face has just released a course on using its libraries and ecosystem for practical NLP, and it appears to be very comprehensive. Have a look for yourself.

I probably don't need to tell you that Hugging Face, and in particular its Transformers library, has become a major power player in the NLP space. Transformers is full of SOTA NLP models that can be used out of the box as-is or fine-tuned for specific uses and high performance. Hugging Face's NLP tools don't stop there, however: its ecosystem includes numerous additional libraries, such as Datasets, Tokenizers, and Accelerate, as well as the 🤗 Model Hub.

However, with the massive and relentless advancements in natural language processing recently, keeping up with research breakthroughs and SOTA practices can be fraught with challenges. Hugging Face truly does provide a cohesive set of tools for practical NLP, but how does one keep up both with the most recent best practices for building NLP solutions and with how to implement those solutions using Hugging Face libraries?

Well, Hugging Face has a solution to this as well. Welcome to the Hugging Face course.

This course, taught by Matthew Carrigan, Lysandre Debut, and Sylvain Gugger, promises to cover a lot of ground:

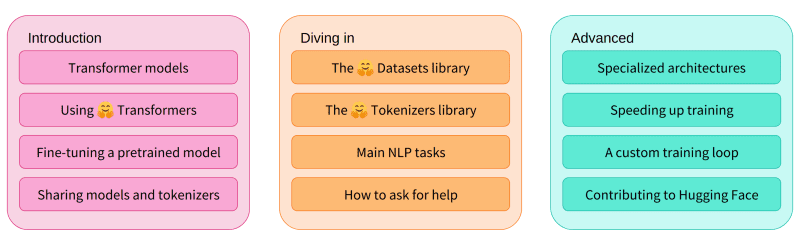

- Introduction: an introduction to the 🤗 Transformers library, covering how Transformer models work, using models from the 🤗 Hub, fine-tuning models on datasets, and sharing your results.

- Diving in: the basics of 🤗 Datasets and 🤗 Tokenizers, so that students can chart their own approach to common NLP problems.

- Advanced: more complex topics, including specialized architectures for concerns such as memory efficiency and long sequences; exposure to more custom solutions; confidently solving complex NLP problems; and contributing to 🤗 Transformers.

A brief overview of the Hugging Face course (source)

What do you need to jump into this course? Prior PyTorch or TensorFlow knowledge is not necessary, though it would certainly be helpful; the course creators note two specific prerequisites:

- A good knowledge of Python

- An understanding of introductory deep learning, perhaps via a course such as those developed by fast.ai or deeplearning.ai

I have not gone through much of the course yet; however, I have been hoping for (and expecting) something like this from Hugging Face for some time, so I will definitely be working through this material as soon as I can.

From what I have looked through, the course looks very well put together, with code, videos, notes, and more. I like the progression as well: students start using the Transformers library right away via the `pipeline()` function, then move further down the layers from there. There is also setup help to get students up and running quickly with TensorFlow or PyTorch, either in a local environment or on Colab, with all options fully supported throughout.

Output of the Transformer model is sent directly to the model head to be processed (source)
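The caption above describes the split between a Transformer body and a task-specific head. As a rough illustration only, here is a framework-free Python sketch of a sequence-classification head, assuming the body has already produced one hidden vector per token. The function name `classification_head`, the use of the first token's vector as the sequence summary, and the toy numbers are illustrative assumptions, not how Hugging Face Transformers actually implements its heads (those are built from PyTorch or TensorFlow layers).

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def classification_head(hidden_states: Matrix, weights: Matrix, bias: Vector) -> Vector:
    """Hypothetical toy head: turn per-token hidden states into class probabilities.

    hidden_states: one hidden vector per token (seq_len x hidden_size),
                   assumed to come from an already-run Transformer body.
    weights:       one row per label (num_labels x hidden_size).
    bias:          one entry per label.
    """
    # Assumption: summarize the sequence with the first token's vector
    # (real heads may pool differently or stack extra layers).
    summary = hidden_states[0]

    # Linear layer: one raw score (logit) per label.
    logits = [
        sum(w * h for w, h in zip(row, summary)) + b
        for row, b in zip(weights, bias)
    ]

    # Softmax, shifted by the max for numerical stability,
    # turns the logits into probabilities that sum to 1.
    largest = max(logits)
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    # Toy example: 2 tokens, hidden size 3, 2 labels (all numbers made up).
    hidden = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]
    weights = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
    bias = [0.0, 0.0]
    print(classification_head(hidden, weights, bias))
```

In this sketch, changing the task only means changing `weights` and `bias`; the hidden states from the body are reused untouched, which is the intuition behind attaching different heads to the same pretrained model.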

There is a lot going on in the vast field of NLP, and a lot to keep up with. Hugging Face first set out to centralize the growing number of Transformer models and make them widely available, and these early efforts have made its subsequent ecosystem a leader in the field. Why not see how you can master these tools yourself? Doing so should help you stay relevant in the ever-evolving field of NLP.
