How to Create and Deploy a Simple Sentiment Analysis App via API

In this article we will create a simple sentiment analysis app using the HuggingFace Transformers library, and deploy it using FastAPI.

Image source: Reputation X

Let's say you've built an NLP model for some specific task, whether it be text classification, question answering, translation, or what have you. You've tested it out locally and it performs well. You've had others test it out as well, and it continues to perform well. Now you want to roll it out to a larger audience, be that audience a team of developers you work with, a specific group of end users, or even the general public. You have decided that you want to do so using a REST API, as you find this to be your best option. What now?

FastAPI might be able to help. FastAPI is a web framework for building APIs with Python. We will use it in this article to build a REST API that serves an NLP model, accepting queries via GET requests and returning responses to those queries.

For this example, we will skip the building of our own model, and instead leverage the Pipeline class of the HuggingFace Transformers library. Transformers is full of SOTA NLP models which can be used out of the box as-is, as well as fine-tuned for specific uses and high performance. The library's pipelines can be summed up as:

The pipelines are a great and easy way to use models for inference. These pipelines are objects that abstract most of the complex code from the library, offering a simple API dedicated to several tasks, including Named Entity Recognition, Masked Language Modeling, Sentiment Analysis, Feature Extraction and Question Answering.

Using the Transformers library, FastAPI, and astonishingly little code, we are going to create and deploy a very simple sentiment analysis app. We will also see how extending this same approach to a more complex app would be quite straightforward.

Getting Started

As outlined above, we will be using Transformers and FastAPI to build this app, which means you will need these installed on your system. You will also require installation of Uvicorn, an ASGI server that FastAPI relies on as part of its backend. I easily installed them all on my *buntu system using pip:

pip install transformers
pip install fastapi
pip install uvicorn

That's it. Moving on...

Analyzing Sentiment

Since we will be using Transformers for our NLP pipeline, let's first see how we would get this working standalone. Doing so is remarkably uncomplicated, and requires very few basic parameters be passed to the pipeline object in order to get started. Specifically, what we will need to define are a task — what it is we want to do — and a model — what it is we will use to perform our task. And that's really all there is to it. We can optionally provide additional parameters or fine-tune the pipeline to our specific task and data, but for our purposes using a model out of the box should work just fine.

For us, the task is sentiment-analysis and the model is nlptown/bert-base-multilingual-uncased-sentiment. This is a BERT model trained for multilingual sentiment analysis, and which has been contributed to the HuggingFace model repository by NLP Town. Note that the first time you run this script the sizable model will be downloaded to your system, so ensure that you have the available free space to do so.

Here is the code to set up the standalone sentiment analysis app:

from transformers import pipeline

text = 'i love this movie!!! :)'

# Instantiate a pipeline object with our task and model passed as parameters
nlp = pipeline(task='sentiment-analysis',
               model='nlptown/bert-base-multilingual-uncased-sentiment')

# Pass the text to our pipeline and print the results
print(f'{nlp(text)}')

What is returned by the call to the pipeline object is a predicted label and its corresponding probability. In our case, the combination of task and model that we are using results in labels ranging from '1 star' (most negative) to '5 stars' (most positive), along with the prediction probability. Let's give our script a run to see how it does.

python test_model.py

>>> [{'label': '5 stars', 'score': 0.923753023147583}]

And that's all there is to getting a basic, task-specific NLP model up and running out of the box using the Transformers library and its Pipeline class.
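Since the label comes back as a string, it can be handy to turn it into a number before doing anything else with it. The following is a minimal sketch, not part of the original script: the helper name stars_from_label is hypothetical, and it assumes the labels always look like '1 star' through '5 stars'. The sample result is copied from the run above, so no model is loaded.

```python
import re


def stars_from_label(label: str) -> int:
    """Extract the star count from a label such as '1 star' or '5 stars'.

    Hypothetical helper; assumes labels follow the '<n> star(s)' pattern.
    """
    match = re.fullmatch(r'([1-5]) stars?', label)
    if match is None:
        raise ValueError(f'unexpected label: {label!r}')
    return int(match.group(1))


# Sample pipeline output copied from the run above (no model is loaded here)
result = [{'label': '5 stars', 'score': 0.923753023147583}]

rating = stars_from_label(result[0]['label'])
print(rating, result[0]['score'])
```

Raising an error on an unrecognized label is a design choice: it surfaces a change of model or label format immediately instead of silently producing a wrong rating.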

If you want to test this out a bit more before we move on to deploying it via a REST API, give this modified script a shot, which allows us to pass text from the command line and responds with a more nicely formatted reply:

import sys

from transformers import pipeline

if len(sys.argv) != 2:
    print('Usage: python model_test.py <input_string>')
    sys.exit(1)

text = sys.argv[1]

# Instantiate a pipeline object with our task and model passed as parameters
nlp = pipeline(task='sentiment-analysis',
               model='nlptown/bert-base-multilingual-uncased-sentiment')

# Get and process result
result = nlp(text)

# Map the model's star label to a friendlier sentiment description
sent = ''
if result[0]['label'] == '1 star':
    sent = 'very negative'
elif result[0]['label'] == '2 stars':
    sent = 'negative'
elif result[0]['label'] == '3 stars':
    sent = 'neutral'
elif result[0]['label'] == '4 stars':
    sent = 'positive'
else:
    sent = 'very positive'

prob = result[0]['score']

# Format and print results
print(f"{{'sentiment': '{sent}', 'probability': '{prob}'}}")

Let's run this a few times to see how it performs:

python model_test.py 'the sky is blue'

>>> {'sentiment': 'neutral', 'probability': '0.2726307213306427'}

python model_test.py 'i really hate this restaurant!'

>>> {'sentiment': 'very negative', 'probability': '0.9228281378746033'}

python model_test.py 'i love this movie!!! :)'

>>> {'sentiment': 'very positive', 'probability': '0.923753023147583'}

This is better functionality, since we don't have to hard code the text we want analyzed into our program, and we have also made the results a bit more user-friendly. Let's extend this more useful version and deploy it as a REST API.
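The label-to-sentiment mapping in the script can also be written as a lookup table, which keeps the labels in one place and makes an unexpected label an explicit error rather than falling through to the final branch. This is only a sketch of one alternative: the names SENTIMENT_BY_LABEL and format_result are hypothetical, and the label strings are assumed to be '1 star' and '2 stars' through '5 stars' (worth verifying against the model's configuration).

```python
# Assumed label strings for the nlptown model; verify against the model card
SENTIMENT_BY_LABEL = {
    '1 star': 'very negative',
    '2 stars': 'negative',
    '3 stars': 'neutral',
    '4 stars': 'positive',
    '5 stars': 'very positive',
}


def format_result(result):
    """Turn the pipeline's list-of-dicts output into a friendlier dict."""
    top = result[0]
    label = top['label']
    if label not in SENTIMENT_BY_LABEL:
        # Fail loudly instead of guessing a sentiment for an unknown label
        raise ValueError(f'unexpected label: {label!r}')
    return {'sentiment': SENTIMENT_BY_LABEL[label], 'probability': top['score']}


# Sample pipeline output copied from an earlier run (no model is loaded here)
sample = [{'label': '5 stars', 'score': 0.923753023147583}]
print(format_result(sample))
```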

Creating The API

Time to deploy this ridiculously simple sentiment analysis app via a REST API built using FastAPI. If you want to learn more about FastAPI and how to format your code using the framework, check out its documentation. What you absolutely need to know here is that we will create an instance of FastAPI (app), define GET requests, attach them to URLs, and assign responses for these requests via functions.

Below, we will define two such GET requests: one for the root URL ('/'), which displays a welcome message, and another ('/sentiment_analysis/') which accepts strings to perform sentiment analysis on. The code for both is quite simple; much of what is contained in the analyze_sentiment() function, which the '/sentiment_analysis/' request calls, should look familiar from our standalone app.

from transformers import pipeline
from fastapi import FastAPI

# Load the model once at startup so every request reuses the same pipeline
nlp = pipeline(task='sentiment-analysis',
               model='nlptown/bert-base-multilingual-uncased-sentiment')

app = FastAPI()


@app.get('/')
def get_root():
    return {'message': 'This is the sentiment analysis app'}


@app.get('/sentiment_analysis/')
async def query_sentiment_analysis(text: str):
    return analyze_sentiment(text)


def analyze_sentiment(text):
    """Get and process result"""
    result = nlp(text)

    # Map the model's star label to a friendlier sentiment description
    sent = ''
    if result[0]['label'] == '1 star':
        sent = 'very negative'
    elif result[0]['label'] == '2 stars':
        sent = 'negative'
    elif result[0]['label'] == '3 stars':
        sent = 'neutral'
    elif result[0]['label'] == '4 stars':
        sent = 'positive'
    else:
        sent = 'very positive'

    prob = result[0]['score']

    # Format and return results
    return {'sentiment': sent, 'probability': prob}

And now we have a REST API capable of accepting GET requests and performing sentiment analysis. Before we try it out, we have to run the Uvicorn web server, which will provide the necessary back end functionality. To do so, assuming you saved the above code in a file called main.py and left the name of the FastAPI instance as app, run this:

uvicorn main:app --reload

You should then see something like this:

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
INFO: Started reloader process [18271] using statreload
INFO: Started server process [18273]
INFO: Waiting for application startup.
INFO: Application startup complete.

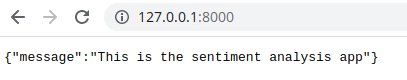

Open your browser as indicated to http://127.0.0.1:8000 and you should see:

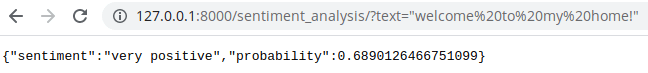

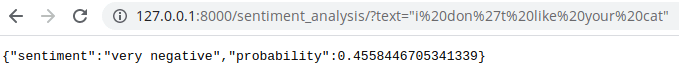

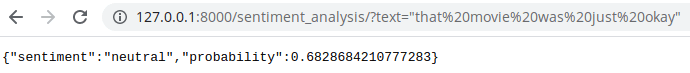

If you see the welcome message, congrats, it works! We can try using the browser address bar to make some requests, pasting what is in the quotation marks below after the request URL and query string (http://127.0.0.1:8000/sentiment_analysis/?text=):

"welcome to my home!"

"i don't like your cat"

"that movie was just okay"

Great, we get results! Now what if we want to treat this like an API and access it accordingly? In Python, we could use the requests library. Make sure it's installed using:

pip install requests

Then give a script like this a try:

import requests

query = {'text':'i love the fettucine alfredo and would definitely recommend this restaurant to my friends!'}

response = requests.get('http://127.0.0.1:8000/sentiment_analysis/', params=query)

print(response.json())

After saving, execute the script and you should get a result like this:

python rest_request.py

>>> {'sentiment': 'very positive', 'probability': 0.8293750882148743}

This worked as well. Excellent!

You can read more about the requests library in its documentation.
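Both the browser requests and the script above boil down to requesting a URL that carries a text query parameter, and characters such as spaces and apostrophes have to be percent-encoded to travel safely in that URL. As a minimal sketch using only the standard library, here is one way such a URL can be built by hand; the helper name build_url is hypothetical, and the base URL assumes the server is running locally on the default port as shown earlier.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Assumes the Uvicorn server from this article is running locally
BASE_URL = 'http://127.0.0.1:8000/sentiment_analysis/'


def build_url(text: str) -> str:
    """Return the request URL with `text` safely encoded as a query parameter."""
    query = urlencode({'text': text})
    return BASE_URL + '?' + query


url = build_url("i don't like your cat")
print(url)

# Decoding the query string recovers the original text unchanged
decoded = parse_qs(urlsplit(url).query)['text'][0]
print(decoded)
```

Building the query string with an encoder rather than string concatenation avoids broken requests when the text contains characters like &, # or ?.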

Conclusions

There is obviously much more we could have done here. Preprocessing the data would have been useful. Performing error checking would have been advisable. As confirmation of this, try using some crazy characters or not wrapping a lengthy request with punctuation in quotes and see what happens.
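To give an idea of what basic error checking might look like, here is a small sketch of an input-cleaning step that could run before the text reaches the model. It is an illustration under stated assumptions rather than part of the app above: the function name clean_text is hypothetical, and the 1000-character limit is an arbitrary value chosen for the example.

```python
MAX_CHARS = 1000  # arbitrary limit chosen for illustration


def clean_text(text, max_chars: int = MAX_CHARS) -> str:
    """Normalize whitespace and reject input that is unusable for analysis."""
    if not isinstance(text, str):
        raise ValueError('text must be a string')
    # Collapse runs of whitespace (including newlines and tabs) to single spaces
    cleaned = ' '.join(text.split())
    if not cleaned:
        raise ValueError('text must not be empty')
    if len(cleaned) > max_chars:
        raise ValueError(f'text must be at most {max_chars} characters')
    return cleaned


print(clean_text('  i love   this movie!!! :)\n'))
```

In the API, a function like this could be called at the top of analyze_sentiment(), with the ValueError translated into an error response for the client.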

Next time I promise we will expand upon what we have done here today and will make something more robust and far more interesting. I'm already working on it.
