Unlocking GPT-4 Summarization with Chain of Density Prompting

Unlock the power of GPT-4 summarization with Chain of Density (CoD), a technique that attempts to balance information density for high-quality summaries.

Image created by Author with Midjourney

Key Takeaways

- Chain of Density (CoD) is a novel prompt engineering technique designed for optimizing summarization tasks in Large Language Models like GPT-4

- The technique deals with controlling the information density in the generated summary, providing a balanced output that is neither too sparse nor too dense

- CoD has practical implications for data science, especially in tasks that require high-quality, contextually appropriate summarizations

Selecting the "right" amount of information to include in a summary is a difficult task.

Introduction

Prompt engineering is the fuel that powers advancements in the efficacy of generative AI. While existing prompting stalwarts such as Chain-of-Thought and Skeleton-of-Thought focus on structured and efficient output, a recent technique called Chain of Density (CoD) aims to optimize the quality of text summarizations. This technique addresses the challenge of selecting the "right" amount of information for a summary, ensuring it is neither too sparse nor too dense.

Understanding Chain of Density

Chain of Density is engineered to improve the summarization capabilities of Large Language Models like GPT-4. It focuses on controlling the density of information in the generated summary. A well-balanced summary is often the key to understanding complex content, and CoD aims to strike that balance. It does so with a prompt that first asks for a deliberately sparse summary and then repeatedly rewrites it at the same length, each time folding in a few informative entities from the article that the previous version left out.

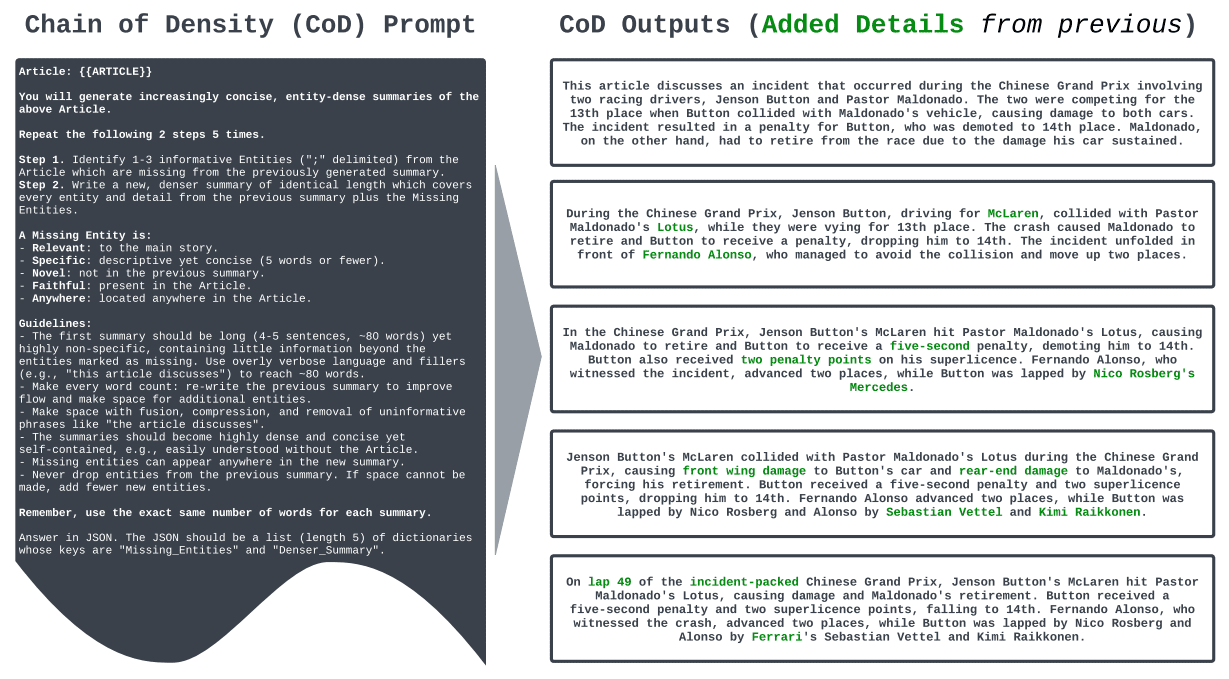

Figure 1: The Chain of Density process using an example (From Sparse to Dense: GPT-4 Summarization with Chain of Density Prompting)
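To make "information density" more concrete, here is a minimal sketch of one crude proxy: the number of known entities that appear in a summary, divided by its word count. The function name `entity_density` and the caller-supplied entity list are assumptions made for illustration; a real evaluation would more likely use a named-entity recognizer than simple substring matching.

```python
import re


def entity_density(summary: str, entities: list[str]) -> float:
    """Return (listed entities found in the summary) / (word tokens in the summary).

    `entities` is a caller-supplied list; this sketch does not detect
    entities on its own.
    """
    tokens = re.findall(r"\w+", summary)
    if not tokens:
        return 0.0
    lowered = summary.lower()
    # Case-insensitive substring match: crude, but enough to compare drafts.
    found = sum(1 for entity in entities if entity.lower() in lowered)
    return found / len(tokens)
```

Comparing this value across successive drafts of the same length gives a rough indication of whether each rewrite is actually becoming denser.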

Implementing Chain of Density

Implementing CoD involves the use of a series of chained prompts that guide the model in generating a summary. These prompts are designed to control the model's focus, directing it toward essential information and away from irrelevant details. For example, you might start with a general prompt for summarization and then follow up with specific prompts to adjust the density of the generated text.

Steps of the Chain of Density Prompting Process

- Identify the Text for Summarization: Choose the document, article, or any piece of text that you wish to summarize.

- Craft the Initial Prompt: Create an initial summarization prompt tailored to the selected text. The aim here is to guide a Large Language Model (LLM) such as GPT-4 towards generating a basic summary.

- Analyze the Initial Summary: Review the summary generated from the initial prompt. Identify if the summary is too sparse (missing key details) or too dense (containing unnecessary details).

- Design Chained Prompts: Based on the initial summary's density, construct additional prompts to adjust the level of detail in the summary. These are the "chained prompts" and are central to the Chain of Density technique.

- Execute Chained Prompts: Feed these chained prompts back to the LLM. These prompts are designed to either increase the density by adding essential details or decrease it by removing non-essential information.

- Review the Adjusted Summary: Examine the new summary generated by executing the chained prompts. Ensure that it captures all essential points while avoiding unnecessary details.

- Iterate if Necessary: If the summary still doesn't meet the desired criteria for information density, return to the "Design Chained Prompts" step and adjust the chained prompts accordingly.

- Finalize the Summary: Once the summary meets the desired level of information density, it is considered finalized and ready for use.
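The steps above can be sketched as a small loop. This is an illustration under stated assumptions, not a definitive implementation: `llm` is a hypothetical callable that sends a prompt string to whatever model you use and returns its text reply, `assess` is a hypothetical callable (a human judgment or a heuristic) that labels a summary `"sparse"`, `"dense"`, or `"ok"`, and the follow-up prompt wording is made up for the example.

```python
from typing import Callable


def refine_summary(
    text: str,
    llm: Callable[[str], str],     # hypothetical: prompt in, model reply out
    assess: Callable[[str], str],  # hypothetical: returns "sparse", "dense", or "ok"
    max_rounds: int = 3,
) -> str:
    """Draft a summary, then chain follow-up prompts until the density is acceptable."""
    # Initial prompt: ask for a basic summary.
    summary = llm(f"Summarize the following text:\n\n{text}")

    for _ in range(max_rounds):
        verdict = assess(summary)
        if verdict == "ok":
            break  # density is acceptable, so the summary is final
        if verdict == "sparse":
            follow_up = (
                "Rewrite the summary at the same length, adding the most "
                "important details it is missing."
            )
        else:
            follow_up = "Rewrite the summary, removing details that are not essential."
        # Chained prompt: feed the text and the current summary back to the model.
        summary = llm(f"{follow_up}\n\nText:\n{text}\n\nCurrent summary:\n{summary}")

    return summary
```

Capping the loop with `max_rounds` keeps the process from iterating indefinitely if the assessment never returns `"ok"`.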

Chain of Density Prompt

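The following CoD prompt is taken directly from the paper. Note that, as written, it runs all five densification rounds inside a single prompt and asks for the results as one JSON list, rather than issuing separate follow-up prompts.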

Article: {{ ARTICLE }}

You will generate increasingly concise, entity-dense summaries of the above Article.

Repeat the following 2 steps 5 times.

Step 1. Identify 1-3 informative Entities ("; " delimited) from the Article which are missing from the previously generated summary.

Step 2. Write a new, denser summary of identical length which covers every entity and detail from the previous summary plus the Missing Entities.

A Missing Entity is:

- Relevant: to the main story.

- Specific: descriptive yet concise (5 words or fewer).

- Novel: not in the previous summary.

- Faithful: present in the Article.

- Anywhere: located anywhere in the Article.

Guidelines:

- The first summary should be long (4-5 sentences, ~80 words) yet highly non-specific, containing little information beyond the entities marked as missing. Use overly verbose language and fillers (e.g., "this article discusses") to reach ~80 words.

- Make every word count: rewrite the previous summary to improve flow and make space for additional entities.

- Make space with fusion, compression, and removal of uninformative phrases like "the article discusses".

- The summaries should become highly dense and concise yet self-contained, e.g., easily understood without the Article.

- Missing entities can appear anywhere in the new summary.

- Never drop entities from the previous summary. If space cannot be made, add fewer new entities.

Remember, use the exact same number of words for each summary.

Answer in JSON. The JSON should be a list (length 5) of dictionaries whose keys are "Missing_Entities" and "Denser_Summary".
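As a rough sketch of how this prompt might be used from code, the helpers below fill in the `{{ ARTICLE }}` placeholder and check that a reply has the shape the prompt asks for (a list of 5 dictionaries with the keys `Missing_Entities` and `Denser_Summary`). The function names are hypothetical, and the model call itself is deliberately left out because it depends on the client library you use.

```python
import json

REQUIRED_KEYS = {"Missing_Entities", "Denser_Summary"}


def build_cod_prompt(template: str, article: str) -> str:
    """Insert the article text into the prompt template's placeholder."""
    return template.replace("{{ ARTICLE }}", article)


def parse_cod_response(raw: str, expected_steps: int = 5) -> list[dict]:
    """Parse the model's JSON reply and verify it matches the requested shape."""
    steps = json.loads(raw)
    if not isinstance(steps, list) or len(steps) != expected_steps:
        raise ValueError(f"expected a JSON list of length {expected_steps}")
    for index, step in enumerate(steps):
        if not isinstance(step, dict):
            raise ValueError(f"step {index} is not a JSON object")
        missing = REQUIRED_KEYS - set(step)
        if missing:
            raise ValueError(f"step {index} is missing keys: {sorted(missing)}")
    return steps


def summary_word_counts(steps: list[dict]) -> list[int]:
    """Word count of each summary, to check the 'same number of words' instruction."""
    return [len(step["Denser_Summary"].split()) for step in steps]
```

The last element of the parsed list holds the densest summary; the word counts give a quick check on whether the model kept the length constant across rounds.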

Chain of Density is not a one-size-fits-all solution. It requires careful crafting of chained prompts to suit the specific needs of a task. However, when implemented correctly, it can significantly improve the quality and relevance of AI-generated summaries.

Conclusion

Chain of Density offers a new avenue in prompt engineering, specifically geared towards improving summarization tasks. Its focus on controlling information density makes it an invaluable tool for generating high-quality summaries. By incorporating CoD into your projects, you can tap into the advanced summarization capabilities of next-generation language models.

Matthew Mayo (@mattmayo13) holds a Master's degree in computer science and a graduate diploma in data mining. As Editor-in-Chief of KDnuggets, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.
