Vowpal Wabbit: Fast Learning on Big Data

Vowpal Wabbit is a fast out-of-core machine learning system, which can learn from huge, terascale datasets faster than any other current algorithm. We also explain the cute name.

The Vowpal Wabbit (VW) is a project started at Yahoo! Research and now sponsored by Microsoft Research. Started and led by John Langford, VW focuses on fast learning by building an intrinsically fast learning algorithm. John gave two guest lectures to us on AllReduce and Bandits during NYU Big Data class this semester. From what I see, he is a reputed researcher and really passionate about online learning algorithm.

The Vowpal Wabbit name is oddly pronounced and strange. Langford explained that Vowpal Wabbit is how Elmer Fudd would pronounce “Vorpal Rabbit”. As for “Vorpal”, if you Google it, you will find “Vorpal Bunny”, which is also known as a “killer rabbit” in a popular computer game. Maybe this is exactly what he wants VW to be – cute but also powerful and fast.

VW supports a number of machine learning problems, importance weighting, a selection of loss functions and optimization algorithms, like SGD (Stochastic Gradient Descent), BFGS (a a popular algorithm for parameter estimation), conjugate gradient etc. It has been used to learn a sparse terafeature (i.e. 1012 sparse features) dataset on 1000 nodes in one hour, which beats all current machine linear learning algorithms. According to its tutorial on John Langford’s GitHub, VW is about a factor of 3 faster than svmsgd on the RCV1 example, which is a collection for text categorization.

The default mode of VW is a SGD online learner with a squared loss function. To run VW, the data is expected to be in a particular format, which is

“label [weight]| Namespace Feature1:Value1|Namespace Feature2:Value2 …”.

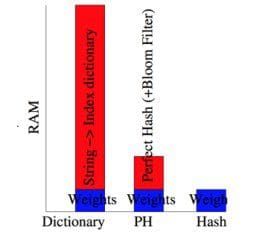

The format is also ideal for sparse representations since feature value of zero doesn’t need to be specified. If you are not sure whether the data is in the right format, you can always paste lines of the data in the Data Format Validation. VW is efficient and very scalable. To vectorize features it uses a hash trick, which takes almost no RAM and is 10 times faster since no hash-map table is maintained internally.

A dozen of companies are using VW. One of them is eHarmony, which helps people find true love. It is John’s favorite app as he said on NIPS 2011. VW was also used for solving several Kaggle competitions. More information can be found on

Note: Vowpal Wabbit is different from Deep Learning – see here Where to Learn Deep Learning – Courses, Tutorials, Software.

Related:

- MLTK: Machine Learning Toolkit in Java – free download

- Watch: Basics of Machine Learning

- Data Workflows for Machine Learning

Comment from Dan Rice on LinkedIn:

What is interesting about Vowpal is that it is fast because it does sampling, as it is a serial processing based method. This sampling is not done in the traditional way by sampling observations. Instead, they sample one feature at a time in the gradient descent – in what is stochastic gradient descent. So those in the machine learning community who criticize sampling also need to be aware that their own fast methods like Vowpal also clearly use sampling.

The Vowpal results sometimes might be as accurate as traditional gradient descent methods in standard logistic regression, but are often substantially less accurate. In fact, it is probably possible to match the speed and accuracy performance in Vowpal with large numbers of observations by using standard logistic regression and simply sampling observations. As referenced below, the inventor of Vowpal – Langford, has recently been publishing on hybrid approaches that mix the stochastic gradient descent approach of Vowpal with traditional gradient descent approaches in logistic regression to try to increase accuracy. But these are now parallel processing methods in part. With parallel processing today, the issue of speed is much less important than it was a few years ago, but the other issues like reliability, accuracy, stability and absence of bias are still far more important. If sampling is done, the key is to make sure that it does not compromise accuracy as it did in the case of Vowpal which is why there has been a movement to new more accurate methods by its inventor.

A Reliable Effective Terascale Linear Learning System, by Alekh Agarwal, Olivier Chapelle, Miroslav Dudik, John Langford.