Paradoxes of Data Science

There are many paradoxes, ironies and disconnects in today’s world of data science: pain points, things ignored, shoved under the rug, denied or paid lip service.

By Thomas Ball, Analytics Professional.

There are many paradoxes, ironies and disconnects in today’s world of data science: pain points, things ignored, shoved under the rug, denied or paid lip service to as the data science juggernaut continues to roll out across the digital space. For instance, and despite Hal Varian’s widely quoted observation that “the sexiest job in the next 10 years will be statisticians,” the advent of easy-to-use, point-and-click statistical software and analytic tools has meant that literally anyone can push buttons and get an answer. It matters little whether or not this is like handing a loaded bazooka to a gorilla and telling it to go out and have a good time. The unspoken reality is that statistical analysis has been relegated to the status of a commoditized skill of secondary importance to the much more urgently needed tasks requiring real skills associated with managing the architecture of the digital fire hose. A corollary to this is the simplifying assumption that anyone with a computer science background has the requisite statistical and analytical skills, whether they actually do or not.

It is a platitude to say that the times are disruptive but, as McLuhan noted, civilization is on the cusp of a digital, networked transformation as momentous as the printing of the Gutenberg Bible. It took several centuries for the effects of that innovation to cascade through religion and culture: as mass-produced Bibles spread throughout Europe, they reduced the need for the clergy and the authority of the Catholic Church to mediate the relationship with God, a shift that culminated in the Protestant Reformation and in Luther’s understanding that the printed Bible made each man a theologian.

In this democratized, historicizing moment, virtually no one is giving the digital revolution several centuries to play out. Hundreds of thousands of voices have sprung up on blogs or PLOS One, publishing millions of articles and books annually that collectively channel a tsunami of hope, hype and smoke and mirrors. Snake oil vendors and P.T. Barnums of big data and data science are proliferating along with a paralyzing surfeit of knowledge, with no clear line of sight through the chaos. In many ways ignorance has become the ironic arbiter of our time – we can praise folly but no one wants to pay for it.

Proxies towards a line of sight through the chaos are possible, however. One proxy is the outsized market valuations given by Wall Street to entities such as Google, Apple, Amazon and Facebook vs the huge agency and media holding companies: WPP, Publicis, Omnicom and Interpublic – Yahoo could be included in this group as well. Realistic or not, the combined market capitalization of the tech players is in the neighborhood of 20 times that of their big media counterparts (as of Friday, August 14, 2015). While these disparities may seem painfully obvious, the reasons behind them are not.

Key among these is the fact that the tech entities were founded and/or are run by tech-literate engineers and mathematicians, while the holding companies, tech semi-literate at best, are run by traditional, old-school marketers: C-suite executives who have risen to the top by virtue of their superior communication and interpersonal skills.

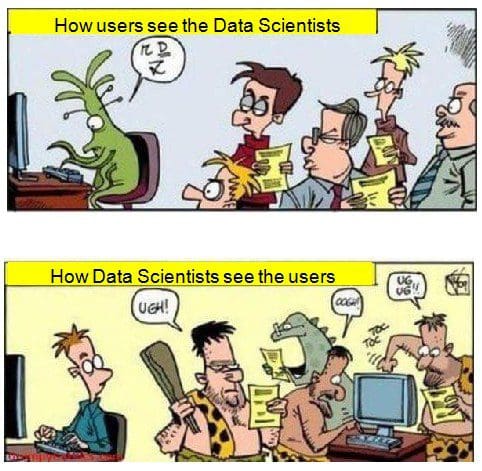

This underscores an easily identified line demarcating the tech-literate, forward thinking business segment from the innumerate traditionalist segment: traditionalists are the ones pandered to, the lowest common denominator of uninformed tech illiteracy who shut down, eyes glazed, when confronted with even modestly challenging presentations, demanding the information be dumbed down to the level of pablum for instantaneous comprehension and digestion. On the other hand, these same individuals are totally comfortable paying fluent lip service to the digital era, oblivious to the discontinuity, taking comfort in the fact that any offending, too tech-literate subordinates will be forced out of their organizations for “lack of fit.”

These traditionalists are the source of the tremendous inertia and equilibria in favor of the “common sense” ways of doing things and making money: the way it’s always been done. They’ve built their business models around maintenance of the status quo: just consider the evidence from the battle over the IP rights to music that began with Napster and continues to this day. The irony and paradox in all of this is that few are willing to identify themselves as old school or traditionalist, even if they are. It’s like the behavioral economics research showing that 80% of people think they are above average. Everyone today is a self-proclaimed, forward thinking innovator jumping on the bandwagon of the latest “big thing” to participate in all of the excitement, breathless buzz and hoopla of the times, making it extremely difficult to separate the wheat from the chaff.

This has implications for data science, particularly with respect to the appetite, potential for and investment in predictive analytics, algorithmic learning and artificial intelligence – those highly technical, least understood areas with the most significant prospective potential. There is an unacknowledged bifurcation out there in the tension between the demand for data scientists possessing so-called intuitive, creative insights along with the ability to make complex ideas “intelligible” vs those driving unintuitive, black box, algorithmic answers.

The unintuitive, tech-literate players are delivering foundational, algorithmically-driven innovations, the fodder from 20th century science fiction seeing the light of day in the form of driverless cars, virtual reality, wearable tech, research labs on a chip, etc. The media entities, on the other hand, remain hesitant and risk averse, so far downstream in this cascade of innovations that the word almost loses its meaning. They prefer things that are “comprehensible” and that have been widely consecrated, generating little controversy, e.g. in health care, using CMS approved methods such as Medicare’s CDPS for chronic illness cost analysis and containment vs, for instance, leveraging an emerging body of academic work focused on fast and efficient, machine learning approaches to dynamic diagnosis and treatment. After all, the CDPS way is “validated” while the other has little or no ground truth and is just “making things up” (that’s a quote from the front lines of the data science divide).

Another huge disconnect in all of this is the so-called war for talent, the myth that firms are competing for a dearth of skilled resources. One significant aspect of this “dearth” of talent is the stunningly unrealistic, almost delusional, nature of all too many data science job descriptions. Because HR costs are the single biggest line item on a firm’s P&L, firms make a misguided attempt at minimizing those costs by seeking candidates resembling nobody so much as Steve Jobs – even for junior positions – so that they can condense into a single person a dizzying array of skills, from computer science, IT and hands-on analysis to repping the company at industry conferences, driving innovation, strategic planning, team leadership, product development and client management. Finding a resource in possession of the intersection of all of these skills is no easy matter, but deep pocketed companies are willing to wait, boiling the ocean until the perfect hire – the proverbial purple squirrel – comes along.

That said, the reality is that there is a deluge of talent – take two seconds and go on Google Trends to witness the exponential growth, in just the last few years, in use of the keywords “data scientist” for compelling evidence of this. This means that the competition isn’t for talent per se, it’s for good, creative, cheap talent – people willing to step up and work for subsistence level wages. The emphasis is on “cheap” here, as the salary scales among many startups – the willingness to pay even for highly skilled, senior data science resources – are not only at subsistence levels, they are so low as to be not so subtle expressions of contempt for the profession. Ever since Y2K, the move to leverage offshore resources has been another expression of this institutionalized contempt, disguised as cost savings.

This shift has had the effect of resetting expectations about normative data science compensation to an incredibly low baseline: why pay more for North American talent when you can get the “same thing” at an enormous discount from the subcontinent? These are remorseless, MBA-driven, financial decisions that, like most cost-cutting initiatives, address only a single dimension of a firm’s balance sheet. Other metrics such as the quality of the work delivered, innovations developed or answers obtained may be hobbled in the process but, being less readily quantified, they are treated as unimportant.

One way to understand this is as a false dichotomy and legacy from the manufacturing industries, one that divides services into de facto white vs blue collar skillsets, with remuneration corresponding to the side of the divide you fall on (gender is another implicit divisor in this accounting). Since everyone uses a computer of some kind, the division is defined both by the platform (e.g., mobile or tablet vs fixed workstations) and by the software used on an everyday basis. Anyone who writes code is, by definition, a blue collar drone – a programmer – possessing second- or third-class, commoditized status in the organization, someone who is simply painting by numbers to the brilliantly creative, tactical outline developed by white collar thinkers and strategists – and compensated accordingly.
