Why Deep Learning is Radically Different From Machine Learning

There is a lot of confusion these days about Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL), yet the distinction is very clear to practitioners in these fields. Are you able to articulate the difference?

There is a lot of confusion these days about Artificial Intelligence (AI), Machine Learning (ML) and Deep Learning (DL). There has certainly been a massive uptick in articles claiming that AI is a competitive game changer and that enterprises should begin to seriously explore the opportunities. The distinction between AI, ML and DL is very clear to practitioners in these fields. AI is the all-encompassing umbrella that covers everything from Good Old-Fashioned AI (GOFAI) all the way to connectionist architectures like Deep Learning. ML is a sub-field of AI that covers the study of algorithms that learn by training on data. Whole swaths (not swatches) of techniques have been developed over the years, such as Linear Regression, K-means, Decision Trees, Random Forest, PCA, SVM and, finally, Artificial Neural Networks (ANN). Artificial Neural Networks are where the field of Deep Learning had its genesis.

Some ML practitioners who have had previous exposure to Neural Networks (after all, ANNs have been around since at least the early 1960s) may at first conclude that Deep Learning is nothing more than an ANN with multiple layers, and that DL’s success is due mainly to the availability of more data and of more powerful computational engines such as Graphics Processing Units (GPUs). This is of course true as far as it goes: the emergence of DL is essentially due to these two advances. However, concluding that DL is just a better algorithm than SVMs or Decision Trees is akin to focusing only on the trees and not seeing the forest.

To paraphrase Marc Andreessen, who said “Software is eating the world”: “Deep Learning is eating ML.” Two publications by practitioners in different machine learning fields best summarize why DL is taking over the world. Chris Manning, an expert in NLP, writes about the “Deep Learning Tsunami”:

Deep Learning waves have lapped at the shores of computational linguistics for several years now, but 2015 seems like the year when the full force of the tsunami hit the major Natural Language Processing (NLP) conferences. However, some pundits are predicting that the final damage will be even worse.

Nicholas Paragios writes about “Computer Vision Research: the Deep Depression”:

It might be simply because deep learning on highly complex, hugely determined in terms of degrees of freedom graphs once endowed with massive amount of annotated data and unthinkable — until very recently — computing power can solve all computer vision problems. If this is the case, well it is simply a matter of time that industry (which seems to be already the case) takes over, research in computer vision becomes a marginal academic objective and the field follows the path of computer graphics (in terms of activity and volume of academic research).

These two articles do highlight how the field of Deep Learning are fundamentally disruptive to conventional ML practices. Certainly it should be equally disruptive in the business world. I am however stunned and perplexed that even Gartner fails to recognize the difference between ML and DL. Here is Gartner’s August 2016 Hype Cycle and Deep Learning isn’t even mentioned on the slide:

What a travesty! It’s bordering on criminal that they their customer’s have a myopic notion of ML and are going to be blind sided by Deep Learning.

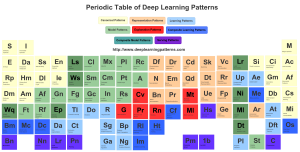

Anyway, despite being ignored, DL continues to be hyped. The current DL hype tends to claim that we have commoditized machinery that, given enough data and enough training time, is able to learn on its own. This is, of course, either an exaggeration of what the state of the art is capable of or an oversimplification of the actual practice of DL. Over the past few years, DL has given rise to a massive collection of ideas and techniques that were previously either unknown or thought to be untenable. At first, this collection of concepts seems fragmented and disparate. Over time, however, patterns and methodologies begin to emerge, and we are frantically attempting to cover this space in “Design Patterns of Deep Learning”.

Deep Learning today goes beyond multi-layer perceptrons; it is a collection of techniques and methods used to build composable, differentiable architectures. These are extremely capable machine learning systems, and we are only now seeing the tip of the iceberg. The key takeaway is that Deep Learning may look like alchemy today, but we will eventually learn to practice it like chemistry. That is, we will have a more solid foundation that lets us build learning machines whose capabilities are more predictable.
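To make “composable, differentiable architectures” a little more concrete, here is a minimal plain-Python sketch. It is illustrative only and not taken from the original post; the names `Scale`, `Tanh`, `Sequential` and `block` are hypothetical and only loosely echo the naming in frameworks such as PyTorch. The assumption is simply that every building block knows how to compute its output (forward) and how to pass gradients back to its input (backward). Because a composition of blocks exposes the same two methods, blocks and whole sub-networks can be nested freely while the chain rule still delivers gradients end to end.

```python
import math


class Scale:
    """Hypothetical differentiable module y = w * x with a learnable weight w."""

    def __init__(self, w):
        self.w = w
        self.grad_w = 0.0

    def forward(self, x):
        self.x = x  # cache the input for the backward pass
        return self.w * x

    def backward(self, grad_out):
        self.grad_w = grad_out * self.x  # dL/dw
        return grad_out * self.w         # dL/dx, handed to the previous module


class Tanh:
    """Hypothetical differentiable nonlinearity y = tanh(x)."""

    def forward(self, x):
        self.y = math.tanh(x)  # cache the output; tanh'(x) = 1 - tanh(x)^2
        return self.y

    def backward(self, grad_out):
        return grad_out * (1.0 - self.y ** 2)


class Sequential:
    """Composes modules: forward runs in order, backward applies the chain rule in reverse.

    A Sequential has the same forward/backward interface as its parts,
    so it can itself be used as a part of a larger Sequential.
    """

    def __init__(self, *modules):
        self.modules = modules

    def forward(self, x):
        for m in self.modules:
            x = m.forward(x)
        return x

    def backward(self, grad_out):
        for m in reversed(self.modules):
            grad_out = m.backward(grad_out)
        return grad_out


def block(w):
    """A hypothetical reusable 'layer': scale, then squash."""
    return Sequential(Scale(w), Tanh())


# Nested composition: tanh(-1.5 * tanh(0.5 * x))
net = Sequential(block(0.5), block(-1.5))
x = 0.8
y = net.forward(x)
dy_dx = net.backward(1.0)  # gradient of the output with respect to the input

# Sanity check against a central finite difference
eps = 1e-6
numeric = (net.forward(x + eps) - net.forward(x - eps)) / (2 * eps)
print(f"analytic={dy_dx:.6f} numeric={numeric:.6f}")
```

Comparing the analytic gradient with a finite-difference estimate is a common way to check hand-written backward passes; real deep learning frameworks automate this gradient bookkeeping, which is part of what makes large composed architectures practical to build.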

BTW, if you are interested in finding out how Deep Learning can help you in your work, please feel free to leave your email at www.intuitionmachine.com or join the discussion on Facebook: www.facebook.com/groups/deeplearningpatterns/

Bio: Carlos Perez is a software developer presently writing a book on "Design Patterns for Deep Learning". This is where he sources his ideas for his blog posts.

Original. Reposted with permission.

