Python Data Preparation Case Files: Removing Instances & Basic Imputation

This is the first of 3 posts to cover imputing missing values in Python using Pandas. The slowest-moving of the series (out of necessity), this first installment lays out the task and data at the risk of boring you. The next 2 posts cover group- and regression-based imputation.

Data preparation covers a lot of potential ground: data integration, data transformation, feature selection, feature engineering, and much, much more. One of the most basic, and most important, aspects of data preparation is dealing with missing values.

From a practical standpoint, there are 3 general approaches to dealing with data instances which include missing values:

- Delete data instances with missing values

- Fill in missing values with some derived value

- Leave missing values as is, if your algorithm can handle them

Keep in mind that these approaches are only from a technical point of view. There do not in any way address which approach, or combination of approaches, are appropriate in a given scenario. Such decisions depend on an understanding of the data, the domain, and the desired outcome, and cannot be covered in a post such as this.

There are, of course, varying implementations of the first approach, deleting data instances with missing values: delete all instances with any number of missing values; delete all instances with 2 or more missing values; delete all instances missing only a particular feature's value. Likewise, the second approach, filling in missing values -- or imputation -- can be based on a variety of measures, including mean, median, mode, regression, or other strategies. The third approach alludes to the fact that some machine learning algorithms, such as Random Forests, can often sufficiently deal with missing values, while others cannot.

As points #1 and #3 are quite straightforward (again, the reasoning behind their selections may be complex, but the actions taken after deciding to adopt them are quite simple), point #2, imputation, is what we will be mainly discussing in this series of posts. As you will see, imputation is varied enough to warrant extra consideration.

I just want to caution, again, that this is a brief outline of a few approaches to dealing with missing data values, and stress that there is no endorsement of any particular approach to any particular scenario, especially concerning domain-related holistic decisions to dealing with missing data. You should be warned that you need to look elsewhere for guidance in these matters.

The Data

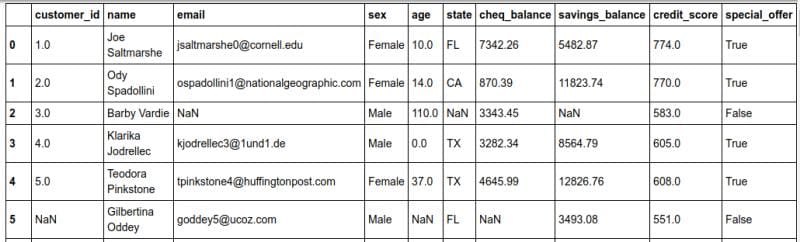

For these exercises, we will use a mock banking information dataset built using Mockaroo. The small dataset includes 10 variables, or features (including the class, or target, variable) -- coinciding with columns of a table -- and 1000 instances -- coinciding with rows of a table (see Figure 1). The idea of our exercise is to emulate a process which uses bank customer data to decide (and predict) whether or not a special offer will be extended to the customer, with the assumption that "better" customers will receive an offer.

What exactly is a "better" customer? How will we be modeling our data? We don't really care right yet. We will partake in some amateur speculation during our data preparation exercise, but ultimately we are concerned with getting our dataset ready for this modeling and prediction process, as opposed to performing said process. In real life you don't want to fake it til you make it with domain knowledge, but we'll make some reasoned assumptions about our data usefulness as we proceed.

Our plan of attack includes some data inspection along with using some basic imputation methods to help fill in our dataset's missing values, as well as deciding as to whether we should drop some instances based on which variables they are missing.

Let's start with importing the dataset and having a look at it. We will also summarize the missing values. First, grab the dataset here.

import pandas as pd

import numpy as np

# Importing the dataset

dataset_filename = 'mock_bank_data_original.csv'

df = pd.read_csv(dataset_filename)

# Summarize missing values

print 'Null values by variable:'

print '------------------------'

df.isnull().sum()

Null values by variable:

------------------------

customer_id 18

name 0

email 158

sex 111

age 113

state 40

cheq_balance 23

savings_balance 96

credit_score 0

special_offer 0

dtype: int64

Figure 1: Mock bank dataset for our exercise.

The Process

This post will deal with the first set of data preprocessing tasks, specifically dropping some instances and preforming some basic imputation. A pair of follow up posts will demonstrate imputing a value based on the category membership of a different variable (such as using the mean salary of everyone living in Washington state to determine the missing salary values of Washington state residents) and performing imputation by regression (such as using a combination of variables to perform linear regression, and basing missing values of a different variable on the resultant linear regression model).

Dropping Instances with Missing State Value

Our first half-educated assumption is that we will be basing much of our preparation around states in which our customers live, and so we will unfortunately be sacrificing any instance that lacks a value for this variable. The bad news is that we will be down to 960 instances; the good news is that (hopefully) we won't have to sacrifice any more.

Imputing Missing Credit Scores

Let's assume that those with domain knowledge have indicated that the median credit score for all customers would be a valid missing value replacement. Sure, mean could be used, or the most frequent value, or could be devised by some other more complex scheme, but our experts assure us this is appropriate for filling in this particular variable's missing values.

Note that something state-specific, such as replacing with the mean credit score of bank customers in the same state, could also have been used. We will save this approach for use in the next post's tasks, however.

Discarding Unnecessary Variables

Since we don't need all of the variables to be able to make predictions about which customer gets the offer (for example, whether the customer's name is "Daniel" or Michael" should not make a difference), we will go ahead and discard the unnecessary data. We might save this task for after all data preprocessing has been complete, but since this first post is a bit shy on steps, let's take advantage and work it out now.

Specifically, we definitely won't be needing the 'customer_id', 'name', or 'email' variables.

Saving the New Dataset

Next, since we want to save the dataset in its new form to use in the next tutorial, we will create a new, up-to-date CSV.

The Code

The full code with comments to accomplish the above is shown below.

This was a slow start, and we didn't break much ground, but hopefully the trouble was worth it for next time, when we cover imputation by category membership, or group-based imputation, followed by regression-based imputation. It's also good that we have demonstrated basic imputation based on all values of the same variable at this point as well, in order to have something to compare our more creative methods to.

Hey, at least we've done the boring stuff already, at this point. The next post will be live sooner than later; in the meantime, check out the related posts below for some more data preparation-related reading material.

Related: