Introduction to Apache Spark

This is the first blog in this series to analyze Big Data using Spark. It provides an introduction to Spark and its ecosystem.

By Suvro Banerjee, Machine Learning Engineer @ Juniper Networks

By Alexander Savchuk

What is Apache Spark ?

Apache Spark is a cluster computing platform designed to be fast and general-purpose.

At its core, Spark is a “computational engine” that is responsible for scheduling, distributing, and monitoring applications consisting of many computational tasks across many worker machines, or a computing cluster.

On the speed side, Spark extends the popular MapReduce model to efficiently support more types of computations, including interactive queries and stream processing. One of the main features Spark offers for speed is the ability to run computations in memory.

On the generality side, Spark is designed to cover a wide range of workloads that previously required separate distributed systems, including batch applications, iterative algorithms, interactive queries, and streaming which is often necessary in production data analysis pipelines.

Spark is designed to be highly accessible, offering simple APIs in Python, Java, Scala, and SQL, and rich built-in libraries. It also integrates closely with other Big Data tools. In particular, Spark can run in Hadoop clusters and access any Hadoop data source, including Cassandra (NoSQL database management system)

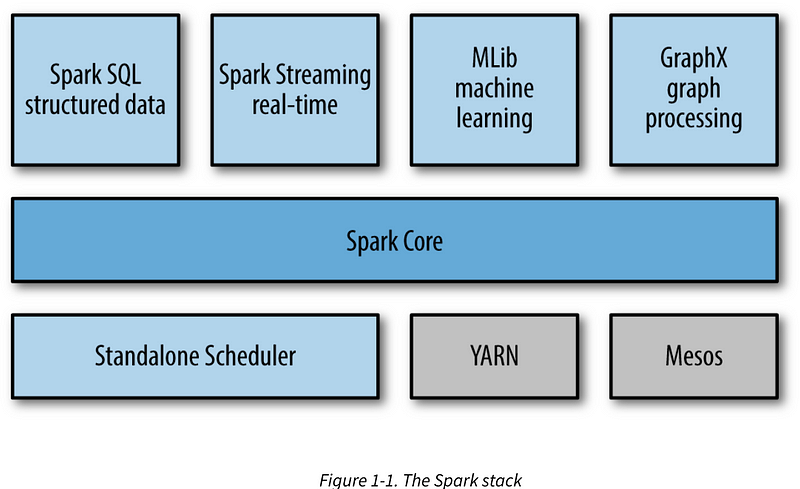

Briefly introduce each of Spark’s components

Spark Core : Spark Core contains the basic functionality of Spark, including components for task scheduling, memory management, fault recovery, interacting with storage systems etc. Spark Core is also home to the API that defines resilient distributed datasets (RDDs), which are Spark’s main programming abstraction. RDDs represent a collection of items distributed across many compute nodes that can be manipulated in parallel.

Spark SQL : Spark SQL is Spark’s package for working with structured data. It allows querying data via SQL as well as the Apache Hive variant of SQL — called the Hive Query Language (HQL) — and it supports many sources of data, including Hive tables, Parquet, and JSON.

Spark Streaming : Spark Streaming is a Spark component that enables processing of live streams of data. Examples of data streams include logfiles generated by production web servers, or queues of messages containing status updates posted by users of a web service.

MLlib : Spark comes with a library containing common machine learning (ML) functionality, called MLlib. MLlib provides multiple types of machine learning algorithms, including classification, regression, clustering, and collaborative filtering, as well as supporting functionality such as model evaluation and data import etc. All of these methods are designed to scale out across a cluster.

GraphX : GraphX is a library for manipulating graphs (e.g., a social network’s friend graph) and performing graph-parallel computations.

Cluster Managers : Under the hood, Spark is designed to efficiently scale up from one to many thousands of compute nodes. To achieve this while maximizing flexibility, Spark can run over a variety of cluster managers, including Hadoop YARN, Apache Tez, Apache Mesos, and a simple cluster manager included in Spark itself called the Standalone Scheduler. If you are just installing Spark on an empty set of machines, the Standalone Scheduler provides an easy way to get started; if you already have a Hadoop YARN or Apache Tez cluster, however, Spark’s support for these cluster managers allows your applications to also run on them.

Who uses Spark, and for What ?

Because Spark is a general-purpose framework for cluster computing, it is used for a diverse range of applications.

Data Science Tasks : For our purposes a data scientist is somebody whose main task is to analyze and model data. Data scientists may have experience with SQL, statistics, predictive modeling (machine learning), and programming, usually in Python, Matlab, or R. Data scientists also have experience with techniques necessary to transform data into formats that can be analyzed for insights (sometimes referred to as data wrangling).

The Spark shell makes it easy to do interactive data analysis using Python or Scala. Spark SQL also has a separate SQL shell that can be used to do data exploration using SQL. Machine learning and data analysis is supported through the MLLib libraries.

After initial exploration phase, the work of a data scientist will be “productized,” or extended, hardened (i.e., made fault-tolerant), and tuned to become a production data processing application, e.g. Production Recommender System that is integrated into a web application and used to generate product suggestions to users.

Data Processing Applications : The other main use case of Spark can be described in the context of the engineer persona. For our purposes here, we think of engineers as a large class of software developers who use Spark to build production data processing applications. These developers usually have an understanding of the principles of software engineering, such as encapsulation, interface design, and object-oriented programming.

For engineers, Spark provides a simple way to parallelize these applications across clusters, and hides the complexity of distributed systems programming, network communication, and fault tolerance.

A Brief History of Spark

Spark started in 2009 as a research project in the UC Berkeley RAD Lab, later to become the AMPLab. The researchers in the lab had previously been working on Hadoop MapReduce, and observed that MapReduce was inefficient for iterative and interactive computing jobs. Thus, from the beginning, Spark was designed to be fast for interactive queries and iterative algorithms, bringing in ideas like support for in-memory storage and efficient fault recovery.

Soon after its creation it was already 10–20× faster than MapReduce for certain jobs.

Some of Spark’s first users were other groups inside UC Berkeley, including machine learning researchers such as the Mobile Millennium project, which used Spark to monitor and predict traffic congestion in the San Francisco Bay Area.

Spark was first open sourced in March 2010, and was transferred to the Apache Software Foundation in June 2013, where it is now a top-level project. It is an open source project that has been built and is maintained by a thriving and diverse community of developers. In addition to UC Berkeley, major contributors to Spark include Databricks, Yahoo!, and Intel.

Internet powerhouses such as Netflix, Yahoo, and eBay have deployed Spark at massive scale, collectively processing multiple petabytes of data on clusters of over 8,000 nodes. It has quickly become the largest open source community in big data, with over 1000 contributors from 250+ organizations.

Concluding Note

Spark can create distributed datasets from any file stored in the Hadoop distributed filesystem (HDFS) or other storage systems supported by the Hadoop APIs (including your local filesystem, Amazon S3, Cassandra, Hive, HBase, etc.).

It’s important to remember that Spark does not require Hadoop; it simply has support for storage systems implementing the Hadoop APIs. Spark supports text files, SequenceFiles, Avro, Parquet, and any other Hadoop InputFormat.

My next blog in this Series

Downloading Spark and getting started with Python notebooks

Sources

- Learning Spark by Matei Zaharia, Patrick Wendell, Andy Konwinski, Holden Karau

- Databricks — Unifying Data Science, Engineering and Business

Bio: Suvro Banerjee is a Machine Learning Engineer @ Juniper Networks.

Original. Reposted with permission.

Related:

- Apache Spark : Python vs. Scala

- Deep Learning With Apache Spark: Part 1

- The Data Scientist’s Guide to Apache Spark™