Google’s New Explainable AI Service

Google has started offering a new service for “explainable AI,” or XAI, as it is fashionably called. The tools offered so far are modest, but the intent is in the right direction.

AI has an explainability problem

Artificial intelligence is set to transform global productivity, working patterns, and lifestyles, and to create enormous wealth.

Research firm Gartner expects the global AI economy to increase from about $1.2 trillion last year to about $3.9 trillion by 2022, while McKinsey sees it delivering global economic activity of around $13 trillion by 2030.

AI techniques, especially Deep Learning (DL) models, are revolutionizing the business and technology world with jaw-dropping performance in one application area after another: image classification, object detection, object tracking, pose recognition, video analytics, and synthetic image generation, to name just a few.

They are being used in healthcare, IT services, finance, manufacturing, autonomous driving, video game playing, scientific discovery, and even the criminal justice system.

However, they are nothing like classical Machine Learning (ML) algorithms and techniques. DL models use millions of parameters and create extremely complex, highly nonlinear internal representations of the images or datasets fed to them.

They are, therefore, often called the perfect black-box ML techniques. We can get highly accurate predictions from them after we train them with large datasets, but we have little hope of understanding the internal features and representations of the data that a model uses to classify a particular image into a category.

Google has started a new service to tackle that

Without a doubt, Google (or its parent company Alphabet) has a big stake in the proper development of the enormous AI-enabled economy, as projected by business analysts and economists (see the previous section).

Google famously set its official strategy to be “AI-first” back in 2017.

Therefore, it is perhaps feeling the pressure to be the industry's torchbearer for making AI less mysterious and more accessible to the general user base, by offering a service in explainable AI.

What is explainable AI (or xAI)?

The notion is as simple as the name suggests. You want your model to output not only predictions but also some explanation of why the predictions turned out the way they did.

But why is it needed?

This article covers some essential points. The primary reasons for AI systems to offer explainability are to:

- Improve human readability

- Determine the justifiability of the decision made by the machine

- Help decide accountability and liability, leading to good policy-making

- Avoid discrimination

- Reduce societal bias

There is still much debate around it, but a consensus is emerging that post-prediction justification is not a correct approach. Explainability goals should be built into the AI model/system at the core design stage and should be an integral part of the system rather than an attachment.

Some popular methods have been proposed:

- Understand the data better: intuitive visualizations showing the discriminative features

- Understand the model better: visualizing the activations of neural net layers

- Understand user psychology and behavior better: incorporating behavior models into the system alongside statistical learning, and generating or consolidating appropriate data and explanations along the way

Even DARPA has started a whole program to build and design these XAI principles and algorithms for future AI/ML-driven defense systems.

Read this article for a thorough discussion of the concept: “Should AI explain itself? Or should we design Explainable AI so that it doesn’t have to?”

Google Cloud hopes to lead in xAI

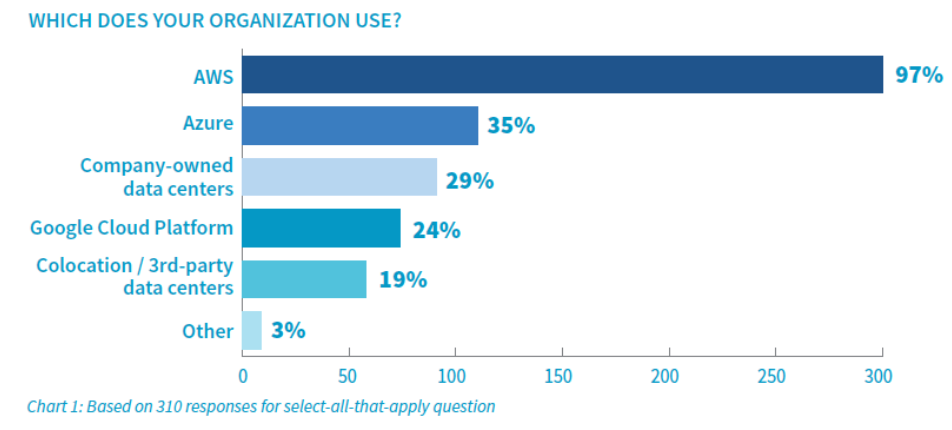

Google is a leader in attracting AI and ML talent, and it is the undisputed giant of the world's current information-based economy. However, its cloud services are a distant third behind those of Amazon and Microsoft.

Still, as this article points out, although the traditional infrastructure-as-a-service wars have largely been decided, new technologies such as AI and ML have opened the field for players to try novel themes, strategies, and approaches.

Along those lines, at an event in London this week, Google’s cloud computing division pitched a new facility that it hopes will give it an edge over Microsoft and Amazon.

The famous AI researcher Prof. Andrew Moore introduced and explained this service in London.

From their official blog:

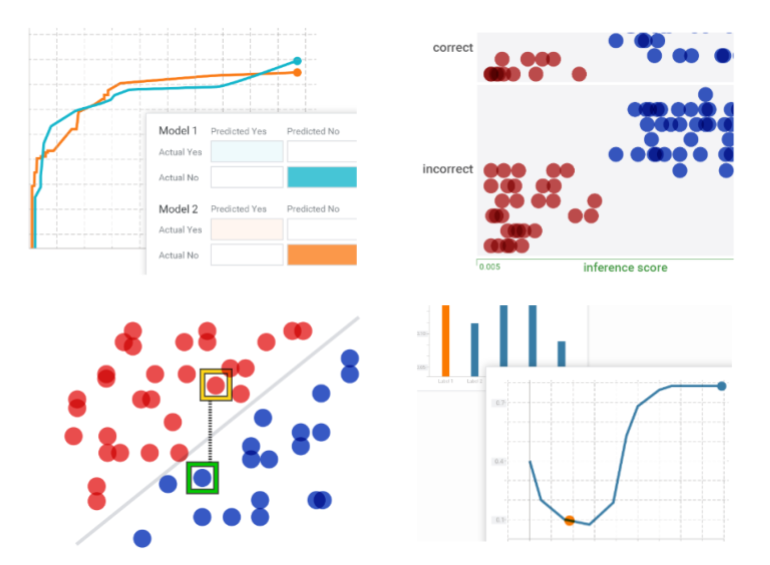

“Explainable AI is a set of tools and frameworks to help you develop interpretable and inclusive machine learning models and deploy them with confidence. With it, you can understand feature attributions in AutoML Tables and AI Platform and visually investigate model behavior using the What-If Tool.”
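
The blog mentions feature attributions, which score how much each input feature contributed to one particular prediction. The article does not say how Google computes them, so the following is only a minimal sketch of one widely used attribution technique, integrated gradients, applied to a hypothetical logistic-regression model with made-up weights. The names `predict`, `gradient`, and `integrated_gradients` are illustrative assumptions, not part of any Google API.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Hypothetical logistic model: probability of the positive class."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return sigmoid(z)


def gradient(weights, bias, x):
    """Analytic gradient of predict() with respect to each input feature."""
    p = predict(weights, bias, x)
    return [p * (1.0 - p) * w for w in weights]


def integrated_gradients(weights, bias, x, baseline, steps=200):
    """Approximate integrated gradients with a midpoint Riemann sum.

    Gradients are averaged along the straight path from `baseline` to `x`,
    then scaled by how far each feature moved from its baseline value.
    """
    totals = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(weights, bias, point)):
            totals[i] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, totals)]


if __name__ == "__main__":
    # Made-up model and input, for illustration only.
    weights, bias = [2.0, -1.0, 0.5], -0.5
    x, baseline = [1.0, 0.5, 2.0], [0.0, 0.0, 0.0]
    for i, a in enumerate(integrated_gradients(weights, bias, x, baseline)):
        print(f"feature {i}: attribution {a:+.4f}")
```

A handy sanity check is the completeness property: the attributions should sum, approximately, to the difference between the model's output at the input and at the baseline. A positive attribution pushed this prediction up and a negative one pushed it down.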

Initially, modest goals

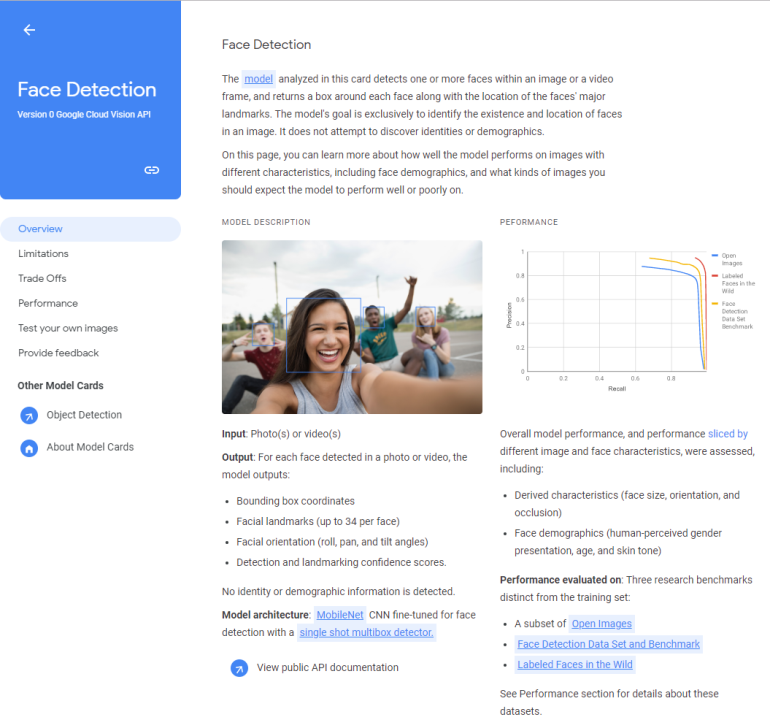

Initially, the goals and reach are rather modest. The service will provide information about the performance and potential shortcomings of the face- and object-detection models.

However, with time, Google Cloud Platform (GCP) hopes to offer a wider set of insights and visualizations to help make the inner workings of its AI systems less mysterious and more trustworthy to everybody.

Prof. Moore candidly acknowledged that explainability has given even the best minds at Google a hard time:

“One of the things which drives us crazy at Google is we often build really accurate machine learning models, but we have to understand why they’re doing what they’re doing. And in many of the large systems, we built for our smartphones or for our search-ranking systems, or question-answering systems, we’ve internally worked hard to understand what’s going on.”

One way Google hopes to give users better explanations is through so-called model cards.

Google already offered a scenario-analysis tool, the What-If Tool, and it is encouraging users to pair the new explainability tools with this framework.

“You can pair AI Explanations with our What-If tool to get a complete picture of your model’s behavior,” said Tracy Frey, Director of Strategy at Google Cloud.

And, for now, it’s a free add-on: Explainable AI tools are provided at no extra charge to users of AutoML Tables or AI Platform.

For more details and a historical perspective, please consider reading this wonderful whitepaper.

Overall, this sounds like a good start. However, not everybody, even within Google, is enthusiastic about the whole idea of xAI.

Some say bias is a bigger issue

Peter Norvig, Google’s director of research, has previously said of explainable AI:

“You can ask a human, but, you know, what cognitive psychologists have discovered is that when you ask a human you’re not really getting at the decision process. They make a decision first, and then you ask, and then they generate an explanation and that may not be the true explanation.”

So, essentially, our decision-making process is limited by psychology, and it will be no different for a machine. Do we really need to alter these mechanics for machine intelligence? And what if the answers and insights that come out are not palatable to users?

Instead, he argued that tracking and identifying bias and fairness in the decision-making process of the machine should be given more thought and importance.

For this, the inner workings of a model are not necessarily the best place to look. One can instead examine the ensemble of output decisions the system makes over time and identify specific patterns of hidden bias.
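
A minimal sketch of such an output-side audit, assuming the system's decisions have been logged as hypothetical (group, approved) pairs, is to compare approval rates across groups without looking inside the model at all. The ratio of the lowest to the highest group rate is one common disparity measure, and the 0.8 threshold follows the “four-fifths rule” heuristic from US employment-selection guidelines; both are illustrative choices, not something the article or Norvig prescribes.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from logged (group, approved) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: a / n for group, (a, n) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so rates are equal
    return min(rates.values()) / highest


def flag_possible_bias(decisions, threshold=0.8):
    """Hypothetical audit: flag when the ratio falls below `threshold`."""
    rates = approval_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    return ratio < threshold, ratio, rates


if __name__ == "__main__":
    # Made-up decision log, for illustration only.
    log = ([("A", True)] * 8 + [("A", False)] * 2
           + [("B", True)] * 5 + [("B", False)] * 5)
    flagged, ratio, rates = flag_possible_bias(log)
    print(rates, f"ratio={ratio:.3f}", "flagged" if flagged else "ok")
```

A flag here is only a prompt for investigation: a gap in outcomes can have legitimate causes, but a persistent one across many decisions is exactly the kind of hidden pattern a per-prediction explanation would not reveal.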

Should bias and fairness be given more importance than a mere explanation for future AI systems?

If you apply for a loan and get rejected, an explainable AI service may output a statement like “your loan application was rejected because of insufficient proof of income.” However, anybody who has built ML models knows that the process is not that one-dimensional. The specific structure and weights of the mathematical models that give rise to such a decision (often as an ensemble) depend on the collected dataset, which can be biased against certain sections of society where income and economic mobility are concerned.

So the debate will rage on over the relative importance of a system that merely shows rudimentary, watered-down explanations versus a system built with less bias and a much higher degree of fairness.

If you have any questions or ideas to share, please contact the author at tirthajyoti[AT]gmail.com. Also, you can check the author’s GitHub repositories for code, ideas, and resources in machine learning and data science. If you are, like me, passionate about AI/machine learning/data science, please feel free to add me on LinkedIn or follow me on Twitter.

Original. Reposted with permission.
