The Best NLP with Deep Learning Course is Free

Stanford's Natural Language Processing with Deep Learning is one of the most respected courses on the topic that you will find anywhere, and the course materials are freely available online.

One of the most acclaimed courses on using deep learning techniques for natural language processing is freely available online.

To be clear, this isn't a recent development. The materials for Stanford's Natural Language Processing with Deep Learning (CS224n) have been available online for years, and they are continually updated to reflect the in-person course as it is currently taught. And to be even clearer, there is no option to enroll, as this is not a MOOC. What is available is simply the set of materials from this world-class course on deep learning for NLP.

First, to provide clarity, here is the course's self-description:

Natural language processing (NLP) or computational linguistics is one of the most important technologies of the information age. Applications of NLP are everywhere because people communicate almost everything in language: web search, advertising, emails, customer service, language translation, virtual agents, medical reports, etc. In recent years, deep learning (or neural network) approaches have obtained very high performance across many different NLP tasks, using single end-to-end neural models that do not require traditional, task-specific feature engineering. In this course, students will gain a thorough introduction to cutting-edge research in Deep Learning for NLP. Through lectures, assignments and a final project, students will learn the necessary skills to design, implement, and understand their own neural network models.

The course is taught by renowned academic, researcher, and author Christopher Manning, along with head TA Matthew Lamm, course coordinator Amelie Byun, and a small army of teaching assistants.

The CS224n webpage materials reflect the winter 2020 offering of the class, so it's about as up to date as one could hope for. Lecture slides, notes, reading materials curated from around the web, assignments, code; it's all there, and it's all of high quality.

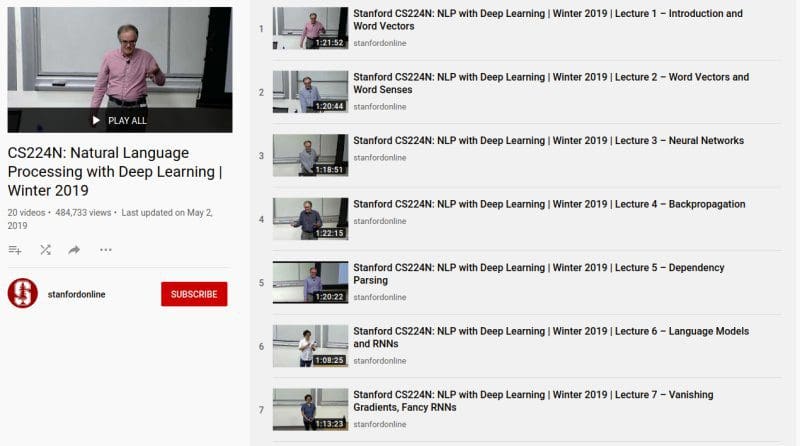

The video lectures are a slightly different story. The most recent lecture videos are available only through Stanford's secure student portal, so the best option for everyone else is the set of videos from last winter's (2019) offering, posted on the official Stanford YouTube channel. These videos line up very well with the rest of the current materials. The main difference appears to be a relative lack of post-BERT lecture material, which makes sense given when the videos were recorded.

Topics covered in the course include word vectors, neural networks with PyTorch basics, backpropagation, linguistic structure, language models, RNNs, attention, machine translation, convolutional neural nets, language generation, and much more. Recommended prerequisites include proficiency in Python, college-level calculus and linear algebra, basic probability and statistics, and foundational knowledge of machine learning.

You can also access the most recent final project reports, in which students apply what they learned during the course. Standout projects that have been recognized as such are listed at the top of the page, and what appears to be an exhaustive list of all projects from the 2019 cohort is also available.

There are a number of freely available NLP courses and other learning resources online. Some are good, some are not. Stanford's Natural Language Processing with Deep Learning nearly transcends them, and it is regarded as close to authoritative in some circles. If you are looking to understand NLP better, regardless of your prior exposure to the topics covered in this course, CS224n is almost certainly a resource you should take seriously.

