5 Fantastic Practical Natural Language Processing Resources

This post presents 5 practical resources for getting started in natural language processing, covering a wide array of topics and approaches.

Are you interested in some practical natural language processing resources?

There are so many NLP resources available online, especially those relying on deep learning approaches, that sifting through them to find the quality ones can be quite a task. There are some well-known, top-notch mainstay resources of mainly theoretical depth, especially the Stanford and Oxford NLP with deep learning courses:

- Natural Language Processing with Deep Learning (Stanford)

- Deep Learning for Natural Language Processing (Oxford)

But what if you've completed these, have already gained a foundation in NLP and want to move to some practical resources, or simply have an interest in other approaches, which may not necessarily be dependent on neural networks? This post (hopefully) will be helpful.

1. Natural Language Processing with Python – Analyzing Text with the Natural Language Toolkit

This is the introductory natural language processing book, at least from the dual perspectives of practicality and the Python ecosystem.

This book provides a highly accessible introduction to the field of NLP. It can be used for individual study or as the textbook for a course on natural language processing or computational linguistics, or as a supplement to courses in artificial intelligence, text mining, or corpus linguistics.

As the title suggests, the book approaches NLP by using the Natural Language Toolkit (NLTK), which you have either heard of already or need to start learning about right away.

NLTK includes extensive software, data, and documentation, all freely downloadable from http://nltk.org/. Distributions are provided for Windows, Macintosh and Unix platforms. We strongly encourage you to download Python and NLTK, and try out the examples and exercises along the way.

The best part of the book is that it gets right to it; no messing around, just plenty of code and concepts.
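For a taste of the style, here is a minimal sketch of a few basic NLTK operations: tokenizing, counting word frequencies, stemming, and collecting bigrams. It is not taken from the book, and the sample sentence is made up for illustration. It assumes NLTK is installed and sticks to tools that should not need any corpus downloads.

```python
from nltk import FreqDist, bigrams
from nltk.stem import PorterStemmer
from nltk.tokenize import wordpunct_tokenize

# Sample sentence invented for this sketch; it does not come from the book.
text = "The cats are chasing the mice, and the mice are running away."

# wordpunct_tokenize is regex-based, so no extra data download is required.
# Keep alphabetic tokens only and lowercase them.
tokens = [t.lower() for t in wordpunct_tokenize(text) if t.isalpha()]

# Count how often each token occurs.
freq = FreqDist(tokens)

# Reduce each token to its stem with the Porter stemmer.
stemmer = PorterStemmer()
stems = [stemmer.stem(t) for t in tokens]

# Collect adjacent word pairs.
pairs = list(bigrams(tokens))

print(freq.most_common(3))
print(stems)
print(pairs[:3])
```

The book goes much further than this (tagging, parsing, classification), but these few calls are roughly where the early chapters start.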

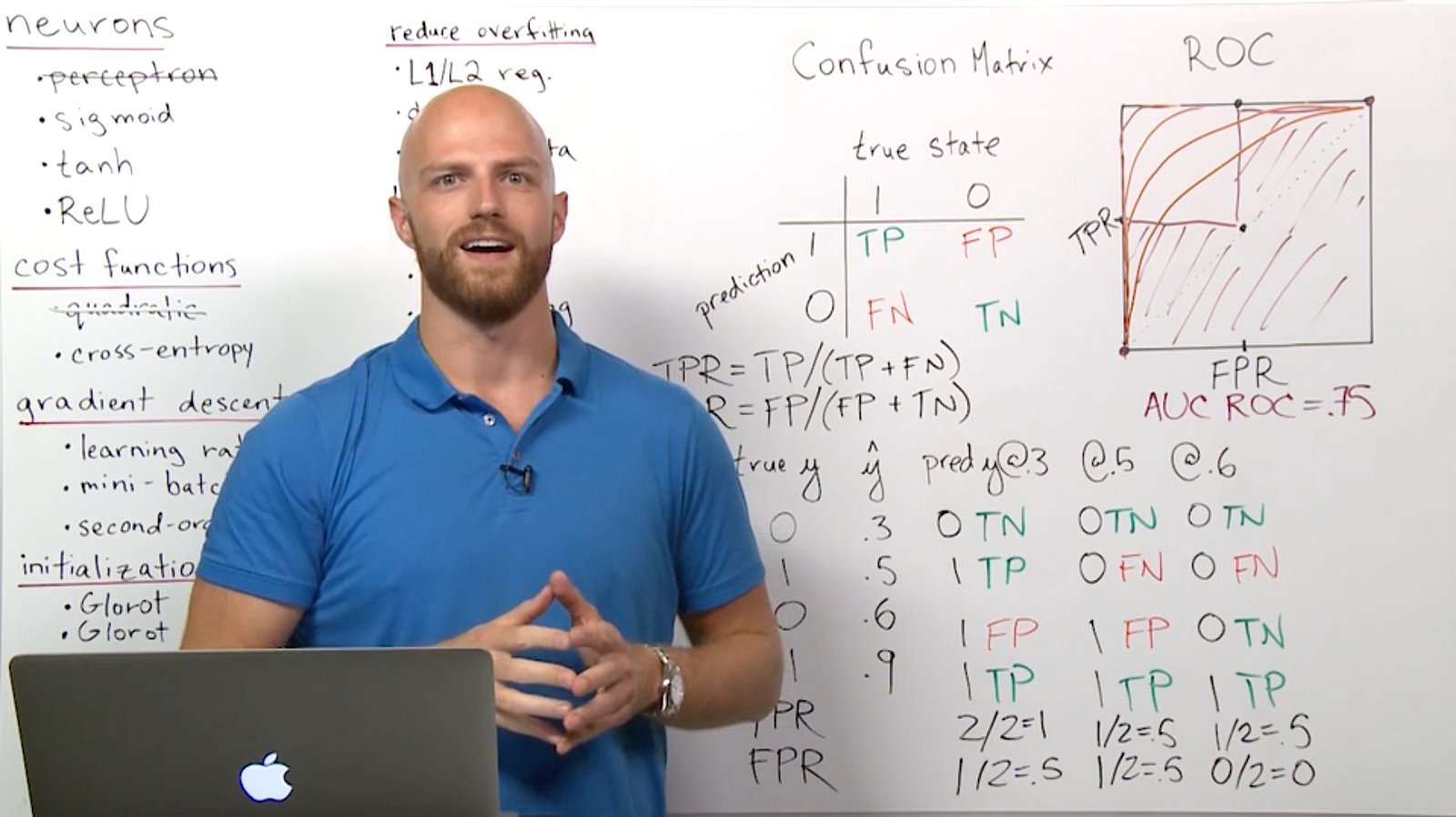

2. Deep Learning for Natural Language Processing: Tutorials with Jupyter Notebooks

This is a repo of the Jupyter notebooks which go along with Jon Krohn's fantastic set of videos on deep learning for NLP. The notebooks are lifted directly from his video walkthroughs, and so you don't really miss out on much as far as content goes. Protip: if you are interested in watching his videos -- which are offered via O'Reilly's Safari platform -- sign up for a free 10-day trial and rip through the few hours of video before it expires.

Here's an overview of what Jon covers in these notebooks and the accompanying videos:

These tutorials are for you if you’d like to learn how to:

- preprocess natural language data for use in machine learning applications;

- transform natural language into numerical representations (with word2vec);

- make predictions with deep learning models trained on natural language;

- apply advanced NLP approaches with Keras, the high-level TensorFlow API; or

- improve deep learning model performance by tuning hyperparameters.

The combination of the notebooks, the videos, and your own environment to follow along in is a great way to kill a long afternoon.
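To make the first two items in that list a little more concrete, here is a rough sketch of preprocessing text and turning it into numbers. It is not from Krohn's notebooks: the corpus, the stop-word list, and the function names are all invented for illustration, and it uses plain integer ids rather than word2vec vectors. Integer ids like these are typically what you would feed into an embedding layer.

```python
import re
from collections import Counter

# Tiny made-up corpus and stop-word list, used only for illustration.
corpus = [
    "The movie was great, really great!",
    "The plot was dull and the acting was worse.",
]
STOP_WORDS = {"the", "was", "and", "a", "an"}


def preprocess(text):
    """Lowercase, keep alphabetic tokens, and drop stop words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]


def build_vocab(token_lists):
    """Map each token to an integer id; 0 is reserved for unknown words."""
    counts = Counter(t for tokens in token_lists for t in tokens)
    # Most frequent tokens get the smallest ids; ties break alphabetically.
    ordered = sorted(counts, key=lambda t: (-counts[t], t))
    return {token: i for i, token in enumerate(ordered, start=1)}


def encode(tokens, vocab):
    """Replace each token with its id, falling back to 0 if unseen."""
    return [vocab.get(t, 0) for t in tokens]


cleaned = [preprocess(doc) for doc in corpus]
vocab = build_vocab(cleaned)
encoded = [encode(tokens, vocab) for tokens in cleaned]

print(cleaned)
print(encoded)  # [[4, 1, 6, 1], [5, 3, 2, 7]]
```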

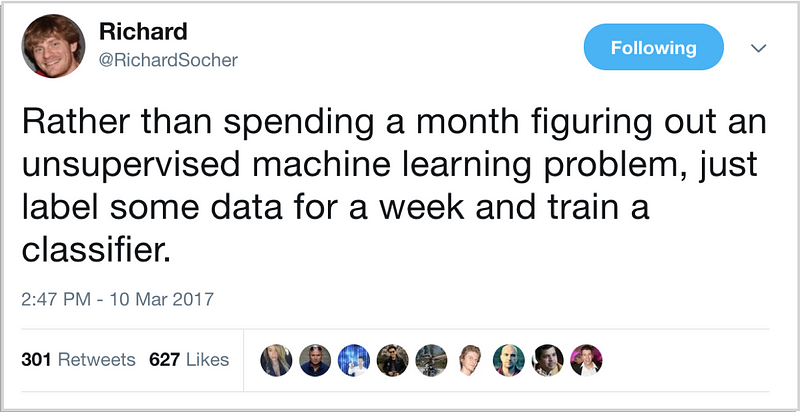

3. How to solve 90% of NLP problems: a step-by-step guide

This is another really great set of tutorials in the form of notebooks, following a trajectory similar in style to Krohn's, above.

Emmanuel Ameisen of Insight AI breaks down what steps are needed to accomplish what tasks, but his summary post really excels at tying the lessons together and providing some nice visualizations.

After reading this article, you’ll know how to:

- Gather, prepare and inspect data

- Build simple models to start, and transition to deep learning if necessary

- Interpret and understand your models, to make sure you are actually capturing information and not noise

We wrote this post as a step-by-step guide; it can also serve as a high level overview of highly effective standard approaches.
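As an illustration of the "build simple models to start" advice, here is a minimal sketch of a bag-of-words Naive Bayes classifier in plain Python. It is not Ameisen's code: the training examples, labels, and function names are made up for this post, and in practice you would likely reach for a library implementation instead.

```python
import math
import re
from collections import Counter, defaultdict

# Toy labeled examples invented for this sketch.
train = [
    ("forest fire spreading near the town", "disaster"),
    ("flood waters rising evacuate now", "disaster"),
    ("this new album is fire", "other"),
    ("my heart is flooding with joy", "other"),
]


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def fit(examples):
    """Collect per-class word counts, class frequencies, and the vocabulary."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    for text, label in examples:
        class_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, class_counts, vocab


def predict(text, model):
    """Return the class with the highest log-probability (add-one smoothing)."""
    word_counts, class_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        score = math.log(class_counts[label] / total_docs)
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            if word in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


model = fit(train)
print(predict("fire near the forest", model))  # disaster
print(predict("this album is joy", model))     # other
```

A baseline like this is easy to inspect: the per-class word counts show directly which words push a prediction one way or the other, which is in the spirit of the third point in the list.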

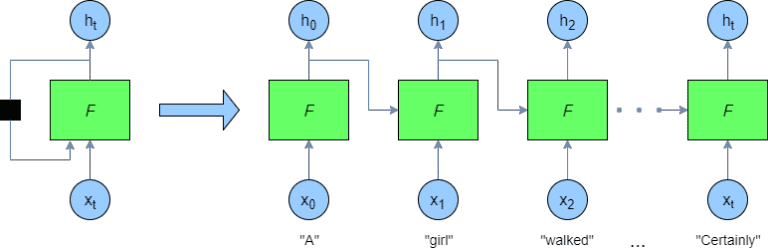

4. Keras LSTM tutorial – How to easily build a powerful deep learning language model

This tutorial is much more focused than the previous resources, in that it covers implementing an LSTM for language modeling in Keras. That's it. But it does so in detail, with explanation, code, and visuals, and gets the point across. Less of a time investment than the other resources thus far, this tutorial can be completed start to finish, including reproducing the code yourself, within a couple of hours.

In this tutorial, I’ll concentrate on creating LSTM networks in Keras, briefly giving a recap or overview of how LSTMs work. In this Keras LSTM tutorial, we’ll implement a sequence-to-sequence text prediction model by utilizing a large text data set called the PTB corpus.
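To give a sense of how text gets framed for this kind of next-word prediction, here is a minimal sketch of cutting a token sequence into input and target windows, where each target is the input shifted by one position. This is not the tutorial's code: the function name `make_lm_windows`, the `num_steps` parameter, and the sample sentence are assumptions made for illustration, and the Keras model itself is left out.

```python
def make_lm_windows(token_ids, num_steps):
    """Split a token id sequence into (input, target) windows.

    Each target is its input shifted one position to the right, so at every
    time step the model is asked to predict the next token.
    """
    windows = []
    # Stop early enough that every window has a full shifted target.
    for start in range(0, len(token_ids) - num_steps, num_steps):
        inputs = token_ids[start:start + num_steps]
        targets = token_ids[start + 1:start + num_steps + 1]
        windows.append((inputs, targets))
    return windows


# Made-up sentence standing in for a real corpus such as PTB.
words = "the cat sat on the mat and the dog sat too".split()
word_to_id = {w: i for i, w in enumerate(sorted(set(words)))}
ids = [word_to_id[w] for w in words]

for inputs, targets in make_lm_windows(ids, num_steps=3):
    print(inputs, "->", targets)
```

In a real setup these windows would be batched and fed to an embedding layer followed by the LSTM, with the targets used to compute the loss at each time step.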

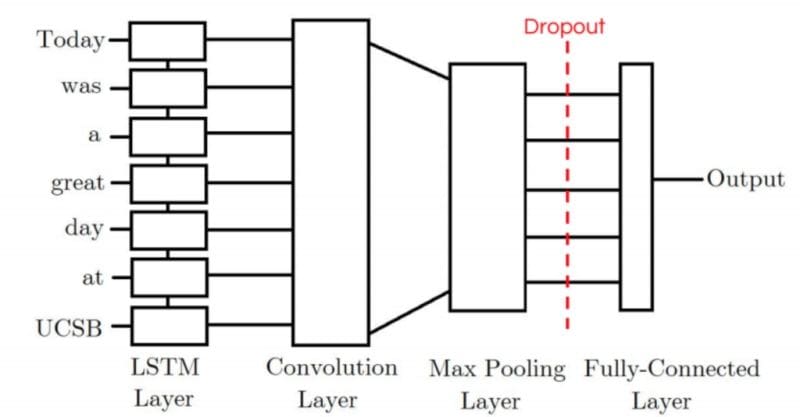

5. Twitter Sentiment Analysis Using Combined LSTM-CNN Models

I purposefully sought a new sentiment analysis resource to include herein, and the reason is this: probably more than anything else, people ask me for suggestions on quality sentiment analysis resources.

This shorter tutorial post -- which is an overview of a paper, which has code here -- uses a combined LSTM/CNN approach to analyzing sentiment. The project swaps the order of the two architectures (CNN-LSTM versus LSTM-CNN) and reports how performance varies.

Our CNN-LSTM model achieved an accuracy of 3% higher than the CNN model, but 3.2% worse than the LSTM model. Meanwhile, our LSTM-CNN model performed 8.5% better than a CNN model and 2.7% better than an LSTM model.

I can't independently endorse the project's results; however, the innovative approach to sentiment (and the fact that it was a sentiment analysis-based resource) paired with mixing in some different neural network architectures is what led me to include it in this list, despite its shorter length.
