AI Papers to Read in 2020

Reading suggestions to keep you up-to-date with the latest and classic breakthroughs in AI and Data Science.

By Ygor Rebouças Serpa, developing explainable AI tools for the healthcare industry

Artificial Intelligence is one of the fastest-growing fields in science, and its skill set, commonly labeled Data Science, has been among the most sought-after of the past few years. The area has far-reaching applications and is usually divided by input type (text, audio, image, video, or graph) or by problem formulation (supervised, unsupervised, and reinforcement learning). Keeping up with everything is a massive endeavor that usually ends in frustration. In this spirit, I present some reading suggestions to keep you updated on the latest and classic breakthroughs in AI and Data Science.

Although most papers I listed deal with image and text, many of their concepts are fairly input agnostic and provide insight far beyond vision and language tasks. Alongside each suggestion, I listed some of the reasons I believe you should read (or re-read) the paper and added some further readings, in case you want to dive a bit deeper into a given subject.

Before we begin, I would like to apologize to the Audio and Reinforcement Learning communities for not adding these subjects to the list, as I have only limited experience with both.

Here we go.

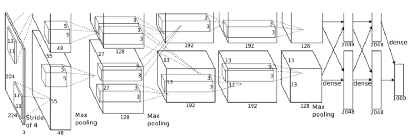

#1 AlexNet (2012)

Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “Imagenet classification with deep convolutional neural networks.” Advances in neural information processing systems. 2012.

In 2012, the authors proposed the use of GPUs to train a large Convolutional Neural Network (CNN) for the ImageNet challenge. This was a bold move, as CNNs were considered too heavy to be trained on such a large-scale problem. To everyone’s surprise, they won first place with a ~15% Top-5 error rate, against ~26% for the runner-up, which used state-of-the-art image processing techniques.

Reason #1: While most of us know AlexNet’s historical importance, not everyone knows which of the techniques we use today were already present before the boom. You might be surprised by how familiar many of the concepts introduced in the paper are, such as dropout and ReLU.

Reason #2: The proposed network had 60 million parameters, complete insanity by 2012 standards. Nowadays, we get to see models with over a billion parameters. Reading the AlexNet paper gives us a great deal of insight into how things have developed since then.

Further Reading: Following the history of ImageNet champions, you can read the ZF Net, VGG, Inception-v1, and ResNet papers. This last one achieved super-human performance, solving the challenge. After it, other competitions took over the researchers’ attention. Nowadays, ImageNet is mainly used for Transfer Learning and to validate low-parameter models, such as:

#2 MobileNet (2017)

Howard, Andrew G., et al. “Mobilenets: Efficient convolutional neural networks for mobile vision applications.” arXiv preprint arXiv:1704.04861 (2017).

MobileNet is one of the most famous “low-parameter” networks. Such models are ideal for low-resource devices and for speeding up real-time applications, such as object recognition on mobile phones. The core idea behind MobileNet and other low-parameter models is to decompose expensive operations into a set of smaller (and faster) operations. Such compound operations are often several times cheaper to compute, approaching an order of magnitude for 3x3 convolutions, and use substantially fewer parameters.
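To make the decomposition concrete, here is a minimal sketch that counts the weights of a standard convolution against a depthwise convolution followed by a 1x1 pointwise convolution. The layer sizes are illustrative assumptions, not values taken from the paper's tables, and biases are ignored.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard k x k convolution (bias ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Illustrative layer sizes (assumed for this example).
k, c_in, c_out = 3, 256, 256
standard = standard_conv_params(k, c_in, c_out)
separable = depthwise_separable_params(k, c_in, c_out)
print(standard, separable, round(standard / separable, 1))
```

For this assumed layer, the separable version needs roughly one ninth of the weights, which is where most of the savings come from.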

Reason #1: Most of us have nowhere near the resources the big tech companies have. Understanding low-parameter networks is crucial to making your own models less expensive to train and use. In my experience, using depth-wise convolutions can save you hundreds of dollars in cloud inference with almost no loss in accuracy.

Reason #2: Common knowledge is that bigger models are stronger models. Papers such as MobileNet show that there is a lot more to it than adding more filters. Elegance matters.

Further Reading: So far, MobileNet v2 and v3 have been released, providing new enhancements to accuracy and size. In parallel, other authors have devised many techniques to further reduce model size, such as SqueezeNet, and to downsize regular models with minimal accuracy loss. This paper gives a comprehensive summary of size versus accuracy for several models.

#3 Attention is All You Need (2017)

Vaswani, Ashish, et al. “Attention is all you need.” Advances in neural information processing systems. 2017.

The paper that introduced the Transformer model. Prior to this paper, language models relied extensively on Recurrent Neural Networks (RNNs) to perform sequence-to-sequence tasks. However, RNNs are awfully slow, as their step-by-step processing is hard to parallelize across multiple GPUs. In contrast, the Transformer model is based solely on Attention layers, which are neither recurrent nor convolutional: they score the relevance of every sequence element to every other element and use those scores to mix information across the whole sequence. The proposed formulation achieved state-of-the-art results and trains markedly faster than previous RNN models.
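As a rough illustration of what an Attention layer computes, the sketch below implements scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, in plain Python. It is a simplification: the learned projection matrices and the multiple heads of the real Transformer are left out, and the token vectors are made-up numbers.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of this element to every element of the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy sequence of three 2-d token vectors; self-attention uses the same
# vectors as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, attention_weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each output row depends on all input rows at once, and the rows can be computed independently of one another, which is what makes the operation easy to parallelize.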

Reason #1: Nowadays, most of the novel architectures in the Natural-Language Processing (NLP) literature descend from the Transformer. Models such as GPT-2 and BERT are at the forefront of innovation. Understanding the Transformer is key to understanding most later models in NLP.

Reason #2: The largest transformer models are on the order of billions of parameters. While the literature on MobileNets addresses more efficient models, the research on NLP addresses more efficient training. In combination, both views provide a strong set of techniques for efficient training and inference.

Reason #3: While the transformer model has mostly been restricted to NLP, the proposed Attention mechanism has far-reaching applications. Models such as Self-Attention GAN demonstrate the usefulness of global-level reasoning in a variety of tasks. New papers on Attention applications pop up every month.

Further Reading: I highly recommend reading the BERT and SAGAN papers. The former is a continuation of the Transformer model, and the latter is an application of the Attention mechanism to images in a GAN setup.

#4 Stop Thinking with Your Head / Reformer (~2020)

Merity, Stephen. “Single Headed Attention RNN: Stop Thinking With Your Head.” arXiv preprint arXiv:1911.11423 (2019).

Kitaev, Nikita, Łukasz Kaiser, and Anselm Levskaya. “Reformer: The Efficient Transformer.” arXiv preprint arXiv:2001.04451 (2020).

Transformer / Attention models have attracted a lot of attention. However, these tend to be resource-heavy models, not meant for ordinary consumer hardware. Both mentioned papers criticize the architecture, providing computationally efficient alternatives to the Attention module. As in the MobileNet discussion, elegance matters.

Reason #1: “Stop Thinking With Your Head” is a damn funny paper to read. This counts as a reason on its own.

Reason #2: Big companies can quickly scale their research to a hundred GPUs. We, normal folks, can’t. Scaling the size of models is not the only avenue for improvement. I can’t overstate that. Reading about efficiency is the best way to ensure you are efficiently using your current resources.

Further Reading: Since these papers are from late 2019 and 2020, there isn’t much to link yet. Consider reading the MobileNet paper (if you haven’t already) for other takes on efficiency.

#5 Simple Baselines for Human Pose Estimation (2018)

Xiao, Bin, Haiping Wu, and Yichen Wei. “Simple baselines for human pose estimation and tracking.” Proceedings of the European conference on computer vision (ECCV). 2018.

So far, most papers have proposed new techniques to improve the state-of-the-art. This paper, on the contrary, argues that a simple model using current best practices can be surprisingly effective. In sum, the authors proposed a human pose estimation network consisting solely of a backbone network followed by three de-convolution operations. At the time, their approach was the most effective at handling the COCO benchmark, despite its simplicity.
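To see how little machinery this involves, the sketch below traces only the spatial size of the feature map through such a network. The numbers are assumptions for illustration: a 256x192 input, a backbone with an overall stride of 32 (as in a typical ResNet), and de-convolutions with kernel 4, stride 2, and padding 1, each of which doubles the resolution.

```python
def transposed_conv_out(size, kernel=4, stride=2, padding=1):
    """Output length of a transposed convolution along one dimension (no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


def heatmap_size(height, width, backbone_stride=32, num_deconvs=3):
    """Spatial size after the backbone and the stacked de-convolutions."""
    h, w = height // backbone_stride, width // backbone_stride
    for _ in range(num_deconvs):
        h, w = transposed_conv_out(h), transposed_conv_out(w)
    return h, w


print(heatmap_size(256, 192))  # (64, 48)
```

Under these assumptions, the three de-convolutions recover a heatmap at one quarter of the input resolution, from which the keypoint locations can be read.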

Reason #1: Being simple is sometimes the most effective approach. While we all want to try the shiny and complicated novel architectures, a baseline model might be much faster to code and still achieve similar results. This paper reminds us that not all good models need to be complicated.

Reason #2: Science moves in baby steps. Each new paper pushes the state-of-the-art a bit further. Yet, it does not need to be a one-way road. Sometimes it is worthwhile to backtrack a bit and take a different turn. “Stop Thinking with Your Head,” and “Reformer” are two other good examples of this.

Reason #3: Proper data augmentation, training schedules, and a good problem formulation matter more than most people would acknowledge.

Further Reading: If interested in the Pose Estimation topic, you might consider reading this comprehensive state-of-the-art review.

#6 Bag of Tricks for Image Classification (2019)

He, Tong, et al. “Bag of tricks for image classification with convolutional neural networks.” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

Many times, what you need is not a fancy new model, just a couple of new tricks. In most papers, one or two new tricks are introduced to achieve a one or two percentage points improvement. However, these are often forgotten amid the major contributions. This paper collects a set of tips used throughout the literature and summarizes them for our reading pleasure.
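As an illustration of the kind of trick collected there, the sketch below shows two of the refinements the paper covers: a learning rate with linear warmup followed by cosine decay, and label smoothing. The step counts, base learning rate, and epsilon are arbitrary values chosen for the example.

```python
import math


def learning_rate(step, total_steps, base_lr=0.1, warmup_steps=5):
    """Linear warmup to base_lr, then cosine decay towards zero."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


def smooth_labels(true_class, num_classes, epsilon=0.1):
    """Label smoothing: move epsilon of the probability mass off the true class."""
    off_value = epsilon / (num_classes - 1)
    return [1.0 - epsilon if i == true_class else off_value for i in range(num_classes)]


schedule = [learning_rate(s, total_steps=20) for s in range(20)]
targets = smooth_labels(true_class=2, num_classes=5)
```

Neither change touches the model architecture, which is why tricks like these are cheap to try on an existing training script.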

Reason #1: Most tips are easily applicable.

Reason #2: Odds are high that you are unaware of most of these approaches. These are not the typical “use ELU” kind of suggestions.

Further Reading: Many other tricks exist; some are problem-specific, some are not. A topic I believe deserves more attention is class and sample weights. Consider reading this paper on class weights for unbalanced datasets.
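For readers new to class weights, here is a minimal sketch of one common heuristic: weighting each class by the inverse of its frequency. This is a generic recipe and not necessarily the method of the paper mentioned above; the labels are made up for the example.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples, n_classes = len(labels), len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


# Hypothetical unbalanced dataset: 90 samples of one class, 10 of the other.
labels = ["healthy"] * 90 + ["sick"] * 10
weights = balanced_class_weights(labels)
print(weights)
```

The rare class ends up with a weight nine times larger than the common one, so its errors count proportionally more in a weighted loss.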

#7 The SELU Activation (2017)

Klambauer, Günter, et al. “Self-normalizing neural networks.” Advances in neural information processing systems. 2017.

Most of us use Batch Normalization layers and the ReLU or ELU activation functions. In the SELU paper, the authors propose a unifying approach: an activation that self-normalizes its outputs. For the networks studied in the paper, this makes batch normalization layers unnecessary. As a result, models using SELU activations are simpler and need fewer operations.
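The activation itself is short enough to write down. The sketch below defines SELU with the two fixed constants from the paper (rounded here) and runs a small empirical check that standard-normal inputs keep a mean near 0 and a variance near 1 after the activation. The sample size and seed are arbitrary choices.

```python
import math
import random

# Constants from the SELU paper (rounded); they are fixed, not learned.
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805


def selu(x):
    """scale * x for x > 0, scale * alpha * (exp(x) - 1) otherwise."""
    return SCALE * x if x > 0 else SCALE * ALPHA * (math.exp(x) - 1.0)


# Empirical check: standard-normal inputs should stay close to mean 0, variance 1.
rng = random.Random(0)
outputs = [selu(rng.gauss(0.0, 1.0)) for _ in range(100_000)]
mean = sum(outputs) / len(outputs)
variance = sum((o - mean) ** 2 for o in outputs) / len(outputs)
print(round(mean, 2), round(variance, 2))
```

This only checks a single activation on ideal inputs; the paper's argument covers whole layers and also relies on a matching weight initialization.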

Reason #1: In the paper, the authors mostly deal with standard machine learning problems (tabular data). Most data scientists deal primarily with images. Reading a paper on purely dense networks is refreshing.

Reason #2: If you have to deal with tabular data, this is one of the most up-to-date approaches to the topic within the Neural Networks literature.

Reason #3: The paper is math-heavy and relies on a computer-assisted proof. This, in itself, is a rare but beautiful thing to see.

Further Reading: If you want to dive into the history and usage of the most popular activation functions, I wrote a guide on activation functions here on Medium. Check it out :)

#8 Bag-of-local-Features (2019)

Brendel, Wieland, and Matthias Bethge. “Approximating cnns with bag-of-local-features models works surprisingly well on imagenet.” arXiv preprint arXiv:1904.00760 (2019).

If you break an image into jigsaw-like pieces, scramble them, and show them to a kid, they won’t be able to recognize the original object; a CNN might. In this paper, the authors found that classifying all 33x33 patches of an image and then averaging their class predictions achieves near state-of-the-art results on ImageNet. Moreover, they further explore this idea with VGG and ResNet-50 models, showing evidence that CNNs rely extensively on local information, with minimal global reasoning.

Reason #1: While many believe that CNNs “see,” this paper shows evidence that they might be way dumber than we would dare to bet money on.

Reason #2: Only once in a while do we get to see a paper with a fresh new take on the limitations of CNNs and their interpretability.
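The patch-and-average idea can be sketched in a few lines. In the toy version below, the image is a 4x4 grid, the patches are 2x2 instead of 33x33, and `toy_patch_scores` is a hypothetical stand-in for the CNN that classifies each patch; only the aggregation step mirrors the approach described above.

```python
def extract_patches(image, patch_size, stride):
    """Yield every patch_size x patch_size window of a 2-d list of pixels."""
    height, width = len(image), len(image[0])
    for top in range(0, height - patch_size + 1, stride):
        for left in range(0, width - patch_size + 1, stride):
            yield [row[left:left + patch_size] for row in image[top:top + patch_size]]


def toy_patch_scores(patch):
    """Hypothetical stand-in for the patch CNN: scores for ('dark', 'bright')."""
    pixels = [p for row in patch for p in row]
    brightness = sum(pixels) / len(pixels)
    return [1.0 - brightness, brightness]


def bag_of_patches_predict(image, patch_size=2, stride=1):
    """Average per-patch class scores; patch positions are never used."""
    scores = [toy_patch_scores(p) for p in extract_patches(image, patch_size, stride)]
    num_classes = len(scores[0])
    averaged = [sum(s[c] for s in scores) / len(scores) for c in range(num_classes)]
    return averaged.index(max(averaged)), averaged


image = [
    [0.9, 0.8, 0.1, 0.0],
    [0.7, 0.9, 0.2, 0.1],
    [0.8, 0.9, 0.9, 0.7],
    [0.6, 0.8, 0.9, 0.8],
]
predicted_class, class_scores = bag_of_patches_predict(image)
```

Because the final score is a plain average, the model has no way of knowing where each patch came from, which is exactly the lack of global reasoning the paper highlights.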

Further Reading: Related in its findings, the adversarial attacks literature also shows other striking limitations of CNNs. Consider reading the following article (and its reference section):

Breaking neural networks with adversarial attacks

Are the machine learning models we use intrinsically flawed?

#9 The Lottery Ticket Hypothesis (2019)

Frankle, Jonathan, and Michael Carbin. “The lottery ticket hypothesis: Finding sparse, trainable neural networks.” arXiv preprint arXiv:1803.03635 (2018).

Continuing with the theoretical papers, Frankle and Carbin found that if you train a big network, prune all low-magnitude weights, roll the surviving weights back to their initial values, and train again, you get a much smaller network that performs as well as, and sometimes better than, the original. The lottery analogy is seeing each weight as a “lottery ticket.” With a billion tickets, winning the prize is almost certain. However, most of the tickets won’t win, only a couple will. If you could go back in time and buy only the winning tickets, you would maximize your profits. “A billion tickets” is a big initial network. “Training” is running the lottery and seeing which weights are high-valued. “Going back in time” is rolling back to the initial untrained network and rerunning the lottery. In the end, you get a far sparser network that performs at least as well.
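The procedure above can be sketched on a toy weight vector. Everything here is a simplification under stated assumptions: `toy_train` is a hypothetical stand-in for real training, the "network" is a flat list of ten weights, and pruning happens in a single round with an arbitrary 20% keep fraction.

```python
import random


def magnitude_mask(trained_weights, keep_fraction):
    """Keep the largest-magnitude weights; mark the rest as pruned (0)."""
    keep = max(1, round(len(trained_weights) * keep_fraction))
    order = range(len(trained_weights))
    ranked = sorted(order, key=lambda i: abs(trained_weights[i]), reverse=True)
    kept = set(ranked[:keep])
    return [1 if i in kept else 0 for i in order]


def toy_train(weights, mask):
    """Hypothetical stand-in for training: nudges kept weights, holds pruned ones at 0."""
    return [m * (w * 1.5 + 0.1) for w, m in zip(weights, mask)]


rng = random.Random(0)
initial_weights = [rng.uniform(-1.0, 1.0) for _ in range(10)]

# 1. Train the full network.
dense_mask = [1] * len(initial_weights)
trained = toy_train(initial_weights, dense_mask)
# 2. Prune the low-magnitude weights.
mask = magnitude_mask(trained, keep_fraction=0.2)
# 3. Roll the surviving weights back to their ORIGINAL initial values.
winning_ticket = [w * m for w, m in zip(initial_weights, mask)]
# 4. Retrain only the sparse sub-network.
retrained = toy_train(winning_ticket, mask)
```

The key detail is step 3: the surviving weights restart from the same initial values they had in the dense network, rather than from a fresh random draw.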

Reason #1: The idea is insanely cool.

Reason #2: As with the Bag-of-Features paper, this sheds some light on how limited our current understanding of CNNs is. After reading this paper, I realized how underutilized our millions of parameters are. An open question is how much. The authors managed to reduce networks to a tenth of their original sizes. How much more might be possible in the future?

Reason #3: These ideas also give us more perspective on how inefficient behemoth networks are. Consider the Reformer paper, mentioned before. It drastically reduced the size of the Transformer by improving the algorithm. How much more could be reduced by using the lottery technique?

Further Reading: Weight initialization is an often overlooked topic. In my experience, most people stick to the defaults, which might not always be the best option. “All You Need is a Good Init” is a seminal paper on the topic. As for the lottery hypothesis, the following is an easy-to-read review:

Breaking down the Lottery Ticket Hypothesis

Distilling the ideas from MIT CSAIL’s intriguing paper: “The Lottery Ticket Hypothesis: Finding Sparse, Trainable...

#10 Pix2Pix and CycleGAN (2017)

Isola, Phillip, et al. “Image-to-image translation with conditional adversarial networks.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

Zhu, Jun-Yan, et al. “Unpaired image-to-image translation using cycle-consistent adversarial networks.” Proceedings of the IEEE international conference on computer vision. 2017.

This list would not be complete without some GAN papers.

Pix2Pix and CycleGAN are the two seminal works on conditional generative models. Both convert images from a domain A to a domain B; they differ in that Pix2Pix learns from paired datasets and CycleGAN from unpaired ones. The former performs tasks such as converting line drawings to fully rendered images, and the latter excels at replacing entities, such as turning horses into zebras or apples into oranges. By being “conditional,” these models give users some degree of control over what is generated by tweaking the inputs.

Reason #1: GAN papers are usually focused on the sheer quality of the generated results and place no emphasis on artistic control. Conditional models, such as these, provide an avenue for GANs to actually become useful in practice, for instance as a virtual assistant to artists.

Reason #2: Adversarial approaches are the best examples of multi-network models. While generation might not be your thing, reading about multi-network setups might be inspiring for a number of problems.

Reason #3: The CycleGAN paper, in particular, demonstrates how an effective loss function can work wonders at solving some difficult problems. A similar idea is given by the Focal loss paper, which considerably improves object detectors by just replacing their traditional losses with a better one.
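The loss in question is the cycle-consistency term: translating an image to the other domain and back should return the original. Below is a minimal sketch of that term alone, with images as flat lists of pixel values and hypothetical toy functions in place of the two generator networks. The adversarial losses that CycleGAN also uses are left out.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(x, y, g_ab, g_ba):
    """L1 error after a round trip A -> B -> A plus B -> A -> B."""
    forward_cycle = l1_distance(g_ba(g_ab(x)), x)
    backward_cycle = l1_distance(g_ab(g_ba(y)), y)
    return forward_cycle + backward_cycle


# Hypothetical toy "generators" acting on flat pixel lists.
def to_domain_b(image):
    return [p + 0.5 for p in image]


def to_domain_a_good(image):
    return [p - 0.5 for p in image]  # exact inverse of to_domain_b


def to_domain_a_bad(image):
    return [p - 0.2 for p in image]  # does not undo to_domain_b


horse, zebra = [0.1, 0.4, 0.3], [0.7, 0.9, 0.6]
good_loss = cycle_consistency_loss(horse, zebra, to_domain_b, to_domain_a_good)
bad_loss = cycle_consistency_loss(horse, zebra, to_domain_b, to_domain_a_bad)
```

The pair of generators that invert each other gets a loss near zero, while the mismatched pair is penalized, and this signal is what lets the model train without paired examples.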

Further Reading: While AI is growing fast, GANs are growing faster. I highly recommend coding a GAN if you never have. Here are the official TensorFlow 2 docs on the matter. One application of GANs that is not so well known (and that you should check out) is semi-supervised learning.

With these twelve papers and their further readings, I believe you already have plenty of reading material to look at. This surely isn’t an exhaustive list of great papers. However, I tried my best to select the most insightful and seminal works I have seen and read. Please let me know if there are any other papers you believe should be on this list.

Good reading :)

Edit: After writing this list, I compiled a second one with ten more AI papers to read in 2020 and a third on GANs. If you enjoyed reading this list, you might enjoy its continuations:

Ten More AI Papers to Read in 2020

Additional reading suggestions to keep you up-to-date with the latest and classic breakthroughs in AI and Data Science

GAN Papers to Read in 2020

Reading suggestions on Generative Adversarial Networks.

Bio: Ygor Rebouças Serpa (@ReboucasYgor) holds a Master’s in Computer Science from Universidade de Fortaleza and currently works in R&D, developing explainable AI tools for the healthcare industry. His interests range from music and game development to theoretical computer science and AI. See his Medium profile for more writing.

Original. Reposted with permission.
