Covid or just a Cough? AI for detecting COVID-19 from Cough Sounds

Increased capabilities in screening and early testing for a disease can significantly support quelling its spread and impact. Recent progress in developing deep learning AI models to classify cough sounds as a prescreening tool for COVID-19 has demonstrated promising early success. Cough-based diagnosis is non-invasive, cost-effective, scalable, and, if approved, could be a potential game-changer in our fight against COVID-19.

By Gowtham Ramesh, researcher at Bosch Centre for Data Science and AI at IIT Madras and Dr. Sundeep Teki, a leader in AI and Neuroscience.

Can you spot the Covid-19 cough? Click here to watch the video.

Coughing and sneezing were believed to be symptoms of the bubonic plague pandemic that ravaged Rome in the late sixth century. The origin of the benevolent phrase, “God bless you,” after a person coughs or sneezes is often attributed to Pope Gregory I, who hoped that this prayer would offer protection from certain death. The flu-like symptoms associated with the plague co-occur during the current Covid-19 pandemic as well, to the extent that “normal” coughs draw immediate alarm and concern. However, in the present technologically advanced times, we need not resort only to prayers. We can now build sophisticated AI models that learn complex acoustic features to distinguish between cough sounds from Covid-19 positive and otherwise healthy patients.

Since the start of the Covid-19 pandemic, multiple AI research teams have been working towards leveraging AI to improve screening, contact tracing, and diagnosis. Most of the preliminary work involved CT or X-ray scans [1,2,3,4] to diagnose Covid-19 faster and, in some cases, with better accuracy than the RT-PCR test. Recently, AI researchers have started testing cough sounds as a preliminary diagnosis or prescreening technique for Covid-19 detection in asymptomatic individuals. This is beneficial because, while someone may not have noticeable symptoms, the virus may still cause subtle changes in their body that can be detected by algorithms combining audio signal processing and machine learning. Cough-based audio diagnosis is non-invasive, cost-effective, scalable, and, if approved, could be a potential game-changer in our fight against Covid-19. This technology might also prove more effective than the standard strategy of prescreening for Covid-19 on the basis of temperature, especially for asymptomatic patients.

The intuition behind using cough sounds

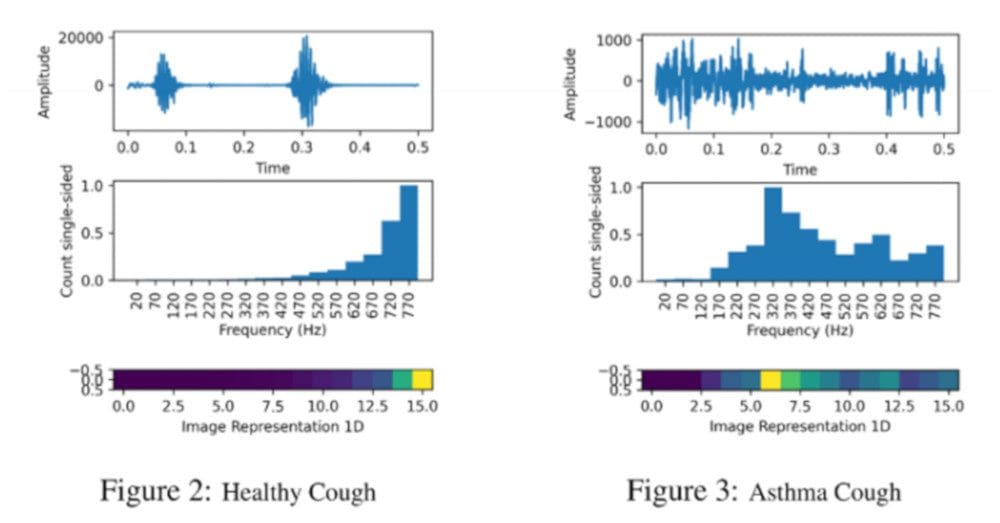

Figures 1a and 1b: Four types of cough sounds with the original amplitude, Fast Fourier transform output, and 1D spectrogram (source).

Cough, along with fever and fatigue, is one of the key symptoms of Covid-19 [5]. Studies have shown that cough from different respiratory ailments has unique characteristics due to the different nature and location of the underlying irritants [6]. Though a human ear cannot differentiate these features, AI models can be trained to learn these features and discriminate between a cough from a Covid-19 positive and negative patient.
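To make the idea of acoustic features concrete, here is a minimal sketch of how a recording can be turned into the kind of time-frequency representation shown in Figure 1. It is not the pipeline of any project discussed here: the signal is a synthetic decaying tone standing in for a real cough, the frame length, hop size, and sample rate are arbitrary assumptions, and the function names are hypothetical. It uses only the Python standard library, so the transform is a slow, naive DFT rather than an optimized FFT.

```python
import cmath
import math


def hann(n):
    """Hann window of length n, used to taper each frame."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def dft_magnitudes(frame):
    """Magnitudes of the non-negative-frequency DFT bins of one frame."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc))
    return mags


def spectrogram(signal, frame_len=64, hop=32):
    """Slide a windowed frame over the signal; one magnitude spectrum per frame."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        frames.append(dft_magnitudes(frame))
    return frames


# Synthetic stand-in for a recording (an assumption, not real cough data):
# a decaying 1 kHz burst sampled at 8 kHz.
SAMPLE_RATE = 8000
TONE_HZ = 1000
FRAME_LEN = 64
signal = [
    math.exp(-t / 200) * math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE)
    for t in range(512)
]

spec = spectrogram(signal, frame_len=FRAME_LEN, hop=32)

# The strongest bin of the first frame maps back to the tone frequency.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_LEN
print(len(spec), "frames x", len(spec[0]), "frequency bins; peak near", peak_hz, "Hz")
```

A model would typically consume a matrix like `spec` (or a perceptually scaled variant of it) rather than the raw waveform, which is one way differences between cough types can become learnable patterns.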

One of the significant challenges is the availability of the right quantity and quality of data to build an AI model that can make robust predictions about the underlying medical ailment based on cough sounds. Cough is, unfortunately, a common symptom of many respiratory and non-respiratory diseases (see Figure 2). Hence, an AI model must also learn to distinguish coughs related to Covid-19 from coughs caused by other respiratory ailments. The predictions of such AI models could be taken at face value or be further substantiated by other clinical tests, for instance, an RT-PCR screening test.

Figure 2: Non-Covid-19 infections that can cause cough (source).

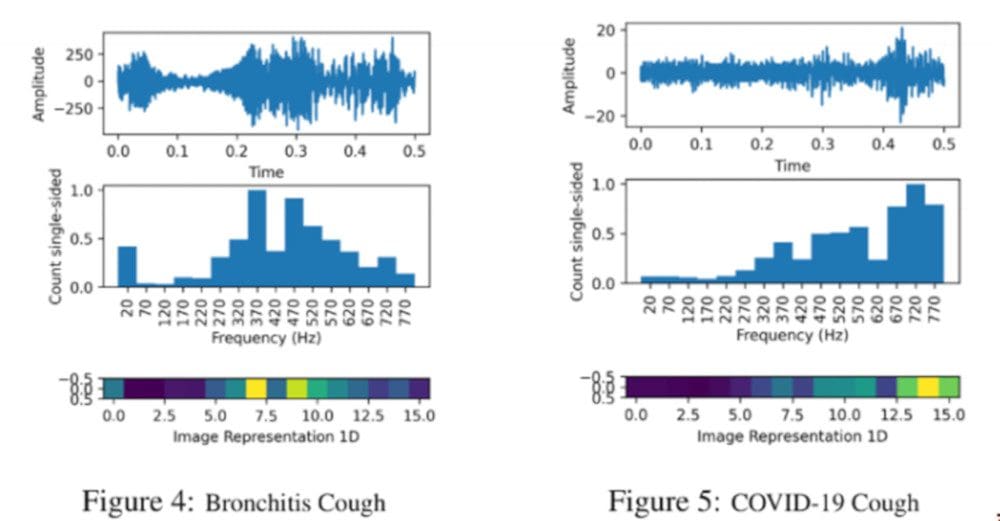

Figure 3: Overview of AI-based prescreening app by ‘Cough against Covid-19’ project (source).

Since spring 2020, AI researchers have collected cough sound data from the general public via mobile apps and websites and developed AI solutions for cough-based prescreening tools. These efforts include AI4Covid-19 [6] from the University of Oklahoma, Covid-19 sounds [7] from the University of Cambridge, Coswara [8] from IISc Bangalore, Cough against Covid-19 [9] from Wadhwani AI, Covid-19 Voice detector [10] from CMU, COUGHVID [11] from EPFL, Opensigma [12] from MIT, Saama AI research [13], and UK startup Novoic, amongst others.

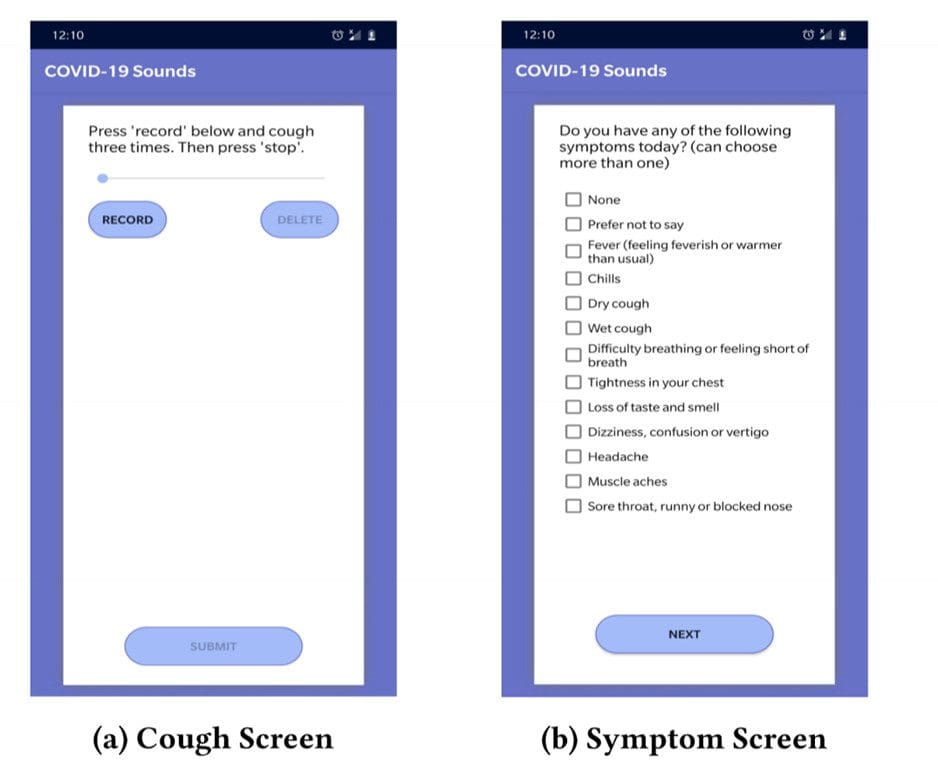

Figure 4: Interface of Covid-19 sounds app from a University of Cambridge team (source).

While the cough data in the AI4Covid-19, Cough against Covid-19, and Saama AI research projects were collected in controlled settings or from hospitals under clinical supervision, Coswara, Covid-19 sounds, COUGHVID, and MIT’s project use crowdsourced, uncontrolled data collected through their websites or apps. The website or app records forced coughs (Coswara also collects additional audio: breathing sounds, vowel pronunciations, and counting from one to twenty) and gathers useful metadata such as age, gender, and ethnicity, along with health information such as details of a recent Covid-19 test, current symptoms, and pre-existing conditions like diabetes, asthma, and heart disease, amongst others.

The AI4Covid-19, Covid-19 sounds, and Saama AI research projects also train models to differentiate Covid-19 cough sounds from non-Covid-19 coughs such as those caused by pertussis, asthma, and bronchitis. MIT researchers used features from their previous work to detect Alzheimer’s from cough sounds [14] and fine-tuned their AI model to distinguish Covid-19 coughs from healthy coughs. The connection between Covid-19 and the brain, suggested by recently reported symptoms of neurological impairment in Covid-19 patients, led the authors to test the same biomarkers (vocal cord strength, sentiment, lung performance, and muscular degradation) for detecting Covid-19 coughs. “Our research uncovers a striking similarity between Alzheimer’s and Covid-19 discrimination. The exact same biomarkers can be used as a discrimination tool for both, suggesting that perhaps, in addition to temperature, pressure, or pulse, there are some higher-level biomarkers that can sufficiently diagnose conditions across specialties once thought mostly disconnected.” [12]

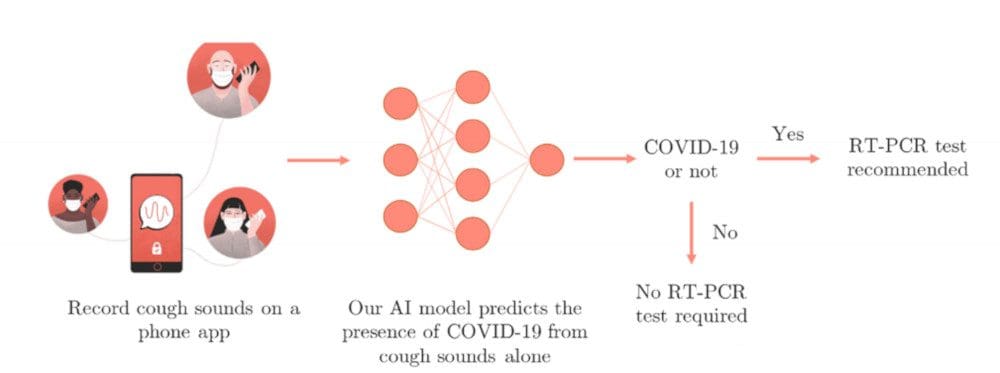

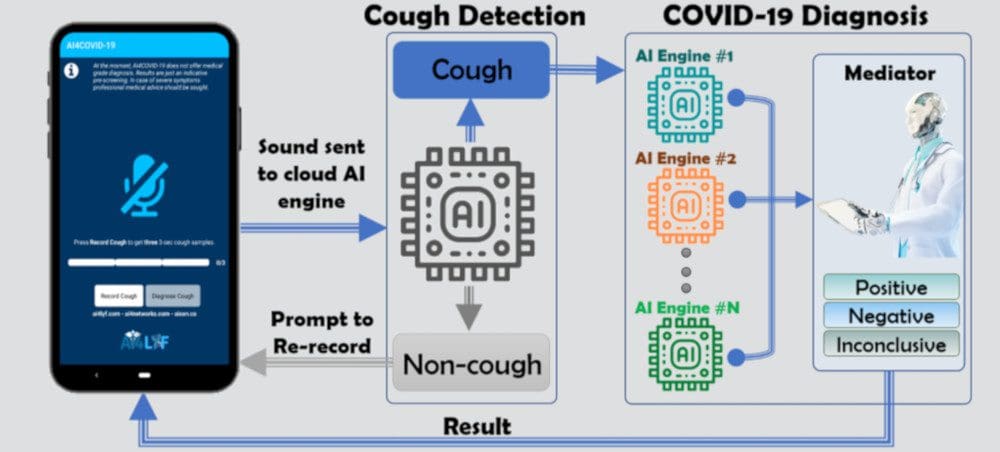

Figure 5: Overview architecture of AI4Covid-19 COVID-19 discriminator with cough recordings as input (source).

Once an AI model is trained, it can be incorporated into a user-friendly app where users can log in and submit their cough sounds via their phones to get instant results. The model prediction can be used to ascertain whether a user might be infected, who can then follow up with a formal test like RT-PCR to confirm. Figure 5 shows an overview of the architecture developed by the AI4Covid-19 team. It includes a cough detection model that checks the quality of the cough sound and prompts the user to re-record in case of a noisy recording or a non-cough sound. The detected cough is then sent to the Covid-19 diagnosis model(s) to discriminate between a cough from a Covid-19 positive and negative patient.
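The two-stage flow described above can be sketched in a few lines. This is only an illustration of the control flow, under loud assumptions: `screen_recording`, `ScreeningResult`, the 0.5 threshold, and both toy models are hypothetical placeholders, not the AI4Covid-19 implementation. In a real app the two callables would be trained models.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class ScreeningResult:
    status: str                          # "re-record" or "screened"
    covid_score: Optional[float] = None  # only set when a cough was detected


def screen_recording(
    audio: Sequence[float],
    is_cough: Callable[[Sequence[float]], float],
    covid_score: Callable[[Sequence[float]], float],
    cough_threshold: float = 0.5,  # assumed cut-off, chosen only for illustration
) -> ScreeningResult:
    """Stage 1 gates on cough quality; stage 2 scores accepted coughs."""
    if is_cough(audio) < cough_threshold:
        # Noisy or non-cough input: ask the user to record again.
        return ScreeningResult(status="re-record")
    return ScreeningResult(status="screened", covid_score=covid_score(audio))


# Placeholder models (assumptions): a real system would use trained classifiers.
def toy_cough_detector(audio: Sequence[float]) -> float:
    """Treats louder clips as more cough-like, via mean signal energy."""
    energy = sum(x * x for x in audio) / len(audio)
    return min(1.0, energy * 4)


def toy_covid_model(audio: Sequence[float]) -> float:
    """Returns a constant score; stands in for the diagnosis model(s)."""
    return 0.5


quiet_clip = [0.01] * 100      # too quiet to pass the cough gate
loud_clip = [0.6, -0.6] * 50   # energetic enough to pass the cough gate

quiet_result = screen_recording(quiet_clip, toy_cough_detector, toy_covid_model)
loud_result = screen_recording(loud_clip, toy_cough_detector, toy_covid_model)
print(quiet_result.status, loud_result.status, loud_result.covid_score)
```

Keeping the gate separate from the diagnosis step means a poor recording never reaches the diagnosis model, so the user is asked to try again instead of receiving a score based on noise.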

The preliminary results of most of the teams look promising and confirm the hypothesis that cough sounds contain unique information and latent features to aid diagnosis and prescreening for Covid-19. The MIT lab has collected around 70,000 audio samples of different coughs with 2,500 coughs from confirmed Covid-19 positive patients. The trained model correctly identified 98.5% of people with Covid-19 and correctly ruled out Covid-19 in 94.2% of people without the disease. For asymptomatic patients, the model correctly identified 100% of people with Covid-19, and correctly ruled out Covid-19 in 83.2% of people without the disease. Cambridge’s Covid-19 sounds project reported an 80% success rate in July 2020.
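The paired percentages reported for the MIT model are what is usually called sensitivity (the share of infected people correctly identified) and specificity (the share of non-infected people correctly ruled out). The sketch below shows how the two are computed from predictions; the labels are made-up toy values for illustration only and are unrelated to any project's data.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # positives caught
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # positives missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # negatives ruled out
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    return tp / (tp + fn), tn / (tn + fp)


# Toy labels (hypothetical): 4 infected and 6 non-infected people.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

For a prescreening tool the two numbers trade off against each other: high sensitivity keeps missed infections low, while lower specificity means more healthy people are sent for a confirmatory test.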

In spite of the similar acoustic modeling pipelines and deep learning approaches, it is difficult to compare these preliminary results across projects, as each AI model is trained on a distinct dataset (owing to the scarcity of publicly available datasets for benchmarking different works). Since cough also covaries with age and gender, it is important to collect diverse data so that any AI solution generalizes across patient populations around the world and can be accepted as a standard non-invasive prescreening tool for Covid-19. The data collection for most of the projects is still ongoing, and readers are encouraged to check out these websites, donate coughs, and help save lives: Covid-19 sounds, Coswara, Cough against Covid-19, Covid-19 Voice detector, COUGHVID, Opensigma, Novoic, and AI4COVID-19.

References:

[1] L. Wang, A. Wong. "COVID-Net: a tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images." (2020) arXiv preprint arXiv:2003.09871

[2] Zhang J, Xie Y, Li Y, Shen C, Xia Y. "Covid-19 screening on chest X-ray images using deep learning based anomaly detection." (2020) arXiv preprint arXiv:2003.12338

[3] Li L, Qin L, Xu Z, Yin Y, Wang X, Kong B, Bai J, Lu Y, Fang Z, Song Q, et al. "Artificial intelligence distinguishes Covid-19 from community acquired pneumonia on chest CT." Radiology (2020): 200905.

[4] Zhao W, Zhong Z, Xie X, Yu Q, Liu J. "Relation between chest CT findings and clinical conditions of coronavirus disease (Covid-19) pneumonia: a multicenter study." American Journal of Roentgenology (2020): 1–6.

[5] WHO. 2020b. Q&A on coronaviruses (COVID19). https://www.who.int/emergencies/diseases/novelcoronavirus-2019/question-and-answers-hub/q-a-detail/qa-coronaviruses. Accessed: 2020-11-17.

[6] Imran, Ali, et al. "AI4COVID-19: AI enabled preliminary diagnosis for COVID-19 from cough samples via an app." (2020) arXiv preprint arXiv:2004.01275

[7] Brown, Chloë, et al. "Exploring Automatic Diagnosis of COVID-19 from Crowdsourced Respiratory Sound Data." (2020) arXiv preprint arXiv:2006.05919

[8] Sharma, Neeraj, et al. "Coswara--A Database of Breathing, Cough, and Voice Sounds for COVID-19 Diagnosis." (2020) arXiv preprint arXiv:2005.10548

[9] Bagad, Piyush, et al. "Cough Against COVID: Evidence of COVID-19 Signature in Cough Sounds." arXiv preprint arXiv:2009.08790 (2020).

[10] Deshmukh, Soham, Mahmoud Al Ismail, and Rita Singh. "Interpreting glottal flow dynamics for detecting COVID-19 from voice." arXiv preprint arXiv:2010.16318 (2020).

[11] Orlandic, Lara, Tomas Teijeiro, and David Atienza. "The COUGHVID crowdsourcing dataset: A corpus for the study of large-scale cough analysis algorithms." arXiv preprint arXiv:2009.11644 (2020).

[12] Laguarta, Jordi, Ferran Hueto, and Brian Subirana. "COVID-19 Artificial Intelligence Diagnosis using only Cough Recordings." IEEE Open Journal of Engineering in Medicine and Biology (2020).

[13] Pal, Ankit, and Malaikannan Sankarasubbu. "Pay Attention to the cough: Early Diagnosis of COVID-19 using Interpretable Symptoms Embeddings with Cough Sound Signal Processing." arXiv preprint arXiv:2010.02417 (2020).

[14] J. Laguarta, F. Hueto, P. Rajasekaran, S. Sarma, and B. Subirana, “Longitudinal speech biomarkers for automated alzheimer’s detection,” Cognitive Neuroscience, Preprint, pp. 1–10, 2020. https://www.researchsquare.com/article/rs-56078/latest.pdf

Bios: Gowtham Ramesh is a Post Baccalaureate fellow and researcher at Robert Bosch Centre for Data Science and Artificial Intelligence (RBCDSAI) at IIT Madras. He was previously a Senior Machine Learning Engineer at Quantiphi, where he worked on low resource speech to text, handwritten OCR, and Conversational Question answering, amongst others.

Dr. Sundeep Teki is the Founder of a new EdTech startup (stealth) focused on AI reskilling to create a talent pipeline at scale that will build AI for India. He is a leader in AI and neuroscience with professional experience in BigTech (Conversational AI at Amazon Alexa AI, Seattle), unicorn startup (Applied AI at Swiggy, Bangalore), and academia (Cognitive Neuroscience at Oxford University and University College London). He has published 40+ papers on Neuroscience and AI (~1800 citations), secured £500k+ in research funding, and is recognised by the Royal Society, UK as an ‘Emerging Leader in Neuroscience.’
