How to troubleshoot memory problems in Python

Memory problems are hard to diagnose and fix in Python. This post walks through a step-by-step process for pinpointing and fixing memory leaks using popular open source Python packages.

By Freddy Boulton, Software Engineer at Alteryx

Finding out that an application is running out of memory is one of the worst realizations a developer can have. Memory problems are hard to diagnose and fix in general, but I’d argue it’s even harder in Python. Python’s automatic garbage collection makes it easy to get up and going with the language, but it’s so good at being out of the way that when it doesn’t work as expected, developers can be at a loss for how to identify and fix the problem.

In this blog post, I will show how we diagnosed and fixed a memory problem in EvalML, the open-source AutoML library developed by Alteryx Innovation Labs. There is no magic recipe for solving memory problems, but my hope is that developers, specifically Python developers, can learn about tools and best practices they can leverage when they run into this kind of problem in the future.

After reading this blog post, you should walk away with the following:

- Why it’s important to find and fix memory problems in your programs,

- What circular references are and why they can cause memory leaks in Python, and

- Knowledge of Python’s memory profiling tools and some steps you can take to identify the cause of memory problems.

Setting the stage

The EvalML team runs a suite of performance tests before releasing a new version of our package to catch any performance regressions. These performance tests involve running our AutoML algorithm on a variety of datasets, measuring the scores our algorithm achieves as well as the runtime, and comparing those metrics to our previously released version.

One day I was running the tests, and suddenly the application crashed. What happened?

Step 0: What is memory, and what is a leak?

One of the most important functions of any programming language is its ability to store information in the computer's memory. Each time your program creates a new variable, it allocates some memory to store that variable's contents.

The kernel defines an interface for programs to access the computer’s CPUs, memory, disk storage and more. Every programming language provides ways to ask the kernel to allocate and deallocate chunks of memory for use by a running program.

Memory leaks occur when a program asks the kernel to set aside a chunk of memory but then, because of a bug, never tells the kernel when it is finished using that memory. In that case, the kernel will continue to think the forgotten chunks of memory are still in use by the running program, and other programs won't be able to use them.

If the same leak occurs repeatedly while running a program, the total size of forgotten memory can grow so large that it consumes a large portion of the computer’s memory! In that situation, if a program then tries to ask for more memory, the kernel will raise an “out of memory” error and the program will stop running, or in other words, “crash.”

So, it is important to find and fix memory leaks in programs you write, because if you don’t, your program could eventually run out of memory and crash, or it could cause other programs to crash.

Step 1: Establish that it is a memory problem

An application can crash for a number of reasons — maybe the server running the code crashed, maybe there’s a logical error in the code itself — so it’s important to establish that the problem at hand is a memory problem.

The EvalML performance tests crashed in an eerily quiet way. All of a sudden, the server stopped logging progress, and the job quietly finished. The server log would display any stack traces caused by coding errors, so I had a hunch this silent crash was caused by the job using all of the available memory.

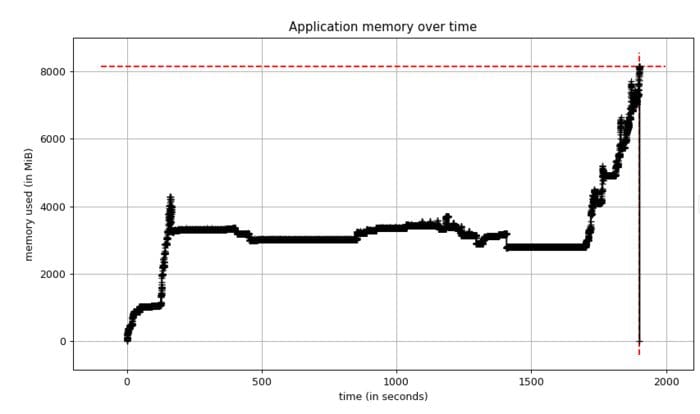

I reran the performance tests, this time with Python's memory-profiler enabled to get a plot of memory usage over time. The tests crashed again, and when I looked at the memory plot, I saw this:

Memory profile of the performance tests

Our memory usage stays stable for a while, but then it spikes to 8 gigabytes! I know that our application server has 8 gigabytes of RAM, so this profile confirms we're running out of memory. Moreover, while the memory is stable, we're using about 4 GB, whereas our previous version of EvalML used about 2 GB. So, for some reason, this current version is using about twice as much memory as normal.

Now I needed to find out why.
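memory-profiler samples the memory usage of the whole process, and its `mprof run` and `mprof plot` commands produce a chart like the one above. If you only want a rough, in-process picture without extra dependencies, something like the following sketch could work. It uses the standard library's tracemalloc instead of memory-profiler, and `profile_steps` is a hypothetical helper. Keep in mind that tracemalloc only sees memory allocated through Python's allocators (plus whatever extension modules explicitly report to it), so its numbers won't match process-level readings.

```python
import tracemalloc

def profile_steps(steps):
    """Run each (label, fn) pair in order and record traced memory.

    Returns (label, current_bytes, peak_bytes) tuples, where peak_bytes is the
    high-water mark reached during that step alone.
    """
    results = []
    tracemalloc.start()
    try:
        for label, fn in steps:
            tracemalloc.reset_peak()  # requires Python 3.9+
            fn()
            current, peak = tracemalloc.get_traced_memory()
            results.append((label, current, peak))
    finally:
        tracemalloc.stop()
    return results

# Hypothetical steps: one allocation is temporary, the other is retained.
retained = []
steps = [
    ("temporary", lambda: bytearray(1_000_000)),                   # discarded right after fn() returns
    ("retained", lambda: retained.append(bytearray(2_000_000))),  # stays alive
]
results = profile_steps(steps)
for label, current, peak in results:
    print(f"{label:>9}: current={current / 1e6:.1f} MB, peak={peak / 1e6:.1f} MB")
```

A temporary allocation shows up as a high peak with a low current value, while retained memory raises the current value for every later step.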

Step 2: Reproduce the memory problem locally with a minimal example

Pinpointing the cause of a memory problem involves a lot of experimentation and iteration because the answer is not usually obvious. If it were, you probably wouldn't have written it into the code! For this reason, I think it is important to reproduce the problem with as few lines of code as possible. A minimal example lets you rerun the problem quickly under a profiler while you modify the code, so you can see whether you are making progress.

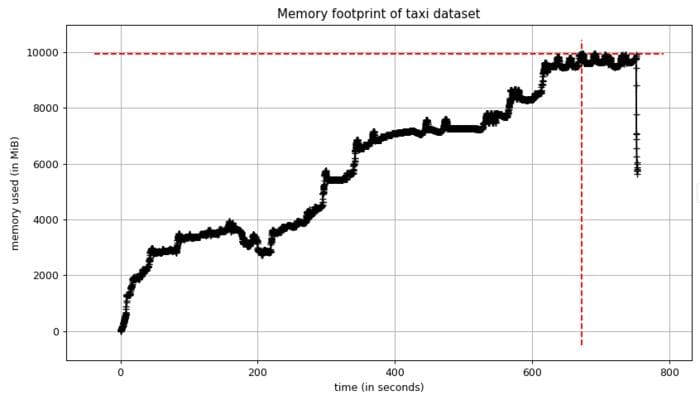

In my case, I knew from experience that our application runs a taxi dataset with 1.5 million rows at about the time I saw the big spike. I stripped down our application to only the part that runs this dataset. I saw a spike similar to what I described above, but this time, the memory usage reached 10 gigabytes!

After seeing this, I knew I had a good enough minimal example to dive deeper.

Memory footprint of local reproducer on taxi dataset
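A minimal reproducer also gives you something to measure repeatedly. The sketch below calls a workload several times and records how much traced memory is still allocated afterwards; the helper `memory_after_runs`, the `Node` class, and `hypothetical_workload` are all illustrative. Forcing a garbage collection before each reading is one way to tell two kinds of problems apart: memory that disappears after `gc.collect()` was held only by reference cycles, while memory that keeps growing anyway is still reachable from somewhere.

```python
import gc
import tracemalloc

def memory_after_runs(workload, runs=5, collect=False):
    """Call `workload` repeatedly and return the traced bytes still allocated
    after each call. With collect=True, a full garbage collection runs before
    each reading, so memory held only by unreachable cycles is not counted."""
    readings = []
    tracemalloc.start()
    try:
        for _ in range(runs):
            workload()
            if collect:
                gc.collect()
            readings.append(tracemalloc.get_traced_memory()[0])
    finally:
        tracemalloc.stop()
    return readings

class Node:
    """Hypothetical object that references itself, forming a cycle."""
    def __init__(self):
        self.payload = bytearray(1_000_000)
        self.me = self

def hypothetical_workload():
    Node()  # the result is discarded, but the cycle keeps it alive

gc.disable()  # simulate a collector that has not gotten around to running yet
try:
    without_gc = memory_after_runs(hypothetical_workload)
    with_gc = memory_after_runs(hypothetical_workload, collect=True)
finally:
    gc.enable()

print(without_gc)  # grows by roughly 1 MB per run
print(with_gc)     # stays roughly flat
```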


Step 3: Find the lines of code that are allocating the most memory

Once we’ve isolated the problem to as small a code chunk as possible, we can see where the program is allocating the most memory. This can be the smoking gun you need to be able to refactor the code and fix the problem.

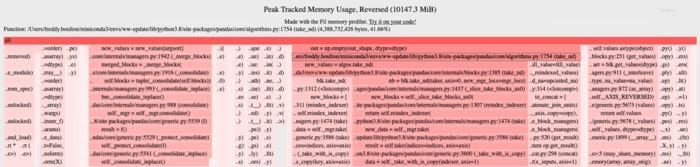

I think the filprofiler is a great Python tool to do this. It displays the memory allocation of each line of code in your application at the point of peak memory usage. This is the output on my local example:

The output of fil-profile

The filprofiler ranks the lines of code in your application (and your dependencies' code) by their memory allocation. The longer and redder the line is, the more memory is allocated.

The lines that allocate the most memory are creating pandas dataframes (pandas/core/algorithms.py and pandas/core/internals/managers.py) and amount to 4 gigabytes of data! I've truncated the filprofiler output here, but it is able to trace these pandas calls back to the code in EvalML that creates the dataframes.

Seeing this was a bit perplexing. Yes, EvalML creates pandas dataframes, but these dataframes are short-lived throughout the AutoML algorithm and should be deallocated as soon as they are no longer used. Since that was not happening, and these dataframes stayed in memory long after EvalML was done with them, I suspected the latest version had introduced a memory leak.
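filprofiler reports allocations at the moment of peak memory usage. If you can't install it, the standard library's tracemalloc offers a rougher version of the same idea: take a snapshot and group the live allocations by the source line that made them. Note that a snapshot shows what is allocated when you take it, not at the peak, and `build_tables` below is a hypothetical stand-in for code that creates large intermediate tables.

```python
import tracemalloc

def build_tables():
    # Hypothetical stand-in for code that creates large intermediate tables.
    big = [bytearray(100_000) for _ in range(50)]  # about 5 MB
    small = [bytearray(1_000) for _ in range(50)]  # about 50 KB
    return big, small

tracemalloc.start()
tables = build_tables()                 # keep the result alive while we look
snapshot = tracemalloc.take_snapshot()
tracemalloc.stop()

# Group live allocations by the source line that made them, largest first.
top_stats = snapshot.statistics("lineno")
for stat in top_stats[:3]:
    frame = stat.traceback[0]
    print(f"{frame.filename}:{frame.lineno}: {stat.size / 1e6:.2f} MB in {stat.count} blocks")
```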

Step 4: Identify leaking objects

In the context of Python, a leaking object is an object that is not deallocated by Python's garbage collector after the program is done using it. Since Python uses reference counting as one of its primary garbage collection algorithms, leaking objects are usually the result of other objects holding references to them longer than they should.
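To make the reference-counting point concrete: in CPython, an object is freed the moment its reference count drops to zero. The sketch below uses `weakref.finalize` to observe that moment; `Table` is a hypothetical class standing in for a dataframe, and the exact number `sys.getrefcount` prints varies between Python versions.

```python
import sys
import weakref

class Table:
    """Hypothetical stand-in for a large object such as a dataframe."""

freed = []

t = Table()
# Register a callback that runs when the object is deallocated.
weakref.finalize(t, freed.append, "table freed")

alias = t                   # a second reference to the same object
print(sys.getrefcount(t))   # includes the temporary reference from the call itself
del t
print(freed)                # [] (alias still keeps the object alive)
del alias
print(freed)                # ['table freed'] (last reference gone, freed immediately)
```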

These kinds of objects are tricky to find, but there are some Python tools you can leverage to make the search tractable. The first tool is the garbage collector's gc.DEBUG_SAVEALL flag. When this flag is set, the garbage collector stores the unreachable objects it finds in the gc.garbage list instead of freeing them, which lets you investigate those objects further.
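Here is a small sketch of the gc.DEBUG_SAVEALL workflow: create some objects that only the cycle collector can free, collect, and then filter gc.garbage by type, the same way you might filter it down to pandas dataframes. The `Frame` class is hypothetical. Remember to turn the flag off and clear gc.garbage afterwards, because anything saved there stays alive.

```python
import gc

class Frame:
    """Hypothetical object that ends up in a reference cycle."""
    def __init__(self):
        self.me = self  # self-reference: the refcount never drops to zero on its own

gc.collect()                    # start from a clean slate
gc.set_debug(gc.DEBUG_SAVEALL)  # save unreachable objects in gc.garbage instead of freeing them
try:
    for _ in range(3):
        Frame()                 # each instance is unreachable as soon as it is created
    gc.collect()
    # Filter the saved garbage down to the type we care about.
    leaked_frames = [obj for obj in gc.garbage if isinstance(obj, Frame)]
    print(f"{len(leaked_frames)} Frame objects could only be freed by the cycle collector")
finally:
    gc.set_debug(0)             # turn the flag back off
    gc.garbage.clear()          # release everything the flag saved
```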

The second tool is the objgraph library. Once the objects are in the gc.garbage list, we can filter this list to pandas dataframes, and use objgraph to see what other objects are referring to these dataframes and keeping them in memory. I got the idea for this approach by reading this O’Reilly blog post.
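objgraph can draw the chain of objects referring to a given object as an image (for example with its `show_backrefs` function, which needs Graphviz installed). The basic query underneath is also available in the standard library as `gc.get_referrers`, which returns one level of referrers at a time. This sketch sets up a cycle with the hypothetical classes `Frame` and `Accessor` and lists what refers to the frame.

```python
import gc

class Frame:
    """Hypothetical stand-in for a dataframe."""

class Accessor:
    """Hypothetical helper that keeps a back-reference to the frame it serves."""
    def __init__(self, frame):
        self._frame = frame

def describe_referrers(obj):
    """List the gc-tracked objects that hold a reference to `obj`."""
    lines = []
    for referrer in gc.get_referrers(obj):
        if isinstance(referrer, dict):
            keys = [key for key, value in referrer.items() if value is obj]
            lines.append(f"dict, under key(s) {keys}")
        else:
            lines.append(type(referrer).__name__)
    return lines

frame = Frame()
frame.accessor = Accessor(frame)  # frame -> accessor -> frame: a cycle

# Depending on the Python version, the accessor appears either directly or via
# its instance __dict__; the module's globals dict shows up as well.
for line in describe_referrers(frame):
    print(line)
```

To walk further back, you would call `gc.get_referrers` again on each result, which is essentially what objgraph automates.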

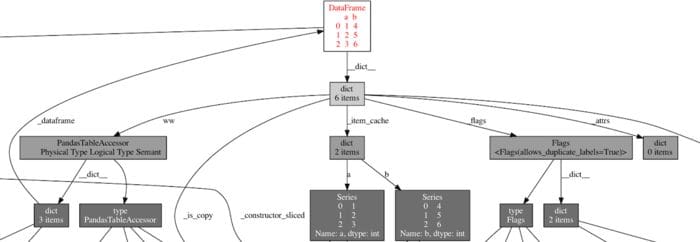

This is a subset of the object graph I saw when I visualized one of these dataframes:

A graph of the memory used by a pandas dataframe, showing a circular reference which results in a memory leak.

This is the smoking gun I was looking for! The dataframe refers to itself via something called the PandasTableAccessor, creating a circular reference. Reference counting alone can never free an object caught in a cycle, so the dataframe stays in memory until Python's garbage collector runs and is able to free it. (You can trace the cycle via dict, PandasTableAccessor, dict, _dataframe.) This was problematic for EvalML because these dataframes stayed in memory until the garbage collector got around to freeing them, and in the meantime they piled up until we ran out of memory!
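A stripped-down model of this cycle helps show why reference counting can't free it. In the sketch below, `Frame` and `Accessor` are hypothetical classes that mimic the shape of the graph above, not Woodwork's actual code. Automatic collection is disabled so the result doesn't depend on when the collector happens to run.

```python
import gc
import weakref

class Accessor:
    """Hypothetical accessor that keeps a strong reference to its frame."""
    def __init__(self, frame):
        self._frame = frame

class Frame:
    """Hypothetical stand-in for a dataframe holding a large buffer."""
    def __init__(self, n_bytes):
        self.data = bytearray(n_bytes)
        self.accessor = Accessor(self)  # frame -> accessor -> frame: a cycle

gc.disable()  # no automatic cycle collection, so the demo is deterministic
try:
    frame = Frame(10_000_000)
    probe = weakref.ref(frame)          # observes the frame without keeping it alive
    del frame
    alive_after_del = probe() is not None
    print(alive_after_del)              # True: refcounting alone cannot free the cycle
    gc.collect()                        # explicit collection still works while disabled
    print(probe() is None)              # True: the cycle collector frees it
finally:
    gc.enable()
```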

I was able to trace the PandasTableAccessor to the Woodwork library and bring this issue up with its maintainers. They fixed it in a new release and filed a related issue in the pandas repository, a great example of the collaboration that's possible in the open source ecosystem.

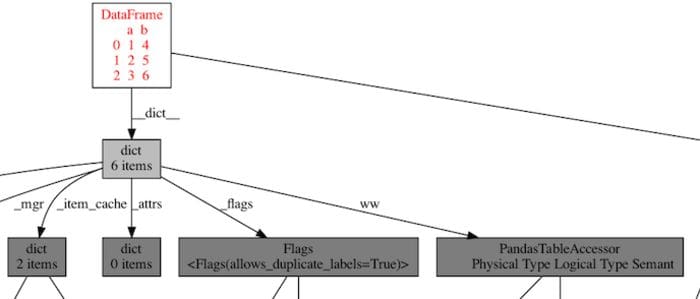

After the Woodwork update was released, I visualized the object graph of the same dataframe, and the cycle disappeared!

Object graph of pandas dataframe after the woodwork upgrade. No more cycles!
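The details of Woodwork's fix aren't covered here, but one common way to break a parent/helper cycle like this is to have the helper hold only a weak reference back to its parent. A minimal sketch, again with hypothetical `Frame` and `WeakAccessor` classes:

```python
import gc
import weakref

class WeakAccessor:
    """Hypothetical accessor that refers back to its frame only weakly."""
    def __init__(self, frame):
        self._frame_ref = weakref.ref(frame)  # does not keep the frame alive

    @property
    def frame(self):
        frame = self._frame_ref()
        if frame is None:
            raise ReferenceError("the frame for this accessor has been freed")
        return frame

class Frame:
    """Hypothetical stand-in for a dataframe holding a large buffer."""
    def __init__(self, n_bytes):
        self.data = bytearray(n_bytes)
        self.accessor = WeakAccessor(self)  # frame -> accessor, but no strong edge back

gc.disable()  # rule out the cycle collector for this demonstration
try:
    frame = Frame(10_000_000)
    probe = weakref.ref(frame)
    print(frame.accessor.frame is frame)  # True: the accessor can still reach its frame
    del frame
    freed_without_gc = probe() is None
    print(freed_without_gc)               # True: no cycle, so refcounting frees it at once
finally:
    gc.enable()
```

The trade-off is that the accessor must cope with its frame disappearing, which is why the `frame` property raises `ReferenceError` rather than silently returning `None`.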


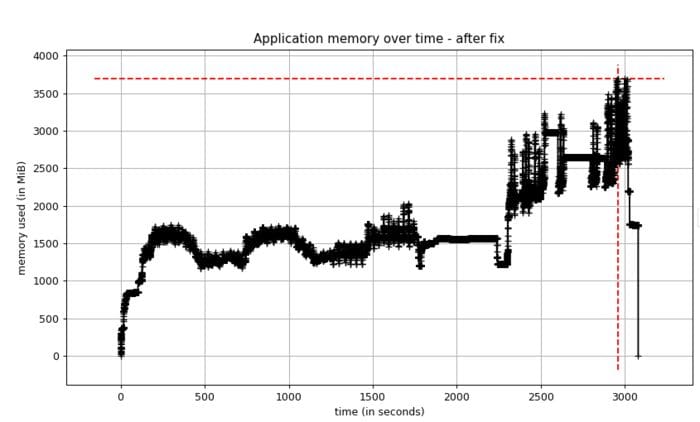

Step 5: Verify the fix works

Once I upgraded the Woodwork version in EvalML, I measured the memory footprint of our application. I’m happy to report that the memory usage is now less than half of what it used to be!

Memory of performance tests after the fix
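Beyond eyeballing a memory plot, a check like this can be turned into a regression test so a future change doesn't quietly reintroduce a cycle. The sketch below, with a hypothetical `Frame` class and helper `is_freed_by_refcounting`, asserts that an object is freed as soon as its last reference goes away while the cycle collector is switched off. The test functions follow pytest's naming convention, so pytest would pick them up, but they are plain Python and can be called directly.

```python
import gc
import weakref

class Frame:
    """Hypothetical object under test; holds a buffer but no self-references."""
    def __init__(self):
        self.payload = bytearray(1_000_000)

class CyclicFrame(Frame):
    """Hypothetical variant with a self-reference, to show the check failing."""
    def __init__(self):
        super().__init__()
        self.me = self

def is_freed_by_refcounting(factory):
    """Create an object, drop the only reference to it, and report whether it
    was freed immediately, i.e. without help from the cycle collector."""
    was_enabled = gc.isenabled()
    gc.disable()
    try:
        obj = factory()
        probe = weakref.ref(obj)
        del obj
        return probe() is None
    finally:
        if was_enabled:
            gc.enable()

def test_frame_is_not_kept_alive_by_a_cycle():
    assert is_freed_by_refcounting(Frame)

def test_check_detects_a_cycle():
    assert not is_freed_by_refcounting(CyclicFrame)
```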


Closing thoughts

As I said at the beginning of this post, there is no magic recipe for fixing memory problems, but this case study offers a general framework and set of tools you can leverage if you run into this situation in the future. I found memory-profiler and filprofiler to be helpful tools for debugging memory leaks in Python.

I also want to emphasize that circular references in Python can increase the memory footprint of your applications. The garbage collector will eventually free the memory but, as we saw in this case, maybe not until it's too late!
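If you suspect the collector is running too late, you can watch it directly. The standard library's `gc.callbacks` list lets you register a function that runs at the start and end of every collection; the sketch below records how many objects each collection freed. The `Node` class and the workload are hypothetical, and how often automatic collections happen depends on the Python version and its gc thresholds.

```python
import gc

gc_log = []

def log_collection(phase, info):
    """gc callback, invoked with phase "start" or "stop" around each collection."""
    if phase == "stop":
        gc_log.append({
            "generation": info["generation"],
            "collected": info["collected"],
            "uncollectable": info["uncollectable"],
        })

class Node:
    """Hypothetical object caught in a self-cycle."""
    def __init__(self):
        self.me = self

gc.callbacks.append(log_collection)
try:
    for _ in range(1_000):
        Node()        # each one becomes cyclic garbage right away
    gc.collect()      # force a full collection so at least one entry is logged
finally:
    gc.callbacks.remove(log_collection)

for entry in gc_log:
    print(entry)
```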

Circular references are surprisingly easy to introduce unintentionally in Python. I found unintentional ones in EvalML, scikit-optimize, and scipy. I encourage you to keep your eyes peeled, and if you see a circular reference in the wild, start a conversation to see if it is actually needed!

Bio: Freddy Boulton is a software engineer at Alteryx. He enjoys working on open source projects and riding his bicycle all over Boston.

Original. Reposted with permission.
