Back to Basics Week 3: Introduction to Machine Learning

Welcome back to Week 3 of KDnuggets’ "Back to Basics" series. This week, we will be diving into the world of machine learning.

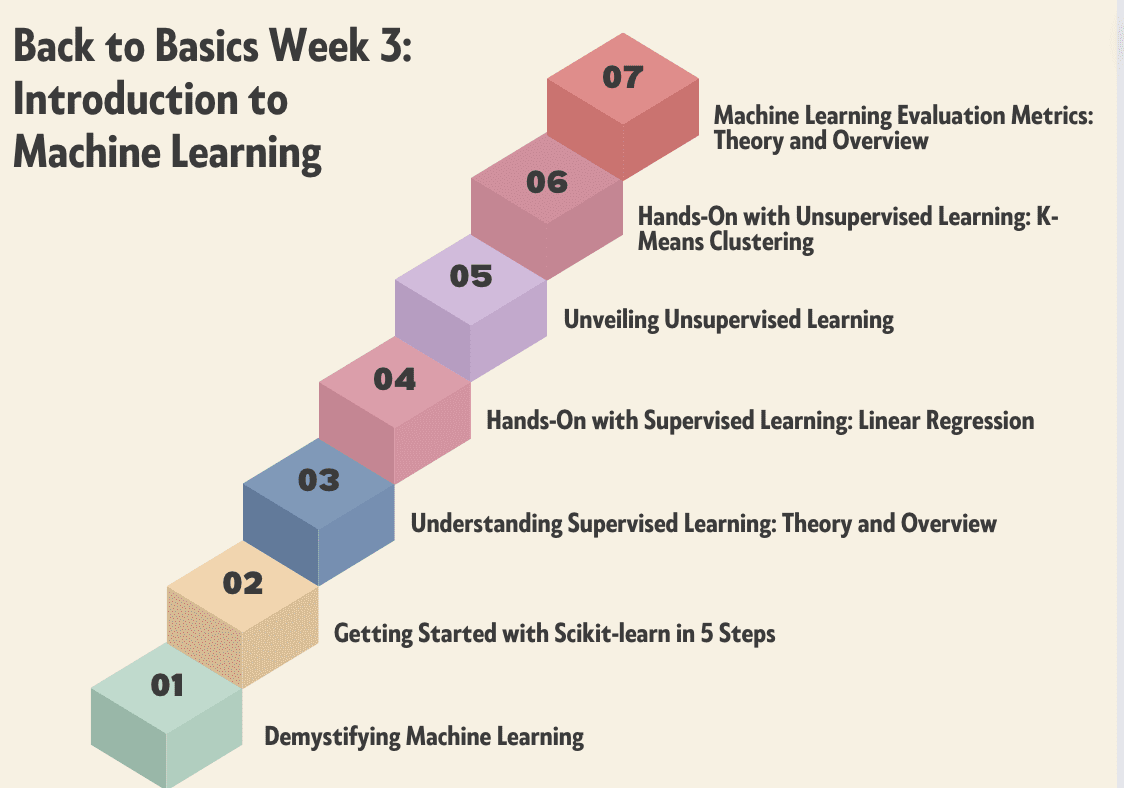

Image by Author

Join KDnuggets on our Back to Basics pathway to kickstart a new career or brush up on your data science skills. The Back to Basics pathway is split into 4 weeks with a bonus week. We hope you can use these blogs as a course guide.

If you haven’t already, have a look at:

- Week 1: Python Programming & Data Science Foundations

- Week 2: Database, SQL, Data Management and Statistical Concepts

Moving on to the third week, we will dive into machine learning.

- Day 1: Demystifying Machine Learning

- Day 2: Getting Started with Scikit-learn in 5 Steps

- Day 3: Understanding Supervised Learning: Theory and Overview

- Day 4: Hands-On with Supervised Learning: Linear Regression

- Day 5: Unveiling Unsupervised Learning

- Day 6: Hands-On with Unsupervised Learning: K-Means Clustering

- Day 7: Machine Learning Evaluation Metrics: Theory and Overview

Demystifying Machine Learning

Week 3 - Part 1: Demystifying Machine Learning

Traditionally, computers followed an explicit set of instructions. For instance, if you wanted the computer to perform a simple task such as adding two numbers, you had to spell out every step. However, as our data became more complex, this manual approach of giving instructions for each situation became inadequate.

This is where Machine Learning emerged as a game changer. We wanted computers to learn from examples just like we learn from our experiences. Imagine teaching a child how to ride a bicycle by showing them a few times and then letting them fall, figure it out, and learn on their own. That's the idea behind Machine Learning. This innovation has not only transformed industries but has become indispensable in today's world.

Getting Started with Scikit-learn in 5 Steps

Week 3 - Part 2: Getting Started with Scikit-learn in 5 Steps

This tutorial offers a comprehensive hands-on walkthrough of machine learning with Scikit-learn. Readers will learn key concepts and techniques including data preprocessing, model training and evaluation, hyperparameter tuning, and compiling ensemble models for enhanced performance.

When learning how to use Scikit-learn, we need an existing understanding of the underlying concepts of machine learning, since Scikit-learn is a practical tool for implementing machine learning principles and related tasks. Machine learning is a subset of artificial intelligence that enables computers to learn and improve from experience without being explicitly programmed. The algorithms use training data to make predictions or decisions by uncovering patterns and insights.
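As a rough illustration of the kind of workflow described here, the sketch below loads data, holds out a test set, preprocesses, trains, and evaluates. The dataset (Iris), the model (logistic regression), and the split settings are assumptions chosen for illustration; they are not necessarily the ones used in the tutorial.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load a small built-in dataset (an illustrative choice).
X, y = load_iris(return_X_y=True)

# Hold out a test set so that evaluation uses unseen data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Chain preprocessing (feature scaling) and the model into one pipeline.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Train on the training split only.
model.fit(X_train, y_train)

# Predict on the held-out split and measure accuracy.
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Test accuracy: {accuracy:.2f}")
```

Putting the scaler and the model in one pipeline means the scaling statistics are learned from the training split only, which keeps information about the test set out of training.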

Understanding Supervised Learning: Theory and Overview

Week 3 - Part 3: Understanding Supervised Learning: Theory and Overview

Supervised learning is a subcategory of machine learning in which the computer learns from a labelled dataset containing both the input and the correct output. It tries to find the mapping function that relates the input (x) to the output (y). You can think of it as teaching your younger brother or sister how to recognize different animals. You will show them some pictures (x) and tell them what each animal is called (y).

After a while, they will learn the differences and will be able to recognize new pictures correctly. This is the basic intuition behind supervised learning.
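The animal analogy can be sketched in a few lines of plain Python. This is only one simple way to learn a mapping from x to y (a nearest-neighbour lookup); the features (weight and height) and all the numbers are made up for illustration.

```python
import math

# Labelled training data: each input x is (weight_kg, height_cm) and
# each output y is the animal's name. The values are hypothetical.
training_data = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((30.0, 60.0), "dog"),
    ((25.0, 55.0), "dog"),
    ((500.0, 160.0), "horse"),
    ((450.0, 150.0), "horse"),
]


def predict(x):
    """Return the label of the training example closest to x."""
    _, nearest_label = min(
        training_data, key=lambda example: math.dist(example[0], x)
    )
    return nearest_label


print(predict((4.5, 24.0)))     # closest training examples are cats
print(predict((480.0, 155.0)))  # closest training examples are horses
```

The labelled pairs play the role of the pictures and names: the function never sees a rule such as "cats are small", yet it labels unseen inputs by comparing them with the examples it was given.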

Hands-On with Supervised Learning: Linear Regression

Week 3 - Part 4: Hands-On with Supervised Learning: Linear Regression

If you're looking for a hands-on experience with a detailed yet beginner-friendly tutorial on implementing Linear Regression using Scikit-learn, you're in for an engaging journey.

Linear regression is a fundamental supervised machine learning algorithm for predicting continuous target variables based on the input features. As the name suggests, it assumes that the relationship between the dependent and independent variables is linear.

So if we plot the dependent variable Y against the independent variable X, the points will lie along, or close to, a straight line.
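To make the straight-line idea concrete, here is a minimal sketch that fits a line by ordinary least squares in plain Python. It is not Scikit-learn's implementation, and the data is made up so that it lies exactly on y = 2x + 1.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-deviations and squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data lying exactly on the line y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [3.0, 5.0, 7.0, 9.0, 11.0]

slope, intercept = fit_line(xs, ys)
prediction = slope * 6.0 + intercept  # predict y for an unseen x = 6
print(slope, intercept, prediction)
```

With real data the points scatter around the line rather than sitting on it, and the same formulas give the line that minimises the squared vertical distances to the points.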

Unveiling Unsupervised Learning

Week 3 - Part 5: Unveiling Unsupervised Learning

Explore the unsupervised learning paradigm. Familiarize yourself with the key concepts, techniques, and popular unsupervised learning algorithms.

In machine learning, unsupervised learning is a paradigm that involves training an algorithm on an unlabeled dataset. So there’s no supervision or labeled outputs.

In unsupervised learning, the goal is to discover patterns, structures, or relationships within the data itself, rather than predicting or classifying based on labelled examples. It involves exploring the inherent structure of the data to gain insights and make sense of complex information.

Hands-On with Unsupervised Learning: K-Means Clustering

Week 3 - Part 6: Hands-On with Unsupervised Learning: K-Means Clustering

This tutorial provides hands-on experience with the key concepts and implementation of K-Means clustering, a popular unsupervised learning algorithm, for customer segmentation and targeted advertising applications.

K-means clustering is one of the most commonly used unsupervised learning algorithms in data science. It is used to automatically segment datasets into clusters or groups based on similarities between data points.

In this short tutorial, we will learn how the K-Means clustering algorithm works and apply it to real data using scikit-learn. Additionally, we will visualize the results to understand the data distribution.
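As a rough sketch of how the algorithm works, the plain-Python version below alternates between assigning points to their nearest centroid and moving each centroid to the mean of its cluster. The points and the hand-picked starting centroids are assumptions for illustration; this is not Scikit-learn's implementation, and libraries typically choose the initial centroids more carefully.

```python
import math


def k_means(points, initial_centroids, iterations=10):
    """Plain K-Means: alternate assignment and centroid-update steps."""
    centroids = list(initial_centroids)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for centroid, cluster in zip(centroids, clusters):
            if cluster:
                new_centroids.append(
                    tuple(sum(values) / len(cluster) for values in zip(*cluster))
                )
            else:
                new_centroids.append(centroid)  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # centroids stopped moving, so the result is stable
        centroids = new_centroids
    return centroids, clusters


# Hypothetical 2D points forming two visibly separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = k_means(points, initial_centroids=[points[0], points[3]])
print(centroids)
print(clusters)
```

No labels are passed in at any point: the two groups emerge purely from the distances between the points.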

Machine Learning Evaluation Metrics: Theory and Overview

Week 3 - Part 7: Machine Learning Evaluation Metrics: Theory and Overview

High-level exploration of evaluation metrics in machine learning and their importance.

Building a machine learning model that generalizes well on new data is very challenging. The model needs to be evaluated to understand whether it is good enough or needs some modifications to improve its performance.

If the model doesn’t learn enough of the patterns from the training set, it will perform badly on both training and test sets. This is the so-called underfitting problem.

If the model learns too much from the training data, including its noise, it will perform very well on the training set but poorly on the test set. This situation is called overfitting. A model generalizes well when its performance on the training and test sets is similarly good.
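The difference between underfitting, overfitting, and a good fit can be seen by comparing errors on the training and test sets. The sketch below uses made-up data and three deliberately simple models written in plain Python; the model choices and the use of mean squared error are assumptions for illustration.

```python
def mse(model, data):
    """Mean squared error of a model (a function x -> y) on (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


# Hypothetical data that roughly follows y = 2x + 1 with some noise.
train = [(1.0, 2.9), (2.0, 5.2), (3.0, 6.8), (4.0, 9.3), (5.0, 10.9)]
test = [(1.5, 4.1), (2.5, 5.9), (3.5, 8.2), (4.5, 9.8)]

# Too simple: always predicts the average training output.
mean_x = sum(x for x, _ in train) / len(train)
mean_y = sum(y for _, y in train) / len(train)


def constant_model(x):
    return mean_y


# Too flexible: memorises the training set and returns the output of
# the closest training input, noise included.
def memorising_model(x):
    _, nearest_y = min(train, key=lambda pair: abs(pair[0] - x))
    return nearest_y


# A straight line fitted to the training set by least squares.
slope = sum((x - mean_x) * (y - mean_y) for x, y in train) / sum(
    (x - mean_x) ** 2 for x, _ in train
)
intercept = mean_y - slope * mean_x


def linear_model(x):
    return slope * x + intercept


for name, model in [
    ("constant", constant_model),
    ("memorising", memorising_model),
    ("linear", linear_model),
]:
    print(f"{name:12s} train MSE={mse(model, train):.3f}  test MSE={mse(model, test):.3f}")
```

The constant model has a high error on both sets (underfitting), the memorising model has zero training error but a much higher test error (overfitting), and the linear model has a low error on both.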

Wrapping it Up

Congratulations on completing Week 3!

The team at KDnuggets hope that the Back to Basics pathway has provided readers with a comprehensive and structured approach to mastering the fundamentals of data science.

Week 4 will be posted next week on Monday - stay tuned!

Nisha Arya is a data scientist, freelance technical writer, and an editor and community manager for KDnuggets. She is particularly interested in providing data science career advice or tutorials and theory-based knowledge around data science. Nisha covers a wide range of topics and wishes to explore the different ways artificial intelligence can benefit the longevity of human life. A keen learner, Nisha seeks to broaden her tech knowledge and writing skills, while helping guide others.