Big Data, Bible Codes, and Bonferroni

This discussion will focus on 2 particular statistical issues to be on the look out for in your own work and in the work of others mining and learning from Big Data, with real world examples emphasizing the importance of statistical processes in practice.

Big Data presents opportunities for data mining and machine learning previously unimaginable, given the vast size of datasets from which we are able to learn, cluster upon, find associations within, and generally search for insights not before attainable. Mining Big Data is not a plug-and-play, one-size-fit-all, (insert another cliche here) process, however; though there seems to be alarmingly little discussion anymore of their importance in relation to Big Data, statistical thinking, methods, and processes matter.

It is possible that the lack of discussion is because most people understand this fundamental truth already, which I find doubtful. Perhaps I simply have not come across relevant such topics of late, and they do, in fact, exist. I also find this doubtful. I fear that oversight or an essential lack of understanding are more likely to blame.

This article is not a blanket criticism of learning from Big Data; instead, it is much more accurately a reminder that time-tested statistical methods are more valid now than ever, in this Era of Big Data. In that regard, this discussion will focus on 2 particular statistical issues to be on the look out for in your own work and in the work of others mining and learning from Big Data.

And for the practitioners out there, this is not about abstract statistical theory. This is about practicality. And the highly improbable probabilities that can be improperly gleaned from Big Data.

The Bonferroni Principle

There is a concept in statistics that goes like this: even in completely random datasets, you can expect particular events of interest to occur, and to occur in increasing numbers as the amount of data grows. These occurrences are nothing more than collections of random features that appear to be instances of interest, but are not. This bears repeating: even amounts of random data lead to what seem to be events of interest, and the number of these seemingly interesting events grows as does the size of the dataset.

There is a concept in statistics that goes like this: even in completely random datasets, you can expect particular events of interest to occur, and to occur in increasing numbers as the amount of data grows. These occurrences are nothing more than collections of random features that appear to be instances of interest, but are not. This bears repeating: even amounts of random data lead to what seem to be events of interest, and the number of these seemingly interesting events grows as does the size of the dataset.

The Bonferroni Principle1 is a statistical method for accounting for these random events. To employ it, determine the number of expected random events of interest in the dataset, and if the observed number is significantly greater than this number, the chances of any observations providing useful insight are almost nonexistent. The Bonferroni Correction is a technique for helping to avoid such observations.

Torture the data, and it will confess to anything.

— Ronald Coase, economist, Nobel Prize Laureate.

One of the most prominent and easy to understand examples of the Bonferroni Principle is that of the George W. Bush administration's Total Information Awareness data-collection and data mining plan of 20021. The criticism of the plan's effectiveness, and its relationship to the Bonferroni Principal, are as follows.

Suppose we are looking for terrorists, from a potential pool made up of a very large number of individuals. Let's say that, in actuality, however, there is an incredibly small number of individuals who are terrorists. Now suppose these potential terrorists are thought to be deliberately visiting particular locations in pairs for meetings, but let's further suppose that these potential terrorists are actually non-terrorists moving about randomly. By using hard numbers for such a scenario and working out the probabilities, Rajaraman & Ullman gives the example of one billion potential "evil-doers," and though the actual number may be something very small (they give the example of 10 pairs), statistical probabilities could put the number of suspected pairs meeting at given locations due to pure randomness at 250,000 (again, in this particular example).

Now, this is clearly a problem. In purely practical terms, imagine having to recruit, train, and pay enough police personnel to investigate each of these flagged individuals!

If a Big Data mining practitioner had first computed some number which could be proven a reasonable number of expected random events (the Bonferroni Principle in action), the entire investigation would have been immediately recognized as flawed, given the near-absolute certainty that this Bonferroni number would have been less than a quarter of a million, the suspected number of significant events shown above.

Knowing when our out-of-the-gate quantitative assumptions are off base is critically useful in the Era of Big Data. The Bonferroni Principle is one example of how Big Data can result in highly unlikely outcomes masquerading as statistically sound.

The Bible Code

The Look-elsewhere Effect is closely related to the Bonferroni Principle, and may be considered a more formal statement of the statistical concept. The Look-elsewhere Effect can be precisely stated in relation to p-values; we'll skip that discussion here, but check the Wikipedia page for more details.

The Look-elsewhere Effect is closely related to the Bonferroni Principle, and may be considered a more formal statement of the statistical concept. The Look-elsewhere Effect can be precisely stated in relation to p-values; we'll skip that discussion here, but check the Wikipedia page for more details.

The Look-elsewhere Effect occurs in data when what appears to be a statistically significant event is actually a result of chance, and only arises due to the large size of parameter search space. It is also referred to as the multiple comparison problem.

The Bible Code is a supposed collection of secretly-encoded messages hidden throughout the Bible, or more accurately, the Hebrew Torah. The idea of the Bible Code was popularized in the 1990s in a book by Michal Drosnin2. While originally enjoying some limited popularity and apprehensive acceptance by curious scholars (again, limited) and lay folk alike, the Bible Code has since been discredited in a number of different ways by a number of different people, although it apparently still enjoys confidence in some circles, and recently "predicted" Brexit vote results. You can read more about Bible code discreditation here and here and here.

In God we trust. All others must bring data.

— W. Edwards Deming, engineer, statistician, professor, author, lecturer, and management consultant.

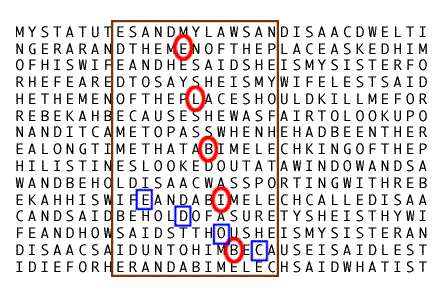

These supposed secretly-encoded messages of the Bible Code are uncovered by employing Equidistant Letter Sequence (ELS) (original paper). ELS a simple procedure which encompasses starting at a given letter in a string and skipping the same number of letters to put together a new string made up of the letters encountered during this skipping process.

In the example phrase below, starting at the second letter (H) and employing an ELS of 2 spells out the word 'HELLO':

The Bible Code is much more complex than this simple pattern searching, however. Variable skip letter values are employed, and the entire Torah is searched with each value. But then each of these results are overlayed with the results of other skip letter value results (see the red and blue highlighted letters in the above figure), in order to see if "relevant" terms overlap at some "significant" intersection, and so on, and so on, layering these ELS results one on top of another. Given this complexity, ELS and its application to the Bible Code is a problem that can be reduced to the cross product of an incredible number of parameters and, as such, result permutations become astronomical in size, and eventually something "significant" arises.

Similar results of significant findings have been replicated in a variety of other works of non-trivial length (see the above discrediting links). Given enough letters, skip sequences, and layers, even if you don't find some initial result of interest, continued (indefinite?) looking (elsewhere) should eventually turn up something juicy (Presidential election results, terror attacks, assassinations, all apparently encoded in the Bible).

This continued searching, without regard to a hypothesis or the bounds of probability that go along with these result set permutations, suggest that actual significant events will be lost in the fray. The Look-elsewhere Effect is another example of how Big Data can be abused unwittingly.

Conclusion

Statistics: they aren't just the outdated grandparents of data mining and analytics. The field of statistics is a time-tested set of tools and way of thinking which helps eliminate much of the structured uncertainty that can emerge from mining Big Data for insights. Employing statistical thinking from project inception is more relevant than ever in the Era of Big Data, and its oversight and the general lack of statistical rigor can lead to all sorts of practical problems, as outlined above.

To consult the statistician after an experiment is finished is often merely to ask him to conduct a post mortem examination. He can perhaps say what the experiment died of.

— Ronald Fisher, statistician, and biologist.

We know that Big Data is everywhere, and that it's potential may be limitless. But nobody says that Big Data on its own provides insights of any sort, and not applying proper procedures while extracting insights will not lead to clarity.

Remember your statistics.

References:

[1] Rajaraman, A., & Ullman, J. D. (2012). Mining of massive datasets. New York, NY: Cambridge University Press.

[2] Drosnin, M. (1997). The Bible code. New York: Simon & Schuster.

Related:

- Data Science of Variable Selection: A Review

- Eugenics – journey to the dark side at the dawn of statistics

- When Good Advice Goes Bad