And the Winner is… Stepwise Regression

This post evaluates several methods for automating the feature selection process in large-scale linear regression models and shows that, for marketing applications, the winner is stepwise regression.

By Jacob Zahavi and Ronen Meiri, DMWay Analytics.

Editor's note: This blog post was an entrant in the recent KDnuggets Automated Data Science and Machine Learning blog contest, where it received an honorable mention.

Predictive Analytics (PA), a core area of data science, is engaged in predicting future response based on past observations with known response values. The major problem afflicting PA models in this era of big data is the dimensionality issue which renders the model building process very tedious and time consuming.

The most complex component is the feature selection problem - selecting the most influential predictors “explaining” response. There are two conflicting concerns here: prediction accuracy, determined by the variance of the prediction error, and prediction bias when applying the model results to new observations. As more predictors are introduced to a model, model accuracy usually improves, but the bias may get worse. Hence a trade-off analysis between accuracy and bias is often required to find the “best” set of predictors for a model. Further complications arise from factors such as noisy data, redundant predictors, multi-collinearity, missing values and outliers.

The magnitude of the feature selection problem has led to the development of several methods to automate this process, which fall into three classes: statistical methods, stochastic methods and dimensionality reduction methods. In this study we set out to find the best performing model among “representative” models from each class: StepWise Regression (SWR) for statistical methods, Simulated Annealing (SA) for stochastic methods, and Principal Component Analysis (PCA) and Radial Basis Function (RBF) for dimensionality reduction methods. SWR was calibrated using the False Discovery Rate (FDR) algorithm; SA uses a random, yet systematic, search method that increases its likelihood of converging to a global optimum; and, finally, the dimensionality reduction methods collapse multiple attributes into a smaller set of “mega” predictors of response.
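To make the stepwise idea concrete, here is a minimal sketch of forward stepwise selection for a linear regression. It is an illustration only, not the calibration used in the study: instead of FDR-adjusted p-values it uses a fixed partial F-statistic threshold (`f_to_enter`, a hypothetical parameter name with an assumed default of 4.0), it only adds variables and never removes them, and the function names are made up for this example.

```python
import numpy as np


def rss(X, y, cols):
    """Residual sum of squares of an OLS fit (with intercept) on the given columns."""
    idx = np.array(cols, dtype=int)
    A = np.column_stack([np.ones(len(y)), X[:, idx]])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    resid = y - A @ beta
    return float(resid @ resid)


def forward_stepwise(X, y, f_to_enter=4.0):
    """Greedy forward selection: at each step add the predictor that reduces
    the RSS the most, and stop when its partial F-statistic is below f_to_enter.

    f_to_enter is an assumed stopping rule for this sketch, not the FDR
    calibration described in the post.
    """
    n, p = X.shape
    selected = []
    current_rss = rss(X, y, selected)
    while len(selected) < p:
        # Find the best single candidate to add to the current model.
        best_j, best_rss = None, current_rss
        for j in range(p):
            if j in selected:
                continue
            candidate_rss = rss(X, y, selected + [j])
            if candidate_rss < best_rss:
                best_j, best_rss = j, candidate_rss
        if best_j is None:
            break
        # Residual degrees of freedom of the enlarged model (intercept included).
        df = n - len(selected) - 2
        f_stat = (current_rss - best_rss) / (best_rss / df)
        if f_stat < f_to_enter:
            break
        selected.append(best_j)
        current_rss = best_rss
    return selected
```

The search is "myopic" in the sense that each step only looks one variable ahead, which is exactly what a stochastic method such as SA tries to improve on by exploring combinations that a greedy path would never visit.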

We conducted this study on the Linear Regression (LR) model, not only because it is one of the most common models in Machine Learning (ML) but also because it constitutes the foundation for many other ML methods.

To compare “apples” to “apples”, we optimized the model configuration for each model class and then compared the best performing models to one another. At this point we ignored transformations of the original variables, which may give some model types an advantage over others, and conducted the comparative analysis using the original variables only. All methods were applied to three realistic marketing datasets provided by DMEF (The Direct Marketing Educational Foundation), referred to as non-profit, specialty and gift, each containing around 100,000 observations and scores of potential predictors.

We used the well-known R2 (“R-square”) criterion as the measure of fit, seeking the model that maximizes R2 for the validation dataset, subject to the condition that the ratio between the R2 for the validation dataset and the R2 for the training dataset is approximately equal to 1.0. Maximizing R2 ensures high model accuracy. The constraint that the R2 ratio is close to 1 implies no over-fitting. The methods were evaluated based on several performance metrics common in data mining, including gains charts, maximum lift (M-L) and Gini coefficients.
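The selection criterion and the gains-based metrics can be sketched in a few lines. The post does not give exact formulas for the Gini coefficient or for maximum lift, so the definitions below are assumptions: the gains curve is the cumulative share of total response captured when observations are ranked by predicted score, and the Gini coefficient is taken as twice the area between that curve and the diagonal. Maximum lift is left out because its definition is not stated. All function names are hypothetical, and the response is assumed to be non-negative (for example, a purchase amount).

```python
import numpy as np


def r_squared(y, y_hat):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - np.mean(y)) ** 2)
    return float(1.0 - ss_res / ss_tot)


def r2_ratio(y_train, pred_train, y_valid, pred_valid):
    """Validation R2 divided by training R2; values near 1 suggest no over-fitting."""
    return r_squared(y_valid, pred_valid) / r_squared(y_train, pred_train)


def gains_curve(y, score):
    """Cumulative share of response captured vs. share of population contacted,
    ranking observations from highest to lowest predicted score."""
    order = np.argsort(-score)
    cum_resp = np.cumsum(y[order]) / np.sum(y)
    frac_pop = np.arange(1, len(y) + 1) / len(y)
    # Prepend the origin so the curve starts at (0, 0).
    return np.concatenate([[0.0], frac_pop]), np.concatenate([[0.0], cum_resp])


def gini_from_gains(frac_pop, cum_resp):
    """Assumed definition: twice the area between the gains curve and the diagonal."""
    widths = np.diff(frac_pop)
    area = np.sum((cum_resp[1:] + cum_resp[:-1]) * widths / 2.0)  # trapezoid rule
    return float(2.0 * area - 1.0)
```

Under this definition a model that ranks observations at random has a Gini coefficient near 0, and better rankings push the gains curve further above the diagonal.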

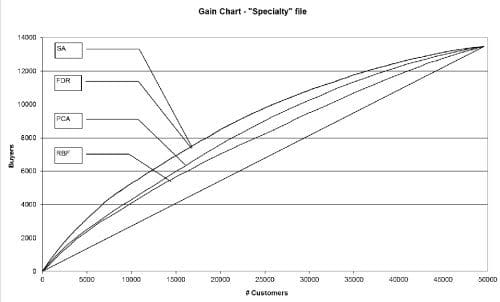

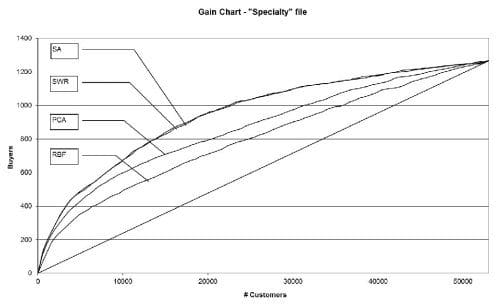

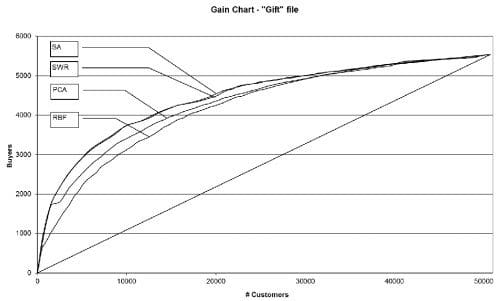

For easy evaluation we superimpose the gains charts for all four models (SWR, SA, PCA and RBF) one on top of the other, for each of the data files mentioned above (Figures 1-3). Each gains chart corresponds to the optimal parameter configuration for the respective model within the model class. Table 1 presents the corresponding summary statistics. All gains charts, as well as the related Gini and M-L measures, are presented for the validation datasets.

Surprisingly, the well-known SWR model yields results similar to those of the supposedly more powerful SA model. We attribute these results to the fact that people behave rationally in the marketplace which, when reflected in the database, yields “well behaved” datasets with no complex relationships between the data elements. As a result, even the myopic SWR model can identify the most influential set of predictors. As to the dimensionality reduction methods, both performed much worse than SWR for all data files. A possible explanation for this phenomenon is that in reducing the dimensions no regard is given to the response variable, thus yielding “weaker” predictors of response.
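The explanation offered for the dimensionality reduction results can be illustrated with a toy example. The data below are synthetic and chosen to make the point as stark as possible (they are not the DMEF files): one predictor has a large variance but is unrelated to the response, while a second, low-variance predictor drives it. Because PCA looks only at the predictors, its first component follows the high-variance column and carries almost no information about the response.

```python
import numpy as np


def r2_simple(x, y):
    """R-squared of a one-predictor OLS fit with intercept (squared correlation)."""
    return float(np.corrcoef(x, y)[0, 1] ** 2)


rng = np.random.default_rng(0)
n = 2000
x1 = rng.normal(scale=10.0, size=n)      # high variance, unrelated to response
x2 = rng.normal(scale=1.0, size=n)       # low variance, drives the response
y = x2 + rng.normal(scale=0.1, size=n)

# PCA via SVD of the centered predictor matrix; the response is never used.
X = np.column_stack([x1, x2])
Xc = X - X.mean(axis=0)
_, _, vt = np.linalg.svd(Xc, full_matrices=False)
pc1 = Xc @ vt[0]                         # scores on the first principal component

r2_pc1 = r2_simple(pc1, y)               # fit using the first "mega" predictor
r2_x2 = r2_simple(x2, y)                 # fit using the original predictor
print(f"R2 using PC1: {r2_pc1:.3f}, R2 using x2: {r2_x2:.3f}")
```

A selection method that consults the response, such as stepwise regression, would pick `x2` immediately in this setup, whereas keeping only the leading principal component discards it.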

These conclusions have major practical implications because they suggest that for marketing applications one may just as well use the conventional SWR algorithm to build large-scale LR models. Not only is SWR well known and widely available in software, but further studies with this algorithm suggest that SWR has enough leeway to allow one to use any reasonable range of p-values to remove/introduce variables to the model and still get a valid model. While further research is required to generalize the results of this study to other domains, for marketing applications, in all likelihood, the winner is... stepwise regression!

Dr. Jacob Zahavi is Co-Founder and CAO of DMWay Analytics and Professor (emeritus) at the Faculty of Management at Tel Aviv University. His main area of interest is in data mining where his experience spans over 25 years encompassing several fronts – research, teaching, software development and applications. He is also the two-time winner of the gold medal award in the KDD CUP competition.

Dr. Ronen Meiri, Co-Founder and CTO of DMWay Analytics, has 15 years of practical experience in advanced analytics in various industries including actuary, behavioural targeting, credit risk, foresting, customer retention, fraud detection and more.
