Yann LeCun Quora Session Overview

Here is a quick overview, with excerpts, of the Yann LeCun Quora Session, which took place on Thursday, July 28, 2016.

On Thursday, July 28, 2016, Yann LeCun took part in a Quora Session, a Q&A event hosted by Quora and roughly analogous to Reddit's AMA (Ask Me Anything) format. LeCun was asked numerous questions and provided insightful answers to many of them.

What follows are a few excerpts from the session: the questions posed and portions of LeCun's answers.

What are some recent and potentially upcoming breakthroughs in deep learning?

LeCun first singles out one breakthrough in particular.

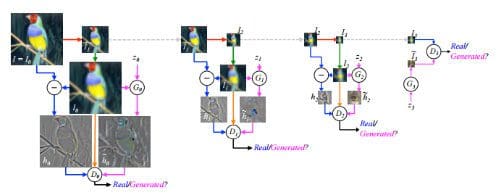

The most important one, in my opinion, is adversarial training (also called GAN for Generative Adversarial Networks). This is an idea that was originally proposed by Ian Goodfellow when he was a student with Yoshua Bengio at the University of Montreal (he since moved to Google Brain and recently to OpenAI).

This, and the variations that are now being proposed is the most interesting idea in the last 10 years in ML, in my opinion.

He then elaborates on its importance.

Why is that so interesting? It allows us to train a discriminator as an unsupervised “density estimator”, i.e. a contrast function that gives us a low value for data and higher output for everything else. This discriminator has to develop a good internal representation of the data to solve this problem properly. It can then be used as a feature extractor for a classifier, for example.
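The two sides of the adversarial game can be made concrete in a few lines of Python/NumPy. This is a minimal sketch, assuming the probabilistic discriminator of the original GAN formulation, which outputs D(x), an estimate of the probability that x is real. Under that convention, the "low value for data" LeCun describes corresponds to reading -log D(x) as an energy. The function names (`discriminator_loss`, `generator_loss`, `contrast`) are hypothetical, and no networks are trained here; the functions only compute each player's loss from discriminator outputs.

```python
import numpy as np

EPS = 1e-12  # keeps log() away from 0

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy minimized by the discriminator.

    d_real: discriminator outputs D(x) on real samples, values in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated samples
    The loss is small when D(x) -> 1 on data and D(G(z)) -> 0 on fakes.
    """
    d_real = np.clip(d_real, EPS, 1 - EPS)
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D(G(z)) -> 1."""
    d_fake = np.clip(d_fake, EPS, 1 - EPS)
    return -np.mean(np.log(d_fake))

def contrast(d_out):
    """Read D as a contrast function: low for data-like x, high elsewhere."""
    return -np.log(np.clip(d_out, EPS, 1.0))

# A confident, correct discriminator has near-zero loss ...
print(discriminator_loss(np.full(4, 0.999), np.full(4, 0.001)))
# ... and when G matches the data and D outputs 0.5 everywhere,
# the discriminator loss is 2 * log(2), about 1.386.
print(discriminator_loss(np.full(4, 0.5), np.full(4, 0.5)))
```

Training alternates gradient steps on these two losses. The non-saturating generator loss is the variant suggested in the original GAN paper: early in training, when the discriminator easily rejects generated samples, it gives the generator stronger gradients than directly minimizing log(1 - D(G(z))).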

But perhaps more interestingly, the generator can be seen as parameterizing the complicated surface of real data: give it a vector Z, and it maps it to a point on the data manifold. There are papers where people do amazing things with this, like generating pictures of bedrooms, doing arithmetic on faces in the Z vector space: [man with glasses] - [man without glasses] + [woman without glasses] = [woman with glasses].
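The Z-space arithmetic can be sketched as follows. This is a minimal NumPy sketch with a hypothetical, untrained stand-in for the generator, so the resulting "image" is meaningless; it only shows where the arithmetic happens. The latent size of 100, the 64x64 output, and averaging three exemplar Z vectors per concept are all assumptions (averaging a few exemplars is a common trick because a single sample's Z vector tends to be noisy).

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM = 100         # assumed size of the Z vector
IMAGE_SHAPE = (64, 64)   # assumed output size

# Hypothetical stand-in for a trained generator G mapping Z to image space.
# A real generator is a deep convolutional network; this fixed random
# projection followed by tanh only makes the pipeline runnable.
_W = rng.normal(size=(IMAGE_SHAPE[0] * IMAGE_SHAPE[1], LATENT_DIM)) / np.sqrt(LATENT_DIM)

def generator(z):
    """Map a latent vector z to a point in 'image' space (pixels in [-1, 1])."""
    return np.tanh(_W @ z).reshape(IMAGE_SHAPE)

def concept(zs):
    """Average the Z vectors of a few samples that share a visual concept."""
    return np.mean(zs, axis=0)

# Hypothetical exemplars: in practice, Z vectors whose generated images
# were picked by hand as showing each concept (3 per concept here).
z_man_glasses = rng.normal(size=(3, LATENT_DIM))
z_man_plain = rng.normal(size=(3, LATENT_DIM))
z_woman_plain = rng.normal(size=(3, LATENT_DIM))

# [man with glasses] - [man without glasses] + [woman without glasses]
z_result = concept(z_man_glasses) - concept(z_man_plain) + concept(z_woman_plain)
image = generator(z_result)  # with a real trained G: ideally a woman with glasses
```

The arithmetic itself is plain vector addition; what makes the published results striking is that a trained, highly nonlinear generator maps the resulting vector to a coherent image with the expected attributes.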

What are your recommendations for self-studying machine learning?

LeCun provides the following pointed guidance:

- an overview paper in Nature by myself, Yoshua Bengio and Geoff Hinton with lots of pointers to the literature: https://scholar.google.com/citat...

- The Deep Learning textbook by Goodfellow, Bengio and Courville: Deep Learning

- A recent series of 8 lectures on deep learning that I gave at Collège de France in Paris. The lectures were taught in French and later dubbed in English:

- the Coursera course on neural nets by Geoff Hinton (starting to be a bit dated).

- the lectures from the 2012 IPAM Summer School on Deep Learning: Graduate Summer School: Deep Learning, Feature Learning (Schedule) - IPAM

- my 2015 course on Deep Learning at NYU: deeplearning2015:schedule | CILVR Lab @ NYU (unfortunately, the videos of the lectures had to be taken down due to stupid legal reasons, but the slides are there). I’m teaching this course again in the Spring of 2017.

- The 2015 deep learning summer school: Deep Learning Summer School, Montreal 2015

- Various tutorials generally centered on using a particular software platform, like Torch, TensorFlow or Theano.

What are the limits of deep learning?

After a quick setup, LeCun provides this great insight.

To enable deep learning systems to reason, we need to modify them so that they don’t produce a single output (say the interpretation of an image, the translation of a sentence, etc), but can produce a whole set of alternative outputs (e.g the various ways a sentence can be translated). This is what energy-based models are designed to do: give you a score for each possible configuration of the variables to be inferred. A particular instance of energy-based models is factor graphs (non-probabilistic graphical models). Combining learning systems with factor graphs is known as “structured prediction” in machine learning. There have been many proposals to combine neural nets and structured prediction in the past, going back to the early 1990s. In fact, the check reading system my colleague and I built at Bell Labs in the early 1990s used a form of structured prediction on top of convolutional nets that we called “Graph Transformer Networks”. There has been a number of recent works on sticking graphical models on top of ConvNets and training the whole thing end to end (e.g. for human body pose estimation and such).

For a review/tutorial on energy-based models and structured prediction on top of neural nets (or other models) see this paper: https://scholar.google.com/citat...
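The idea of scoring every possible output, rather than producing a single one, can be illustrated with a tiny chain-structured factor graph. This is a minimal, hypothetical sketch, not a reconstruction of Graph Transformer Networks: the `unary` costs stand in for per-position label scores that a ConvNet might produce, and the `pairwise` costs stand in for learned transition costs between neighboring labels. Lower energy means a better configuration, and the energies are never normalized into probabilities. Brute-force enumeration returns a ranked set of alternatives, min-sum dynamic programming (Viterbi) finds the single best one efficiently, and a perceptron-style loss, one of several losses used to train energy-based models and included here only as an illustration, shows what end-to-end training would push on.

```python
import itertools
import numpy as np

def energy(labels, unary, pairwise):
    """Energy of one configuration of a chain factor graph (lower is better).

    unary[t, k]   : cost of assigning label k at position t
    pairwise[j, k]: cost of label j being followed by label k
    """
    e = sum(unary[t, k] for t, k in enumerate(labels))
    e += sum(pairwise[a, b] for a, b in zip(labels, labels[1:]))
    return float(e)

def ranked_outputs(unary, pairwise, top_k=3):
    """Score every configuration and return the top_k lowest-energy ones.

    Exhaustive enumeration costs K**T, so it only suits toy problems; it is
    here to show that the model assigns a score to every candidate output.
    """
    T, K = unary.shape
    scored = [(energy(y, unary, pairwise), y)
              for y in itertools.product(range(K), repeat=T)]
    scored.sort(key=lambda pair: pair[0])
    return scored[:top_k]

def min_sum(unary, pairwise):
    """Exact lowest-energy configuration by min-sum dynamic programming (Viterbi)."""
    T, K = unary.shape
    cost = unary[0].copy()              # best energy of a prefix ending in each label
    back = np.zeros((T, K), dtype=int)  # back-pointers to the best previous label
    for t in range(1, T):
        # total[j, k]: best prefix ending in label j at t-1, then label k at t
        total = cost[:, None] + pairwise + unary[t][None, :]
        back[t] = np.argmin(total, axis=0)
        cost = np.min(total, axis=0)
    labels = [int(np.argmin(cost))]
    for t in range(T - 1, 0, -1):
        labels.append(int(back[t, labels[-1]]))
    return tuple(reversed(labels)), float(np.min(cost))

def perceptron_loss(gold, unary, pairwise):
    """Energy of the correct answer minus the lowest energy over all answers.

    Zero only when the correct answer already has the lowest energy. If the
    costs came from trainable networks, minimizing it would push the correct
    answer's energy down and the current best (possibly wrong) answer's up.
    """
    _, best_energy = min_sum(unary, pairwise)
    return energy(gold, unary, pairwise) - best_energy

# Hypothetical scores: 4 positions, 3 possible labels per position.
rng = np.random.default_rng(0)
unary = rng.normal(size=(4, 3))
pairwise = rng.normal(size=(3, 3))

for e, y in ranked_outputs(unary, pairwise):
    print(y, round(e, 3))
print(min_sum(unary, pairwise))
```

The ranked list is the "whole set of alternative outputs" in miniature; on realistic problems the enumeration would be replaced by dynamic programming, beam search, or another approximate search over the graph.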

The rest of the Yann LeCun Quora Session is just as great a read as the above excerpts. I highly recommend you take a look at it ASAP.
