An Overview of Logistic Regression

Logistic regression is an extension of linear regression to solve classification problems.

By Arvind Thorat, Kavikulguru Institute of Technology and Science

Machine Learning is the science (and art) of programming computers so they can learn from data. Here is a slightly more general definition:

[Machine Learning is the] field of study that gives computers the ability to learn without being explicitly programmed.

—Arthur Samuel, 1959

And a more engineering-oriented one:

A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E.

—Tom Mitchell, 1997

In this article, we discuss logistic regression, a widely used algorithm in many industries and machine learning applications.

Logistic Regression

Logistic regression is an extension of linear regression to solve classification problems. We will see how a simple logistic regression problem is solved using optimization based on gradient descent, which is one of the most popular optimization methods.
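Gradient descent for logistic regression can be sketched in a few lines of plain Python. This is a minimal sketch, assuming the mean log loss (cross-entropy) as the objective; its gradient with respect to z for each sample is simply the predicted probability minus the label. The function names, the learning rate of 0.1, the 1,000 iterations and the toy data are illustrative assumptions, not part of the original article.

```python
import math


def sigmoid(z):
    """Logistic (sigmoid) function: maps any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic_regression(X, y, learning_rate=0.1, n_iterations=1000):
    """Fit weights W and bias B by batch gradient descent on the mean log loss.

    X is a list of feature vectors (lists of floats); y is a list of 0/1 labels.
    """
    n_samples, n_features = len(X), len(X[0])
    W = [0.0] * n_features
    B = 0.0
    for _ in range(n_iterations):
        grad_W = [0.0] * n_features
        grad_B = 0.0
        for x_i, y_i in zip(X, y):
            z = sum(w * x for w, x in zip(W, x_i)) + B
            error = sigmoid(z) - y_i  # d(log loss)/dz for this sample
            for j in range(n_features):
                grad_W[j] += error * x_i[j]
            grad_B += error
        # Step against the gradient averaged over all samples.
        W = [w - learning_rate * g / n_samples for w, g in zip(W, grad_W)]
        B -= learning_rate * grad_B / n_samples
    return W, B


def predict(x, W, B):
    """Class 1 if the predicted probability is at least 0.5, else class 0."""
    z = sum(w * x_j for w, x_j in zip(W, x)) + B
    return 1 if sigmoid(z) >= 0.5 else 0


# Hypothetical one-feature toy data: negative x -> class 0, positive x -> class 1.
X = [[-2.0], [-1.5], [-1.0], [-0.5], [0.5], [1.0], [1.5], [2.0]]
y = [0, 0, 0, 0, 1, 1, 1, 1]

W, B = fit_logistic_regression(X, y)
print([predict(x, W, B) for x in X])  # → [0, 0, 0, 0, 1, 1, 1, 1]
```

Because this toy data is perfectly separable, the log loss keeps shrinking as W grows, so in practice you would stop after a fixed number of iterations or add regularization.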

Assumptions

Many of you may be wondering whether logistic regression can be used with many independent variables as well.

The answer is yes, logistic regression can be used for as many independent variables as you want. However, be aware that you won’t be able to visualize the results in more than three dimensions.

Before getting to those points, let's first discuss the logistic function, also known as the sigmoid function.

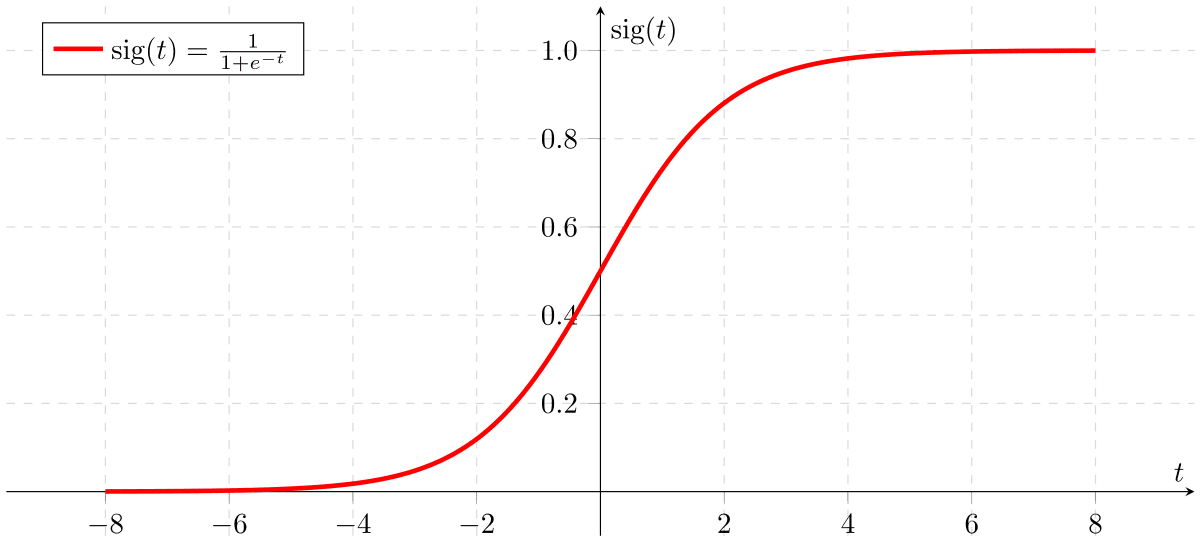

Figure 1: Sigmoid function

Logistic regression is named for the function used at the core of the method, the logistic function, also called the sigmoid function. In ecology it describes population growth, which rises quickly and then levels off at the carrying capacity of the environment. It is an S-shaped curve that can take any real-valued number and map it to a value between 0 and 1, but never exactly at those limits.

The sigmoid function is defined as:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

where:

e = base of the natural logarithm

z = the input value (any real number)
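A direct translation of the formula into Python is sketched below. The two-branch form is an assumption of this example, a common trick to avoid overflow in `math.exp` when z is a large negative number; both branches compute the same value.

```python
import math


def sigmoid(z: float) -> float:
    """Compute 1 / (1 + e^(-z)) for a single real number z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For z < 0, use the equivalent form e^z / (1 + e^z) so that
    # math.exp is never called with a huge positive argument.
    exp_z = math.exp(z)
    return exp_z / (1.0 + exp_z)


for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"sigmoid({z:>5}) = {sigmoid(z):.5f}")
```

In floating-point arithmetic, very large |z| still rounds to exactly 0.0 or 1.0 (for example, `sigmoid(1000.0)` returns 1.0), even though the mathematical function never reaches those limits.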

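Logistic regression is also useful when the classes cannot be cleanly separated by a linear boundary: instead of a hard yes/no split, the sigmoid turns the model's output into a probability of class membership.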

The odds of an event are defined as:

$$\frac{p}{1 - p}$$

where:

p = probability of the event

Taking the natural logarithm of the odds gives the log-odds, or logit:

$$\log \frac{p}{1 - p}$$
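The odds and log-odds can be checked numerically with a short Python sketch (the helper names are hypothetical). It also shows why the sigmoid appears in logistic regression: the sigmoid is the inverse of the log-odds, so modelling the log-odds as a linear score z and passing z through the sigmoid gives back a probability.

```python
import math


def odds(p: float) -> float:
    """Odds of an event with probability p (0 < p < 1): p / (1 - p)."""
    return p / (1.0 - p)


def log_odds(p: float) -> float:
    """Log-odds (logit): natural log of p / (1 - p)."""
    return math.log(odds(p))


def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


p = 0.8
print(odds(p))               # about 4: the event is 4 times as likely as not
print(log_odds(p))           # natural log of 4, about 1.386
print(sigmoid(log_odds(p)))  # recovers p = 0.8, up to rounding
```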

Logistic regression was first used in the biological sciences and was later adopted in many social science applications. It is used when the dependent variable is categorical.

Here is a simple model:

Output: y = 0 or 1

Hypothesis:

$$z = Wx + B$$

$$h_\theta(x) = \sigma(z) = \frac{1}{1 + e^{-z}}$$

There are two limiting cases:

- If z → ∞, then h_θ(x) → 1, so Y(predict) = 1.

- If z → -∞, then h_θ(x) → 0, so Y(predict) = 0.
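The hypothesis and the decision rule can be sketched in Python as follows. The weights, bias and the 0.5 threshold are assumptions for illustration; 0.5 is a common default cut-off, and it corresponds to z = 0 because sigmoid(0) = 0.5.

```python
import math


def hypothesis(x, W, B):
    """h(x) = sigmoid(z), where z = W . x + B for one sample x."""
    z = sum(w * x_j for w, x_j in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, W, B, threshold=0.5):
    """Y(predict) = 1 if h(x) >= threshold, else 0.

    With the default threshold of 0.5 this is mathematically
    equivalent to checking z >= 0.
    """
    return 1 if hypothesis(x, W, B) >= threshold else 0


# Hypothetical parameters for a two-feature model.
W = [2.0, -1.0]
B = -0.5

print(predict([3.0, 1.0], W, B))  # z = 6 - 1 - 0.5 = 4.5   → 1
print(predict([0.0, 2.0], W, B))  # z = 0 - 2 - 0.5 = -2.5  → 0
```

Large positive z pushes h(x) toward 1 and large negative z pushes it toward 0, matching the two limiting cases.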

Before we implement any algorithm, we have to prepare data for it. Here are some data preparation methods for logistic regression.

Methodology

- Data preparation

- Binary output variable

- Remove noise

- Gaussian-distributed inputs

- Remove correlated inputs
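As one example of the last step, removing correlated inputs, here is a plain-Python sketch that drops any column whose Pearson correlation with an already kept column exceeds a cut-off. The 0.9 threshold, the greedy keep-the-first-column rule, the helper names and the synthetic data are all hypothetical choices, not a standard recipe.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def drop_correlated_columns(columns, threshold=0.9):
    """Return indices of the columns to keep: a column is kept only if its
    |correlation| with every column already kept is at most `threshold`."""
    kept = []
    for j, col in enumerate(columns):
        if all(abs(pearson(col, columns[k])) <= threshold for k in kept):
            kept.append(j)
    return kept


# Hypothetical inputs: x1 is almost exactly 2 * x0, while x2 is unrelated noise.
rng = random.Random(0)
x0 = [rng.gauss(0.0, 1.0) for _ in range(200)]
x1 = [2.0 * v + rng.gauss(0.0, 0.05) for v in x0]
x2 = [rng.gauss(0.0, 1.0) for _ in range(200)]

print(drop_correlated_columns([x0, x1, x2]))  # → [0, 2]
```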

Examples of Usage

- Credit Scoring

- Medicine

- Text Editing

- Gaming

Advantages

Logistic regression is one of the most efficient techniques for solving classification problems. Some of the advantages of using logistic regression:

- Logistic Regression is easy to implement, interpret and very efficient to train. It is very fast at classifying unknown records.

- It performs well when the dataset is linearly separable.

- Its model coefficients can be interpreted as indicators of feature importance.
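One common way to read the coefficients is through odds ratios: exp(w) is the factor by which the odds of class 1 change for a one-unit increase in that feature, with the other features held fixed. The sketch below uses made-up coefficients and feature names; ranking by |w| as a proxy for importance assumes the features were first put on a common scale, for example by standardization.

```python
import math

# Hypothetical fitted coefficients for three standardized features.
coefficients = {"age": 0.8, "income": -0.3, "num_accounts": 0.05}

# exp(w): multiplicative change in the odds per one-unit increase in a feature.
odds_ratios = {name: math.exp(w) for name, w in coefficients.items()}

# Rank by |w|; the comparison only makes sense when features share a scale.
ranking = sorted(coefficients, key=lambda name: abs(coefficients[name]), reverse=True)

for name in ranking:
    print(f"{name:>12}: w = {coefficients[name]:+.2f}, "
          f"odds ratio = {odds_ratios[name]:.3f}")
```

With these made-up numbers, a one-unit increase in age multiplies the odds by about 2.23, while a one-unit increase in income multiplies them by about 0.74.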

Disadvantages

- It constructs linear decision boundaries, and it requires the independent variables to be linearly related to the log odds.

- The major limitation of logistic regression is the assumption of linearity between the independent variables and the log odds of the dependent variable.

- More powerful and complex algorithms, such as neural networks, can often outperform it.
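The linear-boundary limitation is easy to see on XOR, a standard toy problem that is not from the original article: no straight line separates its two classes. The sketch below runs plain gradient descent on it; the learning rate and iteration count are arbitrary assumptions.

```python
import math


def sigmoid(z):
    """Logistic function 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


# XOR: class 1 when exactly one input is 1; no straight line separates the classes.
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
y = [0, 1, 1, 0]

# Plain batch gradient descent on the mean log loss, starting from zero weights.
W = [0.0, 0.0]
B = 0.0
learning_rate = 0.5
for _ in range(1000):
    errors = [sigmoid(W[0] * x0 + W[1] * x1 + B) - t for (x0, x1), t in zip(X, y)]
    W[0] -= learning_rate * sum(e * x[0] for e, x in zip(errors, X)) / len(X)
    W[1] -= learning_rate * sum(e * x[1] for e, x in zip(errors, X)) / len(X)
    B -= learning_rate * sum(errors) / len(X)

predictions = [1 if sigmoid(W[0] * x0 + W[1] * x1 + B) >= 0.5 else 0 for x0, x1 in X]
accuracy = sum(p == t for p, t in zip(predictions, y)) / len(y)
print(accuracy)  # → 0.5; no linear boundary can exceed 0.75 on XOR
```

On this data the gradient is exactly zero at the all-zero starting point, and because the log loss is convex that point is already the minimum, so every predicted probability stays at 0.5. A network with one hidden layer can represent XOR, which is one reason more flexible models can outperform logistic regression on such data.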

Conclusion

Logistic regression is one of the traditional machine learning methods. It forms a foundational set of ML approaches alongside algorithms such as linear regression, k-means clustering, principal component analysis, and a few others. Neural networks were developed building on logistic regression: a single neuron with a sigmoid activation computes a logistic regression model. You can use logistic regression effectively even if you are not an ML specialist, which is not the case with many other algorithms. Conversely, it is not possible to become an ML master without a solid understanding of logistic regression.

Arvind Thorat is a Data Science Intern at NPPD.