The big data ecosystem for science: X-ray crystallography

Diffract-and-destroy experiments to accurately determine three-dimensional structures of nano-scale systems can produce 150 TB of data per sample. We review how such Big Data is processed.

By Wahid Bhimji, NERSC

This is part 4, a continuation of Big Data Ecosystem for Science: Physics, LHC, and Cosmology, Big Data Ecosystem for Science: Genomics, and Big Data Ecosystem for Science: Climate Change.

Primary Collaborators: Prabhat, Eli Dart, Michael Wehner, and Dáithí Stone (LBNL)

Introduction

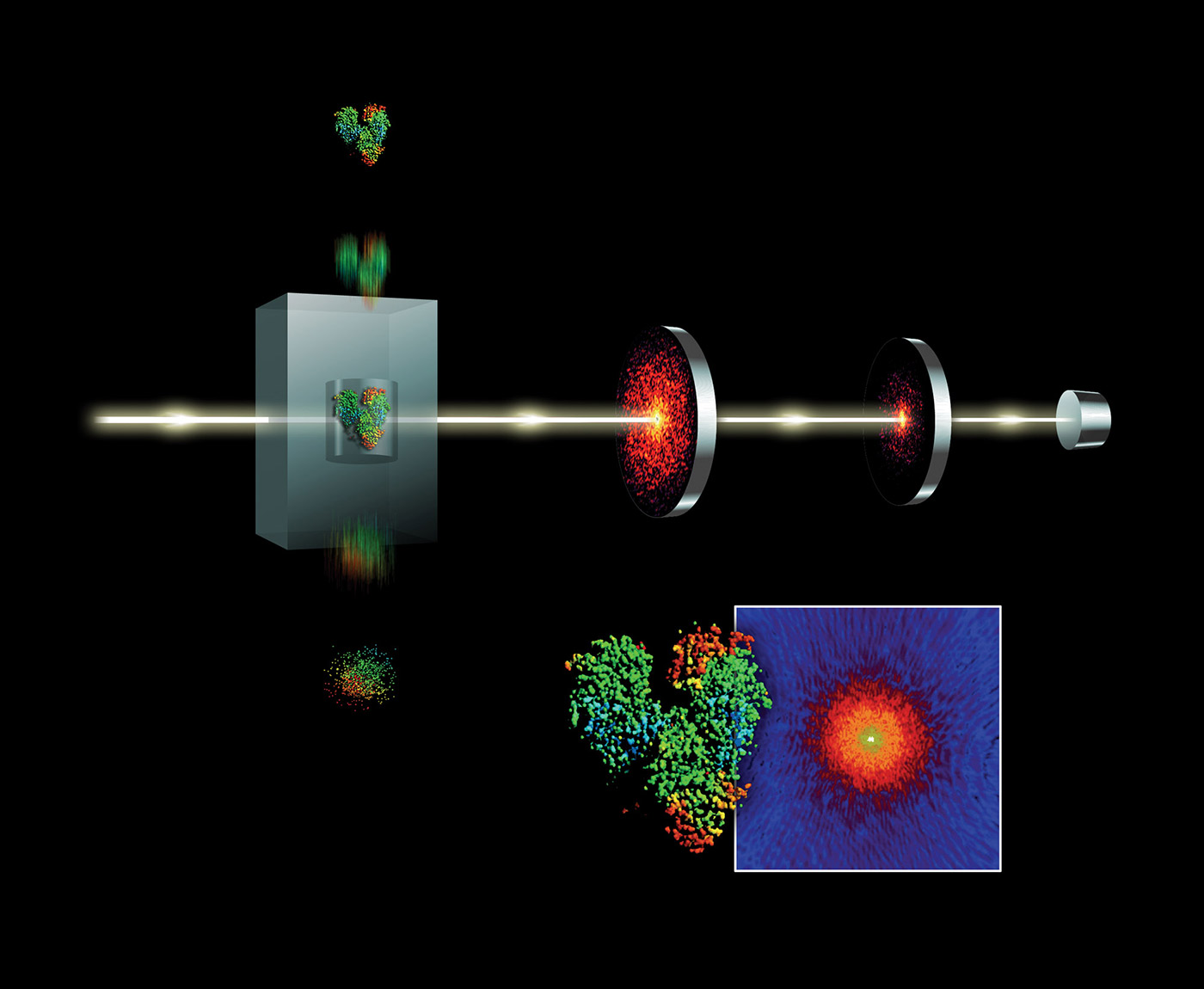

Free-electron lasers (FELs) have many applications. Diffract-and-destroy experiments, such as those done at the Linac Coherent Light Source (LCLS) to accurately determine three-dimensional structures of nano-scale systems, can produce 150 TB of data per sample. Scientists seek out FEL beamlines in part to image biological systems in their native state. Determining the structure of the complex biochemical engines involved in photosynthesis and metabolism is complicated by the fact that these systems do not easily form crystals. However, the fast, bright bursts of light from an FEL make it possible to resolve these structures accurately despite this constraint. Liquid nanocrystalline samples are aerosolized as they are injected into the LCLS beamline, and individual droplets drift into the path of the x-ray beam. As a droplet is destroyed by an x-ray pulse, but before the nuclei can move, a diffraction pattern is registered on a detector. Images from a sequence of these pulses are collected and combined to reconstruct fundamental chemical and biological processes. Diffract-and-destroy methods thus allow imaging of tiny samples (nanocrystallography) without the need to grow large crystals. This data-intensive technique has been applied to imaging studies of photosynthesis and protein chemistry. The use of streaming data analytics and machine learning will become more important in meeting these data challenges. The data rates from future FEL science (see Figure 6) will require fast analytic filters, such as classification on streams of data as they are collected.

Figure 6. A schematic of a diffract-and-destroy experiment at LCLS. X-ray diffraction data (seen in the box outlined in white) is collected to reconstruct and image nano-scale systems. High-resolution detectors and ultrafast x-ray pulses are pushing data collection rates. Source: SLAC National Accelerator Laboratory; used with permission.

Data acquisition and ingestion

The bursty nature of x-ray free-electron laser (XFEL) data is due in part to the high pulse rate of the laser and in part to uncertainties in sample preparation. With a good sample, the data production rate may be close to peak (0.5 GB/s), but it can vary significantly over a 12-hour beamtime shift. Diffractive images acquired on the CSPAD detector are sent through front-end electronics to a local file system, which buffers events (per-pulse data) into 20-GB chunks in a custom binary XTC file format. (XTC-to-HDF conversion is supported.)
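To give a sense of scale, the figures above can be turned into a back-of-the-envelope estimate. The sketch below assumes, purely for illustration, that the peak rate of 0.5 GB/s is sustained for an entire 12-hour shift (real rates vary, as noted) and that decimal units apply (1 TB = 1000 GB). The function and variable names are hypothetical.

```python
PEAK_RATE_GB_PER_S = 0.5   # peak data production rate quoted above
SHIFT_HOURS = 12           # length of one beamtime shift
CHUNK_SIZE_GB = 20         # size of one buffered XTC chunk


def shift_estimate(rate_gb_per_s=PEAK_RATE_GB_PER_S,
                   hours=SHIFT_HOURS,
                   chunk_gb=CHUNK_SIZE_GB):
    """Return (total TB, number of chunks, seconds per chunk) for one shift.

    Assumes a constant rate for the whole shift, so this is an upper bound.
    """
    seconds = hours * 3600
    total_gb = rate_gb_per_s * seconds
    total_tb = total_gb / 1000              # decimal terabytes (assumption)
    n_chunks = total_gb / chunk_gb
    seconds_per_chunk = chunk_gb / rate_gb_per_s
    return total_tb, n_chunks, seconds_per_chunk


total_tb, n_chunks, seconds_per_chunk = shift_estimate()
print(f"{total_tb:.1f} TB per shift, {n_chunks:.0f} chunks, "
      f"one chunk every {seconds_per_chunk:.0f} s")
# prints: 21.6 TB per shift, 1080 chunks, one chunk every 40 s
```

Under these assumptions, a single shift at sustained peak rate would fill a 20-GB chunk roughly every 40 seconds. Actual totals will be lower whenever the sample or beam conditions keep the rate below peak.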

Data storage and transfer

Currently, data sets are stored at LCLS temporarily while users ship their data through transfer nodes. Data management internal to LCLS is handled by the integrated Rule-Oriented Data System (iRODS). Data transfer nodes at each site handle movement of data to NERSC’s Cori system for analysis. The process is not fully automated, but advances are being made through HPC-connected APIs, sponsorship of storage resources across facilities, and workflow containerization. Advances in software-defined networking (SDN) and growing WAN bandwidth from ESnet are important in connecting the instrument to HPC.

Data processing and analysis

XFEL experiments vary widely, and beamlines do not have a single type of analysis. At LCLS, the largest data and computing demands come from nanocrystallography and single particle imaging. In these analyses, streams of images are fed through a series of analysis kernels to find high-quality diffraction data, which are then used to reconstruct or improve a spatial model of the sample. This workflow has a large data movement phase in which images are streamed from the instrument detector to a parallel filesystem. This is followed by compute phases that are dominated by compute-intensive image analysis kernels (Fourier and Winograd transforms). In the case of diffuse x-ray scattering experiments, the image stream may be compared to concurrently running molecular dynamics simulations. Those operating the device benefit from fast feedback analysis, but researchers also conduct multiple offline analyses months after the experiment is completed. Fast feedback analysis can work with reduced or filtered data streams, whereas offline analyses are typically exhaustive over the entire data set.
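The idea of filtering an image stream down to high-quality frames for fast feedback can be sketched in a few lines. The example below is only an illustration and not the analysis code used at LCLS: it stands in a deliberately simple criterion (a frame is kept if enough pixels exceed an intensity threshold) for the real analysis kernels, and the names `hit_filter`, `count_bright_pixels`, `threshold`, and `min_bright` are hypothetical.

```python
from typing import Iterable, Iterator, List

Image = List[List[int]]  # hypothetical stand-in for one detector frame


def count_bright_pixels(image: Image, threshold: int) -> int:
    """Count pixels whose intensity is strictly above the threshold."""
    return sum(1 for row in image for pixel in row if pixel > threshold)


def hit_filter(frames: Iterable[Image], threshold: int = 100,
               min_bright: int = 3) -> Iterator[Image]:
    """Lazily yield only frames with at least `min_bright` bright pixels.

    As a generator, it handles one frame at a time and never holds the
    whole stream in memory, which is the point of a streaming filter.
    """
    for frame in frames:
        if count_bright_pixels(frame, threshold) >= min_bright:
            yield frame


# Tiny synthetic stream: an empty shot, a weak shot, and one "hit".
blank = [[0, 1, 2], [1, 0, 2], [2, 1, 0]]
weak = [[0, 150, 2], [1, 0, 2], [2, 1, 0]]
hit = [[0, 150, 2], [300, 0, 2], [2, 1, 250]]

hits = list(hit_filter([blank, weak, hit]))
print(len(hits))  # prints: 1
```

A fast feedback path could consume only the frames that pass such a filter, while the unfiltered stream is still written to storage for later exhaustive offline analysis.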

The future

The future of FEL science focuses on faster pulse rates of brighter and more coherent photons. An upgrade to LCLS-II in 2019 will increase repetition rates from 120 Hz toward 50 kHz. In the same timeframe, detector resolution will increase four-fold, and sample preparation and delivery technologies will also see advances in throughput. Future FELs are planning for an increase of two to three orders of magnitude in data production and analysis needs. Future compute node architectures suited to image analysis kernels (e.g., hardware accelerators) will greatly accelerate the analysis of such a growing volume of data. An ongoing collaboration between the SLAC National Accelerator Laboratory, ESnet, and Lawrence Berkeley National Laboratory seeks to orchestrate the network, computing, and storage resources to meet this challenge.
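As a rough check on these growth figures, the short calculation below multiplies out the quoted factors. It is a naive estimate that assumes data volume scales linearly with both repetition rate and detector resolution and that every pulse is recorded; neither assumption comes from the article, and the constant names are hypothetical.

```python
import math

CURRENT_RATE_HZ = 120        # current repetition rate quoted above
FUTURE_RATE_HZ = 50_000      # 50 kHz target quoted above
RESOLUTION_FACTOR = 4        # four-fold detector resolution increase

# Growth from the repetition rate alone, then combined with the detector
# upgrade (assumes linear scaling in both, which is a simplification).
rate_factor = FUTURE_RATE_HZ / CURRENT_RATE_HZ
combined_factor = rate_factor * RESOLUTION_FACTOR

print(f"repetition rate alone: x{rate_factor:.0f} "
      f"(10^{math.log10(rate_factor):.1f})")
print(f"with detector upgrade: x{combined_factor:.0f} "
      f"(10^{math.log10(combined_factor):.1f})")
# prints:
# repetition rate alone: x417 (10^2.6)
# with detector upgrade: x1667 (10^3.2)
```

The repetition-rate increase on its own falls between two and three orders of magnitude, and the naive combined figure sits at about three. This is in the same ballpark as the planning range above, although the realized growth depends on how much data is filtered before it is stored.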

This post originally appeared on oreilly.com, organizers of Strata Hadoop World. Republished with permission.
