5 Machine Learning Projects You Should Not Overlook, June 2018

5 Machine Learning Projects You Should Not Overlook, June 2018

Here is a new installment of 5 more machine learning or machine learning-related projects you may not yet have heard of, but may want to consider checking out!

We're back. Again. The "Overlook..." posts have been dormant for a few months, but fret not, here's another installment. We continue on with the modest quest of bringing formidable, lesser-known machine learning projects to a few additional sets of eyes.

This outing is made up exclusively of Python projects, not by intention design, but no doubt influenced by my own biases. While previous iterations have included projects in all sorts of lnauges (R, Go, C++, Scala, Java, etc.), I promise an all R version sometime soon, and will employ some outside assistance when it comes to evaluating those projects (I'm admittedly not very well tuned into the R ecosystem).

Here are 5 hand-picked projects for some potential fresh machine learning ideas.

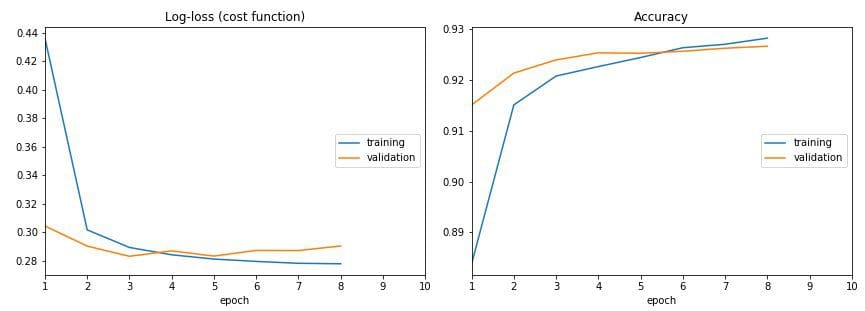

Don't train deep learning models blindfolded! Be impatient and look at each epoch of your training!

A live training loss plot in Jupyter Notebook for Keras, PyTorch and other frameworks. An open source Python package by Piotr Migdał et al.

When used with Keras, Live Loss Plot is a simple callback function.

from livelossplot import PlotLossesKeras

model.fit(X_train, Y_train,

epochs=10,

validation_data=(X_test, Y_test),

callbacks=[PlotLossesKeras()],

verbose=0)

2. Parfit

Out next project comes from Jason Carpenter, a Master's candidate in Data Science at University of San Francisco, and a Machine Learning Engineer Intern at Manifold.

A package for parallelizing the fit and flexibly scoring of sklearn machine learning models, with visualization routines.

Once imported, you can use bestFit() or other functions freely.

A code example:

from parfit import bestFit # Necessary if you wish to use bestFit

# Necessary if you wish to run each step sequentially

from parfit.fit import *

from parfit.score import *

from parfit.plot import *

from parfit.crossval import *

grid = {

'min_samples_leaf': [1, 5, 10, 15, 20, 25],

'max_features': ['sqrt', 'log2', 0.5, 0.6, 0.7],

'n_estimators': [60],

'n_jobs': [-1],

'random_state': [42]

}

paramGrid = ParameterGrid(grid)

best_model, best_score, all_models, all_scores = bestFit(RandomForestClassifier(), paramGrid,

X_train, y_train, X_val, y_val, # nfolds=5 [optional, instead of validation set]

metric=roc_auc_score, greater_is_better=True,

scoreLabel='AUC')

print(best_model, best_score)

3. Yellowbrick

Yellowbrick is "Visual analysis and diagnostic tools to facilitate machine learning model selection." In more detail:

Yellowbrick is a suite of visual diagnostic tools called "Visualizers" that extend the scikit-learn API to allow human steering of the model selection process. In a nutshell, Yellowbrick combines scikit-learn with matplotlib in the best tradition of the scikit-learn documentation, but to produce visualizations for your models!

Check out the Github repo for examples, and the documentation for much more.

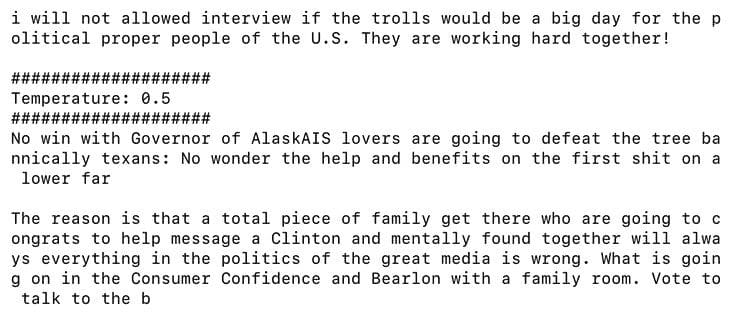

4. textgenrnn

textgenrnn brings an additional layer of abstraction to text generation tasks, and aims to allow you to "easily train your own text-generating neural network of any size and complexity on any text dataset with a few lines of code."

The project is built on top of Keras and boasts the following select features:

- A modern neural network architecture which utilizes new techniques as attention-weighting and skip-embedding to accelerate training and improve model quality.

- Able to train on and generate text at either the character-level or word-level.

- Able to configure RNN size, the number of RNN layers, and whether to use bidirectional RNNs.

- Able to train on any generic input text file, including large files.

- Able to train models on a GPU and then use them to generate text with a CPU.

- Able to utilize a powerful CuDNN implementation of RNNs when trained on the GPU, which massively speeds up training time as opposed to typical LSTM implementations.

textgenrnn is incredibly easy to get up and running with:

from textgenrnn import textgenrnn

textgen = textgenrnn()

textgen.train_from_file('hacker-news-2000.txt', num_epochs=1)

textgen.generate()

You can find more info and examples on the Github repo linked above.

5. Magnitude

Magnitude is "a fast, simple vector embedding utility library."

A feature-packed Python package and vector storage file format for utilizing vector embeddings in machine learning models in a fast, efficient, and simple manner developed by Plasticity. It is primarily intended to be a simpler / faster alternative to Gensim, but can be used as a generic key-vector store for domains outside NLP.

The repo provides links to a variety of popular embedding models which have been prepared in the .magnitude format for usage, and also includes instructions on converting any other word embeddings file to the same format.

How to import?

from pymagnitude import *

vectors = Magnitude("/path/to/vectors.magnitude")

Right to the point.

The Github repo is filled with more info, including everything you know to get up and running with this simplified library for using pre-trained word embeddings.

Related:

- 5 Machine Learning Projects You Should Not Overlook, Feb 2018

- 5 Machine Learning Projects You Can No Longer Overlook

- 5 More Machine Learning Projects You Can No Longer Overlook

5 Machine Learning Projects You Should Not Overlook, June 2018

5 Machine Learning Projects You Should Not Overlook, June 2018