Statistics, Causality, and What Claims are Difficult to Swallow: Judea Pearl debates Kevin Gray

While KDnuggets takes no side, we present the informative and respectful back and forth as we believe it has value for our readers. We hope that you agree.

Recently, renowned computer scientist and artificial intelligence researcher Judea Pearl released his latest book, titled "The Book of Why: The New Science of Cause and Effect," which he co-authored with Dana Mackenzie. While the book has quickly become a best seller, it has also struck a chord with some segment of readers.

After its release, marketing scientist and analytics consultant (and regular KDnuggets contributor) Kevin Gray penned what could be considered both a review and a retort to the book, which was run on KDnuggets. Pearl then responded to Gray, who then responded in turn, after which Pearl responded... you get the picture.

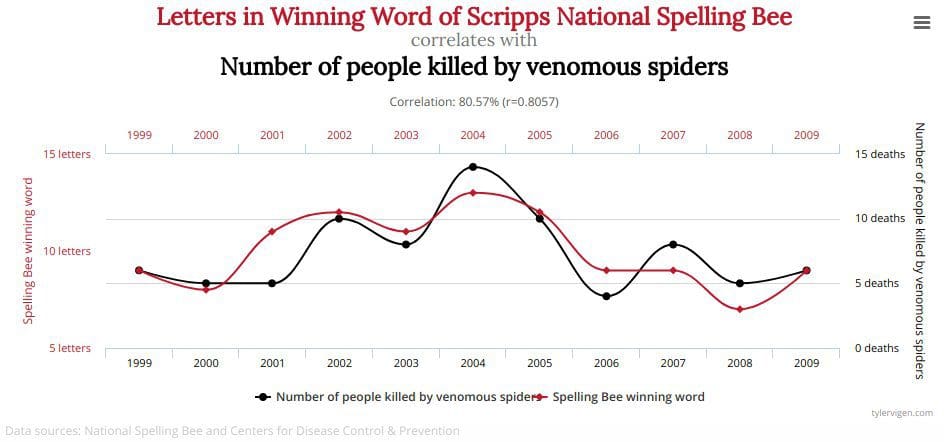

Image source: Spurious Correlations

This post includes the debate which has arisen from Kevin's original response article.

Reader Carlos Cinelli pointed out that Judea Pearl replied to Kevin Gray's article, with the response reproduced below.

Kevin’s prediction that many statisticians may find my views “odd or exaggerated” is accurate. This is exactly what I have found in numerous conversations I have had with statisticians in the past 30 years. However, if you examine my views more closely, you will find that they are not as whimsical or thoughtless as they may appear at first sight.

Of course many statisticians will scratch their heads and ask: “Isn’t this what we have been doing for years, though perhaps under a different name or not name at all?” And here lies the essence of my views. Doing it informally, under various names, while refraining from doing it mathematically under uniform notation has had a devastating effect on progress in causal inference, both in statistics and in the many disciplines that look to statistics for guidance. The best evidence for this lack of progress is the fact that, even today, only a small percentage of practicing statisticians can solve any of the causal toy problems presented in the Book of Why.

Take for example:

- Selecting a sufficient set of covariates to control for confounding

- Articulating assumptions that would enable consistent estimates of causal effects

- Finding if those assumptions are testable

- Estimating causes of effect (as opposed to effects of cause)

More and more.

Every chapter of The Book of Why brings with it a set of problems that statisticians were deeply concerned about, and have been struggling with for years, albeit under the wrong name (e.g., ANOVA or MANOVA) “or not name at all.” The results are many deep concerns but no solution.

A valid question to be asked at this point is what gives humble me the audacity to state so sweepingly that no statistician (in fact no scientist) was able to properly solve those toy problems prior to the 1980s. How can one be so sure that some bright statistician or philosopher did not come up with the correct resolution of Simpson’s paradox or a correct way to distinguish direct from indirect effects? The answer is simple: we can see it in the syntax of the equations that scientists used in the 20th century. To properly define causal problems, let alone solve them, requires a vocabulary that resides outside the language of probability theory. This means that all the smart and brilliant statisticians who used joint density functions, correlation analysis, contingency tables, ANOVA, etc., and did not enrich them with either diagrams or counterfactual symbols have been laboring in vain, orthogonally to the question: you can’t answer a question if you have no words to ask it. (Book of Why, page 10)

It is this notational litmus test that gives me the confidence to stand behind each one of the statements that you were kind enough to cite from the Book of Why. Moreover, if you look closely at this litmus test, you will find that it is not just notational but conceptual and practical as well. For example, Fisher’s blunder of using ANOVA to estimate direct effects is still haunting the practices of present-day mediation analysts. Numerous other examples are described in the Book of Why and I hope you weigh seriously the lesson that each of them conveys.

Yes, many of your friends and colleagues will be scratching their head saying: “Hmmm... Isn’t this what we have been doing for years, though perhaps under a different name or not name at all?” What I hope you will be able to do after reading “The Book of Why” is to catch some of the head-scratchers and tell them: “Hey, before you scratch further, can you solve any of the toy problems in the Book of Why?” You will be surprised by the results — I was!

For me, solving problems is the test of understanding, not head scratching. That is why I wrote this Book.

Kevin Gray then noted Judea Pearl's reply and provided the follow-up response below.

I appreciate Judea Pearl taking the time to read and respond to my blog post. I’m also flattered, as I have been an admirer and follower of Pearl for many years. For some reason - this has happened before - I am having difficulty with Disqus and have asked Matt Mayo to post this (admittedly hastily written) response on my behalf.

In short, I do not find Pearl’s comments regarding my post substantive, as he essentially restates views I have questioned in the piece.

The suggestion of his opening comment is that I am only superficially acquainted with his views. Obviously, I do not feel this is the case. Also, nowhere in the article do I describe any of Pearl’s views as whimsical or thoughtless. I take them very seriously, or I wouldn’t have bothered writing my blog article in the first place.

Like many other statisticians, including academics, I feel his characterizations of statisticians are inaccurate, however, and that his approach to causation is overly simplistic. That is the essence of my views (and, as I indicated, I am not alone). There are many possible reasons for these discrepancies, and I will not speculate here as to Why.

That “you can’t answer a question if you have no words to ask it” is certainly true. Practicing statisticians - as opposed to theoretically focused academics - work closely with their clients, who are specialists in a specific area. The language of that field largely defines the language of causation used in a particular context. That is why there has been no universal causal framework, and why statisticians in psychology, healthcare and economics, for instance, have different approaches and often use different language and mathematics. I myself draw upon all three, as well as Pearl’s. The utility of each, in my 30+ years’ experience, is case-by-case. There are also ad hoc approaches (some questionable).

He claims that “…even today, only a small percentage of practicing statisticians can solve any of the causal toy problems presented in the Book of Why” yet provides no evidence of this. They are not difficult, and claims are not evidence. Furthermore, the examples he gives in “Every chapter of The Book of Why” of the failure of statistics are, in general, not compelling and were part of the motivation for the article in the first place.

Simpson’s paradox in its various forms is something that generations of researchers and statisticians have been trained to look out for. And we do. There is nothing mysterious about it. (This debate regarding Simpson’s paradox, which appeared in The American Statistician in 2014, and which I link in the article, hopefully will be visible to readers who are not ASA members.)

Mediation, often confused with moderation, can be a tough nut to crack. Simple path diagrams or DAGs with only a few variables will frequently be inadequate and may badly mislead us. In the real world of a statistician, there are frequently a vast number of potentially relevant variables, including those we are unable to observe. There are also critical variables that, for many reasons, are not included in the data we have to work with and cannot be obtained. Measurement error may be substantial (this is not rare), and different causal mechanisms for different classes of subjects (e.g., consumers) may be in operation. These classes are often unobserved and unknown to us.

I should make clear that I greatly appreciate Pearl’s efforts at bringing the analysis of causation into the spotlight. I would urge statisticians to read him but also consult (as I do) with other veteran practitioners and academics for their views on his writings and on the analysis of causation. There are many ways to approach causation and Pearl’s is but one. As Pearl himself will be aware, to this day, philosophers disagree about what causation is, thus to suggest he has found the answer to it is not plausible in my view. Real statisticians know better than to look for a Silver Bullet.

Pearl then followed up with this response:

Dear Kevin,

I am not suggesting that you are only superficially acquainted with my works. You actually show much greater acquaintance than most statisticians in my department, and I am extremely appreciative that you are taking the time to comment on The Book of Why. You are showing me what other readers with your perspective would think about the Book, and what they would find unsubstantiated or difficult to swallow. So let us go straight to these two points (i.e., unsubstantiated and difficult to swallow) and give them an in-depth examination.

You say that I have provided no evidence for my claim: “Even today, only a small percentage of practicing statisticians can solve any of the causal toy problems presented in the Book of Why.” I believe that I did provide such evidence, in each of the Book’s chapters, and that the claim is valid once we agree on what is meant by “solve.”

Let us take the first example that you bring, Simpson’s paradox, which is treated in Chapter 6 of the Book, and which is familiar to every red-blooded statistician. I characterized the paradox in these words: “It has been bothering statisticians for more than sixty years – and it remains vexing to this very day” (p. 201). This was, as you rightly noticed, a polite way of saying: “Even today, the vast majority of statisticians cannot solve Simpson’s paradox,” a fact which I strongly believe to be true.

You find this statement hard to swallow, because: “generations of researchers and statisticians have been trained to look out for it [Simpson’s Paradox],” an observation that seems to contradict my claim. But I beg you to note that “trained to look out for it” does not make the researchers capable of “solving it,” namely capable of deciding what to do when the paradox shows up in the data.

This distinction appears vividly in the debate that took place in 2014 on the pages of The American Statistician, which you and I cite. However, whereas you see the disagreements in that debate as evidence that statisticians have several ways of resolving Simpson’s paradox, I see it as evidence that they did not even come close. In other words, none of the other participants presented a method for deciding whether the aggregated data or the segregated data give the correct answer to the question: “Is the treatment helpful or harmful?”
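To make the aggregated-versus-segregated distinction concrete, here is a minimal Python sketch. It is an illustration added for readers, not something taken from the book or from either correspondent, and every number in it is hypothetical: a made-up study with two severity strata and two arms. The function names are likewise invented for this example.

```python
# Hypothetical counts, invented for illustration: (recovered, total)
# for each severity stratum and each arm of a made-up study.
COUNTS = {
    "mild":   {"treated": (90, 100),   "control": (800, 1000)},
    "severe": {"treated": (300, 1000), "control": (20, 100)},
}


def segregated_rates(counts):
    """Recovery rate per arm within each stratum."""
    return {
        stratum: {arm: rec / tot for arm, (rec, tot) in arms.items()}
        for stratum, arms in counts.items()
    }


def aggregated_rates(counts):
    """Recovery rate per arm after pooling all strata together."""
    pooled = {}
    for arms in counts.values():
        for arm, (rec, tot) in arms.items():
            prev_rec, prev_tot = pooled.get(arm, (0, 0))
            pooled[arm] = (prev_rec + rec, prev_tot + tot)
    return {arm: rec / tot for arm, (rec, tot) in pooled.items()}


if __name__ == "__main__":
    for stratum, rates in segregated_rates(COUNTS).items():
        print(stratum, rates)
    print("pooled", aggregated_rates(COUNTS))
```

With these made-up counts the treated arm does better inside each stratum and worse in the pooled table. The arithmetic alone does not say which comparison answers “helpful or harmful?”, which is exactly the question under dispute.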

Please pay special attention to the article by Keli Liu and Xiao-Li Meng, both from Harvard’s department of statistics (Xiao-Li is a senior professor and a Dean), so they cannot be accused of misrepresenting the state of statistical knowledge in 2014. Please read their paper carefully and judge for yourself whether it would help you decide whether treatment is helpful or not, in any of the examples presented in the debate.

It would not!! And how do I know? I am listening to their conclusions:

- They disavow any connection to causality (p.18), and

- They end up with the wrong conclusion. Quoting: “less conditioning is most likely to lead to serious bias when Simpson’s Paradox appears.” (p.17) Simpson himself brings an example where conditioning leads to more bias, not less.

I don’t blame Liu and Meng for erring on this point; it is not entirely their fault (Rosenbaum and Rubin made the same error). The correct solution to Simpson’s dilemma rests on the back-door criterion, which is almost impossible to articulate without the aid of DAGs. And DAGs, as you are probably aware, are forbidden from entering a 5-mile no-fly zone around Harvard.

So, here we are. Most statisticians believe that everyone knows how to “watch for” Simpson’s paradox, and those who seek an answer to: “Should we treat or not?” realize that “watching” is far from “solving.” Moreover, they also realize that there is no solution without stepping outside the comfort zone of statistical analysis and entering the forbidden city of causation and graphical models.
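For readers unfamiliar with the back-door criterion mentioned above, the following Python sketch shows the adjustment it licenses. This is an illustration added for readers and not code from the book. It assumes, purely for the sake of the example, a covariate Z (severity) that satisfies the back-door criterion relative to treatment X and recovery, in which case P(recovered | do(X = x)) can be estimated as the sum over z of P(recovered | x, z) · P(z). The counts and function names are hypothetical.

```python
# Hypothetical counts, invented for illustration: (recovered, total)
# per stratum of a covariate Z (severity) and per arm X.
COUNTS = {
    "mild":   {"treated": (90, 100),   "control": (800, 1000)},
    "severe": {"treated": (300, 1000), "control": (20, 100)},
}


def backdoor_adjusted_rate(counts, arm):
    """Estimate P(recovered | do(X = arm)) = sum_z P(recovered | arm, z) * P(z).

    ASSUMPTION: Z satisfies the back-door criterion, e.g. Z is a common
    cause of treatment choice and recovery. The data cannot confirm this;
    it has to come from a causal diagram.
    """
    grand_total = sum(tot for arms in counts.values() for _, tot in arms.values())
    estimate = 0.0
    for arms in counts.values():
        stratum_total = sum(tot for _, tot in arms.values())
        rec, tot = arms[arm]
        estimate += (rec / tot) * (stratum_total / grand_total)
    return estimate


def pooled_rate(counts, arm):
    """Unadjusted P(recovered | X = arm), ignoring Z.

    This is the appropriate causal estimate only under a different
    ASSUMPTION, e.g. Z is a mediator (X -> Z -> recovery) and nothing
    else confounds X and recovery.
    """
    rec = sum(arms[arm][0] for arms in counts.values())
    tot = sum(arms[arm][1] for arms in counts.values())
    return rec / tot


if __name__ == "__main__":
    adjusted = backdoor_adjusted_rate(COUNTS, "treated") - backdoor_adjusted_rate(COUNTS, "control")
    pooled = pooled_rate(COUNTS, "treated") - pooled_rate(COUNTS, "control")
    print(f"effect if Z is a confounder (adjusted): {adjusted:+.3f}")
    print(f"effect if Z is a mediator (pooled):     {pooled:+.3f}")
```

The same table yields estimates of opposite sign depending on which causal story is assumed. The sketch does not decide between the two stories; that choice comes from the diagram, not from the counts.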

One thing I do agree with you on is your warning about the implausibility of the Causal Revolution. Quoting: “to this day, philosophers disagree about what causation is, thus to suggest he has found the answer to it is not plausible”. It is truly not plausible that someone, especially a semi-outsider, has found a Silver Bullet. It is hard to swallow. That is why I am so excited about the Causal Revolution and that is why I wrote the book. The Book does not offer a Silver Bullet to every causal problem in existence, but it offers a solution to a class of problems that centuries of statisticians and philosophers tried and could not crack. It is implausible, I agree, but it happened. It happened not because I am smarter but because I took Sewall Wright’s idea seriously and milked it to its logical conclusions as much as I could.

It took quite a risk on my part to sound pretentious and call this development a Causal Revolution. I thought it was necessary. Now I am asking you to take a few minutes and judge for yourself whether the evidence does not justify such a risky characterization.

It would be nice if we could alert practicing statisticians, deeply invested in the language of statistics, to the possibility that paradigm shifts can occur even in the 21st century, and that centuries of unproductive debates do not make such shifts impossible.

You were right to express doubt and disbelief in the need for a paradigm shift, as would any responsible scientist in your place. The next step is to let the community explore:

- How many statisticians can actually answer Simpson’s question, and

- How to make that number reach 90%.

I believe The Book of Why has already doubled that number, which is some progress. It is in fact something that I was not able to do in the past thirty years through laborious discussions with the leading statisticians of our time.

It is some progress, let’s continue,

Judea

And most recently, as of publication, Gray has provided us with the following.

Thank you once again for taking the time to respond to my blog post and earlier response. And thank you for the kind words, especially given that UCLA is on the cutting edge of both SEM and AI. No no-fly zones there.

I feel we are fighting the same battle, albeit in different ways, from different angles and often against different “enemies.” I tell people I’m obsessed with causation but don’t know why. I approach this battle from the perspective of an applied marketing researcher and “data scientist” (a term I’m uncomfortable with and don’t actually understand myself). To give you an idea where I’m “coming from,” let me reproduce a post I made on LinkedIn just a few hours ago:

Just a few more thoughts on causation...

Knowing the who, what, when, where, how, how often, etc., is vital in marketing and marketing research. Predictive analytics is also useful in many instances and for many organizations.

However, knowing the why is also often essential, and helps us better understand and predict the who, what, when, where, how, how often, etc.

Analysis of causation is extremely challenging, though, and some would say impossible.

The orthodoxy is that the best approach is through randomized experiments. While I concur, experiments in many cases are infeasible or unethical. They also can be botched or be so artificial that they do not generalize to real world conditions. They may also fail to replicate. They are not magic.

Non-experimental research may be our only option in many instances. But this does not mean data dredging or otherwise shoddy research. There are better and worse ways to conduct non-experimental research. That's the hitch - it ain't easy to get right and mistakes can prove very costly.

My last point is most important, I feel. Some folks seem to have misinterpreted your writings as suggesting theory and judgement are irrelevant and that the computer can find the “best model” automatically. Shotgun empiricism in its most modern form… This is exacerbated by point-and-click and click-and-drag software which is arguably easier to use than Microsoft Word. Shotguns (and sometimes DAGs) in the hands of children…

In the big data age, people in my sort of role are sometimes tasked with determining the relative importance of thousands of variables, sometimes individually for each consumer. It is not generally recognized that this is causal analysis. At the other extreme, sweeping causal implications are sometimes drawn based on a few focus groups. Again, this is frequently not recognized as causal research.

Regarding Simpson’s paradox, I’ll let you continue to slug it out with your academic colleagues. I approach this case-by-case with the data on hand and according to the objectives of the research. Surely, some statisticians and researchers who should know better drop the ball on this. To be clear, I am referring to practical solutions, not to theoretical mathematical solutions. While not personally acquainted with Professor Meng, I have watched several of his lectures on YouTube and read a few of his papers. He has much to say regarding common big data fallacies.

In the applied world, I do note some people with excellent math and programming skills who are, nevertheless, not so hot at using statistics to solve real-world problems. This is not an original observation. Perhaps the ASA, RSS and other professional organizations would be interested in periodic skills assessments, purely voluntary and with the aim of professional development?

I imagine an on-line quiz with a small number of applied stats questions (not math, except for some essential areas). It would be confidential. As an incentive, participants would be shown the correct answers after taking the test. Over time, participants would be able to get a sense of where they are strong and where they need to improve. We would also have some empirical evidence (if not from a truly representative sample) regarding the level of skill in key areas of practicing statisticians and researchers, which would be publishable. I would hope you would contribute some of the questions!

For statisticians and researchers in any field with a good background in stats, I regard Causality as must reading. The Book of Why, I feel, also has value, as I also noted. You’ll be familiar of course with Probabilistic Graphical Models (Koller and Friedman), and I understand Professors Fenton and Neil will be publishing a new edition of Risk Assessment and Decision Analysis. Since you wrote the preface of the first edition, you obviously also feel this excellent book is worth reading.

So, thank you once again for your many contributions to many fields over the course of your long and distinguished career. For me, unfortunately, it’s time to put on the hard hat and get back to work.

Kind regards,

Kevin
