Text Classification & Embeddings Visualization Using LSTMs, CNNs, and Pre-trained Word Vectors

In this tutorial, I classify reviews from the Yelp round-10 dataset. After processing the review comments, I train three models in three different ways and obtain three sets of word embeddings.

By Sabber Ahamed, Computational Geophysicist and Machine Learning Enthusiast

Editor's note: This post summarizes the 3 currently-published posts in this series, while a fourth and final installment is soon on the way.

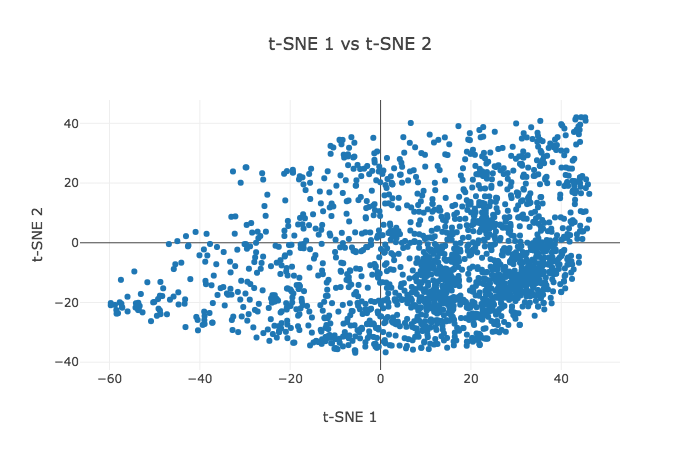

In this tutorial, I classify reviews from the Yelp round-10 dataset. The reviews contain a lot of metadata that can be mined and used to infer meaning, business attributes, and sentiment. For simplicity, I classify the review comments into two classes: positive or negative. Reviews with more than three stars are regarded as positive, while reviews with three stars or fewer are negative. The problem is therefore a supervised learning task. To build and train the models, I first clean the text and convert each review to a sequence of integer word indices. Each review comment is limited to 50 words: texts shorter than 50 words are padded with zeros, and longer ones are truncated. After processing the review comments, I train three models in three different ways and obtain three sets of word embeddings.
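The labeling and padding rules described above can be sketched in plain Python. This is only an illustration, not the code used in the series: the helper names, the regex tokenizer, and the choice to pad and truncate at the front of the sequence are all assumptions (the text does not say which side is padded).

```python
import re
from collections import Counter

MAX_LEN = 50        # words per review, as stated in the text
MAX_WORDS = 20000   # vocabulary size used later in the series


def label_from_stars(stars):
    """Return 1 (positive) for more than three stars, otherwise 0 (negative)."""
    return 1 if stars > 3 else 0


def tokenize(text):
    """Lowercase and split into word tokens (a simplistic stand-in for text cleaning)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def build_vocab(token_lists, max_words=MAX_WORDS):
    """Map the most frequent words to ids 1..max_words-1; id 0 is reserved for padding."""
    counts = Counter(tok for toks in token_lists for tok in toks)
    return {word: i + 1 for i, (word, _) in enumerate(counts.most_common(max_words - 1))}


def to_padded_sequence(tokens, vocab, max_len=MAX_LEN):
    """Convert tokens to ids, keep the last max_len ids, and left-pad with zeros."""
    ids = [vocab[t] for t in tokens if t in vocab]
    ids = ids[-max_len:]                      # assumption: truncate from the front
    return [0] * (max_len - len(ids)) + ids   # assumption: pad at the front


# Two made-up reviews with star ratings, for illustration only.
reviews = [("Great food and great service!", 5), ("Terrible. Never again.", 2)]
tokens = [tokenize(text) for text, _ in reviews]
vocab = build_vocab(tokens)
X = [to_padded_sequence(t, vocab) for t in tokens]
y = [label_from_stars(stars) for _, stars in reviews]
```

Every row of `X` has exactly 50 entries regardless of the review length, which is what lets the reviews be stacked into a single integer array for the network.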

Part 1: Text Classification Using LSTM and visualize Word Embeddings

In this part, I build a neural network with an LSTM layer; the word embeddings are learned while fitting the network on the classification problem.

The network starts with an embedding layer. The layer lets the system expand each token into a larger vector, allowing the network to represent a word in a meaningful way. The layer takes 20000 as its first argument, which is the size of our vocabulary, and 100 as its second argument, which is the dimension of the embeddings. The third parameter is the input_length of 50, which is the length of each comment sequence.
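To make the three numbers concrete, here is a small NumPy sketch of what an embedding layer computes: a table lookup that turns each integer token id into a row of a weight matrix. The random weights and the `embed` helper are assumptions for illustration; in the actual network the weights are learned during training.

```python
import numpy as np

VOCAB_SIZE = 20000  # size of the vocabulary (first argument in the text)
EMBED_DIM = 100     # dimension of each word vector (second argument)
SEQ_LEN = 50        # length of each padded review (input_length)

rng = np.random.default_rng(0)

# One row per word id. Random here; a trained network would learn these values.
embedding_matrix = rng.normal(scale=0.05, size=(VOCAB_SIZE, EMBED_DIM)).astype(np.float32)


def embed(batch_ids, weights):
    """Replace every token id with its row of `weights`."""
    return weights[batch_ids]


# A hypothetical batch of 4 reviews, each a sequence of 50 word ids.
batch = rng.integers(0, VOCAB_SIZE, size=(4, SEQ_LEN))
embedded = embed(batch, embedding_matrix)
print(embedded.shape)  # (4, 50, 100)
```

The layer therefore holds 20000 x 100 = 2,000,000 weights, and each review leaves it as a 50 x 100 matrix that the LSTM reads one row at a time.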

Part 2: Text Classification Using CNN, LSTM and visualize Word Embeddings

In this part, I add a 1D convolutional layer to the LSTM network to reduce the training time.

The LSTM model worked well. However, it takes a long time to train for three epochs. One way to speed up training is to add a convolutional layer to the network. Convolutional neural networks (CNNs) come from image processing. They pass a “filter” over the data and calculate a higher-level representation. They have been shown to work surprisingly well for text, even though they have none of the sequence-processing ability of LSTMs.
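The NumPy sketch below shows what passing a filter over an embedded review looks like, and one plausible reason it can shorten training. It assumes the convolution is followed by max pooling, which the text above does not state; the 64 filters, kernel width of 5, and pool size of 4 are likewise illustrative values, not taken from the series.

```python
import numpy as np


def conv1d_relu(x, kernels, bias):
    """Slide each filter over the sequence (no padding) and apply ReLU.

    x:       (seq_len, in_dim) embedded review
    kernels: (kernel_size, in_dim, n_filters)
    returns: (seq_len - kernel_size + 1, n_filters)
    """
    k = kernels.shape[0]
    steps = x.shape[0] - k + 1
    out = np.stack([
        np.tensordot(x[t:t + k], kernels, axes=([0, 1], [0, 1]))
        for t in range(steps)
    ]) + bias
    return np.maximum(out, 0.0)


def max_pool1d(x, pool):
    """Keep the maximum of every non-overlapping block of `pool` time steps."""
    steps = x.shape[0] // pool
    return x[:steps * pool].reshape(steps, pool, x.shape[1]).max(axis=1)


rng = np.random.default_rng(0)
x = rng.normal(size=(50, 100))                      # one review: 50 words x 100 dims
kernels = rng.normal(scale=0.1, size=(5, 100, 64))  # hypothetical: 64 filters of width 5
bias = np.zeros(64)

features = conv1d_relu(x, kernels, bias)
pooled = max_pool1d(features, 4)
print(features.shape, pooled.shape)  # (46, 64) (11, 64)
```

Under these assumptions an LSTM placed after the pooling step would process 11 time steps per review instead of 50, which is where the saving in training time would come from.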

Part 3: Text Classification Using CNN, LSTM and Pre-trained Glove Word Embeddings

In part 3, I use the same network architecture as in part 2, but use the pre-trained 100-dimensional GloVe word embeddings as the initial input.

In this part, I use pre-trained GloVe word embeddings. They were trained on a corpus of six billion tokens (words) with a vocabulary of 400 thousand words. GloVe is available with embedding vectors of 50, 100, 200 and 300 dimensions; I chose the 100-dimensional version. I also want to see how the model behaves when the pre-trained word weights are not updated, so I set the trainable attribute of the embedding layer to False.
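As a rough sketch of how pre-trained vectors end up in an embedding layer: GloVe files are plain text with one word per line followed by its vector components, so they can be parsed into a dictionary and copied into a weight matrix indexed by word id. The helper names, the tiny 4-dimensional stand-in data, and the `word_index` mapping below are assumptions for illustration; the real file would supply 100 values per word.

```python
import io
import numpy as np


def load_glove(handle):
    """Parse lines of the form 'word v1 v2 ... vd' into {word: vector}."""
    vectors = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        if len(parts) < 2:
            continue  # skip blank or malformed lines
        vectors[parts[0]] = np.asarray(parts[1:], dtype=np.float32)
    return vectors


def build_embedding_matrix(word_index, vectors, dim):
    """Row i holds the pre-trained vector of the word with id i.

    Row 0 (padding) and words missing from the pre-trained set stay all zeros.
    """
    matrix = np.zeros((max(word_index.values()) + 1, dim), dtype=np.float32)
    for word, idx in word_index.items():
        vec = vectors.get(word)
        if vec is not None:
            matrix[idx] = vec
    return matrix


# Tiny in-memory stand-in for a GloVe file (4 dimensions instead of 100).
fake_glove = io.StringIO("good 0.1 0.2 0.3 0.4\nbad -0.1 -0.2 -0.3 -0.4\n")
vectors = load_glove(fake_glove)

# Hypothetical tokenizer output: word -> integer id (0 is reserved for padding).
word_index = {"good": 1, "bad": 2, "yelpish": 3}
matrix = build_embedding_matrix(word_index, vectors, dim=4)
print(matrix.shape)  # (4, 4)
```

A matrix built this way would be used as the initial weights of the embedding layer; keeping the layer non-trainable means these rows are never changed by backpropagation, so words absent from the pre-trained set remain zero vectors.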

Part 4: (Not yet published)

In part 4, I use word2vec to learn word embeddings.

Bio: Sabber Ahamed is the founder of xoolooloo.com and a computational geophysicist and machine learning enthusiast.
