What is Text Classification?

We will define text classification, how it works, some of its most known algorithms, and provide data sets that might help start your text classification journey.

What is Text Classification?

Text Classification is the process of categorizing text into one or more different classes to organize, structure, and filter into any parameter. For example, text classification is used in legal documents, medical studies and files, or as simple as product reviews. Data is more important than ever; companies are spending fortunes trying to extract as many insights as possible.

With text/document data being much more abundant than other data types, new methods of utilizing them are imperative. Since data is inherently unstructured and extremely plentiful, organizing data to understand it in digestible ways can drastically improve its value. Using Text Classification with Machine Learning can automatically structure relevant text in a faster and more cost-effective way.

We will define text classification, how it works, some of its most known algorithms, and provide data sets that might help start your text classification journey.

Why Use Machine Learning Text Classification?

- Scale: Manual data entry, analysis, and organizing are tedious and slow. Machine Learning allows for an automatic analysis that can be applied to datasets no matter how big or small.

- Consistency: Human error occurs due to fatigue and desensitization to material in the dataset. Machine learning increases the scalability and drastically improves accuracy due to the unbiased nature and consistency of the algorithm.

- Speed: Data sometimes may need to be accessed and organized quickly. A machine-learned algorithm can parse through data to deliver information in a digestible manner.

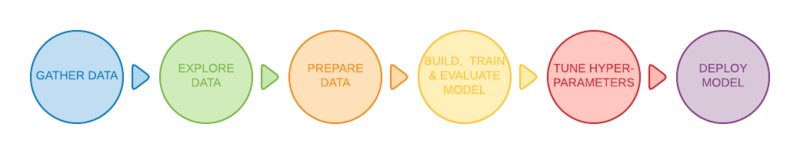

Getting Started With 6 Universal Steps

Some basic methods can classify different text documents to a certain degree, but the most commonly used methods involve machine learning. There are six basic steps that a text classification model goes through before being deployed.

1. Providing a High-Quality Dataset

Datasets are raw data chunks used as the data source to fuel our model. In the case of text classification, supervised machine learning algorithms are used, thus providing our machine learning model with labeled data. Labeled data is data predefined for our algorithm with an informative tag attached to it.

2. Filtering and processing the data

As machine learning models can only understand numerical values, tokenization and word embedding of the provided text will be necessary for the model to correctly recognize data.

Tokenization is the process of splitting text documents into smaller pieces called tokens Tokens can be represented as the entire word, a sub-word, or an individual character. For example, tokenizing the work smarter can be done as so:

- Token Word: Smarter

- Token Subword: Smart-er

- Token Character: S-m-a-r-t-e-r

Tokenization is important because text classification models can only process data on a token-based level and can not understand and process complete sentences. Further processing on the given raw dataset would be required for our model to easily digest the given data. Remove unnecessary features, filtering out null and infinite values, and more. Shuffling the entire dataset would help prevent any biases during the training phase.

3. Splitting our dataset into a training and testing datasets

We want to train out data on 80% of the dataset while reserving 20% of the data set to test the algorithm for accuracy.

4. Train the Algorithm

By running our model with the training dataset, the algorithm can categorize the provided texts into different categories by identifying hidden patterns and insights.

5. Testing and checking the model's performance

Next, test the model’s integrity using the testing data set as mentioned in step 3. The testing dataset will be unlabeled to test the model’s accuracy against the actual results. To accurately test the model the testing dataset must contain new test cases (different data than the previous training dataset) to avoid overfitting our model.

6. Tuning the model

Tune the machine learning model by adjusting the model's different hyperparameters without overfitting or creating a high variance. A hyperparameter is a parameter whose value controls the learning process of the model. You're now ready to deploy!

How Does Text Classification Work?

Word Embedding

In the filtering process mentioned earlier, machine and deep learning algorithms can only understand numerical values, forcing us to perform some word embedding techniques on our data set. Word embedding is the process of representing words into real value vectors that can encode the meaning of the given word.

- Word2Vec: An unsupervised word embedding method developed by Google. It utilizes neural networks to learn from large text data sets. As the name implies, the Word2Vec approach converts each word into a given vector.

- GloVe: Also known as Global Vector, is an unsupervised machine learning model for obtaining vector representations of words. Similar to the Word2Vec method, the GloVe algorithm maps words into meaningful spaces where the distance between the words is related to semantic similarity.

- TF-IDF: Short for term frequency-inverse document frequency, TF-IDF is a word embedding algorithm that evaluates how important a word is inside a given document. The TF-IDF assigns each word a given score to signify its importance in a set of documents.

Text Classification Algorithms

Here are three of the most well-known and effective text classification algorithms. Keep in mind there are further defining algorithms embedded within each method.

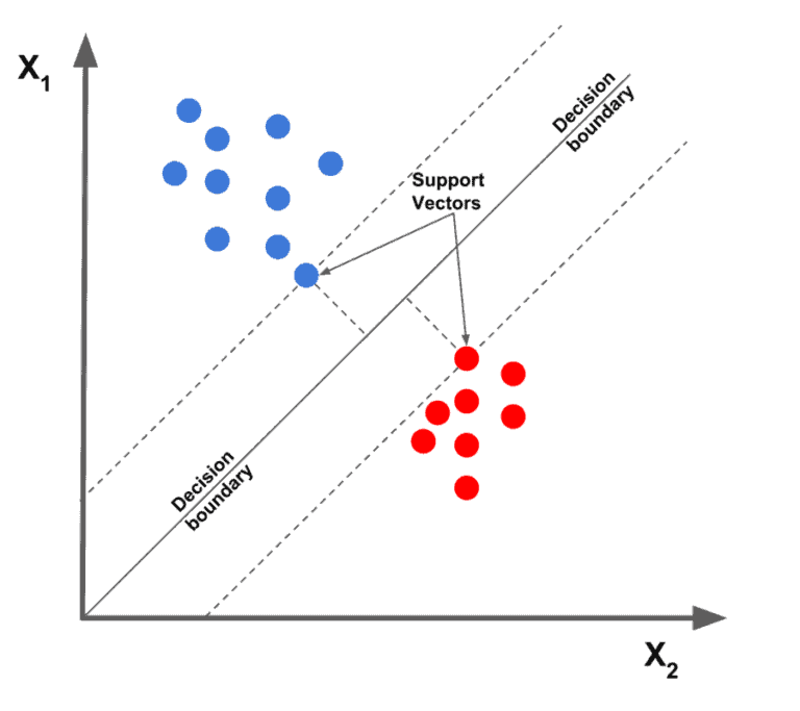

1. Linear Support Vector Machine

Regarded as one of the best text classification algorithms out there, the linear support vector machine algorithm plots the given data points concerning their given features, then draws a best fit line to split and categorize the data into different classes.

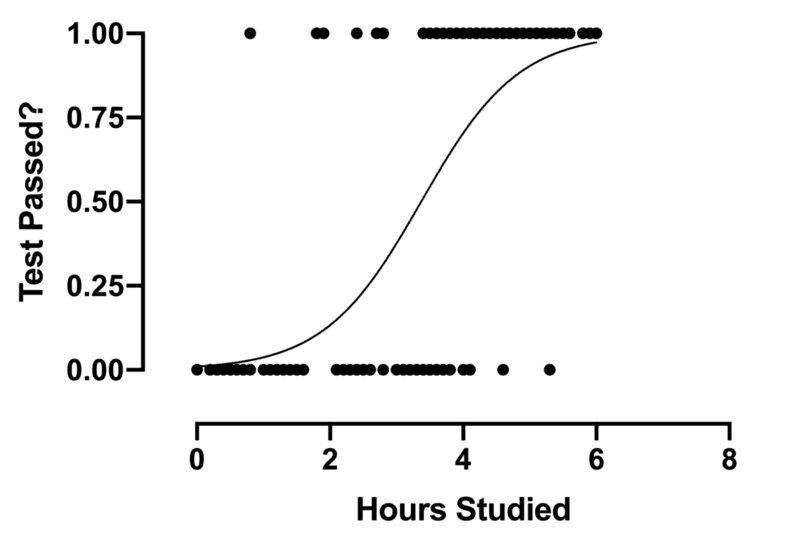

2. Logistic Regression

Logistic regression is a sub-class of regression that focuses mainly on classification problems. It uses a decision boundary, regression, and distance to evaluate and classify the dataset.

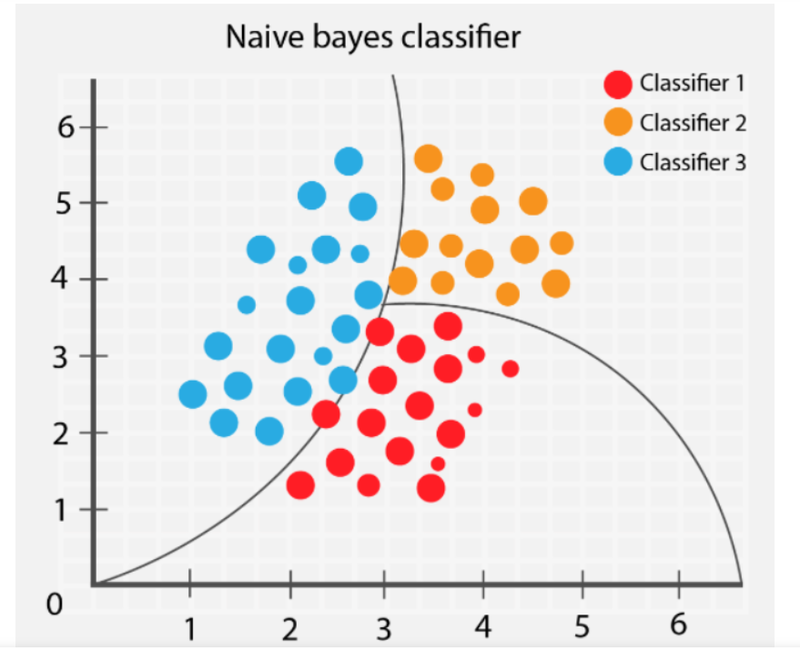

3. Naive Bayes

The Naive Bayes algorithm classifies different objects depending on their provided features. It then draws group boundaries to extrapolate those group classification to solve and categorize further.

What to Avoid When Setting Up Text Classification

Overcrowded Training Data

Providing your algorithm with low-quality data will result in poor future predictions. However, a very common problem among machine learning practitioners is feeding the training model with a data set that is too detailed that include unnecessary features. Overcrowding the data with irrelevant data can result in a decrease in model performance. When it comes to choosing and organizing a data set, Less is More.

Wrong training to testing data ratios will can greatly affect your model's performance and affect shuffling and filtering. With precise data points that are not skewed by other unneeded factors, the training model will perform more efficiently.

When training your model choose a data set that fits your model's requirements, filter the unnecessary values, shuffle the data set, and test your final model for accuracy. Simpler algorithms take less computing time and resources; the best models are the simplest ones that can solve complex problems.

Overfitting and Underfitting

Accuracy of models when training reaches a peak and then slowly tapers off as training continues. This is called overfitting; the model begins to learns unintended patterns since training has lasted too long . Be cautious when achieving high accuracy on the training set since the main goal is to develop models that have their accuracy rooted in the testing set (data the model has not seen before).

On the other end, underfitting is when the training model still has room for improvement and has not yet reached its maximum potential. Poorly trained models stem from the length of time trained or is over-regularized to the dataset. This exemplifies the point of having concise and precise data.

Finding the sweet spot when training a model is crucial. Splitting the dataset 80/20 is a good start, but tuning the parameters may be what your specific model needs to perform at its best.

Incorrect Text Format

Although not heavily mentioned in this article, using the correct text format for your text classification problem will lead to better results. Some approaches to representing your textual data include GloVe, Word2Vec, and embedding models.

Using the correct Text Format will improve how the model reads and interprets the dataset and in turn, helps it understand the patterns.

Text Classification Applications

- Filtering Spam: By searching for certain keywords, an email can be categorized as useful or spam.

- Categorizing Text: By using text classifications, applications can categorize different items(articles, books, etc) into different classes by classifying related texts such as the item name, description, and so on. Using such techniques can improve the experience as it makes it easier for users to navigate throughout a database.

- Identifying Hate Speech: Certain social media companies use text classification to detect and ban comments or posts with offensive mannerisms as not allowing any variation of profanity to be typed out and chatted in a multiplayer children's game.

- Marketing and Advertising: Companies can make specific changes to satisfy their customers by understanding how users react to certain products. It can also recommend certain products depending on user reviews toward similar products. Text classification algorithms can be used in conjunction with recommender systems, another deep learning algorithm that many online websites use to gain repeat business.

Popular Text Classification Datasets

With tons of labeled and ready-to-use datasets out there, you can always search for the perfect data set that matches your model's requirements.

While you can face some problems when deciding which one to use, in the coming part we will recommend some of the most well-known datasets out there that are available for public use.

- IMDB Dataset

- Amazon Reviews Dataset

- Yelp Reviews Dataset

- SMS Spam Collection

- Opin Rank Review Dataset

- Twitter US Airline Sentiment Dataset

- Hate Speech and Offensive Language Dataset

- Clickbait Dataset

Websites such as Kaggle contain a variety of datasets covering all topics. Try running your model on a couple of the above-mentioned data sets for practice!

Text Classification in Machine Learning

With machine learning having enormous impact in the last decade, companies are trying every possible method to utilize machine learning to automate processes. Reviews, comments, posts, articles, journals, and documentation all hold priceless value in text. With Text Classification used in many creative ways to extract user insights and patterns, companies can make decisions backed by data; professionals can obtain and learn valuable information quicker than ever.

Kevin Vu manages Exxact Corp blog and works with many of its talented authors who write about different aspects of Deep Learning.

Original. Reposted with permission.