Deep Learning for NLP: An Overview of Recent Trends


A new paper discusses some of the recent trends in deep learning-based natural language processing (NLP) systems and applications. The focus is on reviewing and comparing models and methods that have achieved state-of-the-art (SOTA) results on various NLP tasks, along with some of the current best practices for applying deep learning in NLP.

By Elvis Saravia, Affective Computing & NLP Researcher

In a timely new paper, Young and colleagues discuss some of the recent trends in deep learning-based natural language processing (NLP) systems and applications. The paper focuses on reviewing and comparing models and methods that have achieved state-of-the-art (SOTA) results on various NLP tasks such as visual question answering (QA) and machine translation. This comprehensive review gives the reader a detailed understanding of the past, present, and future of deep learning in NLP, along with some of the current best practices for applying it. Some topics include:

- The rise of distributed representations (e.g., word2vec)

- Convolutional, recurrent, and recursive neural networks

- Applications in reinforcement learning

- Recent development in unsupervised sentence representation learning

- Combining deep learning models with memory-augmenting strategies

What is NLP?

Natural language processing (NLP) deals with building computational algorithms to automatically analyze and represent human language. NLP-based systems have enabled a wide range of applications such as Google’s powerful search engine and, more recently, Amazon’s voice assistant, Alexa. NLP is also used to teach machines to perform complex natural language-related tasks such as machine translation and dialogue generation.

For a long time, the majority of methods used to study NLP problems employed shallow machine learning models and time-consuming, hand-crafted features. This led to problems such as the curse of dimensionality, since linguistic information was represented with sparse representations (high-dimensional features). However, with the recent popularity and success of word embeddings (low-dimensional, distributed representations), neural models have achieved superior results on various language-related tasks compared to traditional machine learning models like SVM or logistic regression.
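To make the contrast between sparse and distributed representations concrete, here is a minimal sketch. The three-word vocabulary and the dense vector values are made up for illustration (they are not learned embeddings), and `one_hot` and `dot` are hypothetical helper names.

```python
def one_hot(word, vocab):
    """Sparse representation: one dimension per vocabulary entry."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec


def dot(u, v):
    """Dot product, used here as a crude similarity score."""
    return sum(a * b for a, b in zip(u, v))


# Toy vocabulary; real vocabularies have tens of thousands of entries,
# so one-hot vectors become very high-dimensional.
vocab = ["cat", "dog", "car"]

# Hypothetical dense embeddings; the numbers are invented for illustration.
dense = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

# Distinct one-hot vectors never overlap, so every pair of words looks unrelated.
print(dot(one_hot("cat", vocab), one_hot("dog", vocab)))

# Dense vectors can place related words closer together than unrelated ones.
print(dot(dense["cat"], dense["dog"]) > dot(dense["cat"], dense["car"]))
```

The one-hot vector grows with the vocabulary, while the dense vector keeps a fixed, small dimension regardless of how many words are added.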

Distributed Representations

As mentioned earlier, hand-crafted features were primarily used to model natural language tasks until neural methods came around and solved some of the problems faced by traditional machine learning models, such as the curse of dimensionality.

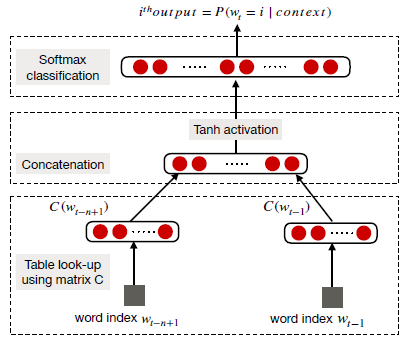

Word Embeddings: Distributional vectors, also called word embeddings, are based on the so-called distributional hypothesis: words appearing in similar contexts tend to have similar meanings. Word embeddings are pre-trained on a task where the objective is to predict a word based on its context, typically using a shallow neural network. The accompanying figure illustrates a neural language model proposed by Bengio and colleagues.

The word vectors tend to embed syntactic and semantic information and are responsible for SOTA results in a wide variety of NLP tasks such as sentiment analysis and sentence compositionality.

Distributed representations were heavily used in the past to study various NLP tasks, but they only started to gain popularity when the continuous bag-of-words (CBOW) and skip-gram models were introduced to the field. These models were popular because they could efficiently construct high-quality word embeddings and because they could be used for semantic compositionality (e.g., ‘man’ + ‘royal’ = ‘king’).
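The compositionality example can be sketched with vector addition followed by a nearest-neighbor lookup. The two-dimensional vectors below are hand-picked so that the arithmetic works out (they are an assumption for illustration, not output from a trained model), and `compose` is a hypothetical helper.

```python
import math

# Hand-picked toy vectors, NOT learned embeddings.
# The two axes loosely stand for "male-ness" and "royalty".
TOY_VECTORS = {
    "man": [1.0, 0.0],
    "woman": [-1.0, 0.0],
    "royal": [0.0, 1.0],
    "king": [1.0, 1.0],
    "queen": [-1.0, 1.0],
}


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def compose(words, vectors=TOY_VECTORS):
    """Add the vectors of `words` and return the most similar other word."""
    dim = len(next(iter(vectors.values())))
    total = [sum(vectors[w][i] for w in words) for i in range(dim)]
    candidates = [w for w in vectors if w not in words]
    return max(candidates, key=lambda w: cosine(total, vectors[w]))


print(compose(["man", "royal"]))  # king
```

With real embeddings the result is rarely this clean; the input words are excluded from the candidates because they are often the nearest neighbors of their own sum.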

Word2vec: Around 2013, Mikolov et al. proposed both the CBOW and skip-gram models. CBOW is a neural approach to constructing word embeddings in which the objective is to compute the conditional probability of a target word given the context words within a given window size. Skip-gram, on the other hand, is a neural approach to constructing word embeddings in which the goal is to predict the surrounding context words (i.e., their conditional probability) given a central target word. For both models, the word embedding dimension is determined by computing (in an unsupervised manner) the accuracy of the prediction.
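The difference between the two objectives is easiest to see in the training examples each one consumes. The sketch below only generates those examples from a tokenized sentence; it does not train a model, and the function names and the default window size are assumptions chosen for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: one (target, context) pair per context word.

    The model is asked to predict each context word from the target word.
    """
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cbow_examples(tokens, window=2):
    """CBOW: one (context_words, target) example per position.

    The model is asked to predict the target word from all its context words.
    """
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, target))
    return examples


tokens = "the cat sat on the mat".split()
print(skipgram_pairs(tokens, window=1)[:3])
print(cbow_examples(tokens, window=1)[:2])
```

Both functions look at the same windows; they differ only in which side of the pair is treated as the input and which as the prediction.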

One of the challenges with word embedding methods arises when we want to obtain vector representations for phrases such as “hot potato” or “Boston Globe”. We can’t simply combine the individual word vectors, since the meaning of these phrases is not the combination of the meanings of the individual words. It gets even more complicated when longer phrases and sentences are considered.

Another limitation of the word2vec models is that the use of smaller window sizes produces similar embeddings for contrasting words such as “good” and “bad”, which is undesirable, especially for tasks where this differentiation is important, such as sentiment analysis. Another caveat of word embeddings is that they depend on the application in which they are used. Re-training task-specific embeddings for every new task is an explored option, but this is usually computationally expensive and can be more efficiently addressed using negative sampling. Word2vec models also suffer from other problems, such as not taking polysemy into account and absorbing biases that may surface from the training data.

Character Embeddings: For tasks such as part-of-speech (POS) tagging and named-entity recognition (NER), it is useful to look at morphological information in words, such as characters or combinations thereof. This is also helpful for morphologically rich languages such as Portuguese, Spanish, and Chinese. Since we are analyzing text at the character level, these types of embeddings help to deal with the unknown word issue, as we are no longer representing sequences with large word vocabularies that need to be reduced for efficient computation.
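One simple way to see why character-level information helps with unknown words is to break words into character n-grams and check how many pieces an unseen word shares with a known one. This is only a sketch of the idea: the `<` and `>` boundary markers, the n-gram range, and the helper names are assumptions, not the specific method of any model covered in the paper.

```python
def char_ngrams(word, n_min=3, n_max=4):
    """Return the character n-grams of a word, with boundary markers added."""
    marked = f"<{word}>"
    return [
        marked[i:i + n]
        for n in range(n_min, n_max + 1)
        for i in range(len(marked) - n + 1)
    ]


def shared_ngrams(word_a, word_b, n_min=3, n_max=4):
    """N-grams that two words have in common, as a set."""
    return set(char_ngrams(word_a, n_min, n_max)) & set(char_ngrams(word_b, n_min, n_max))


print(char_ngrams("cat", 3, 3))  # ['<ca', 'cat', 'at>']

# Even if "unhappiness" was never seen in training, it overlaps heavily
# with "happiness", so a character-based model has something to work with.
print(sorted(shared_ngrams("unhappiness", "happiness")))
```

A word-level vocabulary would map the unseen word to a single unknown token, discarding this overlap entirely.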

Finally, it’s important to understand that even though both character-level and word-level embeddings have been successfully applied to various NLP tasks, their long-term impact has been questioned. For instance, Lucy and Gauthier recently found that word vectors are limited in how well they capture the different facets of conceptual meaning behind words. In other words, the claim is that distributional semantics alone cannot be used to understand the concepts behind words. Recently, there was an important debate on meaning representation in the context of natural language processing systems.

Convolutional Neural Network (CNN)

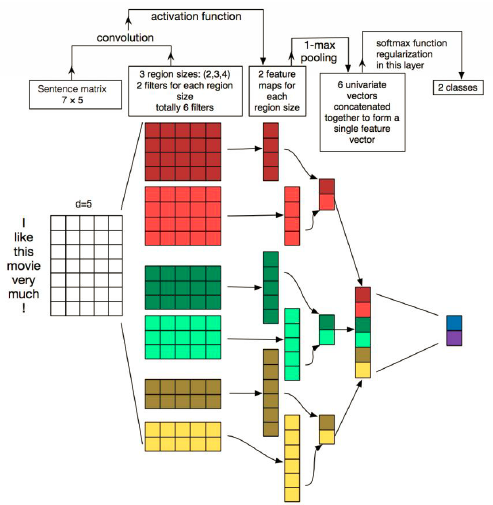

A CNN is a neural approach that acts as a feature function applied to the constituent words or n-grams of a text to extract higher-level features. The resulting abstract features have been effectively used for sentiment analysis, machine translation, and question answering, among other tasks. Collobert and Weston were among the first researchers to apply CNN-based frameworks to NLP tasks. The goal of their method was to transform words into a vector representation via a look-up table, which resulted in a primitive word embedding approach that learns weights during the training of the network (see the accompanying figure).

To perform sentence modeling with a basic CNN, sentences are first tokenized into words, which are then transformed into a word embedding matrix (i.e., the input embedding layer) of dimension d. Convolutional filters are then applied to this input embedding layer: each filter is slid over every possible window of words to produce what’s called a feature map. This is followed by a max-pooling operation, which takes the maximum over each feature map to obtain a fixed-length output and reduce the dimensionality of the output. That procedure produces the final sentence representation.
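The pipeline described above (embedding matrix, convolution over word windows, max pooling) can be sketched in plain Python. The kernel weights and embedding values are made up, the ReLU non-linearity is an assumption (the text does not name an activation function), and real systems would use a tensor library and learn the kernels during training.

```python
def conv_feature_map(embeddings, kernel, bias=0.0):
    """Slide one kernel of shape (h, d) over a sentence matrix of shape (n, d).

    Returns a feature map with n - h + 1 values, one per window of h words.
    """
    h = len(kernel)
    feature_map = []
    for start in range(len(embeddings) - h + 1):
        window = embeddings[start:start + h]
        activation = bias + sum(
            w * x
            for kernel_row, word_vec in zip(kernel, window)
            for w, x in zip(kernel_row, word_vec)
        )
        feature_map.append(max(0.0, activation))  # ReLU (assumed non-linearity)
    return feature_map


def sentence_vector(embeddings, kernels):
    """Max pooling over each feature map: one value per kernel.

    The output length equals len(kernels), regardless of sentence length.
    """
    return [max(conv_feature_map(embeddings, k), default=0.0) for k in kernels]


# Toy sentence of 3 words with d = 2; values are invented for illustration.
sentence = [[1, 0], [0, 1], [1, 1]]
kernels = [
    [[1, 0], [0, 1]],  # spans windows of 2 words
    [[1, 1]],          # spans windows of 1 word
]

print(conv_feature_map(sentence, kernels[0]))  # [2.0, 1.0]
print(sentence_vector(sentence, kernels))      # [2.0, 2.0]
```

Because pooling keeps a single value per kernel, sentences of different lengths map to vectors of the same size, which is what lets a fixed-size classifier sit on top.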

By increasing the complexity of the aforementioned basic CNN and adapting it to perform word-based predictions, other NLP tasks such as NER, aspect detection, and POS tagging can be studied. This requires a window-based approach, where for each word a fixed-size window of neighboring words (a sub-sentence) is considered. A standalone CNN is then applied to the sub-sentence, and the training objective is to predict a label for the word in the center of the window, also referred to as word-level classification.
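The window-based approach can be sketched as a preprocessing step that yields one fixed-size sub-sentence per word. The padding token, the `half_width` parameter, and the function name are assumptions for illustration; the classifier applied to each window is left out.

```python
def center_windows(tokens, half_width=2, pad="<pad>"):
    """Return (center_word, window) for every token.

    Each window has 2 * half_width + 1 entries; sentence edges are padded
    so that every word sits exactly in the middle of its window.
    """
    padded = [pad] * half_width + list(tokens) + [pad] * half_width
    size = 2 * half_width + 1
    return [(tokens[i], padded[i:i + size]) for i in range(len(tokens))]


for center, window in center_windows("John lives in Boston".split(), half_width=1):
    print(center, window)
```

Each window would then be embedded and passed to the CNN, whose prediction is assigned to the center word.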

One of the shortcomings of basic CNNs is their inability to model long-distance dependencies, which are important for various NLP tasks. To address this problem, CNNs have been coupled with time-delayed neural networks (TDNN), which enable a larger contextual range at once during training. Another useful type of CNN that has shown success in different NLP tasks, such as sentiment prediction and question type classification, is the dynamic convolutional neural network (DCNN). A DCNN uses a dynamic k-max pooling strategy where filters can dynamically span variable ranges while performing the sentence modeling.
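The core operation behind k-max pooling can be sketched as follows: instead of keeping only the single largest activation, keep the k largest while preserving their original order. In this sketch k is passed in directly; how a DCNN chooses k dynamically (for example, from the sentence length) is not shown, and the function name is an assumption.

```python
def k_max_pooling(values, k):
    """Keep the k largest values of a feature map, in their original order."""
    if k >= len(values):
        return list(values)
    # Indices of the k largest values (ties go to the earlier position).
    top = sorted(range(len(values)), key=lambda i: values[i], reverse=True)[:k]
    # Re-sort the chosen indices so relative word order is preserved.
    return [values[i] for i in sorted(top)]


feature_map = [0.1, 0.9, 0.3, 0.7, 0.2]
print(k_max_pooling(feature_map, 3))  # [0.9, 0.3, 0.7]
print(k_max_pooling(feature_map, 1))  # [0.9], same as ordinary max pooling
```

Keeping several activations in order retains some positional information that plain max pooling throws away.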

CNNs have also been used for more complex tasks involving texts of varying lengths, such as aspect detection, sentiment analysis, short text categorization, and sarcasm detection. However, some of these studies reported that external knowledge was necessary when applying CNN-based methods to microtexts such as Twitter texts. Other tasks where CNNs proved useful are query-document matching, speech recognition, machine translation (to some degree), and question-answer representations, among others. In addition, a DCNN was used to hierarchically learn to capture and compose low-level lexical features into high-level semantic concepts for the automatic summarization of texts.

Overall, CNNs are effective because they can mine semantic clues in contextual windows, but they struggle to preserve sequential order and model long-distance contextual information. Recurrent models are better suited for this type of learning, and they are discussed next.
