The 6 Most Useful Machine Learning Projects of 2018

Let’s take a look at the top 6 most practically useful ML projects over the past year. These projects have published code and datasets that allow individual developers and smaller teams to learn and immediately create value.

The past year has been a great one for AI and Machine Learning. Many new high-impact applications of Machine Learning were discovered and brought to light, especially in healthcare, finance, speech recognition, augmented reality, and more complex 3D and video applications.

We’ve seen a big push towards more application driven research, rather than theoretical. Although this can have its drawbacks, it has for the time being made some great positive impacts, generating new R&D that can rapidly be turned into business and customer value. This trend is strongly reflected in much of the ML open source work.

Let’s take a look at the top 6 most practically useful ML projects over the past year. These projects have published code and datasets that allow individual developers and smaller teams to learn and immediately create value. They may not be the most theoretically ground breaking works, but they are applicable and practical.

Fast.ai

The Fast.ai library was written to simplify training fast and accurate neural nets using modern best practices. It abstracts away all of the nitty gritty work that can come with implementing deep neural networks in practice. It’s very easy to use and is designed with a practitioner's application building mindset. Originally created for the students of the Fast.ai course, the library is written on top of the easy to use Pytorch library in a clean and concise way. Their documentation is top notch too.

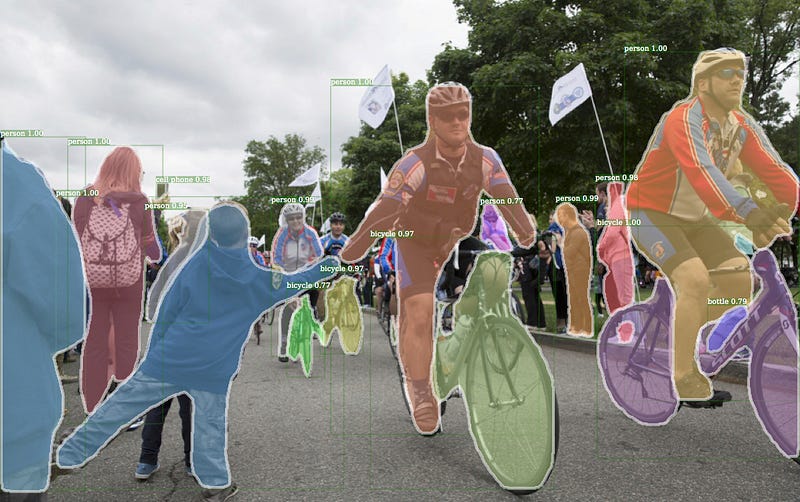

Detectron

Detectron is Facebook AI’s research platform for object detection and instance segmentation research, written in Caffe2. It contains implementations of a wide variety of object detection algorithms including:

- Mask R-CNN: Object detection and instance segmentation using a Faster R-CNN structure

- RetinaNet: A feature pyramid-based network with a unique Focal Loss to handle hard examples.

- Faster R-CNN: The most common structure for object detection networks

All of the networks can use one of several optional classification backbones:

- ResNeXt{50,101,152}

- ResNet{50,101,152}

- Feature Pyramid Networks (with ResNet/ResNeXt)

- VGG16

Even more, all of these come with pre-trained models on the COCO dataset so you can use them right out of the box! They’ve all been tested already using standard evaluation metrics in the Detectron model zoo.

Detectron

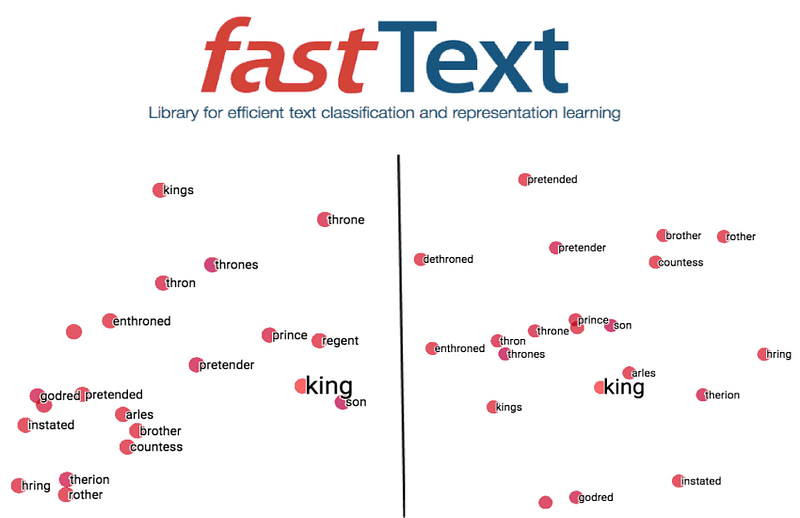

FastText

Another one from Facebook research, the fastText library is designed for text representation and classification. It comes with pre-trained models of word vectors for over 150 languages. Such word vectors can be used for many tasks including text classification, summarisation, and translation

AutoKeras

Auto-Keras is an open source software library for automated machine learning (AutoML). It was developed by DATA Lab at Texas A&M University and community contributors. The ultimate goal of AutoML is to provide easily accessible deep learning tools to domain experts with limited data science or machine learning background. Auto-Keras provides functions to automatically search for the best architecture and hyperparameters for deep learning models.

Dopamine

Dopamine is a research framework for fast prototyping of reinforcement learning algorithms, created by Google. It aims to be flexible yet easy to use, implementing standard RL algorithms, metrics, and benchmarks.

According to Dopamine’s documentation, their design principles are:

- Easy experimentation:To help new users run benchmark experiments

- Flexible development: To facilitate the generation of new and innovative ideas for new users

- Compact and reliable: Provide implementations for some of the older and more popular algorithms

- Reproducible: Ensure results are reproducible

vid2vid

The vid2vid project is a public Pytorch implementation of Nvidia’s state-of-the-art video-to-video synthesis algorithm. The goal with video-to-video synthesis is to learn a mapping function from an input source video (e.g., a sequence of semantic segmentation masks) to an output photo-realistic video that precisely depicts the content of the source video.

The great thing about this library is its options: it provides several different vid2vid applications including self-driving / urban scenes, faces, and human pose. It also comes with extensive instructions and capabilities including dataset loading, task evaluation, training functionality, and multi-gpu!

Converting a segmentation map to a real image

Honourable mentions

- ChatterBot: machine learning for conversational dialog engine and creating chat bots

- Kubeflow: machine learning toolkit for Kubernetes

- imgaug: image augmentation for deep learning

- imbalanced-learn: a python package under scikit learn specifically for tacking imbalanced datasets

- mlflow: open source platform to manage the ML lifecycle, including experimentation, reproducibility and deployment.

- AirSim: simulator for autonomous vehicles built on Unreal Engine / Unity, from Microsoft AI & Research

Like to learn?

Follow me on twitter where I post all about the latest and greatest AI, Technology, and Science!

Bio: George Seif is a Certified Nerd and AI / Machine Learning Engineer.

Original. Reposted with permission.

Related:

- Top Python Libraries in 2018 in Data Science, Deep Learning, Machine Learning

- 5 Quick and Easy Data Visualizations in Python with Code

- The 5 Clustering Algorithms Data Scientists Need to Know

- The 7 Most Useful Jupyter Notebook Extensions for Data Scientists

- Tips for Building Machine Learning Models That Are Actually Useful

- Data access is severely lacking in most companies, and 71% believe…

- Time 100 AI: The Most Influential?

- The Most Popular KDnuggets Articles of 2024

- Most Candidates Fail These SQL Concepts in Data Interviews