The Elements of Statistical Learning: The Free eBook

Check out this free ebook covering the elements of statistical learning, appropriately titled "The Elements of Statistical Learning."

Welcome to another edition of KDnuggets' The Free eBook. Each week over the past few weeks we have spotlighted a different freely available publication in the world of data science, machine learning, statistics, and related fields. As long as readers continue to enjoy them, we will continue to showcase them on a weekly basis.

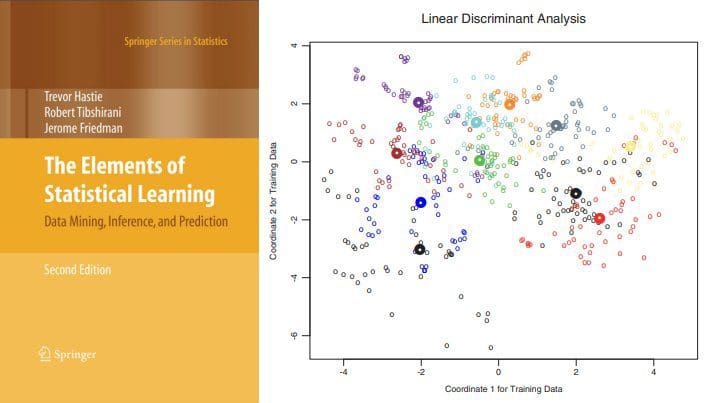

This week we bring you The Elements of Statistical Learning, by Trevor Hastie, Robert Tibshirani, and Jerome Friedman. The first edition of this seminal work in statistical (and machine) learning was published nearly 20 years ago and quickly cemented itself as one of the leading texts in the field. The elements of statistical learning have not remained static over the intervening years, however, and so a second edition was published in 2009. It is this second edition we discuss today, specifically its 12th printing from 2017.

First off, why "statistical learning"? If you aren't familiar with the term, or have only heard it used in this book's title, fret not. It does not denote some distinct field of study far removed from what you are currently learning or interested in. The quote below from the book's website helps put the term in perspective:

> During the past decade there has been an explosion in computation and information technology. With it have come vast amounts of data in a variety of fields such as medicine, biology, finance, and marketing. The challenge of understanding these data has led to the development of new tools in the field of statistics, and spawned new areas such as data mining, machine learning, and bioinformatics.

The authors go on to concisely explain the concept of "learning" and its importance:

> This book is about learning from data. In a typical scenario, we have an outcome measurement, usually quantitative (such as a stock price) or categorical (such as heart attack/no heart attack), that we wish to predict based on a set of features (such as diet and clinical measurements). We have a training set of data, in which we observe the outcome and feature measurements for a set of objects (such as people). Using this data we build a prediction model, or learner, which will enable us to predict the outcome for new unseen objects. A good learner is one that accurately predicts such an outcome.
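To make the quoted scenario concrete, here is a minimal Python sketch; it is our own illustration, not code from the book. It assumes a single numeric feature, a quantitative outcome, and a straight-line model fitted by ordinary least squares, one of the simplest methods covered in the chapter on linear methods for regression. The training data and the function names `fit_least_squares` and `predict` are hypothetical.

```python
# Illustrative sketch of "learning from data" (assumptions: one numeric
# feature, a quantitative outcome, a straight-line model fit by least squares).
from typing import List, Tuple


def fit_least_squares(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Return (intercept, slope) minimizing the sum of squared errors."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance-style sums give the closed-form least-squares slope.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(model: Tuple[float, float], x: float) -> float:
    """Apply the fitted learner to the feature value of a new object."""
    intercept, slope = model
    return intercept + slope * x


# Hypothetical training set: observed feature values and outcomes.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [2.1, 3.9, 6.2, 8.1, 9.8]

learner = fit_least_squares(train_x, train_y)
print(predict(learner, 6.0))  # prediction for a new, unseen object
```

The same pattern (fit on observed training pairs, then predict for unseen inputs) carries over to categorical outcomes; only the model and the loss change.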

The Elements of Statistical Learning is, quite literally, about applying new tools from the field of statistics to the process of learning from data and building good learning models. If you are reading this article, or any article on KDnuggets, the subject is likely right up your alley.

The book is exhaustive in its coverage and treats everything you would expect such a book to cover. The table of contents is below.

- Introduction

- Overview of Supervised Learning

- Linear Methods for Regression

- Linear Methods for Classification

- Basis Expansions and Regularization

- Kernel Smoothing Methods

- Model Assessment and Selection

- Model Inference and Averaging

- Additive Models, Trees, and Related Methods

- Boosting and Additive Trees

- Neural Networks

- Support Vector Machines and Flexible Discriminants

- Prototype Methods and Nearest-Neighbors

- Unsupervised Learning

- Random Forests

- Ensemble Learning

- Undirected Graphical Models

- High-Dimensional Problems

Each chapter focuses on an important aspect of statistical learning. Model Assessment and Selection, for example, is deemed important enough to receive its own chapter, which is both apt and refreshing. It is also worth noting that this chapter appears early, after only a few chapters on modeling techniques. Relegating it to the end of the book, after a long series of classification techniques, might mean the reader never reaches it: having learned the algorithms, they may feel they have already gotten everything they need from the book, which would be a real mistake.
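As a rough illustration of what model selection involves, the Python sketch below uses K-fold cross-validation, one of the techniques the Model Assessment and Selection chapter covers, to choose the number of neighbors k for a k-nearest-neighbor regressor. The toy dataset, the candidate values of k, the interleaved fold assignment, and the helper names `knn_predict` and `cv_error` are our own assumptions, not the book's.

```python
# Illustrative sketch: K-fold cross-validation to select k for k-NN regression.
# Assumptions: toy 1-D data, squared-error loss, folds assigned by index mod K.
import math
from typing import Dict, List


def knn_predict(train_x: List[float], train_y: List[float], x: float, k: int) -> float:
    """Average the outcomes of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def cv_error(xs: List[float], ys: List[float], k: int, n_folds: int = 5) -> float:
    """Estimate mean squared prediction error of k-NN by K-fold CV."""
    n = len(xs)
    total = 0.0
    for fold in range(n_folds):
        # Hold out one fold; fit (i.e. memorize) the remaining points.
        test_idx = [i for i in range(n) if i % n_folds == fold]
        train_idx = [i for i in range(n) if i % n_folds != fold]
        tx = [xs[i] for i in train_idx]
        ty = [ys[i] for i in train_idx]
        for i in test_idx:
            total += (ys[i] - knn_predict(tx, ty, xs[i], k)) ** 2
    return total / n


# Hypothetical data: a smooth signal plus deterministic alternating "noise".
xs = [i / 10 for i in range(40)]
ys = [math.sin(x) + 0.3 * ((-1) ** i) for i, x in enumerate(xs)]

candidates = [1, 3, 5, 9, 15]
errors: Dict[int, float] = {k: cv_error(xs, ys, k) for k in candidates}
best_k = min(errors, key=errors.get)
print(errors)
print("selected k:", best_k)
```

A small k chases the noise in the training points, while a large k smooths away real structure; cross-validation judges that trade-off on held-out data rather than on training error, which would always favor k = 1.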

All this is to say that the authors, who are also researchers and instructors, have clearly thought about how to convey their expertise. The book follows a logical order in what readers should learn, and when. Individual chapters also stand on their own, however, so picking up the book and heading straight to the chapter on model inference, for example, will work perfectly well, so long as you already understand the material that precedes it.

Reviews of this book over the years have been plentiful and generally positive. Critical reviews tend to focus on two specific issues: that the book is written for readers with an advanced understanding of statistics, and that its presentation is disorganized or unfriendly. The book's introduction explains:

> This book is designed for researchers and students in a broad variety of fields: statistics, artificial intelligence, engineering, finance and others. We expect that the reader will have had at least one elementary course in statistics, covering basic topics including linear regression.

Since the stated prerequisite is a single elementary statistics course, the issues mentioned above are not insurmountable. There is a reason this book is so highly regarded, and by taking your time with it, even as a beginner, you can reap the same rewards that others have over the years. Be warned, though: at more than 750 pages this isn't a casual read, and you will need to put in the time.

You can find the PDF of the book here. The book's official website contains links to the datasets used in the book, information for instructors, a link to a short course on the topics, and other supplementary material.

Give The Elements of Statistical Learning a try, and follow in the footsteps of so many other learners who have come before you. Since the price is right, you have nothing to lose.
