Decision Tree Pruning: The Hows and Whys

Decision trees are a machine learning algorithm that is susceptible to overfitting. One of the techniques you can use to reduce overfitting in decision trees is pruning.

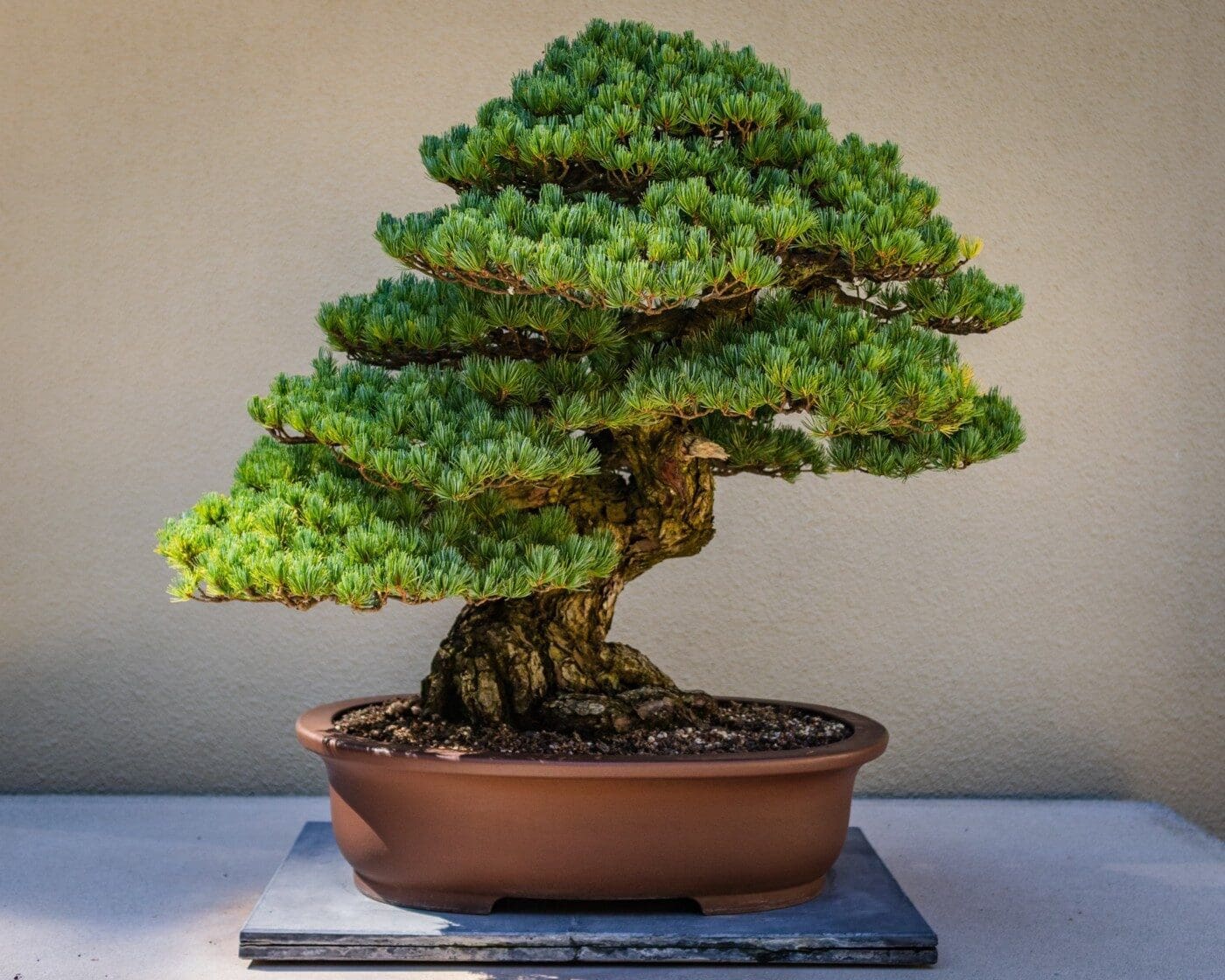

Devin H via Unsplash

Let’s recap Decision Trees.

Decision Trees are a non-parametric supervised learning method that can be used for classification and regression tasks. The goal is to build a model that can make predictions on the value of a target variable by learning simple decision rules inferred from the data features.

Decision Trees are made up of:

- Root Node - the very top of the decision tree; it holds the full training set and applies the first split.

- Internal Nodes - these branch off from the Root Node, and each one tests a feature to split the data further.

- Leaf Nodes - these sit at the end of the branches and hold the final prediction, either a class or a value.

As with any other machine learning algorithm, one of the most annoying things that can happen is overfitting, and Decision Trees are particularly susceptible to it.

Overfitting is when a model fits the training data almost perfectly but struggles or fails to generalize to the test data. This happens when the model memorizes noise in the training data and fails to pick up the essential patterns that would help it on the test data.

One of the techniques you can use to reduce overfitting in Decision Trees is Pruning.

What is Decision Tree Pruning and Why is it Important?

Pruning is a technique that removes parts of the Decision Tree or prevents it from growing to its full depth. The parts it removes are the ones that add little power to classify instances. A Decision Tree that is trained to its full depth is highly likely to overfit the training data, which is why Pruning is important.

In simpler terms, the aim of Decision Tree Pruning is to construct a model that may perform worse on training data but generalizes better on test data. Tuning the hyperparameters of your Decision Tree model can improve it considerably and save you a lot of time and money.

How do you Prune a Decision Tree?

There are two types of pruning: Pre-pruning and Post-pruning. I will go through both of them and how they work.

Pre-pruning

Pre-pruning means setting the Decision Tree's hyperparameters before training. It relies on a heuristic known as ‘early stopping’, which halts the growth of the decision tree and prevents it from reaching its full depth.

Early stopping halts the tree-building process before it produces leaves with very few samples. At each stage of splitting, the cross-validation error is monitored, and once the error stops decreasing, we stop growing the decision tree.
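As a rough illustration of this idea, the sketch below refits trees of increasing depth and stops once the cross-validation error no longer improves. It assumes scikit-learn and its built-in breast cancer dataset; the depth range of 1 to 10 and the 5-fold split are arbitrary choices made for the example. Note that scikit-learn does not monitor cross-validation error while a tree is growing, so this loop only approximates the heuristic from the outside.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Assumption: a built-in toy dataset stands in for your own data.
X, y = load_breast_cancer(return_X_y=True)

best_depth, best_error = None, float("inf")
for depth in range(1, 11):  # illustrative range of depths
    tree = DecisionTreeClassifier(max_depth=depth, random_state=0)
    # Mean 5-fold cross-validation error for a tree capped at this depth.
    cv_error = 1.0 - cross_val_score(tree, X, y, cv=5).mean()
    print(f"max_depth={depth}: cv_error={cv_error:.4f}")
    if cv_error >= best_error:
        break  # the error stopped decreasing, so stop growing
    best_depth, best_error = depth, cv_error

print(f"stopped at max_depth={best_depth} with cv_error={best_error:.4f}")
```

Stopping at the first non-improvement is a greedy choice; a deeper tree could still do better, which is one reason early stopping can miss a good model.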

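The hyperparameters that can be tuned for early stopping and preventing overfitting are:

- max_depth - the maximum depth the tree is allowed to reach

- min_samples_leaf - the minimum number of samples required at a leaf node

- min_samples_split - the minimum number of samples required to split an internal node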

Tuning these same parameters can also give you a more robust model. However, be cautious, as stopping too early can lead to underfitting.
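Here is a minimal sketch of pre-pruning with these three hyperparameters, assuming scikit-learn's DecisionTreeClassifier and its built-in breast cancer dataset. The specific values and the small search grid are illustrative assumptions, not recommended settings.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: a built-in toy dataset stands in for your own data.
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Baseline: no limits, so the tree keeps splitting as long as it can.
full_tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Pre-pruned: growth is capped by the three hyperparameters.
pre_pruned = DecisionTreeClassifier(
    max_depth=3, min_samples_leaf=5, min_samples_split=10, random_state=0
).fit(X_train, y_train)

for name, model in [("full", full_tree), ("pre-pruned", pre_pruned)]:
    print(
        f"{name}: depth={model.get_depth()}, leaves={model.get_n_leaves()}, "
        f"train={model.score(X_train, y_train):.3f}, "
        f"test={model.score(X_test, y_test):.3f}"
    )

# Tuning: let 5-fold cross-validation choose among a few candidate values.
param_grid = {
    "max_depth": [2, 3, 5],
    "min_samples_leaf": [1, 5, 10],
    "min_samples_split": [2, 10, 20],
}
search = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
search.fit(X_train, y_train)
print("best params:", search.best_params_)
print(f"test accuracy of best model: {search.score(X_test, y_test):.3f}")
```

If the limits are too strict, both train and test scores drop together, which is the underfitting risk mentioned above.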

Post-pruning

Post-pruning does the opposite of pre-pruning and allows the Decision Tree model to grow to its full depth. Once the model grows to its full depth, tree branches are removed to prevent the model from overfitting.

The algorithm will continue to partition the data into smaller subsets until the final subsets are similar in terms of the outcome variable. These final subsets will consist of only a few data points, so the tree will have learned the training data to a T. However, when a new data point that differs from the learned data is introduced, it may not be predicted well.

The hyperparameter that can be tuned for post-pruning and preventing overfitting is: ccp_alpha

ccp stands for Cost Complexity Pruning and can be used as another option to control the size of a tree. A higher value of ccp_alpha will lead to an increase in the number of nodes pruned.
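To see the effect of ccp_alpha on tree size, the short sketch below fits the same tree with a few increasing values and prints the node count. It assumes scikit-learn and its built-in breast cancer dataset, and the alpha values are arbitrary picks for illustration.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.tree import DecisionTreeClassifier

# Assumption: a built-in toy dataset stands in for your own data.
X, y = load_breast_cancer(return_X_y=True)

alphas = [0.0, 0.005, 0.02, 0.1]  # illustrative values only
node_counts = []
for alpha in alphas:
    # Same data and random_state each time, so only ccp_alpha changes.
    tree = DecisionTreeClassifier(ccp_alpha=alpha, random_state=0).fit(X, y)
    node_counts.append(tree.tree_.node_count)
    print(f"ccp_alpha={alpha}: nodes={tree.tree_.node_count}, depth={tree.get_depth()}")
```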

Cost complexity pruning (post-pruning) steps:

- Train your Decision Tree model to its full depth

- Compute the ccp_alphas values using cost_complexity_pruning_path()

- Train your Decision Tree model with different ccp_alphas values and compute train and test performance scores

- Plot the train and test scores for each value of ccp_alphas

Tuning this hyperparameter can also help you find the best-fitting model.
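The cost complexity pruning steps above can be sketched as follows, assuming scikit-learn, matplotlib, and the built-in breast cancer dataset. The variable names and the clipping of alphas at zero (a guard against tiny negative values from floating-point rounding) are choices made for this example.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Assumption: a built-in toy dataset stands in for your own data.
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Steps 1 and 2: cost_complexity_pruning_path() grows the full tree on the
# training data and returns the effective alphas at which nodes get pruned.
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(
    X_train, y_train
)
ccp_alphas = np.clip(path.ccp_alphas, 0.0, None)

# Step 3: train one tree per alpha and record train and test accuracy.
train_scores, test_scores = [], []
for alpha in ccp_alphas:
    tree = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha)
    tree.fit(X_train, y_train)
    train_scores.append(tree.score(X_train, y_train))
    test_scores.append(tree.score(X_test, y_test))

# Step 4: plot both score curves against ccp_alpha.
fig, ax = plt.subplots()
ax.plot(ccp_alphas, train_scores, marker="o", drawstyle="steps-post", label="train")
ax.plot(ccp_alphas, test_scores, marker="o", drawstyle="steps-post", label="test")
ax.set_xlabel("ccp_alpha")
ax.set_ylabel("accuracy")
ax.legend()

best_alpha = ccp_alphas[int(np.argmax(test_scores))]
print(f"alpha with the highest test accuracy: {best_alpha:.5f}")
```

Call plt.show() or fig.savefig() to view the plot. Choosing the alpha from test scores mirrors the steps listed here; on a real project, a separate validation set or cross-validation would be a safer basis for that choice.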

Tips

Here are some tips you can apply when pruning Decision Trees:

- If a node contains very few samples, do not continue to split it

- Minimum error (cross-validation) pruning without early stopping is a good technique

- Build a full-depth tree and work backward by applying a statistical test during each stage

- Prune an interior node and raise the sub-tree beneath it up one level

Conclusion

In this article, I have gone over the two types of pruning techniques and their uses. Decision Trees are highly susceptible to overfitting - therefore pruning is a vital phase for the algorithm. If you would like to know more about post-pruning decision trees with cost complexity pruning, click on this link.

Nisha Arya is a Data Scientist and Freelance Technical Writer. She is particularly interested in providing Data Science career advice or tutorials and theory based knowledge around Data Science. She also wishes to explore the different ways Artificial Intelligence is/can benefit the longevity of human life. A keen learner, seeking to broaden her tech knowledge and writing skills, whilst helping guide others.