The Mistake Every Data Scientist Has Made at Least Once

How to increase your chances of avoiding the mistake.

If you use a tool where it hasn’t been verified safe, any mess you make is your fault… AI is a tool like any other, so the same rule applies. Never trust it blindly.

Instead, force machine learning and AI systems to earn your trust.

If you want to teach with examples, the examples have to be good. If you want to trust your student’s ability, the test has to be good.

Always keep in mind that you don’t know anything about the safety of your system outside the conditions you checked it in, so check it carefully! Here is a list of handy reminders that apply not only to ML/AI but also to every solution based on data:

- If you didn’t test it, don’t trust it.

- If you didn’t test it in your environment, don’t trust it in your environment.

- If you didn’t test it with your user population, don’t trust it with your user population.

- If you didn’t test it with your data population, don’t trust it with your data population.

- If an input is unusual, don’t trust your system to output something sensible. Consider using outlier detection and safety nets (e.g. flagging an unusual instance for human review).

Designing good tests is what keeps us all safe.

One difference between a data newbie and a data science expert is that the expert has some whopping trust issues… and is happy to have them.

SOURCE: Pixabay

The Mistake Experts Make

In our previous article, we looked at the testing mistake that beginners make and how to avoid it. Now it’s time to look at a more insidious mistake that even experts make.

For those who haven’t read the previous article, let’s catch you up on the setup (in a parallel universe where we didn’t make the newbie mistake, that is):

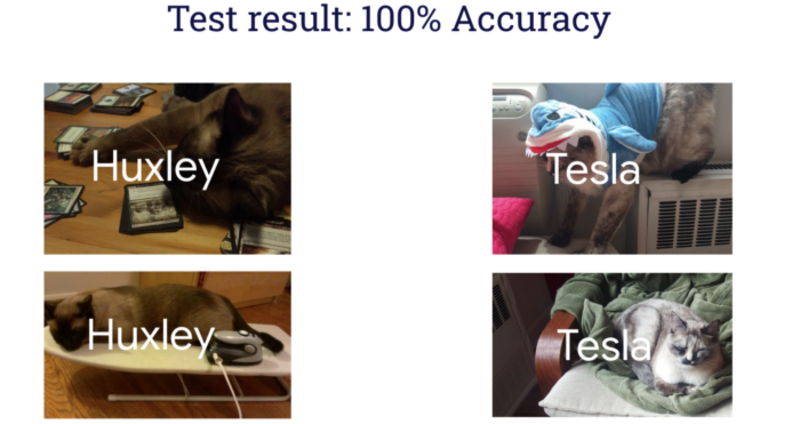

We competently trained a decent model using 70,000 input images (each one you see in the image above is a placeholder that stands for 10,000 similar photos with the label Banana, Huxley, or Tesla; one of these is my breakfast and the other two are my cats, you figure out which is which) and then tested it on 40,000 pristine images.

And we get… perfect results! Wow.

When you observe perfect performance on a machine learning task, you should be very worried indeed. Just as the previous article warned you against having the wrong attitude towards 0%, it’s a good habit to smell a rat whenever you see perfect results. An expert’s first thought would be that either the test was much too easy or the labels leaked or the data weren’t as pristine as we hoped. Exactly the same thoughts a seasoned college professor would have upon discovering that every student answered every question flawlessly. They’d be furiously debugging instead of patting themselves on the back for good teaching.

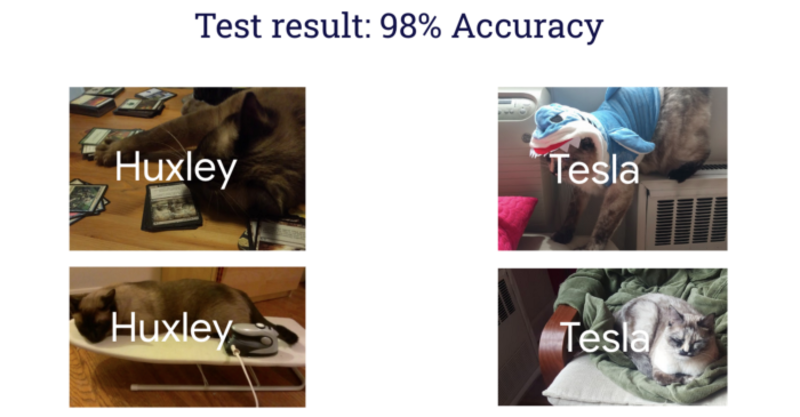

Since 100% isn’t a realistic result for a lifelike scenario, let’s imagine we got some other high-but-not-too-high score, say 98%.

Great performance, right? Sounds like great performance in pristine data to boot. With all the statistical hypothesis testing bells and whistles taken care of! We’ve built an awesome Tesla/Huxley detector, let’s launch it!!!

Watch out.

You’ll be tempted to jump to conclusions. This might not be a Tes/Hux detector at all, even if it gives great results in testing.

The mistake that experts make has less to do with the technical and more to do with the human side of things: giving in to wishful thinking.

Experts aren’t immune to the mistake of wishful thinking.

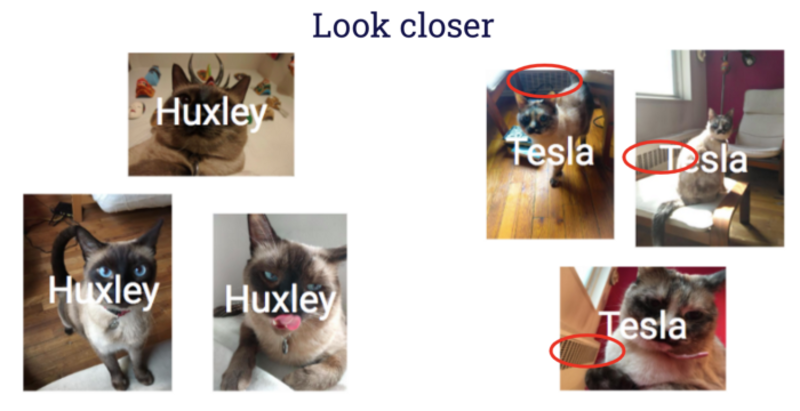

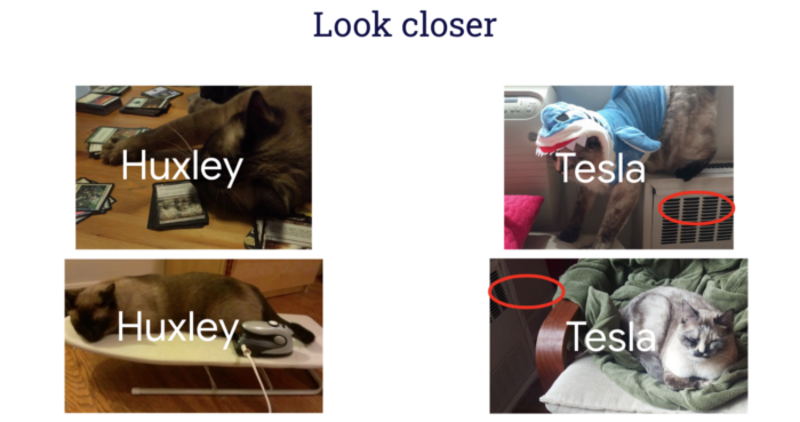

Don’t assume these results mean anything at all about what the system is actually detecting. We have no evidence that it has learned — or worse, that “it understands” — how to tell Hux and Tes apart. Look closer…

Did you notice that little detail that’s present in the background of all photos of Tes? Whoops, turns out we accidentally built a radiator / not-radiator classifier!

It’s a very human mistake to over-focus on the parts of the problem and data that are interesting to us — like the identity of the two cats — and snooze past the other bits, like the presence of the radiator in most of the photos of Tes since that’s where she likes to hang out and therefore it’s also where she’s most likely to be photographed. Unfortunately, she loves the radiator so much that the same issue turns up in both the training data and the test data, so you won’t catch the problem by testing on data from the same population you used for training.

All this is (somewhat) okay as long as you (and your stakeholders and users) have the steely discipline of:

- Not reading cute stories into the results, like “This is a Hux/Tes classifier.” This kind of language implies something far more general than we have evidence for. You’re probably doing it wrong if your understanding of what a machine learning system is actually doing fits into a pithy newspaper headline… It should, at a minimum, bore you to death with its details.

- Staying painfully aware that if there’s a difference between the setting you tested your system in and the setting you’re training it in, you have no guarantee that your solution will work.

This kind of discipline is rare, so until we have better data literacy, all I can do is dream big and do my bit for educating people.

This system will only work with photos taken in the same manner as the testing and training datasets — photos from the same apartment in the winter, since that’s when Tesla tended to hang out by the radiator while Huxley didn’t. If you always take photos in the same way and you had a lot of them, who cares how your system does the trick of assigning labels as long as it gets you the right ones. If it uses the radiator to win at the labeling game, that’s fine… as long as you don’t expect it to work in any other context (it won’t). For that, you need better, broader training and testing datasets. And if you move the system to a different apartment, even if you think you understand how your system works, test it afresh anyway to ensure it still works.

The world represented by your data is the only one you’re going to succeed in.

Remember, the world represented by your data is the only one you’re going to succeed in. So you need to think carefully about the data you’re using.

Avoiding the Expert Mistake

Never assume you understand what an AI system is doing. Believing that your simple understanding of a complex thing is bulletproof is the height of arrogance. You’ll be punished for it.

Never assume you understand what an AI system is doing. Believing that your simple understanding of a complex thing is bulletproof is the height of arrogance. You’ll be punished for it.

It’s easy to miss real-world subtleties in your data. No matter how talented you are at it, if you work long enough in data science, you’ll almost surely make this wishful-thinking mistake at least once until you learn the hard way to severely curtail your conclusions — the best habit you can build is to avoid reading extra meaning into things until you’ve thoroughly checked the evidence. You’ll know you’ve arrived at the next level when your inner monologue about your results sounds less like a TED talk and more like the fine-print of the world’s most boring contract. This is a good thing. You can dazzle with your fast talk later if you insist, just don’t embarrass data literacy by believing your own hyperbolae.

The only thing you can learn from good testing results is that the system works well in environments, situations, datasets, and populations that are similar to the testing conditions. Any guesses you’d be tempted to make about its performance outside those conditions are fiction. Test carefully and don’t jump to conclusions!

Thanks for Reading! How about a YouTube Course?

If you had fun here and you’re looking for an applied AI course designed to be fun for beginners and experts alike, here’s one I made for your amusement:

Enjoy the entire course playlist here: bit.ly/machinefriend

Cassie Kozyrkov is a data scientist and leader at Google with a mission to democratize Decision Intelligence and safe, reliable AI.

Original. Reposted with permission.